Here we state the problem of face recognition using AI and edge devices. Then we list the main steps: detection, alignment, feature extraction, and identification. Finally, we give a short outline of the series and name the tools we’ll use: Python, MTCNN, Keras with TensorFlow, OpenCV, and FaceNet.

Introduction

Face recognition is one area of Artificial Intelligence (AI) where deep learning (DL) has had great success over the past decade. The best face recognition systems can recognize people in images and video as accurately as humans can, or even better. Face recognition covers two main tasks: verification and identification.

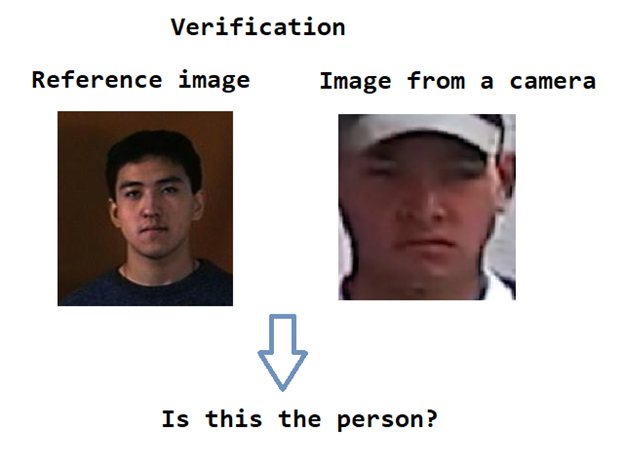

Verification is the task of comparing two face images and deciding whether they depict the same person. Modern face authentication systems on mobile phones perform this very task.

The face identification problem arises when we have a database of known faces and a sample image of a person. The task is to decide if this sample image belongs to one of the known people in the database or if this is an image of an unknown subject. Access control modules of video surveillance systems solve this problem using AI methods.

Most modern AI methods for face recognition are based on Convolutional Neural Networks (CNNs), a class of Deep Neural Networks (DNNs). The recognition algorithm includes several common steps: face detection, face alignment (or normalization), feature extraction, and feature matching.

Face Detection and Alignment

The face detection algorithm is responsible for finding the locations of faces in a picture or a video frame. A face location is commonly defined by a bounding box; it can also include additional information about the face, namely the landmarks (eye, nose, and mouth points). Once a face has been located, it must be cropped from the image and aligned to satisfy certain geometric requirements. The aligned face image is then used as input to a DNN, which extracts a feature vector called an embedding. You can compute the distance (or similarity) between this feature vector and any other one. By comparing the distances between different faces, you can match those faces for verification or identification purposes.
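The matching step can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used later in the series: the function names, the toy three-dimensional vectors, and the threshold value are all assumptions made for the example. Real embeddings have many more dimensions, and a usable threshold has to be tuned for the specific model.

```python
import math

def euclidean_distance(a, b):
    """Euclidean (L2) distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.dist(a, b)

def verify(emb_a, emb_b, threshold):
    """Verification: True if two embeddings are close enough to be one person."""
    return euclidean_distance(emb_a, emb_b) <= threshold

def identify(sample, database, threshold):
    """Identification: name of the closest known face, or None if unknown."""
    best_name, best_dist = None, float("inf")
    for name, known in database.items():
        dist = euclidean_distance(sample, known)
        if dist < best_dist:
            best_name, best_dist = name, dist
    # Reject the best match if it is still too far away.
    return best_name if best_dist <= threshold else None

# Toy data: hypothetical 3-dimensional embeddings and an arbitrary threshold.
database = {"alice": [0.0, 0.0, 1.0], "bob": [1.0, 0.0, 0.0]}
THRESHOLD = 0.5

known_sample = [0.1, 0.0, 0.9]    # close to "alice"
unknown_sample = [0.0, 1.0, 0.0]  # far from everyone in the database

print(identify(known_sample, database, THRESHOLD))
print(identify(unknown_sample, database, THRESHOLD))
print(verify(known_sample, database["alice"], THRESHOLD))
```

Verification compares one pair of vectors against the threshold, while identification searches the whole database for the nearest vector and still rejects it if the distance is too large. That rejection is what lets the system report an unknown subject.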

Face Recognition

A face recognition system usually consists of two parts: an edge device with a photo or video camera and a server with a database of faces. Typically, the low-powered edge device would be in charge of taking pictures of people while the high-performance server would be responsible for running the recognition algorithm. However, recent advances in AI have opened up new possibilities. We can now run some of the AI operations required for facial recognition, namely face detection and alignment, on low-powered edge devices. This lets us reduce the amount of data to be sent across the network for processing on a central server.
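To get a rough feel for the savings, here is a back-of-the-envelope estimate in Python. The frame resolution, the crop size, and the number of faces per frame are assumptions chosen only for illustration, and the figures are for uncompressed RGB data; a real system would most likely compress images before sending them, which changes the absolute numbers.

```python
def raw_rgb_bytes(width, height, channels=3):
    """Size in bytes of an uncompressed 8-bit image."""
    return width * height * channels

# Assumed values for illustration only.
FRAME_SIZE = (1280, 720)   # full camera frame
FACE_SIZE = (160, 160)     # one cropped, aligned face
FACES_PER_FRAME = 2

frame_bytes = raw_rgb_bytes(*FRAME_SIZE)
faces_bytes = FACES_PER_FRAME * raw_rgb_bytes(*FACE_SIZE)
reduction = frame_bytes / faces_bytes

print(f"full frame:    {frame_bytes} bytes")
print(f"cropped faces: {faces_bytes} bytes")
print(f"reduction:     {reduction:.1f}x")
```

Under these assumptions, sending two cropped faces instead of the whole frame cuts the raw payload by a factor of 18.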

This series of articles will demonstrate how to create a facial recognition system that includes the following parts:

- A face detection neural network running on a Raspberry Pi edge device. This application will detect human faces in camera frames, crop the faces out of the frames, and send the cropped faces to a central server. Note that we're using a Raspberry Pi as an example of a low-powered edge device. A Pi is great for prototyping, but keep in mind you'd likely want to use more robust hardware when creating a commercial face detector.

- A facial recognition neural network running on a server. It will be wrapped in a simple web API to enable it to receive images sent by the face detection application.
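The cropping step on the edge device can be sketched as follows. This is a hedged illustration, not the code from the series: `crop_region` is a hypothetical helper, and the box layout `(x, y, width, height)` and the optional margin are assumptions. The helper only computes a crop rectangle that stays inside the frame, since detectors can return boxes that extend past the image border.

```python
def crop_region(box, frame_width, frame_height, margin=0.0):
    """Turn a detected box into crop corners clamped to the frame.

    box is assumed to be (x, y, width, height) in pixels.
    margin is the fraction of the box size added on every side.
    Returns (x1, y1, x2, y2) with x2 and y2 exclusive.
    """
    x, y, w, h = box
    pad_x = int(w * margin)
    pad_y = int(h * margin)
    x1 = max(0, x - pad_x)
    y1 = max(0, y - pad_y)
    x2 = min(frame_width, x + w + pad_x)
    y2 = min(frame_height, y + h + pad_y)
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box lies outside the frame")
    return x1, y1, x2, y2

# A box well inside a 640x480 frame, enlarged by 10% on each side.
inside = crop_region((100, 50, 200, 200), 640, 480, margin=0.1)

# A box that sticks out of the left and bottom edges gets clamped.
clamped = crop_region((-10, 400, 100, 100), 640, 480)

print(inside)
print(clamped)
```

With an image stored as a NumPy array, as OpenCV does, the face could then be cut out with `frame[y1:y2, x1:x2]` before being sent to the server.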

This Series of Articles

Throughout this series, we’ll show how to implement all the parts of the face recognition system.

In the first (current) half, we’ll describe the existing AI face detection methods and develop a program to run a pretrained DNN model. Then we’ll consider face alignment and implement some alignment algorithms using face landmarks. We’ll then run the face detection DNN on a Raspberry Pi device, explore its performance, and consider possible ways to run it faster and to detect faces in real time. Finally, we’ll show you how to create a simple face database and fill it with faces extracted from images or videos.

The second (future) half of the series will be dedicated to implementing the face recognition server. We’ll show you how to run a pretrained DNN for face recognition and wrap it in a simple web API to receive face images from a Raspberry Pi device. We’ll consider how the server-side application can be deployed and scaled with Docker containers and Kubernetes. Finally, we’ll cover the basics of developing facial recognition neural networks from scratch.

Tools and Assumptions

This series will use the following software and libraries:

- The Python language and Jupyter Notebook for code development

- The OpenCV library for processing images and video

- The Keras framework with the TensorFlow backend to run DNN models

- The MTCNN library for face detection

- The FaceNet model for face recognition

We assume that you are familiar with DNNs, Python, Keras, and TensorFlow. You are welcome to download this project code ...

Next Steps

Let’s get started. In the next article, we’ll run a pretrained DNN model to detect faces in video. Stay tuned!