Here we'll explain the structure of a simple face database for face identification, develop Python utilities that add faces to the database, and point to sources of face images for populating it. Finally, we'll explain how to run the utility code that extracts faces from images and video.

Introduction

Face recognition is one area of Artificial Intelligence (AI) where deep learning (DL) has had great success over the past decade. The best face recognition systems can recognize people in images and video as accurately as humans, or even better. Face recognition involves two main tasks: person verification and person identification.

In the first (current) half of this article series, we will:

- Discuss the existing AI face detection methods and develop a program to run a pretrained DNN model

- Consider face alignment and implement some alignment algorithms using face landmarks

- Run the face detection DNN on a Raspberry Pi device, explore its performance, and consider possible ways to run it faster, as well as to detect faces in real time

- Create a simple face database and fill it with faces extracted from images or videos

We assume that you are familiar with DNN, Python, Keras, and TensorFlow. You are welcome to download this project code ...

In the previous article, we adapted our AI face detector to run in near real time on edge devices. In this article, we’ll discuss another component of our recognition system: a database of faces.

What’s In the Database

The first question is what exactly we must save to the database. Generally speaking, we must store the identifier of a person (say, their first and last name) and their facial features, which we can compare with the features of another face to evaluate the degree of similarity. In most real-world face recognition systems, these features are embeddings: numeric vectors that a DNN model extracts from a face image.
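The article doesn't fix a similarity measure at this point. As one illustration, the sketch below compares embeddings with cosine similarity, a common choice, and performs identification as a 1:N search with a rejection threshold, so that a face that matches nobody well enough is reported as unknown. The function names and the `0.7` threshold are hypothetical placeholders; a usable threshold depends on the embedding model and has to be tuned. Verification is the 1:1 special case: compare the query with one stored embedding and check the score against the threshold.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def identify(query: Sequence[float],
             db: Dict[str, Sequence[float]],
             threshold: float = 0.7) -> Tuple[Optional[str], float]:
    """1:N identification: best-matching identifier, or None for an unknown face.

    The default threshold is a hypothetical placeholder, not a tuned value.
    """
    best_name, best_score = None, -1.0
    for name, emb in db.items():
        score = cosine_similarity(query, emb)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score  # nobody is similar enough: unknown person
    return best_name, best_score
```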

To keep our system generic and straightforward, we’ll use a very simple database structure: a folder of face images in the PNG format, one image per person. Each file is named with the person’s identifier (name), and each image contains the aligned face extracted from a picture. Because we’ll extract the embeddings on the fly when we use the database for face identification, the same database works with different DNN models.
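Because each person is just an `<identifier>.png` file in one folder, indexing the database takes only a few lines. The sketch below uses hypothetical helper names `load_face_db` and `embed_face_db`: the first maps identifiers to image files, and the second computes embeddings on the fly through an `embed_fn` callable that stands in for whatever DNN model is in use. In practice `embed_fn` would read the image (for example, with `cv2.imread`) and run the model on it.

```python
from pathlib import Path
from typing import Callable, Dict, List


def load_face_db(db_path: str) -> Dict[str, Path]:
    """Map each identifier (file name without extension) to its PNG face image."""
    return {p.stem: p for p in sorted(Path(db_path).glob("*.png"))}


def embed_face_db(db_path: str,
                  embed_fn: Callable[[Path], List[float]]) -> Dict[str, List[float]]:
    """Compute embeddings on the fly; embed_fn (hypothetical) wraps the chosen DNN model."""
    return {name: embed_fn(path) for name, path in load_face_db(db_path).items()}
```

Switching to another model only means passing a different `embed_fn`; the folder itself never changes, which is the benefit of storing images rather than embeddings.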

Creating the Database

Let’s create our database. We have two options for getting face data: from a video and from an image. We already have the code for extracting the face data from a video. We can run our face detector as follows:

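```python
# MTCNN_Detector and VideoFD are the classes developed in the previous articles
d = MTCNN_Detector(50, 0.95)
vd = VideoFD(d)

v_file = r"C:\PI_FR\video\5_3.mp4"   # input video
save_path = r"C:\PI_FR\detect"       # folder for the extracted faces

# Positional arguments: video file, save folder, align=True, draw_keypoints=False
(f_count, fps) = vd.detect(v_file, save_path, True, False)
print("Face detections: " + str(f_count))
print("FPS: " + str(fps))
```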

Note that save_path is the folder where all the extracted faces are stored. The align parameter is True because the faces in the database must be aligned, and the draw_keypoints parameter is False because we don’t want facial landmarks drawn on the stored faces.

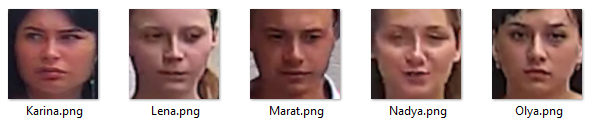

When the process finishes, we can choose a face sample for each person we’d like to add to the database. Here are the samples for five people, extracted from five test videos, that we saved to our database.

We intentionally left some of the people who appear in our video files out of the database. We’ll use them to test how the identification model handles unknown people in the videos.
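Choosing the samples is a manual step, but copying a chosen file into the database under the person’s name can be scripted. The helper below is a hypothetical sketch: it assumes the detector wrote the extracted faces to save_path as PNG files (the article doesn’t state the format), and it enforces the one-image-per-person rule by refusing to overwrite an existing entry unless asked to.

```python
import shutil
from pathlib import Path


def add_sample_to_db(sample_file: str, db_path: str, person_name: str,
                     overwrite: bool = False) -> Path:
    """Copy a hand-picked face sample into the database as <person_name>.png (hypothetical helper)."""
    src = Path(sample_file)
    # Assumption: the extracted faces were saved as PNG files.
    if src.suffix.lower() != ".png":
        raise ValueError(f"expected a PNG face sample, got {src.name}")
    dst = Path(db_path) / f"{person_name}.png"
    # One image per person: don't silently replace an existing entry.
    if dst.exists() and not overwrite:
        raise FileExistsError(f"{person_name} is already in the face DB")
    Path(db_path).mkdir(parents=True, exist_ok=True)
    shutil.copyfile(src, dst)
    return dst
```

For example, `add_sample_to_db(chosen_file, r"C:\PI_FR\db", "Person01")`, where `chosen_file` is the sample you picked and `Person01` is a placeholder identifier.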

Populating the Database

Let’s write the Python code that will extract faces from images and add them to our database:

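```python
import os

import cv2
from matplotlib import pyplot as plt

# Face_Align_Mouth is the alignment class from the earlier article;
# d is the MTCNN_Detector instance created above
fa = Face_Align_Mouth(160)

db_path = r"C:\PI_FR\db"
align = True
p_name = "Woman05"   # person identifier, used as the file name
f_file = r"C:\PI_FR\faces\CF0055_1100_00F.jpg"

# IMREAD_COLOR guarantees a 3-channel BGR image for the detector
fimg = cv2.imread(f_file, cv2.IMREAD_COLOR)
faces = [] if fimg is None else d.detect(fimg)
os.makedirs(db_path, exist_ok=True)
r_file = os.path.join(db_path, p_name + ".png")

if len(faces) == 0:
    print("Wrong image: no face found.")
else:
    face = faces[0]   # use the first detected face
    if align:
        (f_cropped, f_img) = fa.align(fimg, face)
    else:
        (f_cropped, f_img) = d.extract(fimg, face)

    if f_img is not None and f_img.size > 0:
        cv2.imwrite(r_file, f_img)
        # OpenCV uses BGR order; convert to RGB so Matplotlib shows true colors
        plt.imshow(cv2.cvtColor(f_img, cv2.COLOR_BGR2RGB))
        plt.show()
        print(p_name + " appended to Face DB.")
    else:
        print("Wrong image.")
```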

With this code, we can easily add face samples to the database from people’s photographs.

To cover all face recognition test scenarios, the database must also contain faces of people who don’t appear in the test video files. We could extract these faces from other videos, but here we’ll cut a corner and borrow faces from free face databases.

We collect faces from several such sources and place them in an image archive. We then run our face extraction code on this archive.

This adds ten face samples to our database, for a total of fifteen people.

We name the new people "Man01, …, Woman05" to distinguish them from the known people, that is, those who appear in the test videos. You are welcome to download the database samples.
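Running the extraction over a whole archive amounts to a loop around the single-image code from this section. The sketch below assumes each archive image has been labeled by hand with a `Man` or `Woman` prefix, and it generates the `Man01`, `Woman01`, … style names used above. `add_face` is a hypothetical wrapper around the single-image code that returns `True` when it saved a face. A number is consumed only when a face is actually saved, so images without a usable face don’t leave gaps in the numbering.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def add_archive_to_db(samples: Iterable[Tuple[str, str]],
                      add_face: Callable[[str, str], bool]) -> List[str]:
    """Add each (image_path, prefix) pair under a generated name such as Man01.

    add_face(image_path, person_name) is a hypothetical wrapper around the
    single-image extraction code; it returns True if a face was saved.
    """
    counters: Dict[str, int] = {}
    added: List[str] = []
    for image_path, prefix in samples:
        name = f"{prefix}{counters.get(prefix, 0) + 1:02d}"
        if add_face(image_path, name):
            counters[prefix] = counters.get(prefix, 0) + 1
            added.append(name)
        else:
            print(f"No usable face in {image_path}; skipped.")
    return added
```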

Next Steps

Now we have all the components of a face recognition application ready. In the second half of this series, we’ll select a face recognition DNN model and develop code for running this model against a video feed.