In the first article of this series, we discussed the advantages of analyzing real-time data and suggested ways to accomplish this without disrupting applications. In this article, we start our hands-on demonstration of Azure Synapse Link for Azure Cosmos DB. We will prepare the environment that we will later use to show Azure Synapse Link’s hybrid transactional and analytical processing (HTAP) capabilities.

We will create an Azure Cosmos DB account then feed it with sample retail sales data. After this, we’ll enable Azure Cosmos DB’s necessary features, create an Azure Synapse workspace, and access Azure Synapse Studio. Along the way, we will explore how to develop HTAP-enabled containers under Azure Cosmos DB to enable real-time data science and BI on Azure Synapse Analytics (formerly called SQL Data Warehouse).

Setting up an Azure Cosmos DB

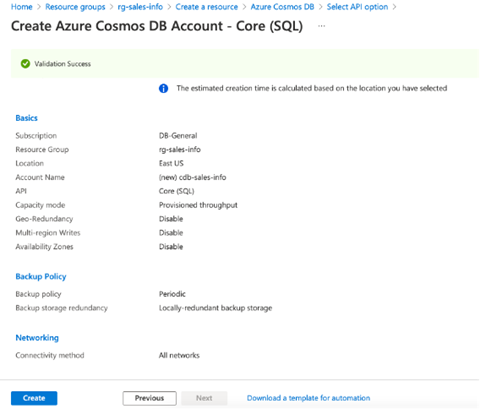

Using the Azure portal, we start by creating the resource group "rg-sales-info" within the East US Azure region (or another preferred region) to prepare the environment. Then, under this group, we create an instance of Azure Cosmos DB with Core (SQL) API. We set the account name to "cdb-sales-info," disable global distribution and configure locally redundant backup storage.

The figure below depicts the final configuration. Note that the Azure Synapse Link for Cosmos DB is available only for SQL API and Azure Cosmos DB API for MongoDB at the time of writing this article.

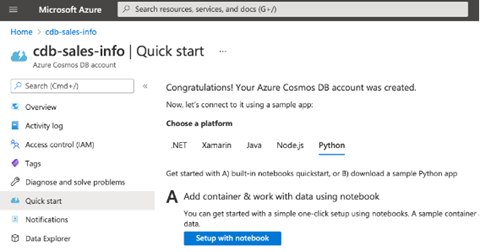

After creating our Azure Cosmos DB account, we go to the Quickstart pane. It contains a startup guide for the most popular programming tools: .NET, Xamarin, Java, Node.js, and Python. As the following figure shows, we select Python then click Setup with notebook:

Clicking this takes us to the Data Explorer pane, where we can enable notebooks for our Azure account. Then, create a Jupyter notebook to import, analyze, and visualize your data. In this case, it is sample retail data.

The retail data is a JSON collection. Each item comprises twelve properties, like CartID, Price, UserName, Country, Latitude/Longitude, and more.

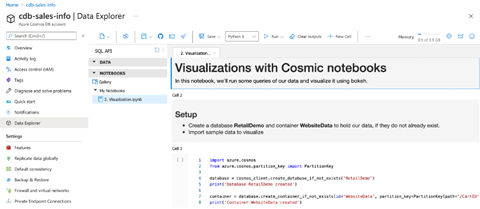

By default, the quick start includes the Python code from the referenced tutorial. So, we should see a screen like this:

The above code uses azure.cosmos Python packages to create the database RetailDemo, then the container WebsiteData.

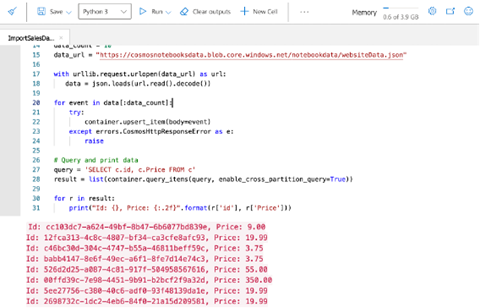

Let’s make a new notebook and import the data into the SalesData container under the SynapseDemo database. To make the notebook, we click New Notebook from the context menu of My Notebooks. Then, we rename the new notebook ImportSalesData.ipynb, and paste in the following code:

import azure.cosmos

import urllib.request, json

from azure.cosmos.partition_key import PartitionKey

db_name = 'SynapseDemo'

container_name = 'SalesData'

database = cosmos_client.create_database_if_not_exists(db_name)

container = database.create_container_if_not_exists(

id=container_name,

partition_key=PartitionKey(path='/CartID'))

data_count = 10

data_url = "https://cosmosnotebooksdata.blob.core.windows.net/notebookdata/websiteData.json"

with urllib.request.urlopen(data_url) as url:

data = json.loads(url.read().decode())

for event in data[:data_count]:

try:

container.upsert_item(body=event)

except errors.CosmosHttpResponseError as e:

raise

query = 'SELECT c.id, c.Price FROM c'

result = list(container.query_items(query, enable_cross_partition_query=True))

for r in result:

print("Id: {}, Price: {:.2f}".format(r['id'], r['Price']))

After creating the database and container, we fetch the data from the URL and insert the first ten items into the container. The last part of the code queries the Azure Cosmos DB container data and prints it to the console. Specifically, we select only two properties: id and price. Then, we print them to the console.

After we run the above notebook in Data Explorer, we should see the following result:

Creating a Synapse Workspace

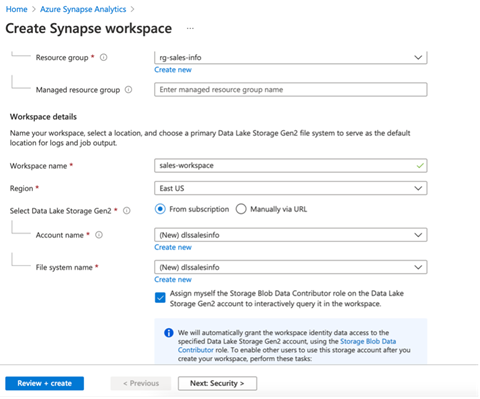

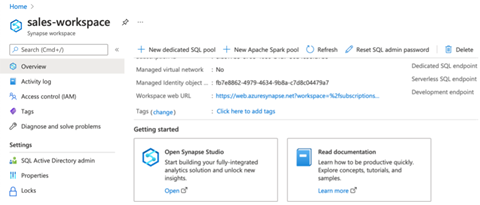

Now that our data is ready, we can create the Synapse workspace then link it to Azure Cosmos DB. To make the workspace, follow Microsoft's tutorial. In the Azure portal, we go to Azure Synapse Analytics and click the Create Synapse workspace button. It opens the typical Azure form, where we provide the following:

- Resource group: Here, we use rg-sales-info.

- Workspace name: We set it to sales-workspace.

- Region: We use East US.

- Data Lake Storage Gen 2: We choose the From subscription radio button and create a new account and file system. We set their names to "dlssalesinfo".

This image summarizes our configuration:

After creating the workspace, we are ready to link it to Azure Cosmos DB through Azure Synapse Link for Azure Cosmos DB. We have several options to enable Azure Synapse Link.

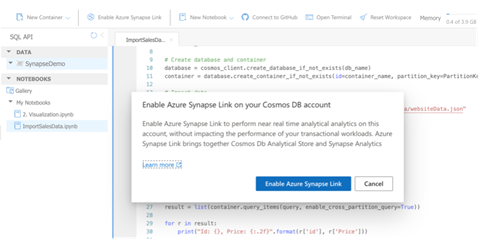

If we switch back to Data Explorer under our Azure Cosmos DB account, we click SynapseDemo database. Clicking this changes the action pane in the top row. There are now several buttons, including the Enable Azure Synapse Link button. Once we click it, we confirm our action in the dialog box that appears:

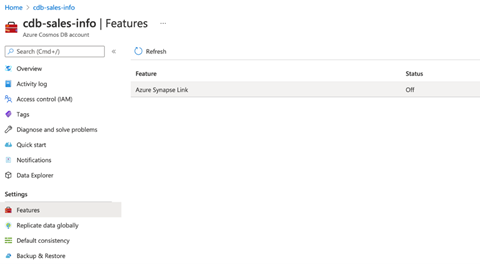

We can also use the Azure portal or Azure Cloud Shell (or a locally installed Azure CLI) to enable Azure Synapse Link. When using the portal, we go to our Azure Cosmos DB account (here, we use cdb-sales-info), choose Features on the left, and switch Azure Synapse Link from Off to On:

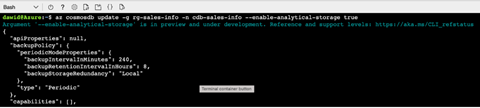

If we are using a command-line interface (CLI), we type:

az cosmosdb update -g rg-sales-info -n cdb-sales-info --enable-analytical-storage true

This command enables analytical storage and Synapse Link. The image below shows the sample use and command output:

In this tutorial, we invoke the above command in Azure Cloud Shell.

Configuring Synapse Studio

We have all the building blocks ready. We can go ahead and configure them in Synapse Studio. To open Synapse Studio, we go back to our Synapse workspace, then click Open Synapse Studio in the Getting Started section:

Alternatively, we can access Synapse Studio using a dedicated workspace web URL, which appears in Overview. This option is helpful in practice for faster access.

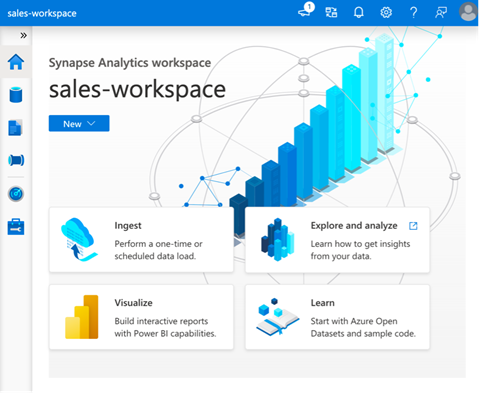

Note that it takes a while before Synapse Studio launches. Afterward, we should see a welcome screen that looks like this:

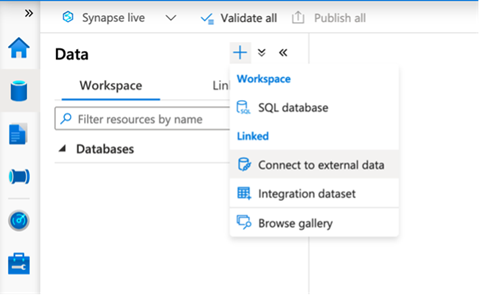

Now, we need to link our Azure Cosmos DB account (cdb-sales-info) with the Synapse workspace. To do so, in the Synapse workspace, we click the data tab (the second icon on the left side). Then, we click the + icon and choose Connect to external data:

Synapse displays another form in the right pane where we choose Azure Cosmos DB (SQL API):

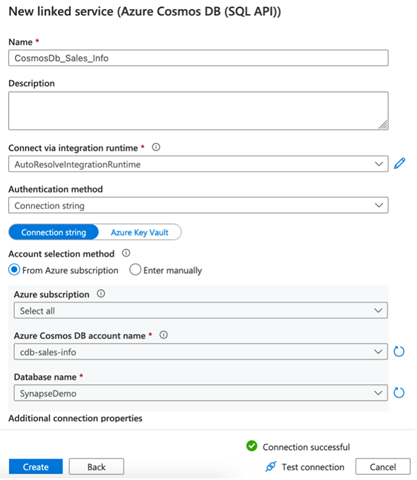

Then, we configure the link by providing three values:

- Name of the linked service. Here, we use CosmosDb_Sales_Info.

- Azure Cosmos DB service. Here, it is cdb-sales-info.

- Database name. We use SynapseDemo (this must match the Python script name from the notebook).

At this point, we can click the Test connection link to check that everything is fine. Our configuration screenshot is below:

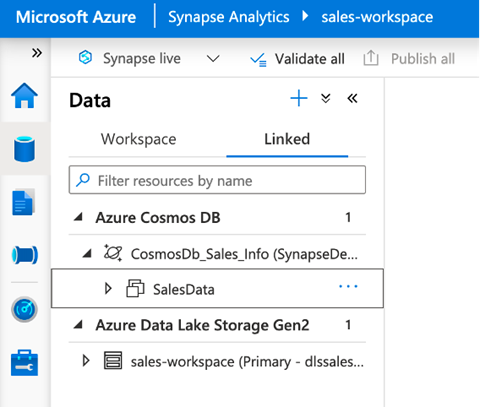

After creating this link, we eventually need to refresh Synapse Studio. To do so, we use the dedicated icon in the top right corner of Synapse Studio. Then, the linked database along with our SalesData container appears in the Linked tab:

The Synapse Link distinguishes OLTP-only from HTAP-enabled Azure Cosmos DB containers by the corresponding icons Microsoft documentation describes. In the above screenshot, our SalesData container is OLTP-only.

We will now create another HTAP-enabled container, SalesDataHTAP. To do so, let’s modify the ImportSalesData.ipynb notebook by changing the container_name to "SalesDataHTAP."

We also add the analytical_storage_ttl parameter to the create_container_if_not_exists method:

container_name = 'SalesDataHTAP'

database = cosmos_client.create_database_if_not_exists(db_name)

container = database.create_container_if_not_exists(id=container_name,

partition_key=PartitionKey(path='/CartID'),

analytical_storage_ttl=-1)

The analytical_storage_ttl parameter controls the analytical store. More specifically, it determines our data’s time to live in the analytical store (analytical TTL) and can take one of three values:

- 0 or Null: disables the analytical store

- -1: specifies that the data will be stored infinitely

- Another number: specifies the actual TTL in seconds

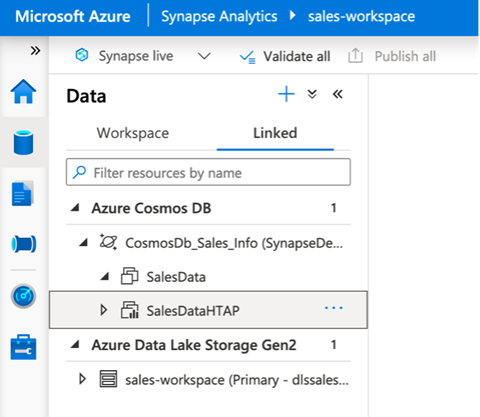

In the above code, we use -1, which sets the TTL to infinity. After we run the modified notebook and refresh Synapse Studio, we should see both containers (note the different icons):

As we will see later on, the containers differ by the operations we can perform on them.

Note that if you see errors when running the modified code, ensure that analytical storage through Azure CLI has been enabled.

Summary

In this article, we prepared the Azure Cosmos DB environment, which mimics the typical operational database of cloud-native solutions. We configured the database so we can link it with Azure Synapse to enable further data science without affecting the operational database’s performance.

We can now extend this capability by setting up operational reporting through Power BI, for example, or by preparing or aggregating data for machine learning. We will explore how to display our data in Power BI charts in the third article of this series.

Plus, you can learn more about how to drive business intelligence and machine learning by attending Microsoft’s Hands-on Training Series for Azure Synapse Analytics. Led by a member of the Azure Synapse engineering team, each episode offers hands-on Azure Synapse training.