The Mali Offline Compiler is a command line tool used to validate shaders and generate simulated performance reports.

While the previous, now-legacy version of the offline compiler was used to generate shippable shader binaries, this functionality has been deprecated in favor of a new workflow we’ll discuss in this article. Precompiled shaders can load faster at runtime. However, they don’t have full-program optimization, which often can happen only at link time on the device. The precompiled binaries also lack optimizations available after user-installed driver updates, which creates friction in a game’s release cycle.

To deal with this, Arm has invested in tooling a la LLVM-MCA to analyze shaders and guide optimizations at the source level.

In this article, we’ll introduce you to the usage and expected benefits of using Mali Offline Compiler as a key step in your game development workflow.

To follow along in the article, first install Arm Mobile Studio that ships with Malioc (the Mali Offline Compiler) and ensure <install_location>/mali_offline_compiler is in your path if working on Linux or macOS (this is done automatically if you are using the Windows installer).

Shader Optimization Background

Unlike code that runs on a CPU, shaders can often be difficult to benchmark in isolation.

For one thing, shader performance is fairly cache-sensitive, and memory access patterns can change depending on how a shader is used, what resources are bound to the shader, and when the shader is scheduled.

Furthermore, shaders can be compiled to different instruction set architectures (ISAs) and have different performance characteristics on different architectures as a result.

Instead of attempting to run an isolated benchmark for each platform and device driver, Malioc performs a form of static analysis to generate a report as shown below:

We’ll get into how to interpret this report soon, First, however, let’s discuss shader performance in general terms by quickly summarizing some properties that make one shader slower than another shader.

The first (and clearest) thing to compare when comparing the performance of two shaders is the operation count. How many memory loads and stores is one shader performing over the other? How many texture samples? How many operations?

We should expect that shaders that do more work – all else being equal – perform slower. However, remember that a quick visual scan of a shader can fail to fairly assess the work to be done. For example, it can be easy to gloss over a texture sample instruction, which ends up being more expensive because the texture being sampled is a cube texture. For more complicated shaders that invoke functions defined in shader headers, it may be an expensive accounting exercise to mentally tally how much work the shader is doing.

Another aspect to consider when comparing two shaders is the set of implementation differences, which result in differing register usages. Even when performing the same computation, implementation differences can cause one shader to allocate fewer registers or spill into the stack if the number of available registers is insufficient. In addition, implementation differences can create load store dependencies that lengthen the critical path or inhibit the compiler from hiding the latency of loads from buffer or texture memory.

The use of the various shader instructions have implications for the pipeline’s performance. For example, modifying z in a fragment (pixel) shader prevents the early z-test optimization from activating. If, however, a fragment shader doesn’t modify z, the driver may be able to perform the z-test on groups of fragments at a time, resulting in less dispatched work. Shaders that ultimately disable early z-testing are particularly detrimental to performance on mobile devices because they disable HSR (hidden surface removal) culling.

Shaders can also differ in terms of cache coherence, instruction selection (such as fused multiply-add instructions), local data share (LDS) usage, floating point precision, memory bank utilization, attribute interpolation pressure, and more.

Let’s look into how we can investigate shader performance ourselves using the Mali offline compiler on some key examples.

General Mali Offline Compiler Usage

Once you’ve installed Arm Mobile Studio (which ships with Malioc), using the utility is straightforward. First, create a file called test.frag with the following contents.

#version 450

layout (binding = 1) uniform sampler2D u_sampler;

layout (location = 0) in vec2 in_uv;

layout (location = 0) out vec4 out_color;

void main() {

out_color = texture(u_sampler, in_uv);

}

This fragment shader is a simple one for illustrative purposes. It receives a UV coordinate of a fragment, samples a texture with it, and writes out the color to the render target bound to slot 0.

To analyze this shader, we can invoke the following command:

malioc --vulkan test.frag

Because we are using Vulkan bindings in our shader, we pass the --vulkan flag. This can be omitted if your shaders use OpenGLES bindings instead.

This command generates a report for the default Mali GPU (Mali-G78, from the Valhall architecture family, in this case), but you can explicitly specify another Mali GPU using the -c flag. The report generated in this case is as follows:

Mali Offline Compiler v7.2.0 (Build 05290c)

Copyright 2007-2020 Arm Limited, all rights reserved

Configuration

=============

Hardware: Mali-G78 r1p1

Architecture: Valhall

Driver: r25p0-00rel0

Shader type: Vulkan Fragment

Main shader

===========

Work registers: 5

Uniform registers: 2

Stack spilling: false

16-bit arithmetic: 0%

FMA CVT SFU LS V T Bound

Total instruction cycles: 0.00 0.02 0.00 0.00 0.25 0.25 V, T

Shortest path cycles: 0.00 0.02 0.00 0.00 0.25 0.25 V, T

Longest path cycles: 0.00 0.02 0.00 0.00 0.25 0.25 V, T

FMA = Arith FMA, CVT = Arith CVT, SFU = Arith SFU,

LS = Load/Store, V = Varying, T = Texture

Shader properties

=================

Has uniform computation: false

Has side-effects: false

Modifies coverage: false

Uses late ZS test: false

Uses late ZS update: false

Reads color buffer: false

Note: This tool shows only the shader-visible property state.

API configuration may also impact the value of some properties.

Note: This tool shows only the shader-visible property state.

API configuration may also impact the value of some properties.

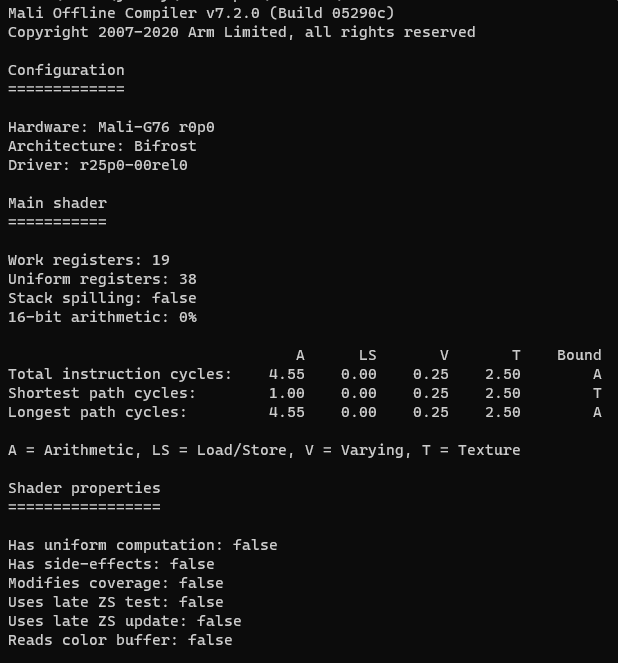

Depending on the selected architecture, some of the columns may not appear in the report. For example, the Valhall ISA exposes three distinct ALU columns corresponding to the FMA (fused multiply-add), CVT (convert), and SFU (special function unit) pipelines. The cycles associated with each set of units are analyzed and displayed separately, as in the report above. In contrast, running the same command passing -c Mali-G76 to compile for the Mali-G76 GPU, from the Bifrost architecture family, will generate the following report (abbreviated to highlight differences):

A LS V T Bound

Total instruction cycles: 0.12 0.00 0.25 0.50 T

Shortest path cycles: 0.12 0.00 0.25 0.50 T

Longest path cycles: 0.12 0.00 0.25 0.50 T

Unlike the Valhall report, all arithmetic instructions are grouped into a single column corresponding to a unified arithmetic pipeline.

For the remainder of this article, we’ll stay focused on the Valhall architecture, but the concepts will apply to other existing or future architectures as well. Refer to the Arm Mali Offline Compiler User Guide for specific information about the pipelines available to each architecture.

It’s important to remember that what is being simulated by the offline compiler is not the GPU scheduling front end, which tracks warp state and retires instructions to the back end. This means that the effects of thread occupancy and secondary effects of GPU work scheduling must be measured with direct benchmarks on a real device.

Next, let’s analyze the same shader sampling a cubemap (as if we were sampling a skybox) instead of a 2D texture. To do this, change the contents of the shader as:

#version 450

layout(binding = 1) uniform samplerCube u_sampler;

layout(location = 0) in vec3 in_uv;

layout(location = 0) out vec4 out_color;

void main() {

out_color = texture(u_sampler, in_uv);

}

If we re-run the report, we should see the following results (abbreviated for brevity to show just the register usage and performance table):

Work registers: 6

Uniform registers: 4

Stack spilling: false

16-bit arithmetic: 0%

FMA CVT SFU LS V T Bound

Total instruction cycles: 0.05 0.02 0.38 0.00 0.38 0.25 SFU, V

Shortest path cycles: 0.05 0.02 0.38 0.00 0.38 0.25 SFU, V

Longest path cycles: 0.05 0.02 0.38 0.00 0.38 0.25 SFU, V

Note that, compared to what we saw before, the total number of the needed registers and cycles has increased. That’s because additional implicit work is required to filter a cubemap.

"What if I wanted to point-sample the cube map?" you might ask. The offline compiler has no way of knowing what sampler you intend to bind at runtime. Thus, it has to make an assumption about the sampler state, and it assumes that all samplers perform bilinear filtering. Similar assumptions are made about the texture format, with the compiler assuming that the texture unit can process at full rate. We can see in the Mali Data Sheet that this isn’t always true; Mali-G77 formats that are wider than 32-bits per texel will run at half rate.

To interpret the numbers in the columns of the performance table, the rule of thumb is that lower is better.

Remember that memory latencies cannot be simulated accurately because memory access is dependent on how resources are bound and utilized at runtime.

Furthermore, when attempting to optimize a shader, the column with the highest cycle count reported for either the longest or shortest path is a good candidate for targeted optimization. The pipelines can progress multiple shaders in parallel, so the pipeline with the highest load tends to be the critical path.

So far, all our examples have identical rows between Total instruction cycles, Shortest path cycles, and Longest path cycles. In the next section, we’ll look at examples that demonstrate how the analysis reflects the effect of branch instructions in the shader.

Analyzing Branching Shaders

Suppose you had a compute shader that shaded pixels by the lights present in a given tile (as in the Forward+ tiled deferred lighting algorithm). Each invocation must perform the following tasks:

- Retrieve the scene color at the invocation’s fragment position

- For each light associated with the invocation’s tile:

- Cull the light against the fragment position and depth

- Accumulate the light’s radiance contribution

- Write out the lit result

Because the full contents of such a shader would be a bit heavy for this article, we’ll instead look at a representative shader which has similar, but less complicated, requirements.

#version 450

#extension GL_EXT_nonuniform_qualifier : require

layout (local_size_x = 16, local_size_y = 16) in;

layout (binding = 0, rgba8) uniform image2D scene;

layout (binding = 1) buffer Tile

{

uint count;

uint indices[];

} tiles[];

layout (binding = 2) buffer Lights

{

uint light_type;

vec4 color;

} lights[];

void main() {

ivec2 uv = ivec2(gl_GlobalInvocationID.x, gl_GlobalInvocationID.y);

vec4 color = imageLoad(scene, uv);

uint tile_id = gl_WorkGroupID.x + gl_WorkGroupID.y * gl_NumWorkGroups.x;

uint count = tiles[tile_id].count;

for (uint i = 0; i != count; ++i) {

uint light_index = tiles[tile_id].indices[i];

uint light_type = lights[light_index].light_type;

switch (light_type) {

case 0:

color += lights[light_index].color;

break;

case 1:

color -= lights[light_index].color;

break;

default:

color *= lights[light_index].color;

break;

}

}

imageStore(scene, uv, color);

}

What this shader performs is nonsensical – it simply illustrates a common pattern: per-invocation loop, branch within a loop, and diverging access pattern. Running Malioc on this shader produces the following report:

Mali Offline Compiler v7.2.0 (Build 05290c)

Copyright 2007-2020 Arm Limited, all rights reserved

Configuration

=============

Hardware: Mali-G78 r1p1

Architecture: Valhall

Driver: r25p0-00rel0

Shader type: Vulkan Compute

Main shader

===========

Work registers: 22

Uniform registers: 4

Stack spilling: false

16-bit arithmetic: 0%

FMA CVT SFU LS T Bound

Total instruction cycles: 0.19 0.30 0.19 11.00 0.00 LS

Shortest path cycles: 0.00 0.06 0.12 5.00 0.00 LS

Longest path cycles: N/A N/A N/A N/A N/A N/A

FMA = Arith FMA, CVT = Arith CVT, SFU = Arith SFU, LS = Load/Store, T = Texture

Shader properties

=================

Has uniform computation: true

Has side-effects: true

There are a few things of note here.

The number of "Longest path cycles" for each pipeline is marked as N/A. This is because the loop iteration limit is not statically known. If you wanted to determine the estimated cycle cost for a representative loop count (say, for an expected number of lights per tile), you could hardcode this value temporarily and rerun the analysis.

The bulk of the work appears to occur in the load-store pipeline. This is expected in this case because our shader is relatively light on computation compared to the amount of memory accessed. As such, we can expect this shader to be memory-bound as opposed to ALU-bound.

Experimenting a bit more, you should see that changing the workgroup size does not affect the simulation results (the dispatch of the compute shader itself is not modeled). Furthermore, despite relatively low arithmetic pipeline usage, decreasing the precision globally does have a beneficial effect on the estimated FMA cycle counts, as you might expect.

You may want to experiment with techniques like scalarization, usage of LDS, and so on, to see their effect on register usage.

Optimized Vertex Shaders

Mobile GPUs optimize heavily for memory bandwidth efficiency, as accessing DRAM is one of the most battery consuming operations the GPU can perform.

To optimize vertex fetch bandwidth, recent Mali GPUs compile each vertex shader into two separate binaries. One binary will emit only the position, for the purpose of culling and binning primitives. The other binary will produce all other vertex shader outputs that need to be interpolated and forwarded to the fragment shading.

The advantage of this approach is that the second binary will only be executed for primitives that survive culling, so the associated shader execution and data fetch will be skipped for primitives that are off-screen or back-face culled.

Fortunately, Malioc will conveniently generate analyses for both variants of the vertex shaders. For example, consider the following simple vertex shader:

#version 450

layout (binding = 0) uniform View {

mat4 camera;

mat4 projection;

};

layout (push_constant) uniform Model {

vec2 uv_scale;

vec3 tint;

mat4 model;

};

layout (location = 0) in vec3 v_position;

layout (location = 1) in vec2 v_uv;

layout (location = 2) in vec3 v_color;

layout (location = 0) out vec2 f_uv;

layout (location = 1) out vec3 f_color;

void main() {

f_uv = uv_scale * v_uv;

f_color = tint * v_color;

gl_Position = projection * camera * model * vec4(v_position, 1.0);

}

Analyzing the above shader should produce a report like the following:

Mali Offline Compiler v7.2.0 (Build 05290c)

Copyright 2007-2020 Arm Limited, all rights reserved

Configuration

=============

Hardware: Mali-G78 r1p1

Architecture: Valhall

Driver: r25p0-00rel0

Shader type: Vulkan Vertex

Main shader

===========

Position variant

----------------

Work registers: 19

Uniform registers: 30

Stack spilling: false

16-bit arithmetic: 0%

FMA CVT SFU LS T Bound

Total instruction cycles: 0.25 0.00 0.00 3.00 0.00 LS

Shortest path cycles: 0.25 0.00 0.00 3.00 0.00 LS

Longest path cycles: 0.25 0.00 0.00 3.00 0.00 LS

FMA = Arith FMA, CVT = Arith CVT, SFU = Arith SFU, LS = Load/Store, T = Texture

Varying variant

---------------

Work registers: 12

Uniform registers: 30

Stack spilling: false

16-bit arithmetic: 0%

FMA CVT SFU LS T Bound

Total instruction cycles: 0.08 0.00 0.00 5.00 0.00 LS

Shortest path cycles: 0.08 0.00 0.00 5.00 0.00 LS

Longest path cycles: 0.08 0.00 0.00 5.00 0.00 LS

FMA = Arith FMA, CVT = Arith CVT, SFU = Arith SFU, LS = Load/Store, T = Texture

Shader properties

=================

Has uniform computation: true

Has side-effects: false

Here, we can see the results for not one, but two variants corresponding to the position and varying variants. As a sanity check, the position variant is more expensive (as expected) because of the extra matrix multiplications needed to transform the vertex to clip space.

Early-ZS and Hidden Surface Removal

Modern GPUs support early depth and stencil testing as a means to remove redundant processing (corresponding to occluded fragments) prior to fragment shading. In addition, most mobile GPUs include some form of hidden surface removal, which can remove occluded fragments even if they are rendered back-to-front, which is the incorrect order for normal early-zs testing to trigger.

Some fragment shader functionality can disable these optimizations. For example, a shader with a memory-visible side-effect, such as writing to an atomic, cannot be killed by hidden surface removal — doing so would change the behavior of the program.

The Shader properties section in the report highlights the shader functionality in use, which can help identify cases where early-zs and HSR is not going to be possible.

Has side-effects: false

Modifies coverage: false

Uses late ZS test: false

Uses late ZS update: false

Reads color buffer: false

Conclusion

The Mali offline compiler is a great addition to any shader or material pipeline. Although it cannot account for runtime state (texture formats, sampler state, lane divergence, whole-program optimization, and so on), what it can do is provide data to motivate targeted optimizations.

For example, Malioc analysis would underscore if a shader is likely to be ALU- or memory-bound. Also, the analysis would indicate whether an analyzed shader should be optimized to reduce register pressure.

The offline compiler can produce its output in the JSON form, making it suitable for integration in an automated pipeline. For example, a continuous integration (CI) service could be trained to flag materials that previously permitted HSR, but no longer do. Alternatively, analysis snapshots could be saved to showcase changes in the estimated cycle counts for key shaders over the development timeline of a title. Perhaps CI could flag a submission if a material that used to avoid stack-spilling suddenly started to spill into the stack.

The possibilities for maintaining a robust pipeline that helps keep performance in check are compelling.

For further reading, consult the Arm Mali Offline Compiler User Guide. This guide contains key information about the architectures being simulated, usage notes, and also considerations regarding what to optimize and how.