In this article, we analyze the use of await and Task.WhenAll in a foreach loop in C#: when each one is possible or preferable, and how, in some cases, to avoid the concurrency and dependency problems that appear when the tasks inside the foreach run in parallel.

Introduction

A foreach loop in C#, and for that matter a for loop, can gain a great deal of performance by running its asynchronous tasks in parallel with Task.WhenAll. But this is not always possible.

Here we compare two different approaches to combining asynchronous programming with the foreach loop, and we look at the potential problems of using each one inside the loop:

- Using await inside the foreach loop
- Using a true parallel loop with Task.WhenAll

At the end, we also offer a possible solution to mitigate the concurrency and order-of-execution problems.

Background

Using Await Inside the ForEach Loop

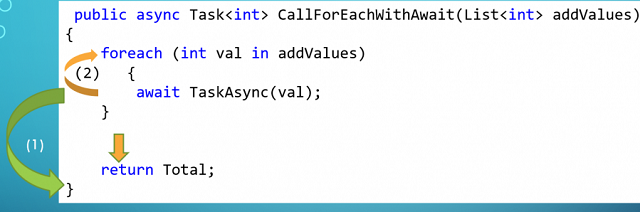

The first approach places the await inside a foreach statement (see image 1). In this case, when the await is reached, the thread:

- is released to do other work while the current task runs; the method resumes when that task completes, and the loop then starts the next one, so the tasks execute one by one
- until the foreach finishes, after which execution continues to the end of the method.

This approach releases the thread while the loop waits, so other work can run, but the method itself executes the tasks inside the loop sequentially. This gives you the following benefits:

- It avoids concurrency problems between the operations.

- It guarantees that the operations are executed in the same order in which they were entered.

But, because the operations inside the loop are executed sequentially, the total execution time of the method is the sum of the durations of all the tasks, so its performance is poor.
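The following is a minimal sketch of this first approach, not the code from the attached project. `SequentialForEachSketch` and `ProcessItemAsync` are hypothetical names, and the real asynchronous work is simulated with `Task.Delay`. Each iteration awaits its task before starting the next one, so the results keep the input order and the total time is roughly the sum of the individual delays.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class SequentialForEachSketch
{
    // Hypothetical stand-in for a real asynchronous operation (database call, HTTP request...).
    private static async Task<int> ProcessItemAsync(int item)
    {
        await Task.Delay(100); // simulated latency
        return item * 2;
    }

    // Awaits each task inside the loop: the thread is released at every await,
    // but the next item is not started until the previous one has finished.
    public static async Task<List<int>> ProcessSequentiallyAsync(IEnumerable<int> items)
    {
        var results = new List<int>();
        foreach (var item in items)
        {
            int result = await ProcessItemAsync(item);
            results.Add(result); // results are added in input order
        }
        return results;
    }
}
```

For example, `await SequentialForEachSketch.ProcessSequentiallyAsync(new[] { 1, 2, 3 })` should return 2, 4 and 6 after roughly 300 ms.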

Using a True Parallel Loop with Task.WhenAll

The second option gives better performance: we create a list of tasks inside the loop and call Task.WhenAll after the loop has finished. In this case, the tasks launched in the loop run in parallel and the execution time is drastically reduced.

In the image, the green arrow illustrates how the method launches all the tasks inside the loop without waiting for their results; the thread then releases control at the await instruction and waits until all tasks have finished. Then the last statement of the method is executed and the result is returned. As you can see, this option gives a real performance improvement, because the tasks inside the loop truly run in parallel: the execution time is reduced to the duration of the longest task instead of the sum of the durations of all the tasks. But this method has two major limitations:

- The tasks in the loop cannot have a precedence relationship between them.

- The data used by each task must not be subject to concurrency problems.
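A comparable sketch of the second approach, again with a hypothetical `ProcessItemAsync` simulated by `Task.Delay`: the loop only starts the tasks and stores them in a list, and a single `await Task.WhenAll(...)` after the loop waits for all of them.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ParallelForEachSketch
{
    // Hypothetical stand-in for a real asynchronous operation.
    private static async Task<int> ProcessItemAsync(int item)
    {
        await Task.Delay(100); // simulated latency
        return item * 2;
    }

    public static async Task<int[]> ProcessInParallelAsync(IEnumerable<int> items)
    {
        var tasks = new List<Task<int>>();
        foreach (var item in items)
        {
            // Start the task but do not await it here: the loop launches every item.
            tasks.Add(ProcessItemAsync(item));
        }

        // Release the thread once and resume when every task has finished.
        // WhenAll returns the results in the order of the task list, not in completion order.
        return await Task.WhenAll(tasks);
    }
}
```

With the same three items, this version should take roughly 100 ms instead of 300 ms. Note that the returned array follows the order of the task list; what is not guaranteed is the order in which the tasks run and apply their side effects, which is exactly where the two limitations above come from.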

Let's explain this in detail:

First, a precedence relationship between tasks means that the operations need a strict order of execution. Remember that when tasks run in parallel, you cannot know whether a task begins or finishes before or after another one; this is unpredictable, so if the tasks need an order of execution, you cannot guarantee it. In this case, there is no other option than to sacrifice performance and use await inside the loop.

You can use the attached code to test all these conditions.

Code Examples

Order of Operation Problem

A typical task that requires a specific order of execution is shown here:

```csharp
// Status is a collection of strings owned by the enclosing class.
// Each level may only be removed after all the lower levels are gone.
private async Task DependenTaskAsync(string status)
{
    await Task.Delay(1000); // simulated work

    if (status == "low")
    {
        this.Status.Remove("low");
        return;
    }

    if (status == "medium")
    {
        if (this.Status.Contains("low"))
        {
            throw new InvalidOperationException("Low must not exist when you try to remove medium");
        }

        this.Status.Remove("medium");
        return;
    }

    if (status == "high")
    {
        if (this.Status.Contains("low"))
        {
            throw new InvalidOperationException("Low must not exist when you try to remove high");
        }

        if (this.Status.Contains("medium"))
        {
            throw new InvalidOperationException("Medium must not exist when you try to remove high");
        }

        this.Status.Remove("high");
    }
}
```

In this task, you need to remove the values from the list in order, from low to high; if you ignore that order, an exception is thrown. You can run this code in the attached project. This is a textbook example, but many real-life tasks have dependencies between them and require a certain order of operations.
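To see the order problem without the attached project, here is a self-contained sketch. `OrderDependencySketch`, `RemoveLevelAsync` and the per-level delays are hypothetical; the different delays only simulate tasks that take different amounts of time. Removing the levels with await inside the foreach succeeds, while launching them together and awaiting `Task.WhenAll` lets "high" finish first and find "low" still in the list.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public class OrderDependencySketch
{
    private static readonly string[] Levels = { "low", "medium", "high" };

    // Hypothetical durations: the lower the level, the longer its task takes.
    private static readonly Dictionary<string, int> DelayMs = new Dictionary<string, int>
    {
        ["low"] = 500, ["medium"] = 300, ["high"] = 100
    };

    public List<string> Status { get; } = new List<string>(Levels);

    // Condensed form of DependenTaskAsync: a level may only be removed
    // once every lower level is gone.
    private async Task RemoveLevelAsync(string status)
    {
        await Task.Delay(DelayMs[status]);
        int index = Array.IndexOf(Levels, status);
        for (int i = 0; i < index; i++)
        {
            if (Status.Contains(Levels[i]))
            {
                throw new InvalidOperationException(
                    $"{Levels[i]} must not exist when you try to remove {status}");
            }
        }
        Status.Remove(status);
    }

    // Safe: each removal finishes before the next one starts.
    public async Task RemoveSequentiallyAsync()
    {
        foreach (var level in Levels)
        {
            await RemoveLevelAsync(level);
        }
    }

    // Unsafe: all removals run at once, so "high" completes first while "low" still exists.
    public Task RemoveInParallelAsync()
    {
        var tasks = new List<Task>();
        foreach (var level in Levels)
        {
            tasks.Add(RemoveLevelAsync(level));
        }
        return Task.WhenAll(tasks);
    }
}
```

After `RemoveSequentiallyAsync`, `Status` should be empty. Awaiting `RemoveInParallelAsync` should rethrow one of the `InvalidOperationException`s raised by the "medium" and "high" tasks.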

Concurrency Problem

If your operation must be executed in isolation, you face a problem that can be hard to understand, but a simple example helps. Consider the following code:

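```csharp
private async Task ConcurrencyAffectedTaskAsync(int val)
{
    await Task.Delay(1000); // simulated work, runs in parallel

    // Unsynchronized read-modify-write on a shared member:
    // another task can change Total between the read and the write.
    Total = Total + val;
}
```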

This simple code fails if you use it inside a foreach with Task.WhenAll (see the example code attached to this article). That is because Total is a member shared by the whole class, and you cannot prevent another thread from changing Total between the moment a task reads it and the moment it writes the new value, so the result is unpredictable. If you run the attached example several times, you can see how many different results you get.
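The following sketch reproduces the race; `RaceConditionSketch` and `AddUnsafeAsync` are hypothetical names, and the 100 ms delay is arbitrary. It assumes a console application: there is no SynchronizationContext, so the continuations after `Task.Delay` run on thread pool threads at the same time. In a UI application, the continuations would run one at a time on the UI thread, so this particular race would not show up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public class RaceConditionSketch
{
    public int Total { get; set; }

    // Same shape as ConcurrencyAffectedTaskAsync: a read-modify-write with no synchronization.
    private async Task AddUnsafeAsync(int val)
    {
        await Task.Delay(100);
        Total = Total + val; // two threads can read the same old Total, and one update is lost
    }

    public async Task<int> RunInParallelAsync(int count)
    {
        Total = 0;
        var tasks = new List<Task>();
        foreach (int val in Enumerable.Range(1, count))
        {
            tasks.Add(AddUnsafeAsync(val));
        }
        await Task.WhenAll(tasks);
        return Total;
    }
}
```

Calling `RunInParallelAsync(1000)` should return 500500 (the sum of 1 to 1000), but it may return a smaller value, different on each run, because every lost update drops some values from the sum.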

Mitigating the Concurrency and Order of Execution Problems

In some cases, you can mitigate these problems by using a C# synchronization primitive that allows only one thread at a time to run a given segment of code. This can be really useful, but it also has limitations.

If you don't know where the concurrency problem is, or if the segment of code you need to lock consumes most of the total execution time, you don't gain much performance and you end up with more complex code. In that case, it is preferable to use a plain await inside the loop.
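As an illustration of the case where the locked segment dominates the execution time, the sketch below (hypothetical names, console application assumed) puts the slow `Task.Delay` inside the region protected by a `SemaphoreSlim`. The result is correct, but the tasks can only progress one after another, so `Task.WhenAll` gives no gain over a plain await in the loop.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public class OverLockingSketch
{
    private readonly SemaphoreSlim semaphore = new SemaphoreSlim(1, 1);

    public int Total { get; private set; }

    // Anti-pattern: the slow part (the delay) is inside the locked region,
    // so only one task at a time can make progress.
    private async Task AddWithWideLockAsync(int val)
    {
        await semaphore.WaitAsync();
        try
        {
            await Task.Delay(100); // simulated slow work, now serialized
            Total = Total + val;
        }
        finally
        {
            semaphore.Release();
        }
    }

    // Launches every task, then measures how long Task.WhenAll takes to complete.
    public async Task<TimeSpan> RunInParallelAsync(int count)
    {
        Total = 0;
        var stopwatch = Stopwatch.StartNew();
        var tasks = new List<Task>();
        foreach (int val in Enumerable.Range(1, count))
        {
            tasks.Add(AddWithWideLockAsync(val));
        }
        await Task.WhenAll(tasks);
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }
}
```

With five items, the elapsed time should be at least about 500 ms, the same as a sequential loop, even though the tasks were launched together.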

Our concurrency example is a good candidate for a semaphore: the critical section is a single statement, and most of the delay happens outside it. In this case, we can create a SemaphoreSlim that restricts that segment of code to one thread at a time, and get a large performance gain without the concurrency problem.

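You can declare the semaphore as a member of the class:

```csharp
using System.Threading;

public class ForEachConcurrentDependency
{
    public int Total { get; set; }

    // Allows at most one thread at a time in the protected section.
    private readonly SemaphoreSlim semaphore;

    public ForEachConcurrentDependency()
    {
        this.Total = 0;
        semaphore = new SemaphoreSlim(1, 1); // initial count 1, maximum count 1
    }
}
```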

and then use it. Observe that the part of the code restricted to one thread accounts for only a small part of the processing time:

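```csharp
private async Task ConcurrencyNoAffectedTaskAsync(int val)
{
    await Task.Delay(1000); // the slow part still runs in parallel

    await semaphore.WaitAsync(); // wait asynchronously for exclusive access
    try
    {
        Total = Total + val; // only this short update is serialized
    }
    finally
    {
        semaphore.Release(); // always release, even if the update throws
    }
}
```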
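A minimal driver for the semaphore version, assuming a console application and using hypothetical names (`ForEachConcurrentDependencySketch`, `AddSafeAsync`, `RunInParallelAsync`) rather than those of the attached project. It launches all the tasks in a foreach, awaits `Task.WhenAll`, and serializes only the update of `Total`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public class ForEachConcurrentDependencySketch
{
    private readonly SemaphoreSlim semaphore = new SemaphoreSlim(1, 1);

    public int Total { get; private set; }

    private async Task AddSafeAsync(int val)
    {
        await Task.Delay(100);       // the slow part runs fully in parallel
        await semaphore.WaitAsync(); // only the short update is serialized
        try
        {
            Total = Total + val;
        }
        finally
        {
            semaphore.Release();
        }
    }

    public async Task<int> RunInParallelAsync(int count)
    {
        Total = 0;
        var tasks = new List<Task>();
        foreach (int val in Enumerable.Range(1, count))
        {
            tasks.Add(AddSafeAsync(val));
        }
        await Task.WhenAll(tasks);
        return Total;
    }
}
```

Unlike the unsynchronized version, `RunInParallelAsync(1000)` should always return 500500, and because the delays still overlap, it should finish far sooner than the roughly 100 seconds a sequential loop would need.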

Points of Interest

- Use await inside the foreach when the tasks to be executed share data prone to concurrency problems or require a strict order of execution.
- If the SemaphoreSlim solution does not give you a significant performance gain because the locked section takes a long time, use await inside the foreach.
- Use Task.WhenAll outside the foreach only when the tasks are independent and don't require any specific order of execution. This lets you use the full power of parallel programming and get better performance.
- You can see an explanation of the code in this article in the video: C# Pill 11 using Async Method...

History

- 1st November, 2021: First version