Development of a reliable UDP protocol based around a queue abstraction. This article walks through the steps taken to develop the protocol, which is written in C++. It was a fun activity for a couple of rainy days :)

Introduction

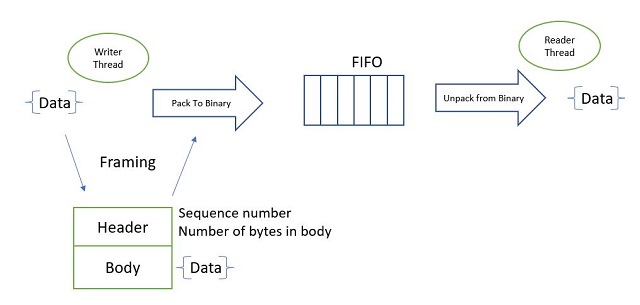

I recently had the task of designing a reliable UDP-based protocol. It was developed in C++ and evolved out of the idea of a simple queue. It was designed in steps, with each step reducing the reliability of the underlying communication mechanism in some way until UDP-level reliability (lost, duplicate and reordered packets) was reached. Each step was unit tested and integration/stress tested. During most of the development process, I used FIFOs to simulate the underlying network. I like to get a feel for how the data is behaving, and having that data visible in user space is a nice way of doing that.

I've described each step I took and included a link to the code for that step. Please feel free to grab the code at any step, play with it and develop it in other directions. I've listed a few potential improvements at the end of the article.

The Process

Step 1

Pack/unpack data sent between two threads via a thread safe queue - baseline behavior.
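As a rough illustration of this baseline, here is a minimal sketch of packing a value into bytes and passing it through a blocking, thread-safe queue. It is not the article's actual code: `Sample`, `Pack`, `Unpack` and `ByteQueue` are hypothetical names, and the packing assumes both ends share the same byte order and struct layout.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <cstring>
#include <mutex>
#include <queue>
#include <type_traits>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical payload type, loosely modelled on the SignalData used later on.
struct Sample
{
    double mValue;
    double mTimeStamp_sec;
};

// Copy a trivially copyable value into a byte buffer (same byte order assumed).
template <typename T>
Bytes Pack(const T& value)
{
    static_assert(std::is_trivially_copyable<T>::value, "Pack needs a trivially copyable type");
    Bytes bytes(sizeof(T));
    std::memcpy(bytes.data(), &value, sizeof(T));
    return bytes;
}

// Rebuild the value from a buffer produced by Pack.
template <typename T>
T Unpack(const Bytes& bytes)
{
    static_assert(std::is_trivially_copyable<T>::value, "Unpack needs a trivially copyable type");
    assert(bytes.size() == sizeof(T));
    T value;
    std::memcpy(&value, bytes.data(), sizeof(T));
    return value;
}

// Minimal blocking queue: Push never blocks, Pop waits until data is available.
class ByteQueue
{
public:
    void Push(Bytes bytes)
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mQueue.push(std::move(bytes));
        }
        mNotEmpty.notify_one();
    }

    Bytes Pop()
    {
        std::unique_lock<std::mutex> lock(mMutex);
        mNotEmpty.wait(lock, [this] { return !mQueue.empty(); });
        Bytes bytes = std::move(mQueue.front());
        mQueue.pop();
        return bytes;
    }

private:
    std::mutex mMutex;
    std::condition_variable mNotEmpty;
    std::queue<Bytes> mQueue;
};
```

One thread would call `Push(Pack(sample))` while the other calls `Unpack<Sample>(queue.Pop())`.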

Step 2

Refactor the code into publisher and subscriber. Two queues between publisher and subscriber simulate the bidirectional network communication between them. Access to these internal queues makes it possible to simulate network errors.
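To make the idea concrete, the sketch below shows one way two user-space queues could stand in for the network, with helpers that inject faults. `SimulatedLink` and its members are hypothetical names chosen for illustration, and the sketch is deliberately single-threaded (no locking), so it shows only the fault-injection idea, not the real publisher/subscriber plumbing.

```cpp
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical in-memory link: one queue per direction.
struct SimulatedLink
{
    std::deque<Bytes> toSubscriber;  // data frames: publisher -> subscriber
    std::deque<Bytes> toPublisher;   // ACKs: subscriber -> publisher

    // Fault injection. Because the queues live in user space, a test can
    // tamper with frames before the subscriber reads them.

    // Simulate a lost packet.
    void DropFront()
    {
        if (!toSubscriber.empty())
            toSubscriber.pop_front();
    }

    // Simulate a duplicated packet.
    void DuplicateFront()
    {
        if (!toSubscriber.empty())
        {
            Bytes copy = toSubscriber.front();
            toSubscriber.push_front(std::move(copy));
        }
    }

    // Simulate two packets arriving out of order.
    void SwapFirstTwo()
    {
        if (toSubscriber.size() >= 2)
            std::swap(toSubscriber[0], toSubscriber[1]);
    }
};
```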

Step 3

Modify the consumer to tolerate duplicate and out-of-order packets on the simulated network. I did this before addressing the missing data problem because resends can themselves produce both reordered and duplicate packets, so this problem has to be solved first. The consumer ACKs the last delivered frame, but the producer discards these ACKs for the moment.

Refactored the "internal network" ready for noisy simulation and UDP implementations.
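The duplicate and reordering tolerance from this step could be sketched roughly as follows. This is an assumption-laden illustration rather than the article's code: `Frame` and `ReorderBuffer` are hypothetical names, frames are assumed to carry a sequence number starting at 0, and sequence-number wraparound is ignored.

```cpp
#include <cstdint>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical frame layout: a sequence number plus an opaque payload.
struct Frame
{
    std::uint32_t seq;
    std::string payload;
};

class ReorderBuffer
{
public:
    // Accept a frame from the network and return the payloads that have
    // become deliverable, in sequence order (possibly none).
    std::vector<std::string> OnFrame(const Frame& frame)
    {
        std::vector<std::string> delivered;
        if (frame.seq < mNextSeq)
            return delivered;  // duplicate of a frame already delivered

        // emplace does nothing if this sequence number is already held,
        // which discards duplicates of frames still waiting in the buffer.
        mHeld.emplace(frame.seq, frame.payload);

        // Deliver the contiguous run starting at the next expected number.
        auto it = mHeld.begin();
        while (it != mHeld.end() && it->first == mNextSeq)
        {
            delivered.push_back(std::move(it->second));
            it = mHeld.erase(it);
            ++mNextSeq;
        }
        return delivered;
    }

    // One past the last delivered sequence number. Once something has been
    // delivered, the ACK value would be NextExpected() - 1.
    std::uint32_t NextExpected() const { return mNextSeq; }

private:
    std::uint32_t mNextSeq = 0;
    std::map<std::uint32_t, std::string> mHeld;  // early frames, keyed by seq
};
```

Holding early frames in an ordered map keeps delivery in sequence at the cost of buffering; an alternative design would simply drop early frames and rely on resends.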

Step 4

Added support for lost data. The producer maintains a pending queue of frames waiting to be ACK'd by the consumer. The oldest un-ACK'd frame is resent after a timeout. ACKs are used to clear the pending queue of frames the consumer has delivered. The producer-side pending queue is fixed in size, so it introduces a basic form of flow control between producer and consumer.

Added stress testing. The unit tests have timing dependencies in them, so the producer should be refactored to expose its internals for better testing.

Rearrange entities into their own files.
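The pending-queue mechanism from this step might look something like the sketch below. `PendingFrame` and `PendingWindow` are hypothetical names, and two things are assumptions on my part: that an ACK is cumulative (it covers every frame up to and including the acknowledged sequence number), and that the current time is passed in by the caller, which sidesteps the timing dependencies in tests mentioned above.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <string>

using Clock = std::chrono::steady_clock;

// Hypothetical record of a frame that has been sent but not yet ACK'd.
struct PendingFrame
{
    std::uint32_t seq;
    std::string payload;
    Clock::time_point lastSent;
};

class PendingWindow
{
public:
    PendingWindow(std::size_t capacity, Clock::duration timeout)
        : mCapacity(capacity), mTimeout(timeout) {}

    // Returns false when the window is full: the caller has to wait for
    // ACKs before sending more, which is the basic flow control.
    bool TrySend(const std::string& payload, Clock::time_point now)
    {
        if (mPending.size() >= mCapacity)
            return false;
        mPending.push_back({mNextSeq++, payload, now});
        return true;
    }

    // Cumulative ACK (assumed): drop every frame up to and including ackedSeq.
    void OnAck(std::uint32_t ackedSeq)
    {
        while (!mPending.empty() && mPending.front().seq <= ackedSeq)
            mPending.pop_front();
    }

    // Returns the oldest un-ACK'd frame if its timeout has expired and
    // restarts its timer; returns nullptr if nothing is due for a resend.
    const PendingFrame* FrameToResend(Clock::time_point now)
    {
        if (mPending.empty() || now - mPending.front().lastSent < mTimeout)
            return nullptr;
        mPending.front().lastSent = now;
        return &mPending.front();
    }

    std::size_t Size() const { return mPending.size(); }

private:
    std::size_t mCapacity;
    Clock::duration mTimeout;
    std::uint32_t mNextSeq = 0;
    std::deque<PendingFrame> mPending;
};
```

A producer loop would call `TrySend` for new data, `OnAck` when an ACK arrives, and `FrameToResend` periodically to decide whether the oldest frame needs to go out again.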

Step 5

Added logging and a UDP plugin between producer and consumer.

Using the Code

Below is an example of how QUDP may be used. The client-side code creates a UdpNetwork object with its port number in the constructor, then passes it to the constructor of QConsumer.

    auto consumer = std::thread([]()
    {
        std::shared_ptr<INetwork> qudp(new UdpNetwork(31415));
        auto qConsumer = std::make_unique<QConsumer<SignalData>>(qudp);

        while (true)
        {
            SignalData data;
            qConsumer->DeQ(data);
            printf("Time stamp %f \t\t Signal %f\n", data.mTimeStamp_sec, data.mValue);
        }
    });

The server-side code creates a UdpNetwork object with the client's IP address and port number in the constructor, then passes it to the constructor of QProducer.

    auto producer = std::async(std::launch::async, []()
    {
        auto processStart = system_clock::now();
        duration<int, std::milli> sleepTime_ms(10);

        std::shared_ptr<INetwork> qudp(new UdpNetwork("127.0.0.1", 31415));
        auto qProducer = std::make_unique<QProducer<SignalData>>(qudp);

        while (true)
        {
            std::this_thread::sleep_for(sleepTime_ms);

            const auto uSecSinceStart =
                duration_cast<microseconds>(system_clock::now() - processStart);
            const auto signal = GenerateSignal(uSecSinceStart);
            const auto secSinceStart = static_cast<double>(uSecSinceStart.count()) / uSecInASec;

            SignalData data{signal, secSinceStart};
            qProducer->EnQ(data);
        }
    });

The full demo code can be found here and here.

Improvements

A few things I'm not happy with:

- There's no initialization handshaking between producer and consumer. If the producer starts before the consumer, initial packets are lost and must be resent. It takes a while to recover from this.

- The producer side pending queue size and timeouts need tuning. At high send rates, a lost frame has a tendency to briefly stall transmission of later packets.

- The ACK part of the protocol isn't enough to maintain a steady throughput of data when frames are getting lost. The use of NACKs (negative acknowledgements) from the consumer might reduce producer-side stalling.
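As a starting point for the NACK idea in the last item, here is a hedged sketch of how a consumer might detect gaps in the sequence numbers it sees and decide which frames to NACK. None of this exists in the current code: `GapDetector` is a hypothetical name, sequence numbers are assumed to start at 0, and wraparound is ignored. Note that plain reordering would trigger NACKs for frames that are merely late, so a real implementation would probably want a short delay before sending them.

```cpp
#include <cstdint>
#include <set>
#include <vector>

class GapDetector
{
public:
    // Record an arriving sequence number and return the sequence numbers
    // that have just been found missing (candidates for a NACK).
    std::vector<std::uint32_t> OnReceive(std::uint32_t seq)
    {
        std::vector<std::uint32_t> nacks;
        if (seq >= mNextUnseen)
        {
            // Everything between the previous high-water mark and this
            // frame has been skipped over.
            for (std::uint32_t missing = mNextUnseen; missing < seq; ++missing)
            {
                mMissing.insert(missing);
                nacks.push_back(missing);
            }
            mNextUnseen = seq + 1;
        }
        else
        {
            // A late arrival or a duplicate: it is no longer missing.
            mMissing.erase(seq);
        }
        return nacks;
    }

    // Sequence numbers still outstanding.
    const std::set<std::uint32_t>& Missing() const { return mMissing; }

private:
    std::uint32_t mNextUnseen = 0;    // one past the highest seq seen so far
    std::set<std::uint32_t> mMissing; // gaps not yet filled
};
```

On receiving a NACK, the producer could resend the named frame immediately instead of waiting for its timeout, which is where the reduction in stalling would come from.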

But it was a fun little project and I'm looking forward to developing it further. :)

History

- 14th November, 2021 - Initial version