Here we take our trained model and run it where it makes the most sense for our use case, walking through two hands-on scenarios, a local deployment and a deployment to Azure, to show that Azure Arc-enabled ML doesn’t force you to choose one over the other.

In the previous article of the series, we learned how to train a machine learning (ML) model on an Arc-enabled Kubernetes cluster. Once the model completes training, we should ideally be able to deploy and run it where it makes the most sense for our use case.

We might run it on-premises, but we might also run it on Azure. One of the significant advantages of using Arc-enabled ML is that it doesn’t force us to choose one over the other. Instead, it allows us to run our model and enable inference anywhere, whether on the edge, on-premises or in Azure.

In this article, we’ll demonstrate deploying a model locally, then in Azure. You can view the full project code on GitHub.

Deploying an ML Model Using a Managed Online Endpoint

Online endpoints enable us to deploy and run our ML models in a scalable and managed way. They work with powerful CPU- and GPU-enabled machines in Azure, allowing us to serve, scale, secure, and monitor our models without the overhead of setting up and managing the underlying infrastructure. The endpoints quickly process small requests and provide near real-time responses.

Prerequisites

To deploy our model using managed online endpoints, we must ensure we have:

- An active Azure subscription. It’s free to sign up, and a free account includes $200 of credit for the first 30 days and free access to popular services for 12 months.

- Installed and configured the Azure CLI

- Installed the ML extension to the Azure CLI by executing the following command:

$ az extension add -n ml -y

- An Azure resource group with contributor access

- An Azure Machine Learning (AML) workspace

- Docker installed on our local system since we need it for local deployment

- Set the default settings for the Azure CLI

We set the default settings for the Azure CLI with the following commands so we don’t have to pass in the values for our subscription, workspace, and resource group with every command:

$ az account set --subscription <subscription ID>

$ az configure --defaults workspace=<Azure Machine Learning workspace name> group=<resource group>

Note: You should already have a resource group and AML workspace if you’ve been following the article series. Use the previously created resources to set up your system for model deployment.

Once we’ve gone through the above steps, our system is ready for deployment. To deploy our model using online endpoints, we’ll need the following:

- Trained model files or name and version of the model registered in our workspace

- A scoring file to score the model

- A deployment environment where our model runs

Let’s start by creating a model on Azure.

Creating a Model on Azure

There are many ways to create ML resources on Azure. We can train and register our ML model in AML, or register a previously trained model using a CLI command. In the previous article of this series, we trained and registered the model with Azure, but we could have registered it with a CLI command instead.

To register a model on Azure, we need a YAML file containing the model specifications. We can use the following YAML template file to create a resource-specific YAML file.

```yaml
$schema: https:
name: {model name}
version: 1
local_path: {path to local model}
```

The above YAML file starts by specifying a schema for the model. It also specifies attributes for the Azure model such as name, version, and a path to the location of the model locally. We can change the attributes to match a particular model.
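Only the attribute values change from one model to the next, so the template can also be filled in programmatically. Below is a minimal sketch in Python that is not part of the original project. `render_model_yaml` and `write_model_yaml` are hypothetical helpers, and the caller passes in the schema URL instead of it being hard-coded.

```python
from pathlib import Path


def render_model_yaml(schema_url: str, name: str, version: int, local_path: str) -> str:
    """Fill the model template with resource-specific values (hypothetical helper)."""
    # Field names mirror the template above; values come from the caller.
    lines = [
        f"$schema: {schema_url}",
        f"name: {name}",
        f"version: {version}",
        f"local_path: {local_path}",
    ]
    return "\n".join(lines) + "\n"


def write_model_yaml(target: Path, **fields) -> Path:
    """Write the rendered YAML to disk so it can be passed to `az ml model create -f`."""
    target.write_text(render_model_yaml(**fields))
    return target


if __name__ == "__main__":
    print(render_model_yaml(
        schema_url="<model schema URL>",
        name="fashion-mnist-tf",
        version=1,
        local_path="./outputs/model/model.h5",
    ))
```

The file written by `write_model_yaml` is what the `-f` argument of the following command expects.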

Once we have the YAML file containing the model specification, we execute the following command to create the model on Azure:

$ az ml model create -f {path to YAML file}

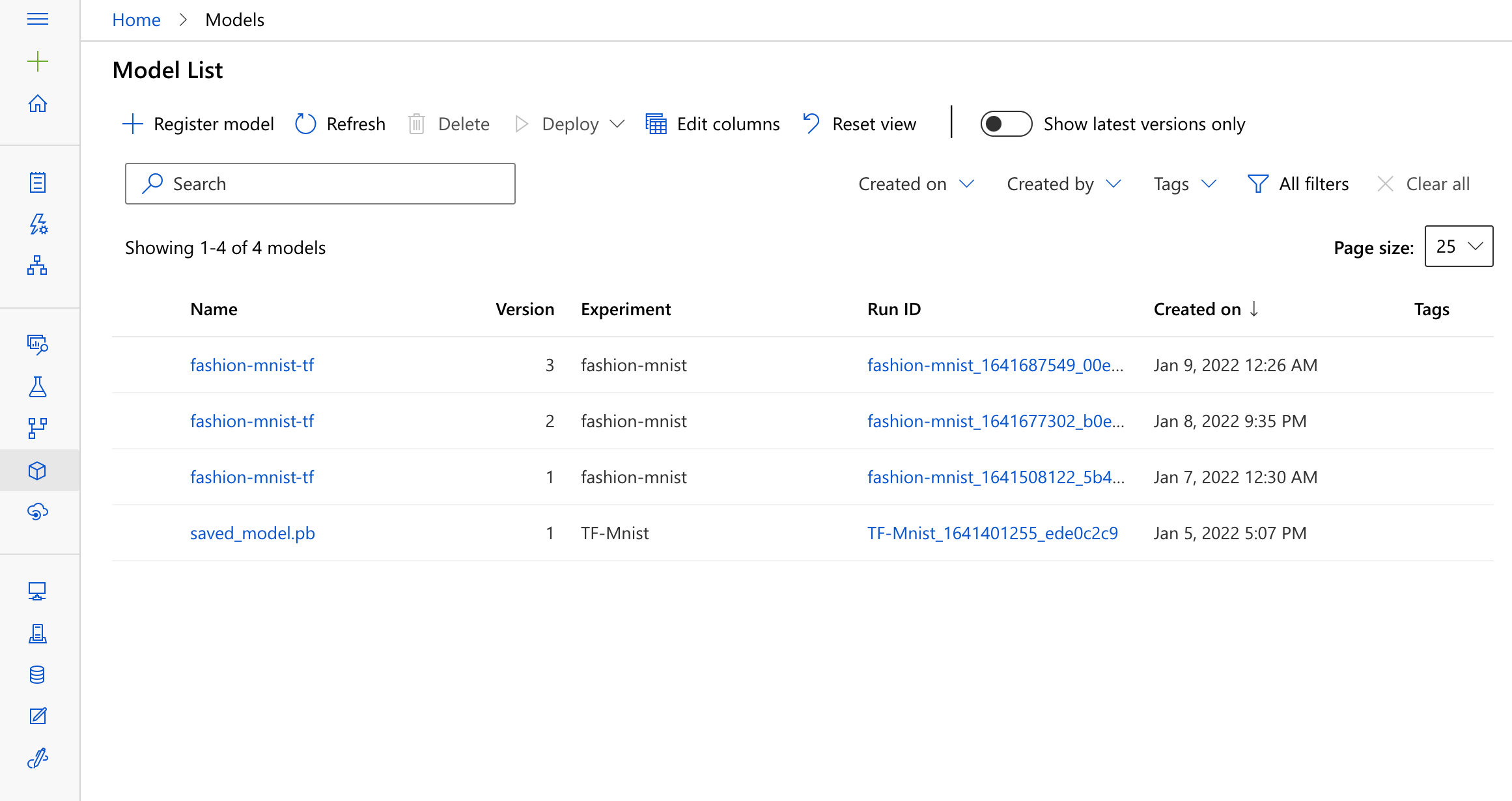

After we register our model on Azure, we should be able to see it in AML studio, under Models.

Creating a Scoring File

When invoked, the endpoint calls a scoring file to perform the actual scoring and prediction. This scoring file follows a prescribed structure and must have an init function and a run function. The endpoint calls init every time the deployment is created or updated, typically to load the model, and calls run every time the endpoint is invoked.

Let’s take a closer look at these functions by creating a score.py file on the local system with the following code, which scores the fashion-mnist-tf model.

```python
import json
import logging
import os

import keras as K
import numpy as np
import tensorflow as tf

# Maps the model's output index to a human-readable Fashion-MNIST class name.
labels_map = {
    0: 'T-Shirt',
    1: 'Trouser',
    2: 'Pullover',
    3: 'Dress',
    4: 'Coat',
    5: 'Sandal',
    6: 'Shirt',
    7: 'Sneaker',
    8: 'Bag',
    9: 'Ankle Boot',
}


def predict(model: tf.keras.Model, x: np.ndarray) -> tf.Tensor:
    # Forward pass in inference mode, then pick the most likely class per sample.
    y_prime = model(x, training=False)
    probabilities = tf.nn.softmax(y_prime, axis=1)
    predicted_indices = tf.math.argmax(input=probabilities, axis=1)
    return predicted_indices


def init():
    # Called when the deployment is created or updated: load the model into memory.
    global model
    print("Executing init() method...")
    if 'AZUREML_MODEL_DIR' in os.environ:
        # In Azure, AZUREML_MODEL_DIR points to the registered model's root folder.
        model_path = os.path.join(os.getenv('AZUREML_MODEL_DIR'), './outputs/model/model.h5')
    else:
        # Local fallback, relative to the current working directory.
        model_path = './outputs/model/model.h5'
    model = K.models.load_model(model_path)
    print("loaded model...")


def run(raw_data):
    # Called for every request: parse the JSON body and return the predicted label.
    print("Executing run(raw_data) method...")
    data = np.array(json.loads(raw_data)['data'])
    # Reshape a single 28x28 image into a batch of one with a channel dimension.
    data = np.reshape(data, (1, 28, 28, 1))
    predicted_index = int(predict(model, data).numpy()[0])
    predicted_name = labels_map[predicted_index]
    logging.info('Predicted name: %s', predicted_name)
    logging.info('Run completed')
    return predicted_name
```

In the above code, the predict function runs the model on the input data and returns the index of the label with the highest probability. The init function is responsible for loading the model. In Azure, the AZUREML_MODEL_DIR environment variable gives us the path to the model’s root folder. If the environment variable is not available, init loads the model from a path relative to the current working directory on the local machine.
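Moving the path lookup out of `init()` lets us test the fallback without deploying to Azure. Below is a minimal sketch that assumes the registered model keeps the `outputs/model/model.h5` layout used above. `resolve_model_path` and the example directory are hypothetical. The environment is passed in as a mapping instead of being read from `os.environ` directly, so both branches can be exercised.

```python
import os
from typing import Mapping, Optional

RELATIVE_MODEL_PATH = os.path.join("outputs", "model", "model.h5")


def resolve_model_path(environ: Optional[Mapping[str, str]] = None) -> str:
    """Mirror init(): use AZUREML_MODEL_DIR when present, else a path relative to the cwd."""
    env = os.environ if environ is None else environ
    if "AZUREML_MODEL_DIR" in env:
        # Inside an Azure ML deployment, the platform sets the model's root folder.
        return os.path.join(env["AZUREML_MODEL_DIR"], RELATIVE_MODEL_PATH)
    # Local run: resolved against whatever directory the script is started from.
    return RELATIVE_MODEL_PATH


if __name__ == "__main__":
    print(resolve_model_path({"AZUREML_MODEL_DIR": "/models/fashion-mnist-tf/1"}))
    print(resolve_model_path({}))
```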

The run function is responsible for prediction. It takes raw_data as a parameter, which contains the data we pass when invoking the endpoint. The run function parses the JSON data, reshapes it into the input shape the model expects, and calls the predict function on it. It then converts the predicted index into a human-readable label using labels_map and returns that label.
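We can check the predict-then-map steps without TensorFlow. The NumPy sketch below reproduces the softmax, argmax, and label lookup. `softmax` and `to_label` are hypothetical helpers, and the logits are made-up numbers. The sketch also shows that softmax never changes which index wins. It only matters when we want actual probabilities.

```python
import numpy as np

LABELS = ["T-Shirt", "Trouser", "Pullover", "Dress", "Coat",
          "Sandal", "Shirt", "Sneaker", "Bag", "Ankle Boot"]


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def to_label(logits: np.ndarray) -> str:
    """Same steps as predict() + run(): softmax, argmax over classes, map index to a name."""
    probabilities = softmax(logits)
    index = int(np.argmax(probabilities, axis=1)[0])
    return LABELS[index]


if __name__ == "__main__":
    # A hypothetical batch of one: raw scores for the 10 classes, highest at index 8.
    logits = np.array([[0.1, -1.2, 0.3, 0.0, 0.2, -0.5, 0.4, 0.1, 2.5, -0.3]])
    print(to_label(logits))  # Bag
    # Softmax is monotonic, so argmax of logits and of probabilities agree.
    print(np.argmax(logits) == np.argmax(softmax(logits)))  # True
```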

With our scoring file in place, let’s create the environment for deployment.

Creating a Deployment Environment

The AML environment specifies the runtime and other configurations for training and prediction jobs in Azure. Azure provides various options for inference, including pre-built Docker images or base images for custom environments. Here, we use a base image provided by Microsoft and extend it manually with all the packages required for inference on Azure.

Before we create our deployment environment, we must set the endpoint name with the following command:

$ export ENDPOINT_NAME="azure-arc-demo"

We also must create a YAML file containing the endpoint specification, such as the schema, name, and authentication mode. We create an endpoint.yml file as follows:

```yaml
$schema: https:
name: azure-arc-demo
auth_mode: key
```

The next step is to define our deployment environment dependencies. Since we use a base image, we must extend the image using a Conda YAML file containing all the dependencies. We create a conda.yml file and add the following code to it:

```yaml
name: test
channels:
  - conda-forge
dependencies:
  - python
  - numpy
  - pip
  - tensorflow
  - scikit-learn
  - keras
  - pip:
      - azureml-defaults
      - inference-schema[numpy-support]
```

This file contains all the dependencies required for inference. Note that we also added the azureml-defaults package, which we need for inference on Azure. With the Conda file in place, we create a deployment.yml file as follows:

```yaml
$schema: https:
name: fashion-mnist
type: kubernetes
endpoint_name: azure-arc-demo
app_insights_enabled: true
model:
  name: fashion-mnist-tf
  version: 1
  local_path: "./outputs/model/model.h5"
code_configuration:
  code:
    local_path: .
  scoring_script: score.py
instance_type: defaultinstancetype
environment:
  conda_file: ./conda.yml
  image: mcr.microsoft.com/azureml/openmpi3.1.2-ubuntu18.04:latest
```

In the above file, we set fields such as endpoint_name, model, scoring_script, and conda_file, making sure each local path points to the location of the corresponding file.
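A wrong path is easy to miss until the deployment fails, so a quick pre-flight check can help. Below is a hypothetical sketch that assumes deployment.yml has already been parsed into a Python dict (for example with a YAML parser, not shown). It also assumes the paths are resolved relative to the folder that contains the file. `missing_local_paths` is an illustrative name, and it checks only the keys used in this article.

```python
import tempfile
from pathlib import Path
from typing import List


def missing_local_paths(deployment: dict, base_dir: Path) -> List[str]:
    """List local paths referenced by a parsed deployment spec that don't exist under base_dir."""
    code = deployment.get("code_configuration", {})
    code_dir = code.get("code", {}).get("local_path")
    script = code.get("scoring_script")
    candidates = [
        deployment.get("model", {}).get("local_path"),
        code_dir,
        deployment.get("environment", {}).get("conda_file"),
        # The scoring script is looked up inside the code directory.
        str(Path(code_dir) / script) if code_dir and script else None,
    ]
    return [p for p in candidates if p and not (base_dir / p).exists()]


if __name__ == "__main__":
    # Mirrors the keys of deployment.yml above, already loaded into a dict (hypothetical).
    spec = {
        "model": {"local_path": "./outputs/model/model.h5"},
        "code_configuration": {"code": {"local_path": "."}, "scoring_script": "score.py"},
        "environment": {"conda_file": "./conda.yml"},
    }
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        (base / "score.py").write_text("")
        (base / "conda.yml").write_text("")
        print(missing_local_paths(spec, base))  # ['./outputs/model/model.h5']
```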

Once we’ve created all the above files, it’s time to deploy the model.

Deploying the Model Locally

Before deploying the model, we first create the endpoint by executing the following command:

$ az ml online-endpoint create --local -n azure-arc-demo -f ./fashion-mnist-deployment/endpoint.yml

Next, we deploy the model as follows:

$ az ml online-deployment create --local -n fashion-mnist --endpoint azure-arc-demo -f ./fashion-mnist-deployment/deployment.yml

The above command creates a local deployment named fashion-mnist under the azure-arc-demo endpoint. We verify the deployment with this command:

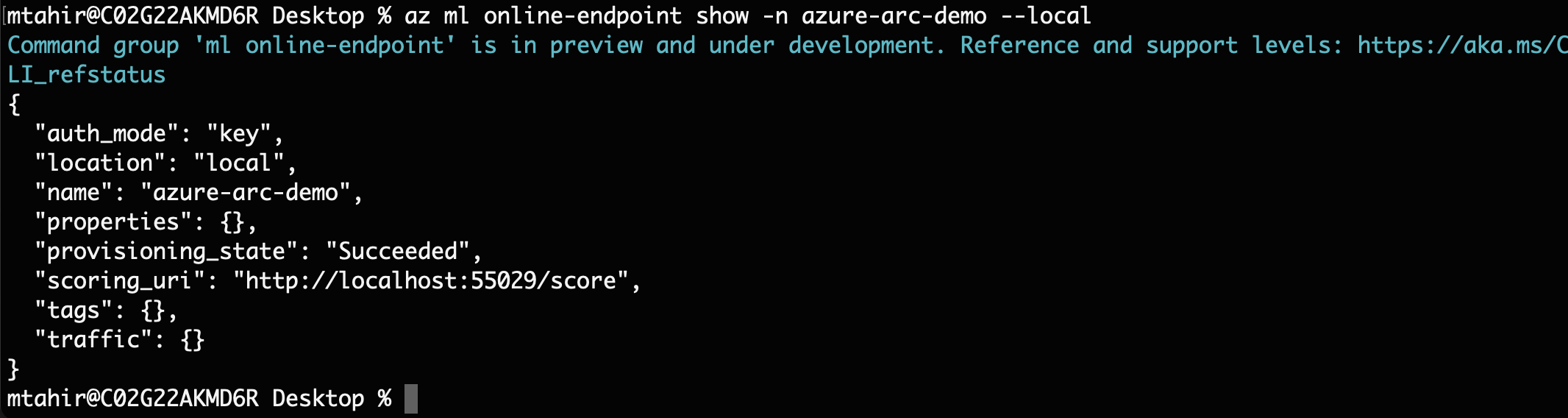

$ az ml online-endpoint show -n azure-arc-demo --local

Our deployment is successful, and we can now invoke it for inference.
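When the deployment steps are scripted, we may want to check the result programmatically rather than by eye. Below is a hedged sketch that runs the same `show` command with JSON output and reads one field. It assumes the CLI reports the state under a `provisioning_state` key. `endpoint_state` and `show_local_endpoint` are hypothetical helpers.

```python
import json
import subprocess


def endpoint_state(show_output: str) -> str:
    """Pull the provisioning state out of `az ml online-endpoint show` JSON output.

    Assumption: the CLI reports it under the "provisioning_state" key.
    """
    return json.loads(show_output).get("provisioning_state", "Unknown")


def show_local_endpoint(name: str) -> str:
    """Run the show command used above, requesting JSON output, and return its stdout."""
    completed = subprocess.run(
        ["az", "ml", "online-endpoint", "show", "-n", name, "--local", "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return completed.stdout
```

With the Azure CLI and ML extension installed, `endpoint_state(show_local_endpoint("azure-arc-demo"))` would return the state string.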

Invoking the Endpoint for Inference

Once the deployment is successful, we can invoke the endpoint for inference by passing it a request. But first, we must create a file containing the input data for prediction. We can take an image from the Fashion-MNIST test set, convert it to JSON, and pass it to the endpoint for prediction. We create a sample request using the following code:

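```python
import json

from keras.datasets import fashion_mnist
from tensorflow.keras.utils import to_categorical

# Load Fashion-MNIST; only the test split is needed to build a sample request.
(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()
num_classes = 10

# Scale pixel values from 0-255 to the 0-1 range.
x_test = x_test.astype('float32')
x_test /= 255
y_test = to_categorical(y_test, num_classes)

# Save the test image at index 10 under the "data" key that run() expects.
with open('mydata.json', 'w') as f:
    json.dump({"data": x_test[10].tolist()}, f)
```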

The above code loads the Fashion-MNIST test set and scales the pixel values to the range 0 to 1. It then takes the image at index 10 as a 28 x 28 matrix of pixel values, stores it under the data key of a JSON dictionary, and saves the result in a mydata.json file.

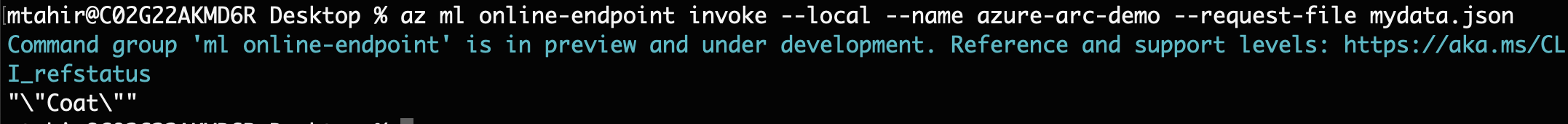
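The payload only needs the shape and scaling that run() expects. Below is a minimal NumPy-only sketch that builds a request body and then decodes it exactly as run() does, confirming the round trip. It needs no dataset download. The synthetic image is a hypothetical stand-in for x_test[10], and `build_request` and `decode_like_run` are illustrative names.

```python
import json

import numpy as np


def build_request(image: np.ndarray) -> str:
    """Scale a 28x28 uint8 image to [0, 1] and wrap it under the "data" key."""
    if image.shape != (28, 28):
        raise ValueError(f"expected a 28x28 image, got {image.shape}")
    scaled = image.astype("float32") / 255
    return json.dumps({"data": scaled.tolist()})


def decode_like_run(raw_data: str) -> np.ndarray:
    """Apply the same parsing and reshaping that run() in score.py performs."""
    data = np.array(json.loads(raw_data)["data"])
    return np.reshape(data, (1, 28, 28, 1))


if __name__ == "__main__":
    # Hypothetical image: a blank canvas with one white pixel.
    image = np.zeros((28, 28), dtype=np.uint8)
    image[14, 14] = 255
    batch = decode_like_run(build_request(image))
    print(batch.shape, batch.max())  # (1, 28, 28, 1) 1.0
```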

Now that we have our sample request, we invoke the endpoint as follows:

$ az ml online-endpoint invoke --local --name azure-arc-demo --request-file ./fashion-mnist-deployment/mydata.json

The endpoint responds with the prediction in near real-time.

Deploying the Online Endpoint to Azure

Deploying our endpoint to Azure is just as easy as deploying it to a local environment. When deploying the model locally, we used the --local flag, which directs the CLI to deploy the endpoints in the local Docker environment. Simply removing this flag allows us to deploy our model on Azure.
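If we script both targets, the `--local` flag is the only branch we need. Below is a minimal sketch that assumes Python 3.8+ for `shlex.join`. `endpoint_create_cmd` is a hypothetical helper, and it uses only the flags that appear in this article’s commands.

```python
import shlex
from typing import List


def endpoint_create_cmd(name: str, spec: str, local: bool) -> List[str]:
    """Build the `az ml online-endpoint create` call; only --local differs between targets."""
    cmd = ["az", "ml", "online-endpoint", "create", "--name", name, "-f", spec]
    if local:
        cmd.append("--local")  # target the local Docker environment instead of Azure
    return cmd


if __name__ == "__main__":
    spec = "./fashion-mnist-deployment/endpoint.yml"
    for local in (True, False):
        print(shlex.join(endpoint_create_cmd("azure-arc-demo", spec, local)))
```

The resulting list can be passed straight to `subprocess.run`.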

We execute the following command to create the endpoint in the cloud:


$ az ml online-endpoint create --name azure-arc-demo -f ./fashion-mnist-deployment/endpoint.yml

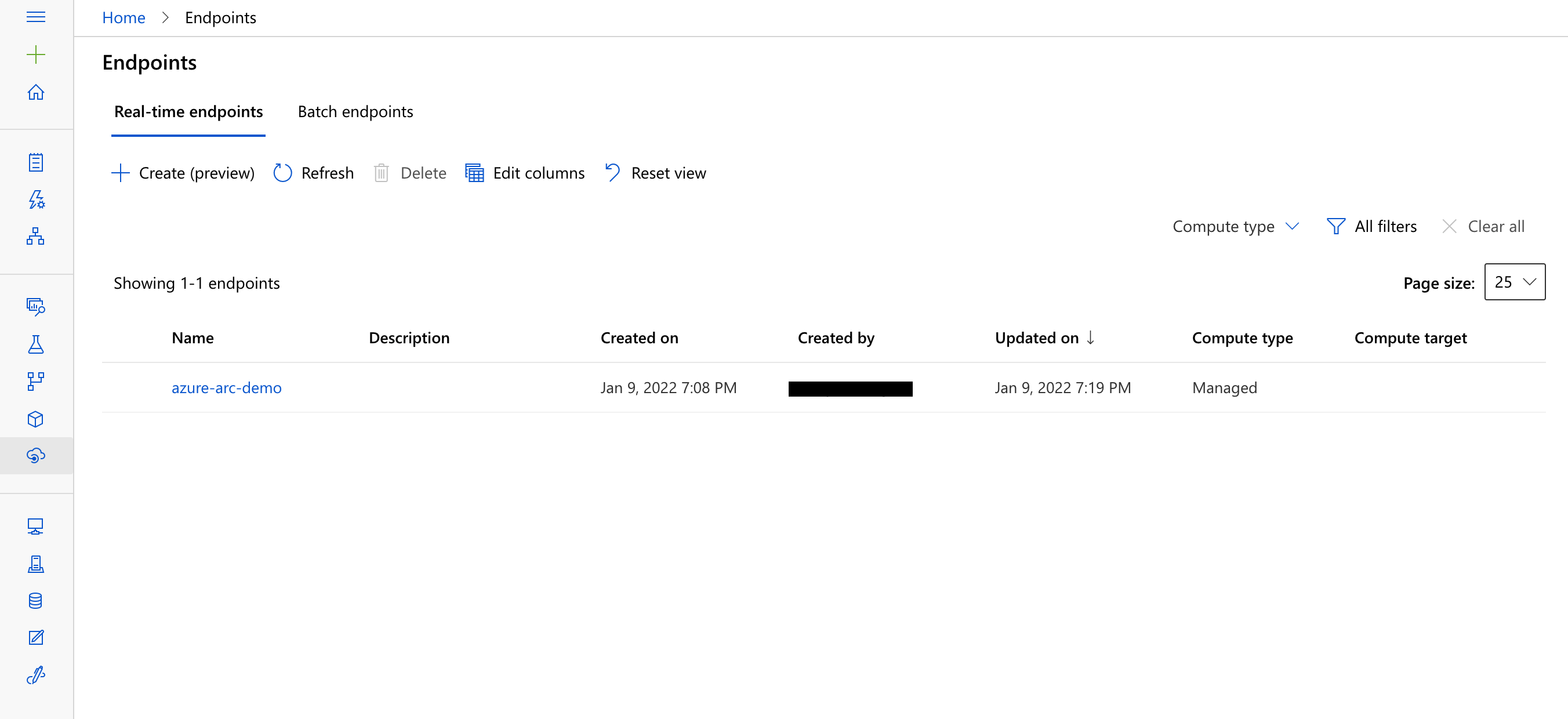

After creating the endpoint, we can see it in AML studio under Endpoints.

Next, we create a deployment named fashion-mnist under the endpoint.

$ az ml online-deployment create --name fashion-mnist --endpoint azure-arc-demo -f ./fashion-mnist-deployment/deployment.yml --all-traffic

The deployment can take some time, depending on the underlying environment. Once the deployment is successful, we can invoke the model for inference as follows:

$ az ml online-endpoint invoke --name azure-arc-demo --request-file ./fashion-mnist-deployment/mydata.json

The above command returns the same output as our locally deployed model.
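The CLI is only one possible client. Because endpoint.yml sets `auth_mode: key`, any HTTP client that holds the endpoint key can call the scoring URI directly. Below is a hedged sketch using only the Python standard library. `build_scoring_request` and `invoke` are hypothetical helpers. The scoring URI and key are placeholders that would come from the CLI (for example, `az ml online-endpoint show` and `az ml online-endpoint get-credentials`). The optional deployment header is an assumption about how Azure ML routes a request to a specific deployment.

```python
import json
import urllib.request
from typing import Optional


def build_scoring_request(scoring_uri: str, key: str, payload: dict,
                          deployment: Optional[str] = None) -> urllib.request.Request:
    """Prepare a POST to the endpoint's scoring URI using key-based auth (auth_mode: key)."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {key}",  # the endpoint key is sent as a bearer token
    }
    if deployment:
        # Assumption: Azure ML routes requests carrying this header to the named deployment.
        headers["azureml-model-deployment"] = deployment
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(scoring_uri, data=body, headers=headers, method="POST")


def invoke(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the prepared request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Loading mydata.json into a dict and passing it as `payload` would send the same body as the CLI `invoke` command.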

Summary

In this article, we learned to deploy the model locally and on Azure. The model deployment process would be the same even if we had an Arc-enabled Kubernetes cluster on another cloud provider or at the edge. Arc-enabled machine learning allows us to seamlessly deploy and run our model regardless of hardware and location.

This article series is an introductory guide to getting started with Arc-enabled machine learning. Kubernetes opens many doors for optimizing the machine learning process, while Arc makes the whole process smooth and gives us the freedom to train and run our machine learning models on the hardware of our choice.

To pursue powerful, scalable machine learning, sign up for a free Azure account.

To learn more about how to configure Azure Kubernetes Service (AKS) and Azure Arc-enabled Kubernetes clusters for training and inferencing machine learning workloads, check out Configure Kubernetes clusters for machine learning.