To extract the best performance from modern heterogeneous systems, developers require parallel programming, using CPUs and accelerators. Workload advancements in the twenty-first century have led to complex problems that require more computing power, but hardware doesn’t always keep up with these accelerating needs.

Even when hardware can deliver improvements, software can drive new requirements or workloads may turn out to be better suited for alternate hardware. Developers want to take advantage of the diversity of hardware in the market, but some software is written for one particular hardware, limiting their ability to scale cross-platform. As such, the focus has shifted from hardware to software.

Parallel programming models are one way to improve performance by more efficient hardware use, and they need to be extended to support combinations of CPUs and accelerators. This approach uses the heterogeneous architectures already available and significantly enhances the performance of computationally intensive applications.

Let’s compare two parallel programming options: CUDA and SYCL.

NVIDIA CUDA

The Compute Unified Device Architecture (CUDA) platform is NVIDIA’s proprietary parallel computing platform and programming model for general computing. CUDA enables developers to harness the power of graphic processing units (GPUs) to speed up applications.

SYCL

SYCL is a single-source, standard C++ programming model. The SYCL parallel programming platform is an alternative to CUDA, offering several advantages.

Unlike CUDA, which is a proprietary language used only for NVIDIA hardware, SYCL allows developers to support various devices, including CPUs, GPUs, and field-programmable gate arrays (FPGAs). SYCL achieves this by using a single codebase written in standard C++.

To support programming heterogeneous systems, SYCL emphasizes supporting multiple architectures well without any vendor preference. According to an Evans Data survey, 40 percent of developers target heterogeneous systems that use more than one type of processor, processor core, or coprocessor1.

SYCL provides an abstraction layer that allows code for heterogeneous processors to be in a single source file instead of separate host and kernel files.

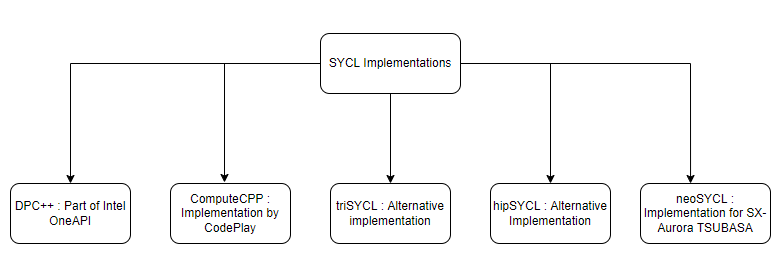

There are multiple SYCL compilers available in the market:

- Data Parallel C++ (DPC++)

- ComputeCpp

- HipSYCL

- triSYCL

- neoSYCL

The popular DPC++ compiler for C++ and SYCL is favored by Intel® oneAPI. oneAPI is an open, standards-based unified programming model which provides simple and same development experience across multiple architectures. SYCL provides data parallelism and heterogeneous programming for performance in CPUs, GPUs, FPGAs, and other accelerators that come to market.

SYCL is based on standard C++ constructs. oneAPI aims to simplify programming and allow source code reusability across hardware platforms while enabling unique accelerator tweaking.

Comparing SYCL and CUDA

SYCL and CUDA serve the same purpose: to enhance performance through processing parallelization in varied architectures. However, SYCL offers more extendibility and code flexibility than CUDA while simplifying the coding process.

Instead of using complex syntax, SYCL enables developers to use ISO C++ for programming. Unlike CUDA, SYCL is a pure C++ domain-specific embedded language that doesn’t require C++ extensions, allowing for a simple CPU implementation that relies on pure runtime rather than a particular compiler.

SYCL is a competitive alternative to CUDA in terms of programmability. With SYCL, there’s no need for a complex toolchain to develop an application, and the tools ecosystem is readily available, ensuring a hassle-free development experience.

SYCL doesn’t need separate source files for the host and device. Instead, you can find the code for the host and the device in the same C++ source file. SYCL implementations are capable of splitting up this source file, parsing the code, and sending it to the appropriate compilation backend.

As mentioned previously, SYCL is vendor-agnostic. It’s built for openness and vendor independence. SYCL code executes on practically any platform, from CPUs to accelerated servers. By generalizing and introducing additional APIs, SYCL has evolved into a high-level programming model that can target a wide range of hardware. It enables generic programming and backend-specific optimizations.

Unlike CUDA, SYCL tries to solve the challenge of architecture interoperability by providing a set of interfaces for standard building blocks. You can optimize these blocks for multiple manufacturers and target platforms. The oneAPI initiative, a SYCL implementation, delivers an application platform with one unified interface that you can implement on diverse platforms. Just combine numerous libraries spanning a wide variety of building blocks with a standard programming style.

According to a 2021 performance study that analyzed GPU applications using SYCL and CUDA on Tesla V100 GPU2, it is evident that most aspects of SYCL’s performance are comparable to CUDA. However, SYCL applications provide an edge to development because of their portability: A single written SYCL application can run across multiple devices, which is not possible using CUDA. Because of their portable and write-once nature, SYCL applications are cost-effective for development.

When working with SYCL, we only need to specify our intention to read and write for the runtime to figure out which buffers it must transfer to and from host containers. SYCL command queues must be asynchronous, and while the actual execution order is unknown, the runtime satisfies data dependencies between kernels.

Performance portability is a priority for SYCL. However, because most performance portability is for lower-level building blocks built for this purpose, various architectures need to be considered. Adapting a kernel to a new hardware platform must be as simple, convenient, and painless as possible.

Migrating from CUDA to SYCL

CUDA has established a monopoly in parallel programming and the general-purpose computing on graphics processing units (GPGPU) field. Since the industry has become accustomed to using CUDA even with inherent vendor lock-in and higher operating costs, you may think that migration from CUDA to SYCL is impossible due to code incompatibility. However, SYCL provides a way out for when you wish to transfer to SYCL but are unable to due to the legacy coding you’ve already completed in CUDA.

The oneAPI compatibility tool helps transfer CUDA-based code to SYCL. The compatibility tool generates human-readable code, with the original identifiers from the original code preserved. The tool also detects and transforms standard CUDA index computations to SYCL.

The compatibility tool modifies CUDA-related code and leaves the rest alone. As a result, you must make only minor manual changes to create a runnable application. Furthermore, the modified elements are still human-readable and hence easily inspectable.

Conclusion

SYCL is a royalty-free, cross-platform abstraction layer that enables developers to code for heterogeneous processors in ISO C++. The application’s host and kernel code are in the same source file.

SYCL has evolved into a high-level programming model that can target a wide range of hardware. SYCL outperforms CUDA in capabilities and speeds the coding process. In terms of programmability, SYCL is a viable alternative to CUDA, requiring fewer lines of code to generate kernels and less frequent calls to critical API functions.

Code written in SYCL can run on almost any platform, from CPUs to accelerated servers. The oneAPI compatibility tool transfers CUDA-based code to SYCL, helping expand developers’ options.

If SYCL’s advantages over CUDA inspire you to give SYCL a chance, we urge you to familiarize yourself with oneAPI so you can better implement the SYCL model. The oneAPI initiative is an attempt at developing an industry standard that is portable and vendor agnostic. Industry leaders in high-performance computing (HPC), AI inventors, hardware vendors and original equipment manufacturers (OEMs), and universities are all taking a considerable interest in SYCL and coming on board. Try oneAPI for yourself to experience the difference.

Resources

Footnotes:

1Evans Data Global Development Survey 2020, Volume 2

2G. K. Reddy Kuncham, R. Vaidya and M. Barve, "Performance Study of GPU applications using SYCL and CUDA on Tesla V100 GPU," 2021 IEEE High Performance Extreme Computing Conference (HPEC), 2021, pp. 1-7, doi: 10.1109/HPEC49654.2021.9622813.