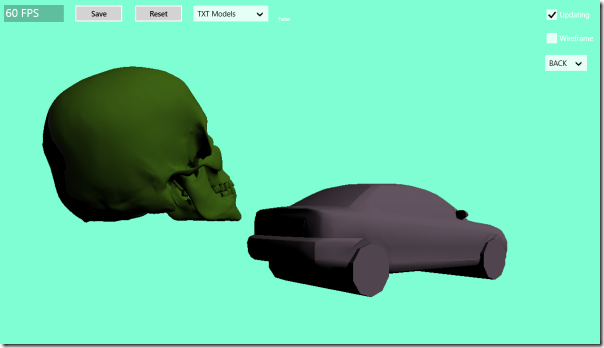

Here is my latest progress on writing a clean WinRT component that exposes a clean yet complete DirectX D3D (and maybe D2D as well) API to C#.

There will be a (not so) small part about WinRT C++/CX, generics and templates, and the rest will be about the DirectX D3D API and my component so far.

I can already say that DirectX initialization and drawing have become even simpler than in my previous iteration, while being more flexible and closer to the native DirectX API!

Finally, I want to say that my main learning material (apart from Google, the intertube, etc.) is Introduction to 3D Game Programming with DirectX 11 by Frank D. Luna. His source code can be found at D3Dcoder. My samples are only loosely inspired by his; I wrote my own math lib, for example.

1. Exposing C++ Array to C#

In DirectX, there are many buffers. A shape’s vertices are a typical example. One wants to expose a strongly typed array that can be updated from C#, while the native code keeps unrestricted access to the underlying data pointer, of course.

Platform::Collections::Vector<T> wouldn’t do. Maybe it’s just me, but I didn’t see how to access the buffer’s pointer. Plus it has no resize() method. Platform::Array<T> is fixed size as far as C# code is concerned.

I decided to roll my own templated class.

1.1. Microsoft Templated Collections

The first problem is that it’s not possible to create a generic definition in C++/CX. One can use templates, but they can’t be public ref classes, i.e., they can’t be exposed to non-C++ APIs. But there is a twist. It’s possible to expose a concrete implementation of Microsoft’s C++/CX templated interfaces. There are a few special templated interfaces with a particular meaning in WinRT. The namespace Windows::Foundation::Collections contains a list of templates that are automatically mapped to generic collection types in the .NET runtime.

For instance, by defining this template:

template <class T>

ref class DataArray sealed : Windows::Foundation::Collections::IVector<T>

{

public:

internal:

std::vector<T> data;

};

I have a class that I can return in lieu of IVector<T> (which will be wrapped into an IList<T> by the .NET runtime), and I can directly manipulate its internal data, even get a pointer to it (with &data[0], as long as the vector is not empty).

1.2. Concrete Implementation

This is a first step, but I need to provide a concrete implementation. Let’s say I want to expose the following templated class:

template <class T>

ref class TList

{

public:

TList() : data(ref new DataArray<T>()) {}

property IVector<T>^ List { IVector<T>^ get() { return data; } }

void Resize(UINT n) { data->data.resize(n); }

internal:

DataArray<T>^ data;

};

I can’t make it public, but I can write a concrete implementation manually as I need it, and simply wrap an underlying template, as in:

public ref class IntList sealed

{

public:

IntList() : list(ref new TList<int>()) {}

property IVector<int>^ List { IVector<int>^ get() { return list->List; } }

void Resize(UINT n) { list->Resize(n); }

internal:

TList<int>^ list;

};

But this gets old quickly! What if I use an old dirty C trick, like… a MACRO! I know, I know, but bear with me and behold!

#define TYPED_LIST(CLASSNAME, TYPE)\

public ref class CLASSNAME sealed\

{\

public:\

CLASSNAME() : list(ref new TList<TYPE>()) {}\

property IVector<TYPE>^ List { IVector<TYPE>^ get() { return list->List; } }\

void Resize(UINT n) { list->Resize(n); }\

internal:\

TList<TYPE>^ list;\

};

TYPED_LIST(Int32List, int)

TYPED_LIST(FloatList, float)

TYPED_LIST(UInt16List, USHORT)

At the end of this snippet, I have declared 3 strongly typed “list” classes in 3 lines! All the code is a no-brainer wrapper, and it is also easy to debug, as the debugger immediately steps into the template implementation!

This is how I implemented all the strongly typed structures I need for this API. And I can easily add new ones as I need them, in a single line each, as you can see!! ^^.
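For readers without a C++/CX toolchain at hand, the same macro-over-template trick can be tried in standard C++. The following is only a minimal sketch: the plain classes stand in for the ref classes above, and the At(), Size() and Data() members are names I made up for the illustration, not part of the component.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Plain C++ stand-in for the templated TList<T> (hypothetical members).
template <class T>
class TList {
public:
    void Resize(std::size_t n) { data_.resize(n); }
    std::size_t Size() const { return data_.size(); }
    T& At(std::size_t i) {
        assert(i < data_.size());  // precondition: index in range
        return data_[i];
    }
    // Raw pointer for native code; nullptr while the list is empty.
    T* Data() { return data_.empty() ? nullptr : data_.data(); }
private:
    std::vector<T> data_;
};

// One macro invocation per concrete, non-templated wrapper class.
#define TYPED_LIST(CLASSNAME, TYPE) \
    class CLASSNAME { \
    public: \
        void Resize(std::size_t n) { list_.Resize(n); } \
        std::size_t Size() const { return list_.Size(); } \
        TYPE& At(std::size_t i) { return list_.At(i); } \
        TYPE* Data() { return list_.Data(); } \
    private: \
        TList<TYPE> list_; \
    };

TYPED_LIST(Int32List, int)
TYPED_LIST(FloatList, float)
TYPED_LIST(UInt16List, unsigned short)
```

Each generated class only forwards to the template, so stepping through it in a debugger lands in TList<T> right away, which is the property the macro version above relies on.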

2. The DXGraphic Class

The BasicScene item in my previous blog post was quickly becoming a point of contention as I tried to extend my samples’ functionality. In the end, I had a breakthrough: I dropped it and created a class called DXGraphic, which is really a wrapper around ID3D11DeviceContext1 and exposes drawing primitives, in a simpler (yet just as complete) fashion where I could.

All other classes are to be consumed by it while drawing. Here is what the current state of my native API looks like so far:

One just creates a DXGraphic and feeds it drawing primitives. For those who are new to DirectX, it’s a good time to introduce the DirectX rendering pipeline as described on MSDN.

The pipeline is run by the video card and turns a stream of vertices into pixels. Most of the DirectX API is used to set up data for this pipeline (vertices, textures, shader variables in constant buffers, etc.), which must be copied from CPU memory to video card memory. This data is then processed by the shaders, which are simple yet massively parallelized programs that process each individual vertex and turn the results into pixels. In a way, they are the real drawing programs; the rest is setup.

At least 2 of these shaders must be provided by the program: the vertex shader and the pixel shader. The vertex shader converts every vertex into the same coordinate system, inside a box of size 1 (using the model, view and projection matrices), and the pixel shader outputs the color of a given pixel.
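To make the vertex shader’s job a bit more concrete, here is a CPU-side sketch of that conversion in plain C++. It is not the shader used by the samples: Mat4, Vec4, transform and toNormalizedBox are names invented for the illustration, and I assume the row-vector convention (v' = v * M) that is common in DirectX code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using Mat4 = std::array<std::array<float, 4>, 4>;
struct Vec4 { float x, y, z, w; };

Mat4 identity() {
    Mat4 m{};  // value-initialized: all zeros
    for (std::size_t i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Row vector times matrix: v' = v * M.
Vec4 transform(const Vec4& v, const Mat4& m) {
    return {
        v.x * m[0][0] + v.y * m[1][0] + v.z * m[2][0] + v.w * m[3][0],
        v.x * m[0][1] + v.y * m[1][1] + v.z * m[2][1] + v.w * m[3][1],
        v.x * m[0][2] + v.y * m[1][2] + v.z * m[2][2] + v.w * m[3][2],
        v.x * m[0][3] + v.y * m[1][3] + v.z * m[2][3] + v.w * m[3][3],
    };
}

// Model -> world -> view -> clip space, then the perspective divide that
// the hardware performs after the vertex shader has run.
Vec4 toNormalizedBox(const Vec4& position, const Mat4& model,
                     const Mat4& view, const Mat4& projection) {
    Vec4 clip = transform(transform(transform(position, model), view), projection);
    assert(clip.w != 0.0f);  // a point on the eye plane has no projection
    return {clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.0f};
}
```

In a real shader only the three matrix multiplications are written by hand; the division by w happens in the fixed-function part of the pipeline.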

2.1. The Classes in the API (So Far)

Shaders (pixel and vertex so far) are loaded by the PixelShader and VertexShader classes. I have used the shaders found in Frank Luna’s samples so far and haven’t written my own. Here is the MSDN HLSL programming guide, and here is an HLSL tutorial web site.

The PixelShader also takes a VertexLayout class argument, which describes to the shader the C++ structure stored in the buffer. I’m only using the BasicVertex class so far. In the (strongly typed buffer class) CBBasicVertex, CBBasicVertex.Layout returns the layout for BasicVertex.

I have some vanilla state classes: RasterizerState can turn wireframe on/off and set up face culling.

BasicTexture can load a picture.

Finally, shapes are defined by one (or, optionally, many) vertex data buffers and (optionally) an index buffer. I used strongly typed ones: VBBasicVertex, IBUint16/32. They can be created manually, or I have a helper class, MeshLoader, to create some.

One of the samples updates the vertex buffer from C# on each rendering frame!

MeshLoader will also return whether the shape is in a right-handed or left-handed coordinate system. DirectX uses left-handed, but some models are right-handed. The ModelTransform class takes care of that, as well as scaling, rotation and translation.

To draw, one sets up the shaders and states, then enumerates all shapes and, for each one, sets its texture, sets its shape and calls draw.

One can also pass variables to shaders (i.e., computation parameters) by using strongly typed constant buffers. A few are defined: CBPerFrame (contains lighting information) and CBPerObject (contains model, view and projection matrices).

2.2. The Context Watcher

There is a private class used by almost all classes in this API: ContextWatcher.

Most classes in this API have buffers or data that are bound to the DirectX context: they need to be reset when the context is destroyed, recreated when the context is recreated, etc. This class takes care of the synchronization. It is important to understand it before hacking this library.
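The general idea can be sketched with a small observer pattern in plain C++. This is not the actual ContextWatcher code: WatchedContext, WatchedBuffer and all their members are hypothetical names, and the "upload" is reduced to a counter so the example stays self-contained.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for the DirectX context (not the component's DXContext).
class WatchedContext {
public:
    using Callback = std::function<void()>;

    void Subscribe(Callback onCreated, Callback onDestroyed) {
        assert(onCreated && onDestroyed);  // both callbacks are required
        if (alive_) onCreated();           // late subscribers catch up
        created_.push_back(std::move(onCreated));
        destroyed_.push_back(std::move(onDestroyed));
    }
    void Create() {
        if (alive_) return;
        alive_ = true;
        for (auto& cb : created_) cb();
    }
    void Destroy() {
        if (!alive_) return;
        alive_ = false;
        for (auto& cb : destroyed_) cb();
    }
private:
    bool alive_ = false;
    std::vector<Callback> created_, destroyed_;
};

// A context-bound resource: it re-creates its device data whenever the
// context comes (back) to life and drops it when the context goes away.
// The callbacks capture `this`, so the buffer must outlive the context's use.
class WatchedBuffer {
public:
    explicit WatchedBuffer(WatchedContext& context) {
        context.Subscribe([this] { ready_ = true; ++uploads_; },
                          [this] { ready_ = false; });
    }
    WatchedBuffer(const WatchedBuffer&) = delete;
    WatchedBuffer& operator=(const WatchedBuffer&) = delete;

    bool Ready() const { return ready_; }
    int Uploads() const { return uploads_; }
private:
    bool ready_ = false;
    int uploads_ = 0;
};
```

A real implementation would also need a way to unsubscribe when a resource is released; that part is left out here to keep the sketch short.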

3. Input and Transform

3.1. Input

To handle input, I use a couple of methods and events from the CoreWindow class, which are wrapped in my InputController class.

GetKetStates(params VirtualKeys[]) will use CoreWindow.GetAsyncKeyState().

GetTrails() will return the latest pointer down events. On Windows 8, mouse, pen, etc. have been superseded by the more generic concept of a “pointer” device, as explained on MSDN.

The CameraController will use the InputController to move the camera and/or model around.

HOME key will reset the rotation, LEFT CONTROL will move model. MOUSE WHEEL will move the camera on the Z axis. Mouse Drag will rotate the camera or model (if LEFT CONTROL is on) using the following rotation:

That is, let M1 (x1, y1, 0) be the mouse down point, M2 (x2, y2, 0) the next drag point, and O (x1, y1, -screenSize) a virtual point above M1. The camera controller calculates the rotation that transforms OM1 into OM2 and applies its opposite to the camera. The opposite, because dragging the world right is like moving the camera left.
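One way to compute such a rotation is to take the cross product of OM1 and OM2 as the axis and the angle between them as the amount. The sketch below is in plain C++ and only illustrates the geometry; Vec3, AxisAngle, dragRotation and opposite are names I chose for the example, not the CameraController’s actual members.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct AxisAngle { Vec3 axis; float radians; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
float length(Vec3 v) { return std::sqrt(dot(v, v)); }

// Rotation that takes direction OM1 onto direction OM2.
AxisAngle dragRotation(float x1, float y1, float x2, float y2, float screenSize) {
    assert(screenSize > 0.0f);  // O must not coincide with M1
    Vec3 o{x1, y1, -screenSize};
    Vec3 om1 = sub(Vec3{x1, y1, 0.0f}, o);
    Vec3 om2 = sub(Vec3{x2, y2, 0.0f}, o);
    Vec3 n = cross(om1, om2);
    float nl = length(n);
    if (nl == 0.0f) return {{0.0f, 0.0f, 1.0f}, 0.0f};  // no drag, no rotation
    // atan2(|a x b|, a . b) is the angle between a and b.
    float angle = std::atan2(nl, dot(om1, om2));
    return {{n.x / nl, n.y / nl, n.z / nl}, angle};
}

// The camera gets the opposite rotation: same axis, negated angle.
AxisAngle opposite(AxisAngle r) { return {r.axis, -r.radians}; }
```

For example, dragging from (0, 0) to (100, 0) with screenSize = 100 yields a rotation of 45 degrees around the Y axis, and the camera would then receive -45 degrees around that same axis.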

3.2. Coordinate System

Initially, I kept the camera and model transforms as matrices (along the lines of what is described on MSDN). Unfortunately, when I introduced mouse handling to drag the model, continuously multiplying the model matrix by mouse transform matrices introduced unsightly numerical errors, particularly shear transformations.

After much tinkering, I settled on representing the model transformation as follows:

ModelTransform = Translation * Rotation (as quaternion) * Scaling

One can multiply quaternions together and there will be some small numerical error, but the result remains a rotation!

Quaternions can be created with the DXMath class:

public static quaternion toQuaternion(float x, float y, float z, float degree);

(x,y,z) being the axis of rotation.
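For those who want to play with the math, here is a small plain C++ sketch of the standard formulas: building a unit quaternion from an axis and an angle in degrees, the Hamilton product, and renormalization. It is not the DXMath implementation; Quat, fromAxisAngle, mul, norm and normalize are illustrative names.

```cpp
#include <cassert>
#include <cmath>

struct Quat { float w, x, y, z; };

// Unit quaternion for a rotation of `degrees` around the axis (x, y, z).
Quat fromAxisAngle(float x, float y, float z, float degrees) {
    float len = std::sqrt(x * x + y * y + z * z);
    assert(len > 0.0f);  // the axis must not be the zero vector
    float half = degrees * 3.14159265358979f / 360.0f;  // half angle, in radians
    float s = std::sin(half) / len;
    return {std::cos(half), x * s, y * s, z * s};
}

// Hamilton product: the rotation b followed by the rotation a.
Quat mul(Quat a, Quat b) {
    return {
        a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
        a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
        a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
        a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
    };
}

float norm(Quat q) {
    return std::sqrt(q.w * q.w + q.x * q.x + q.y * q.y + q.z * q.z);
}

// Rescaling to unit length removes accumulated drift; unlike a drifting
// matrix, the result can never contain shear.
Quat normalize(Quat q) {
    float n = norm(q);
    assert(n > 0.0f);
    return {q.w / n, q.x / n, q.y / n, q.z / n};
}
```

Multiplying two 90 degree rotations around Z gives (approximately) the quaternion (0, 0, 0, 1), i.e., a 180 degree rotation around Z. Renormalizing after each accumulated drag keeps the quaternion at unit length no matter how many small rotations are multiplied in.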

About quaternion math (as I didn’t learn it at school), I found the following links:

In the end, all transformations are nicely wrapped in a few classes in Utils\DirectX.

Camera is the typical DirectX camera.

Model is the typical DirectX model transform, decomposed into Translation, Rotation and Scaling. There is also a LeftHanded property, as the transform must be handled differently depending on whether the model’s coordinates are in left-handed or right-handed space.

The Transforms class is a utility class to create transform matrices.

CenteredRotationTransform is used to rotate the model around a point, which can itself be moved.

4. Wrapping It All Together

To show what the final code looks like, here is the slightly simplified code that sets up the scene with the columns (second screenshot).

Even if it’s long, it’s much simpler than the C++ version, and just as versatile!

public static Universe CreateUniverse4(DXContext ctxt = null, SharedData data = null)

{

ctxt = ctxt ?? new DXContext();

data = data ?? new SharedData(ctxt);

var box = new BasicShape(ctxt, MeshLoader.CreateBox(new float3 { x = 1, y = 1, z = 1 }));

var grid = new BasicShape(ctxt, MeshLoader.CreateGrid(20, 30, 20, 20));

var gsphere = new BasicShape(ctxt, MeshLoader.CreateGeosphere(1, 2));

var cylinder = new BasicShape(ctxt, MeshLoader.CreateCylinder(0.5f, 0.3f, 3, 20, 20));

var floor = data.Floor;

var bricks = data.Bricks;

var stone = data.Stone;

float3 O = new float3 { z = 30 };

var u = new Universe(ctxt)

{

Name = "Temple",

Background = Colors.DarkBlue,

Camera =

{

EyeLocation = DXMath.vector3(0, 0.0f, 0.0f),

LookVector = DXMath.vector3(0, 0, 100),

UpVector = DXMath.vector3(0, 1, 0),

FarPlane = 200,

},

CameraController =

{

ModelTransform = { Origin = O },

},

PixelShader = data.TexPixelShader,

VertexShader = data.BasicVertexShader,

Bodies =

{

new SpaceBody

{

Location = O,

Satellites =

{

new SpaceBody

{

Shape = grid,

Texture = floor,

},

new SpaceBody

{

Scale = DXMath.vector3(3,1,3),

Location = new float3 { y = 0.5f },

Shape = box,

Texture = stone,

},

}

},

},

};

var root = u.Bodies[0];

for (int i = 0; i < 5; i++)

{

root.Satellites.Add(new SpaceBody

{

Location = DXMath.vector3(-5, 4, -10 + i * 5),

Shape = gsphere,

Texture = stone,

});

root.Satellites.Add(new SpaceBody

{

Location = DXMath.vector3(+5, 4, -10 + i * 5),

Shape = gsphere,

Texture = stone,

});

root.Satellites.Add(new SpaceBody

{

Location = DXMath.vector3(-5, 1.5f, -10 + i * 5),

Shape = cylinder,

Texture = bricks,

});

root.Satellites.Add(new SpaceBody

{

Location = DXMath.vector3(+5, 1.5f, -10 + i * 5),

Shape = cylinder,

Texture = bricks,

});

}

u.Reset();

return u;

}

And the render method that renders all samples so far:

public void Render(DXGraphic g)

{

g.Clear(Background);

g.SetPShader(PixelShader);

g.SetVShader(VertexShader);

g.SetStateSampler(sampler);

g.SetStateRasterizer(RasterizerState);

g.SetConstantBuffers(0, ShaderType.Pixel | ShaderType.Vertex, cbPerObject, cbPerFrame);

CameraController.Camera.SetProjection(g.Context);

foreach (var item in GetBodies())

{

if (item.Shape == null)

continue;

cbPerObject.Data[0] = new PerObjectData

{

projection = CameraController.Camera.Projection,

view = CameraController.Camera.View,

model = DXMath.mul(CameraController.ModelTransform.Transform,

item.FinalTransform.Transform),

material = item.Material,

};

var p0 = new float3().TransformPoint(item.FinalTransform.Transform);

var p1 = p0.TransformPoint(CameraController.Camera.View);

var p2 = p1.TransformPoint(CameraController.Camera.Projection);

cbPerObject.UpdateDXBuffer();

g.SetTexture(item.Texture);

g.SetShape(item.Shape.Topology, item.Shape.Vertices, item.Shape.Indices);

g.DrawIndexed();

}

}

5. Performance Remarks

On my machine, the app spends about 6 seconds loading textures at the start. However, if I target x64 when compiling (my machine is an x64 machine, but the project targets x86 by default), the startup drops to about 0.2 seconds!!!

Also, in 32-bit mode, the app will freeze every now and then while catching a C++ exception deep down in the .NET runtime-WinRT binding code (apparently something to do with the DirectArray), but on x64 it runs smoothly.