Here we set up an Azure PostgreSQL database, migrate our local PostgreSQL database to Azure Database for PostgreSQL, and connect our on-premises app to our cloud database. Then, we run the Django app locally while connected to the Azure Postgres database.

In the first article of this series, we learned how to download and run a RealWorld example app based on Python, the Django REST Framework (DRF), and an SQLite database to provide the backend REST API. We prepared the requirements, then installed and ran the PostgreSQL Database locally.

Then, we modified the app to use PostgreSQL instead of SQLite and recreated the database schema by running a migration command. Finally, we used Postman to populate the app with sample data and ran a Vue-based web app to test the frontend against the Django app.

By the end of the series, we’ll modernize a Python app and publish it on Azure. But before we move our application, we first need to move its data.

In this article, we’ll configure an Azure PostgreSQL database, migrate our local PostgreSQL database to an Azure Database for PostgreSQL, and connect our on-premise app to our cloud database. Then, we’ll run the Django app locally while connected to the Azure Postgres database instead of the local database to ensure everything still works.

You can follow the steps in this article to get your application running or download and open this GitHub repository folder to see what the code looks like at the end of Part 2.

Configuring Azure Database for PostgreSQL

Azure Database for PostgreSQL is a relational database service in the Microsoft cloud, based on the PostgreSQL Community Edition database engine. When you migrate from a local PostgreSQL database to an Azure Database for PostgreSQL, you access many benefits, including built-in, high availability, automatic backups, automated maintenance, fast elastic scaling, monitoring, and support.

In this article series, we’ll use Single Server: a fully-managed Azure Database for PostgreSQL service with minimal requirements for database customizations. The Single Server platform handles most database management functions with minimal user configuration.

Let’s review what we need to start working with PostgreSQL in the Azure cloud.

First, go to the Design an Azure Database for PostgreSQL - Single Server using the Azure portal tutorial and execute the following steps:

- Follow the prerequisites section to create a free Azure account if you don't have a subscription yet.

- Create an Azure Database for PostgreSQL. This section guides you through creating a new resource, selecting the Single Server deployment option, and finally providing the essential information to make an Azure Database for PostgreSQL.

- Configure a server-level firewall rule. This section is vital because the Azure Database for PostgreSQL service uses a firewall at the server level. By default, this firewall prevents all external applications and tools from connecting to the server and any databases unless you create a firewall rule to open the firewall for a specific IP address range.

- Get the connection information. This section helps you provide host information, including server name, server admin login name, and Azure Database access credentials for the PostgreSQL server you've just created.

- Connect to PostgreSQL database using psql. If your client computer has PostgreSQL installed, you can use a local instance of psql or the Azure Cloud console to connect to an Azure PostgreSQL server. This section guides you through using the psql CLI locally to connect to the cloud-based PostgreSQL server and emit SQL queries.

Migrating the Application Data to Azure

We need two PostgreSQL utilities to migrate from the local database to the cloud: psql and pg_dump.

The psql utility is a terminal mode client for PostgreSQL. It enables us to enter commands interactively, submit them to PostgreSQL, and see the results. pg_dump is a utility for performing PostgreSQL backup operations.

To use these utilities, open the terminal and change the path to the local PostgreSQL installation’s \bin directory where the binaries are. You can typically find them in a Windows system’s C:\Program Files\PostgreSQL\14\bin directory.

First, generate a script to extract the schema from the local database PostgreSQL. This schema includes tables, columns, indexes, foreign keys, and other database constraints.

Next, run the following pg_dump command. It connects to the local conduit_db database at the 127.0.0.1 host with the conduit_user user. It then generates the schema to the conduit_db_schema.out file:

pg_dump -h 127.0.0.1 -U conduit_user -d conduit_db > conduit_db_schema.out

Then, move only the data to a new plain-text conduit_db_data.out file. You can use the --data-only option to dump the data without the schema (data definitions):

pg_dump -h 127.0.0.1 -U conduit_user -d conduit_db --data-only > conduit_db_data.out

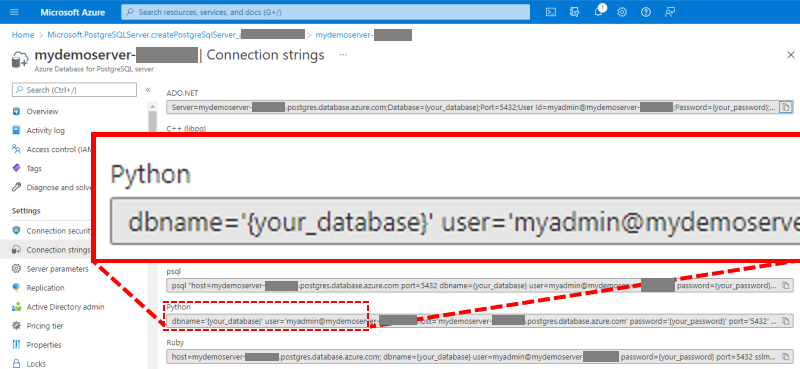

From now on, we must use the credentials provided when we created the cloud-based database. So, go to the Azure Portal and navigate to your Azure Database for PostgreSQL server to collect the host, user, and database information. Copy this information to a notepad.

We'll use the terminal-based psql utility to emit queries and commands interactively. In this case, connect to the remote Postgres database at your Azure server host:

psql -h mydemoserver-********.postgres.database.azure.com -U myadmin@mydemoserver- ******** -d postgres

Next, emit the query to drop the conduit_db database if it exists and recreate it from scratch:

postgres> DROP DATABASE IF EXISTS conduit_db;

postgres> CREATE DATABASE conduit_db;

Then, connect to the PostgreSQL database at Azure again to import the schema from the conduit_db_schema.out:

psql -h mydemoserver-********.postgres.database.azure.com -U myadmin@mydemoserver- ******** -d conduit_db -s < conduit_db_schema.out

Finally, we connect to the Azure database to migrate only the data from the conduit_db.out file:

psql -h mydemoserver-********.postgres.database.azure.com -U myadmin@mydemoserver- ******** -d conduit_db < conduit_db_data.out

At this point, your Azure Database for Postgres remote database has an exact copy of your local PostgreSQL database.

Next, let's configure our Django API web application to connect to the Azure Postgres database instead of the local one.

Switching to Azure Database for PostgreSQL

To connect using a host, database, user, and password, specify these settings in your Django app database configuration. You’ll need to modify the DATABASES constant in the \conduit\settings.py file to point to Azure Database for PostgreSQL instead of your local database:

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.postgresql',

'NAME': 'conduit_db',

'USER': 'myadmin@mydemoserver-********',

'PASSWORD': '************',

'HOST': 'mydemoserver-********.postgres.database.azure.com',

'PORT': '5432'

}

Ensuring the App Works with Azure Database for PostgreSQL

To ensure the app works with the new database, run the application by executing the runserver command:

> python manage.py runserver

Django version 1.10.5, using settings 'conduit.settings'

Starting development server at http:

Quit the server with CTRL-BREAK.

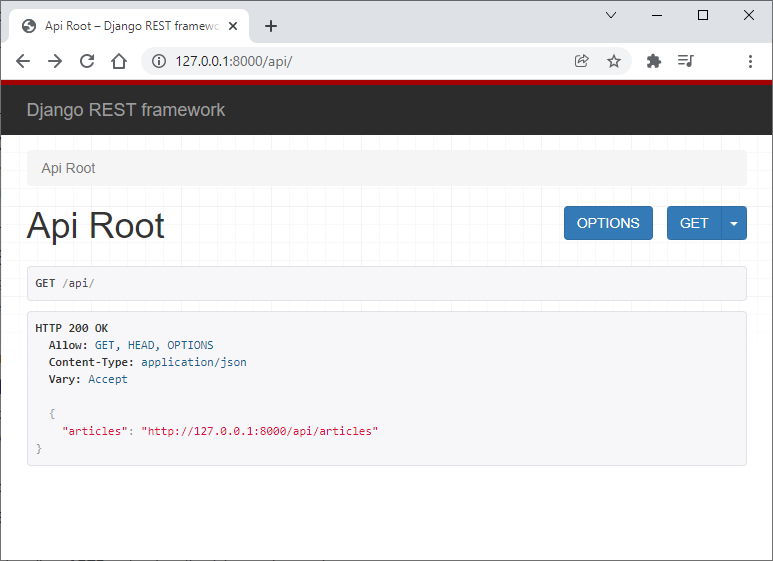

Then, open the http://127.0.0.1:8000/api URL in a browser to confirm the backend is working correctly:

Finally, visit http://127.0.0.1:8000/api/articles to verify that the database schema and data containing the articles have migrated successfully to the Azure Database for PostgreSQL:

{

"articlesCount": 3,

"articles": [

{

"author": {

"username": "alice_smith",

"bio": "",

"image": "https://static.productionready.io/images/smiley-cyrus.jpg",

"following": false

},

"body": "Share your knowledge and empower the community by creating a new implementation",

"createdAt": "2022-01-15T15:43:40.112921+00:00",

"description": "join the community by creating a new implementation",

"favorited": false,

"favoritesCount": 0,

"slug": "create-a-new-implementation-ukwcnm",

"tagList": [

"implementations"

],

"title": "AZURE Create a new implementation",

"updatedAt": "2022-01-15T15:43:40.112921+00:00"

},

{

"author": {

"username": "alice_smith",

"bio": "",

"image": "https://static.productionready.io/images/smiley-cyrus.jpg",

"following": false

},

"body": "Over 100 implementations have been created using various languages, libraries, and frameworks.\n\nExplore them on CodebaseShow.",

"createdAt": "2022-01-15T15:43:39.369865+00:00",

"description": "discover the implementations created by the RealWorld community",

"favorited": false,

"favoritesCount": 0,

"slug": "explore-implementations-h9h4zn",

"tagList": [

"implementations",

"codebaseShow"

],

"title": "AZURE Explore implementations",

"updatedAt": "2022-01-15T15:43:39.369865+00:00"

},

{

"author": {

"username": "alice_smith",

"bio": "",

"image": "https://static.productionready.io/images/smiley-cyrus.jpg",

"following": false

},

"body": "See how the exact same Medium.com clone (called Conduit) is built using different frontends and backends. Yes, you can mix and match them, because they all adhere to the same API spec",

"createdAt": "2022-01-15T15:43:38.688173+00:00",

"description": "Exemplary fullstack Medium.com clone powered by React, Angular, Node, Django, and many more",

"favorited": false,

"favoritesCount": 0,

"slug": "welcome-to-realworld-project-dgroov",

"tagList": [

"introduction",

"welcome"

],

"title": "AZURE Welcome to RealWorld project",

"updatedAt": "2022-01-15T15:43:38.688173+00:00"

}

]

}

Next Steps

We’ve discussed setting up an Azure PostgreSQL database, migrating the app’s data to Azure, and ensuring the application still works. Finally, we demonstrated how to configure the app to the new data source, so the app runs locally while connected to the Azure Postgres database.

The next article will demonstrate how to move a copy of our Python app and data to the Azure cloud infrastructure with minimal redesign and modification.

To learn more about Visual Studio Code to deploy a container image from a container registry to Azure App Service, check out our tutorial, Deploy Docker containers to Azure App Service with Visual Studio Code.