In this article, we learn how to download and run the RealWorld example app, which uses Python, the Django REST Framework (DRF), and an SQLite database to provide a backend REST API. We prepare the requirements, then install and run a PostgreSQL database locally and switch the app to use it.

Many Python development teams know they need to modernize their applications, but aren’t sure where to start, how to do it, or what tools to use.

This six-part article series will demonstrate how to modernize Python apps and data on Azure. We’ll start with a traditional monolithic Python application represented by the Django RealWorld Example App.

Django is a mature, full-featured web framework for Python applications. However, Django can be overkill for smaller projects because it encourages developing large, monolithic applications. Developers often consider other Python web frameworks for microservices.

If your organization wants to move away from Django gradually, without abandoning the work you’ve already put into your apps, Azure can help smooth the transition to cloud-native microservices.

In this first article, we’ll run the backend Django RealWorld app locally and make a minor change to replace SQLite with a PostgreSQL database. You can follow the steps in this article to get your application running, or download and open this GitHub repository folder to get the final project. To follow along, you should be familiar with Python and Django.

Later in this article series, we’ll show how to modernize the application and its data by moving them to Azure. Then, we’ll gradually extend the app’s capabilities with cloud-native services and show how to migrate the app’s data to Cosmos DB.

For now, let’s start by preparing our legacy app to move to the cloud.

Requirements

Before installing and testing the application, ensure you have all the requirements for the application to work:

Preparing the PostgreSQL Database

In the following sections, we’ll populate the app with sample data so that we have data to migrate in the next article. We’ll also make one small change to the default RealWorld app: use PostgreSQL instead of SQLite. PostgreSQL is more representative of the monolithic applications that development teams want to modernize in the cloud.

First, make sure you can run the PostgreSQL command-line interface (CLI) from your terminal. To do so, add PostgreSQL’s \bin and \lib folders to your system’s Path. For example, on my Windows machine, I had to add the following folders to the Path environment variable:

C:\Program Files\PostgreSQL\14\bin

C:\Program Files\PostgreSQL\14\lib
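To confirm the folders are really on your Path before continuing, you could run a quick check from Python. This is a minimal sketch using only the standard library; `find_postgres_tools` is a hypothetical helper name, and the tools it looks for (`psql`, `pg_dump`) are simply the usual PostgreSQL client binaries.

```python
import shutil

def find_postgres_tools(tools=("psql", "pg_dump")):
    """Map each PostgreSQL client tool to its resolved path, or None if it is not on PATH."""
    # shutil.which searches the PATH environment variable (and honors PATHEXT on Windows).
    return {tool: shutil.which(tool) for tool in tools}

if __name__ == "__main__":
    for tool, path in find_postgres_tools().items():
        print(f"{tool}: {path or 'NOT FOUND, check your Path'}")
```

If `psql` shows as not found, open a new terminal after editing the Path, since already-open terminals keep the old value.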

Now, open the terminal and run the following command to connect to the PostgreSQL CLI. When prompted, provide the master password you defined when you first installed PostgreSQL:

> psql -U postgres

Next, create a new conduit_db database for the Conduit application:

postgres=# create database conduit_db;

CREATE DATABASE

Finally, create a new conduit_user to connect to your conduit_db database:

postgres=# create user conduit_user with password '123456';

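CREATE ROLE

These steps use PostgreSQL 14. On PostgreSQL 15 and later, ordinary users can no longer create tables in the public schema by default, so the migrate step later in this article would fail. In that case, also make conduit_user the owner of the database:

postgres=# alter database conduit_db owner to conduit_user;

ALTER DATABASE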

Configuring the App for PostgreSQL

Now, modify the DATABASES setting in the \conduit\settings.py file to point to PostgreSQL instead of SQLite. The USER and PASSWORD values must match the role you just created:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': 'conduit_db',
        'USER': 'conduit_user',
        'PASSWORD': '123456',  # the password you gave conduit_user
        'HOST': '127.0.0.1',
        'PORT': '5432',
    }
}
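Hardcoding a password in settings.py is fine for a local experiment, but since later articles point this same app at Azure, you may prefer to read the connection details from environment variables. The sketch below shows one way to do that; the variable names (`CONDUIT_DB_NAME`, `CONDUIT_DB_USER`, `CONDUIT_DB_PASSWORD`, and so on) and the `database_settings` helper are hypothetical, and the defaults simply mirror the local values used in this article.

```python
import os

def database_settings(env=os.environ):
    """Build a Django DATABASES dict from environment variables.

    The CONDUIT_DB_* names are hypothetical; defaults match the local setup above.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("CONDUIT_DB_NAME", "conduit_db"),
            "USER": env.get("CONDUIT_DB_USER", "conduit_user"),
            # No default for the password: fail loudly (KeyError) instead of guessing.
            "PASSWORD": env["CONDUIT_DB_PASSWORD"],
            "HOST": env.get("CONDUIT_DB_HOST", "127.0.0.1"),
            "PORT": env.get("CONDUIT_DB_PORT", "5432"),
        }
    }

# In settings.py you would then write:
# DATABASES = database_settings()
```

With this approach, you set CONDUIT_DB_PASSWORD in your shell before running manage.py, and switching to a cloud database later only means changing environment variables.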

Then, open the requirements.txt file and add the following dependencies for psycopg2, a PostgreSQL database adapter for the Python programming language:

psycopg2==2.7.5
psycopg2-binary==2.7.5

Running the RealWorld App Locally

Before running the app, let’s create a virtual environment isolated from our system directories. This way, we can independently install a set of Python packages for our new project.

First, run the venv module to create the virtual environment in a dedicated directory:

> python -m venv ./venv

Then, activate the virtual environment. On Windows, run:

> ./venv/Scripts/activate

On macOS or Linux, run source ./venv/bin/activate instead.

Next, install all requirements in the virtual environment:

(venv) > pip install -r requirements.txt
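If you’re ever unsure whether the virtual environment is active (for example, after opening a new terminal), Python itself can tell you: inside a venv, `sys.prefix` points at the environment directory while `sys.base_prefix` still points at the base interpreter. A minimal check, where `in_virtualenv` is just an illustrative name:

```python
import sys

def in_virtualenv() -> bool:
    """True when running inside a virtual environment created by `python -m venv`."""
    # venv sets sys.prefix to the environment directory; base_prefix stays the system install.
    return sys.prefix != sys.base_prefix

if __name__ == "__main__":
    print("venv active:", in_virtualenv(), "| prefix:", sys.prefix)
```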

We now have the PostgreSQL database, but it doesn’t have a schema yet. So, run the migrate command to create all the tables your app requires in conduit_db:

> python manage.py migrate

Finally, run the application by executing the runserver command:

> python manage.py runserver

Django version 1.10.5, using settings 'conduit.settings'
Starting development server at http:
Quit the server with CTRL-BREAK.

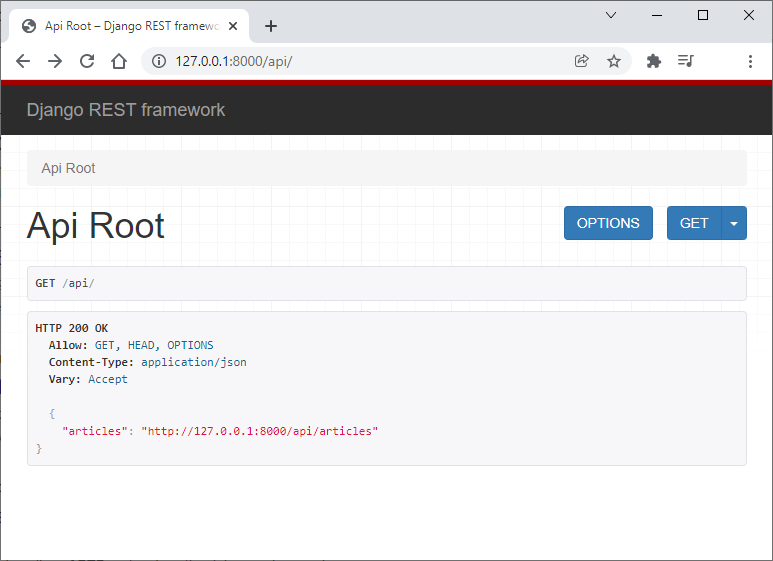

Open http://127.0.0.1:8000/api in a browser to confirm the backend is working correctly:
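If you’d rather check from a script than a browser, the sketch below sends a GET request and reports whether the server answered successfully. It uses only `urllib` from the standard library; `check_api` is a hypothetical helper, and the `opener` parameter exists only so the function can be exercised without a running server.

```python
import urllib.error
import urllib.request

def check_api(url="http://127.0.0.1:8000/api", opener=urllib.request.urlopen, timeout=5):
    """Return (ok, detail) for a GET request to url."""
    try:
        with opener(url, timeout=timeout) as response:
            return True, f"HTTP {response.status}"
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (4xx/5xx).
        # HTTPError subclasses URLError, so it must be caught first.
        return False, f"HTTP {err.code}"
    except urllib.error.URLError as err:
        # No answer at all: usually runserver is not running.
        return False, f"unreachable: {err.reason}"
```

Calling `check_api()` while runserver is active should return a tuple starting with `True`; `(False, "unreachable: ...")` usually means the server isn’t running.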

Populating the App with Sample Data

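Unfortunately, the current code raises an "Invalid format string" error that prevents you from logging in. The culprit is the strftime('%s') call: %s isn’t part of the C standard’s strftime format codes and isn’t supported on Windows. To fix this bug, open the models.py file in the authentication app and replace this code block: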

token = jwt.encode({
    'id': self.pk,
    'exp': int(dt.strftime('%s'))
}, settings.SECRET_KEY, algorithm='HS256')

With this one:

token = jwt.encode({
    'id': self.pk,
    'exp': dt.utcfromtimestamp(dt.timestamp())
}, settings.SECRET_KEY, algorithm='HS256')
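If you prefer to keep the exp claim an integer, `datetime.timestamp()` gives the same POSIX timestamp portably. The sketch below assumes the surrounding method computes dt as a naive local datetime some days in the future, and shows that this alternative and the fix above produce the same value. `exp_claim` and `exp_from_article_fix` are hypothetical names, and the `calendar.timegm` step mirrors, as far as we know, how PyJWT converts datetime claims to integers.

```python
import calendar
from datetime import datetime, timedelta, timezone

def exp_claim(dt: datetime) -> int:
    """Portable replacement for int(dt.strftime('%s')): seconds since the epoch."""
    # For a naive datetime, timestamp() interprets it as local time, as '%s' does on Linux.
    return int(dt.timestamp())

def exp_from_article_fix(dt: datetime) -> int:
    """The integer the fix above ends up as once the datetime claim is encoded."""
    # fromtimestamp(..., tz=utc) avoids utcfromtimestamp, which is deprecated since Python 3.12.
    naive_utc = datetime.fromtimestamp(dt.timestamp(), tz=timezone.utc).replace(tzinfo=None)
    # Treat the naive value as UTC and convert it to an epoch integer.
    return calendar.timegm(naive_utc.utctimetuple())

if __name__ == "__main__":
    dt = datetime.now().replace(microsecond=0) + timedelta(days=60)
    print(exp_claim(dt), exp_from_article_fix(dt))  # the two numbers should match
```

Either variant avoids the Windows-only failure; the integer form simply makes the claim’s value explicit.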

Now, we can populate our PostgreSQL blogging database by sending HTTP requests to the API to create users, articles, and comments. Fortunately, we can use Postman to send a collection of HTTP requests, automating the population process.

First, download Conduit.postman_collection.json and save it to your local file system. Then open Postman, click the File menu, click the Import submenu, then click Upload File. Select the Conduit.postman_collection.json file in the local repository folder, then click Import.

Next, click the eye icon in the top right corner ("Environment quick look"), and then click Add New Environment.

Now, name the environment "Conduit". Then, provide the environment with the following variables and initial values:

| Variable | Initial Value |
|---|---|
| APIURL | http://127.0.0.1:8000/api |
| USERNAME | alice_smith |
| EMAIL | alice@smith.com |
| PASSWORD | 1234!@#$ |

Next, click Save to save and close the Conduit environment.
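Postman substitutes these values wherever the collection uses the {{VARIABLE}} syntax, so a request URL such as {{APIURL}}/users/login is sent to http://127.0.0.1:8000/api/users/login. The snippet below is only a simplified model of that substitution, not Postman’s actual resolver (which also handles collection, global, and dynamic variables); `resolve` and `CONDUIT_ENV` are hypothetical names.

```python
import re

# The same values as the Conduit environment table above.
CONDUIT_ENV = {
    "APIURL": "http://127.0.0.1:8000/api",
    "USERNAME": "alice_smith",
    "EMAIL": "alice@smith.com",
    "PASSWORD": "1234!@#$",
}

_PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")

def resolve(template: str, env: dict) -> str:
    """Replace {{NAME}} placeholders with values from env; unknown names are left as-is."""
    return _PLACEHOLDER.sub(lambda m: env.get(m.group(1), m.group(0)), template)
```

Leaving unknown placeholders untouched makes a missing variable easy to spot in the resulting URL or body.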

Now, click the No Environment field in the top-right corner and change it to the "Conduit" environment.

On the left panel, select the Conduit collection. Click the right arrow, then click Run.

A new window now displays the Postman Collection Runner. At the bottom of the left panel, click the Run Conduit button.
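If you can’t use Postman, you could send comparable requests from Python. This is a minimal, standard-library sketch based on the RealWorld API spec (POST /users to register, then POST /articles and a comment with an Authorization: Token &lt;jwt&gt; header); it is not a port of the Conduit collection, which sends more requests and runs test assertions. The helper names and the sample article content are hypothetical, and you may need to adjust paths if your backend expects trailing slashes.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:8000/api"  # same value as the APIURL environment variable

def build_request(path, payload, token=None):
    """Create (but do not send) a JSON POST request for the RealWorld API."""
    headers = {"Content-Type": "application/json"}
    if token:
        # The RealWorld spec uses the "Token" scheme rather than "Bearer".
        headers["Authorization"] = f"Token {token}"
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(API_URL + path, data=data, headers=headers, method="POST")

def send(request):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))

def populate(username, email, password):
    """Register a user, create one article, and comment on it. Returns the article slug."""
    # 1. Register; the response carries a JWT under user.token.
    user = send(build_request("/users", {"user": {"username": username, "email": email, "password": password}}))
    token = user["user"]["token"]
    # 2. Create a sample article (hypothetical content) as that user.
    article = {"article": {"title": "Hello Conduit", "description": "Sample",
                           "body": "Sample body", "tagList": ["demo"]}}
    slug = send(build_request("/articles", article, token))["article"]["slug"]
    # 3. Add a comment to the new article.
    send(build_request(f"/articles/{slug}/comments", {"comment": {"body": "First!"}}, token))
    return slug
```

With runserver active, call something like `populate("bob_jones", "bob@jones.com", "s3cret!")`. Use a username and email that don’t exist yet, since registration is likely to be rejected for an existing user.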

Now, go back to the PostgreSQL CLI terminal. Connect to the conduit_db database using this command:

> psql -U postgres -h localhost conduit_db

Next, type the SQL command to fetch the ID, slug, and title from the articles you just created:

conduit_db=# select id, slug, title from articles_article;

The results should look like this, although the random suffix at the end of each slug will differ on your machine:

 id |                slug                 |            title
----+-------------------------------------+------------------------------
  1 | welcome-to-realworld-project-dgroov | Welcome to RealWorld project
  2 | explore-implementations-h9h4zn      | Explore implementations
  3 | create-a-new-implementation-ukwcnm  | Create a new implementation
(3 rows)
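The suffix on each slug (for example, -dgroov) appears to be a random six-character string appended to the slugified title, which is why your slugs won’t match exactly. The sketch below imitates that pattern with the standard library. It’s an approximation that uses a simple regular expression instead of Django’s `slugify`, and `make_slug` is a hypothetical name rather than the app’s actual function.

```python
import random
import re
import string

def make_slug(title, suffix_length=6, rng=random):
    """Approximate a 'title-xxxxxx' slug: slugified title plus a random suffix."""
    # Lowercase, drop anything that isn't a word character, space, or hyphen.
    base = re.sub(r"[^\w\s-]", "", title.lower()).strip()
    # Collapse runs of spaces/hyphens into single hyphens.
    base = re.sub(r"[-\s]+", "-", base)
    alphabet = string.ascii_lowercase + string.digits
    suffix = "".join(rng.choice(alphabet) for _ in range(suffix_length))
    return f"{base}-{suffix}"
```

Passing a seeded `random.Random` as `rng` makes the output reproducible, which is handy for tests.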

Now, type the SQL command to fetch the ID and body from the comments we just inserted:

conduit_db=# select id, body from articles_comment;

Finally, you should have these results:

 id | body
----+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
  1 | While most 'todo' demos provide an excellent cursory glance at a framework's capabilities, they typically don't convey the knowledge & perspective required to actually build real applications with it.
  2 | RealWorld solves this by allowing you to choose any frontend (React, Angular, & more) and any backend (Node, Django, & more) and see how they power a real-world, beautifully designed full-stack app called Conduit.
  3 | There are 3 categories: Frontend, Backend and FullStack
  4 | Before starting a new implementation, please check if there is any work in progress for the stack you want to work on.
  5 | If someone else has started working on an implementation, consider jumping in and helping them! by contacting the author.
(5 rows)

Bonus: Running the Frontend Alongside the Backend

This section isn’t mandatory, but it’s worthwhile if you’re curious about how the complete Conduit app looks. The idea here is to run a frontend web app side-by-side with our Django backend application so that the frontend can consume the API.

First, open another instance of Visual Studio Code. Then, clone or download the vue-realworld-example-app repository and follow the instructions on that page to get the project up and running.

Now, we need to modify the backend app for the frontend to consume. The frontend app will run at http://localhost:8080, so we need to allow cross-domain communication between the two apps.

So, open the settings.py file in the Django app and add localhost:8080 to the CORS_ORIGIN_WHITELIST constant:

CORS_ORIGIN_WHITELIST = (
    '0.0.0.0:4000',
    'localhost:4000',
    'localhost:8080',
)
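Conceptually, the CORS middleware compares the Origin header the browser sends (such as http://localhost:8080) with this whitelist and only allows the cross-origin request on a match. The function below is a simplified illustration of that comparison for host:port entries like the ones above, not django-cors-headers’ real code; `origin_allowed` is a hypothetical name. Newer releases of that package expect full origins including the scheme, so check the version pinned in requirements.txt if the whitelist doesn’t seem to take effect.

```python
from urllib.parse import urlsplit

CORS_ORIGIN_WHITELIST = (
    '0.0.0.0:4000',
    'localhost:4000',
    'localhost:8080',
)

def origin_allowed(origin_header, whitelist=CORS_ORIGIN_WHITELIST):
    """Return True if the Origin header's host:port appears in the whitelist."""
    # 'http://localhost:8080' -> netloc 'localhost:8080'
    netloc = urlsplit(origin_header).netloc
    return netloc in whitelist
```

This is why the Vue app on port 8080 needs its own entry: http://localhost:4000 and http://localhost:8080 are different origins.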

Next, rerun the Django app using this command:

> python manage.py runserver

Then, switch to the other VS Code instance to run the Vue frontend app:

> npm run serve

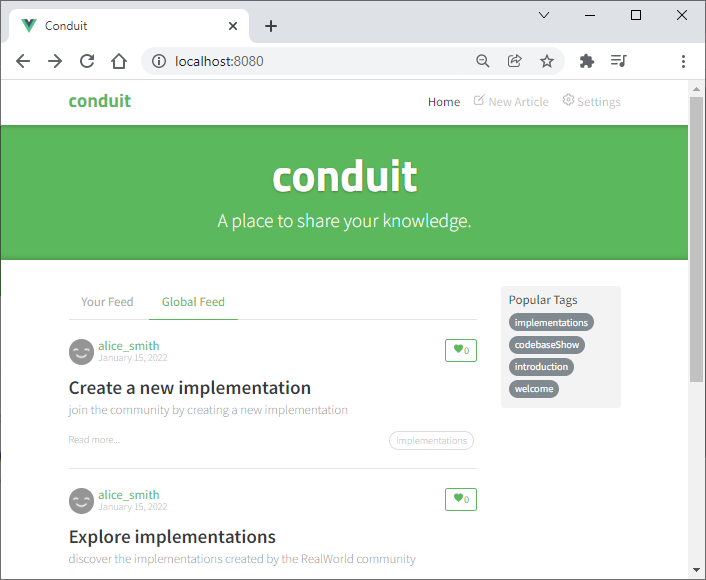

The new frontend app should now be running at http://localhost:8080.

As the screenshot shows, the data we populated with Postman is now visible, and the frontend application works with our Django and PostgreSQL backend project.

Next Steps

So far, we’ve set up a backend project that works as a starting point for our end-to-end modernization series. Then, we used Postman to test and populate the API with initial sample data.

This series aims to move the application and its current data to Azure, migrate its functions to cloud-native services, and port the data to Cosmos DB. Our next article demonstrates how to set up an Azure PostgreSQL database, migrate the app’s data to Azure, and ensure the application still works. Finally, we’ll show that the app runs locally while connected to the Azure Postgres database instead of the local database.

Continue to article two to learn how to migrate data to the cloud.

To learn more about using Visual Studio Code to deploy a container image from a container registry to Azure App Service, check out our tutorial, Deploy Docker containers to Azure App Service with Visual Studio Code.