This is Part 3 of a 3-part series that demonstrates how to use Azure Cognitive Services to build a universal translator that can take a recording of a person’s speech, convert it to text, translate it to another language, and then read it back using text-to-speech. In this article, the Java backend is completed, using the Azure translator service to translate text. Then, the Azure speech service is reused to convert the text back to speech.

The previous two parts of this three-part series built a web frontend and Java backend that enable a user to record speech and transcribe it into text.

There are two more steps to finish the universal translator: translating text and converting the translated text to speech.

Follow this tutorial to complete the Java backend, using the Azure translator service to translate text, then reuse the Azure speech service to convert the text back to speech.

Prerequisites

Ensure you have the free Java 11 JDK installed for the backend application. The backend application also needs the Azure functions runtime version 4.

GStreamer is also required to convert WebM audio files for processing by the Azure APIs.

The application’s complete source code is available from GitHub, and the backend application is also available as the Docker image mcasperson/translator.

Creating a Translator Service

Azure translator service performs the translation between two languages. Microsoft's documentation provides instructions for creating a translator resource.

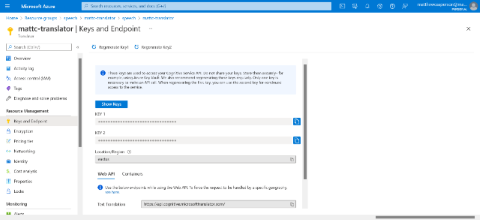

Once the resource is created, note the key and region. You’ll need these when we configure the backend service:

Translating Text Between Languages

The TranslateService class defines the logic to translate text between languages:

package com.matthewcasperson.azuretranslate.services;

import java.io.IOException;

import okhttp3.HttpUrl;

import okhttp3.MediaType;

import okhttp3.OkHttpClient;

import okhttp3.Request;

import okhttp3.RequestBody;

import okhttp3.Response;

import org.apache.commons.text.StringEscapeUtils;

public class TranslateService {

This class exposes a single method called translate which takes the text to be translated, source language, and target language as strings:

public String translate(final String input, final String sourceLanguage,

final String targetLanguage) throws IOException {

The app interacts with the Azure translator service through its REST API. This code builds up the endpoint to call:

final HttpUrl url = new HttpUrl.Builder()

.scheme("https")

.host("api.cognitive.microsofttranslator.com")

.addPathSegment("/translate")

.addQueryParameter("api-version", "3.0")

.addQueryParameter("from", sourceLanguage)

.addQueryParameter("to", targetLanguage)

.build();

Then the OkHttp client makes the API request:

final OkHttpClient client = new OkHttpClient();

The REST API call passes a JSON object in the request body containing the text to be translated:

final MediaType mediaType = MediaType.parse("application/json");

final RequestBody body = RequestBody.create("[{\"Text\": \""

+ StringEscapeUtils.escapeJson(input) + "\"}]", mediaType);

Two HTTP headers are included with the translator service key and region, both defined in environment variables:

final Request request = new Request.Builder().url(url).post(body)

.addHeader("Ocp-Apim-Subscription-Key", System.getenv("TRANSLATOR_KEY"))

.addHeader("Ocp-Apim-Subscription-Region", System.getenv("TRANSLATOR_REGION"))

.addHeader("Content-type", "application/json")

.build();

The request executes, and the response returns:

final Response response = client.newCall(request).execute();

return response.body().string();

}

}

Converting Text to Speech

The SpeechService class defines the logic to convert text to speech:

package com.matthewcasperson.azuretranslate.services;

import com.microsoft.cognitiveservices.speech.AudioDataStream;

import com.microsoft.cognitiveservices.speech.SpeechConfig;

import com.microsoft.cognitiveservices.speech.SpeechSynthesisOutputFormat;

import com.microsoft.cognitiveservices.speech.SpeechSynthesisResult;

import com.microsoft.cognitiveservices.speech.SpeechSynthesizer;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import org.apache.commons.io.FileUtils;

public class SpeechService {

This class exposes a single method called translateText, which takes the text to convert and the language to speak as strings:

public byte[] translateText(final String input, final String targetLanguage)

throws IOException {

The Azure SDK enables saving the audio as a file. Capture this audio in a temporary file:

final Path tempFile = Files.createTempFile("", ".wav");

The code then creates a SpeechConfig object with the speech service key and region, both sourced from environment variables:

try (final SpeechConfig speechConfig = SpeechConfig.fromSubscription(

System.getenv("SPEECH_KEY"),

System.getenv("SPEECH_REGION"))) {

Next, the code configures the target language:

speechConfig.setSpeechSynthesisLanguage(targetLanguage);

Then, the code defines the audio file’s format. Dozens of formats are available, and any would be fine for the sample application, although the Opus compression scheme is supposed to be well-suited to speech-based web applications:

speechConfig.setSpeechSynthesisOutputFormat

(SpeechSynthesisOutputFormat. Webm24Khz16Bit24KbpsMonoOpus);

The SpeechSynthesizer class now performs the speech synthesis.

final SpeechSynthesizer synthesizer = new SpeechSynthesizer(speechConfig, null);

Then, the application converts the text to speech.

final SpeechSynthesisResult result = synthesizer.SpeakText(input);

The application saves the resulting audio to a file and returns the file contents:

final AudioDataStream stream = AudioDataStream.fromResult(result);

stream.saveToWavFile(tempFile.toString());

return Files.readAllBytes(tempFile);

} finally {

Finally, the code cleans up the temporary file:

FileUtils.deleteQuietly(tempFile.toFile());

}

}

}

Exposing the New Endpoints

Next, the application exposes the two services as function endpoints in the Function class.

First, we create the services as static variables:

private static final SpeechService SPEECH_SERVICE = new SpeechService();

private static final TranslateService TRANSLATE_SERVICE = new TranslateService();

Then, we adds the two new methods to the Function class.

The first, called translate, exposes an HTTP POST endpoint that takes the text to be translated in the body of the request, and the source and target languages as query parameters. The method returns the JSON body that the upstream Azure translation service returned:

@FunctionName("translate")

public HttpResponseMessage translate(

@HttpTrigger(

name = "req",

methods = {HttpMethod.POST},

authLevel = AuthorizationLevel.ANONYMOUS)

HttpRequestMessage<Optional<String>> request,

final ExecutionContext context) {

if (request.getBody().isEmpty()) {

return request.createResponseBuilder(HttpStatus.BAD_REQUEST)

.body("The text to translate file must be in the body of the post.")

.build();

}

try {

final String text = TRANSLATE_SERVICE.translate(

request.getBody().get(),

request.getQueryParameters().get("sourceLanguage"),

request.getQueryParameters().get("targetLanguage"));

return request.createResponseBuilder(HttpStatus.OK).body(text).build();

} catch (final IOException ex) {

return request.createResponseBuilder(HttpStatus.INTERNAL_SERVER_ERROR)

.body("There was an error translating the text.")

.build();

}

}

The second function, called speak, also exposes an HTTP POST endpoint. This endpoint takes the text to be synthesized in the body of the request and the language to convert the text to as a query parameter, then returns the binary audio in the response:

@FunctionName("speak")

public HttpResponseMessage speak(

@HttpTrigger(

name = "req",

methods = {HttpMethod.POST},

authLevel = AuthorizationLevel.ANONYMOUS)

HttpRequestMessage<Optional<String>> request,

final ExecutionContext context) {

if (request.getBody().isEmpty()) {

return request.createResponseBuilder(HttpStatus.BAD_REQUEST)

.body("The text to translate file must be in the body of the post.")

.build();

}

try {

final byte[] audio = SPEECH_SERVICE.translateText(

request.getBody().get(),

request.getQueryParameters().get("targetLanguage"));

return request.createResponseBuilder(HttpStatus.OK).body(audio).build();

} catch (final IOException ex) {

return request.createResponseBuilder(HttpStatus.INTERNAL_SERVER_ERROR)

.body("There was an error converting the text to speech.")

.build();

}

}

Defining the New App Settings

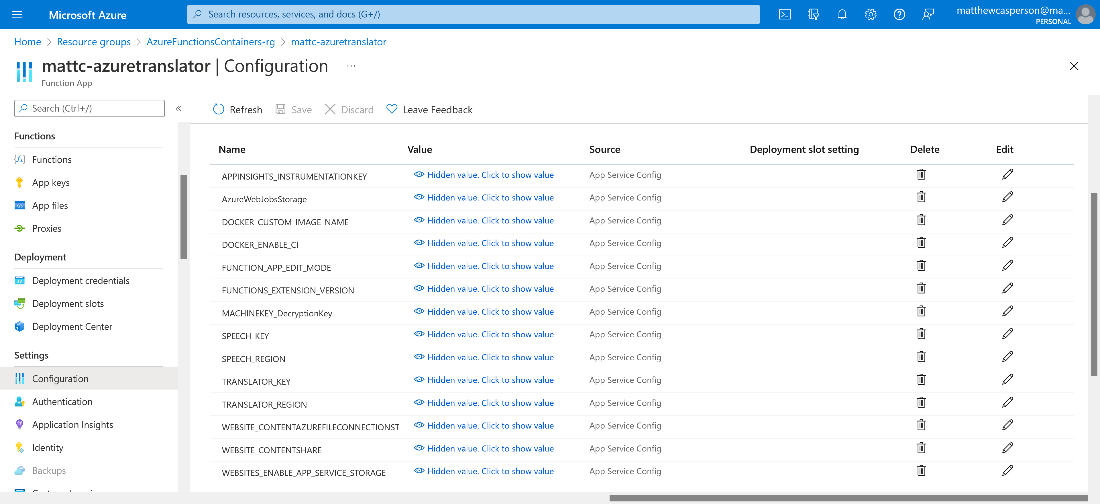

The code now requires two new environment variables: TRANSLATOR_KEY and TRANSLATOR_REGION. These variables must be defined as app settings in the Azure functions app:

az functionapp config appsettings set --name yourappname

--resource-group yourresourcegroup --settings "TRANSLATOR_KEY=yourtranslatorkey"

az functionapp config appsettings set --name yourappname --resource-group yourresourcegroup

--settings "TRANSLATOR_REGION=yourtranslatorregion"

The four environment variables (TRANSLATOR_KEY, TRANSLATOR_REGION, SPEECH_KEY, and SPEECH_REGION) used by the backend code defined as app settings are visible in the screenshot below:

Testing the API Locally

The speech service key and region must be exposed as environmental variables. So, we run the following commands in PowerShell:

$env:SPEECH_KEY="your key goes here"

$env:SPEECH_REGION="your region goes here"

$env:TRANSLATOR_KEY="your key goes here"

$env:TRANSLATOR_REGION="your region goes here"

Alternatively, we can run the equivalent commands in Bash:

export SPEECH_KEY="your key goes here"

export SPEECH_REGION="your region goes here"

export TRANSLATOR_KEY="your key goes here"

export TRANSLATOR_REGION="your region goes here"

Next, to build the backend, we run:

mvn clean package

Then, to run the function locally, we run the following command:

mvn azure-functions:run

Finally, we start the frontend web application detailed in the previous article with the command:

npm start

The application is now completely functional! Users can record a message, transcribe it, translate it, and convert the translated text to speech.

Deploying the Backend App

Fortunately, the previous article already did all the hard work to deploy the backend app. All that’s left is to rebuild the Docker image. We do this using the command:

docker build . -t dockerhubuser/translator

Then, we push the updated image to DockerHub:

docker push dockerhubuser/translator

Assuming continuous deployment is configured on the Azure function, DockerHub will trigger an Azure function redeployment once the new image is pushed.

With that, the app is live and ready to translate!

Next Steps

This tutorial concludes our series on the universal translator by enabling text translation between languages and providing the ability to synthesize text to speech. So, the universal translator can translate browser-recorded speech into another language, helping travelers, workers, and more speak with almost anyone on Earth.

The sample application demonstrates how easy adding advanced text and speech AI to Java applications is. Although it was science fiction just a few years ago, developers can now embed a universal translator into their applications with just a few hundred lines of code.

For those looking to take the next step and embed speech and text services in other applications, fork the frontend and backend code from GitHub. Then, explore the full range of Azure cognitive services to enhance apps with unique features.

To learn more about and view samples for the Microsoft Cognitive Services Speech SDK, check out Microsoft Cognitive Services Speech SDK Samples.