Here we introduce developers and DevOps practitioners to Azure Application Services, explain the benefits from a practitioner’s perspective, and then show how to get started using the Kubernetes cluster we connected to Azure Arc in the previous series.

The Cloud Native Computing Foundation (CNCF) reports that Kubernetes adoption has grown globally and become mainstream. With this growth comes a greater likelihood that organizations will spread their clusters across on-premises data centers and multiple cloud providers, such as Google Cloud, Amazon Web Services, and Red Hat.

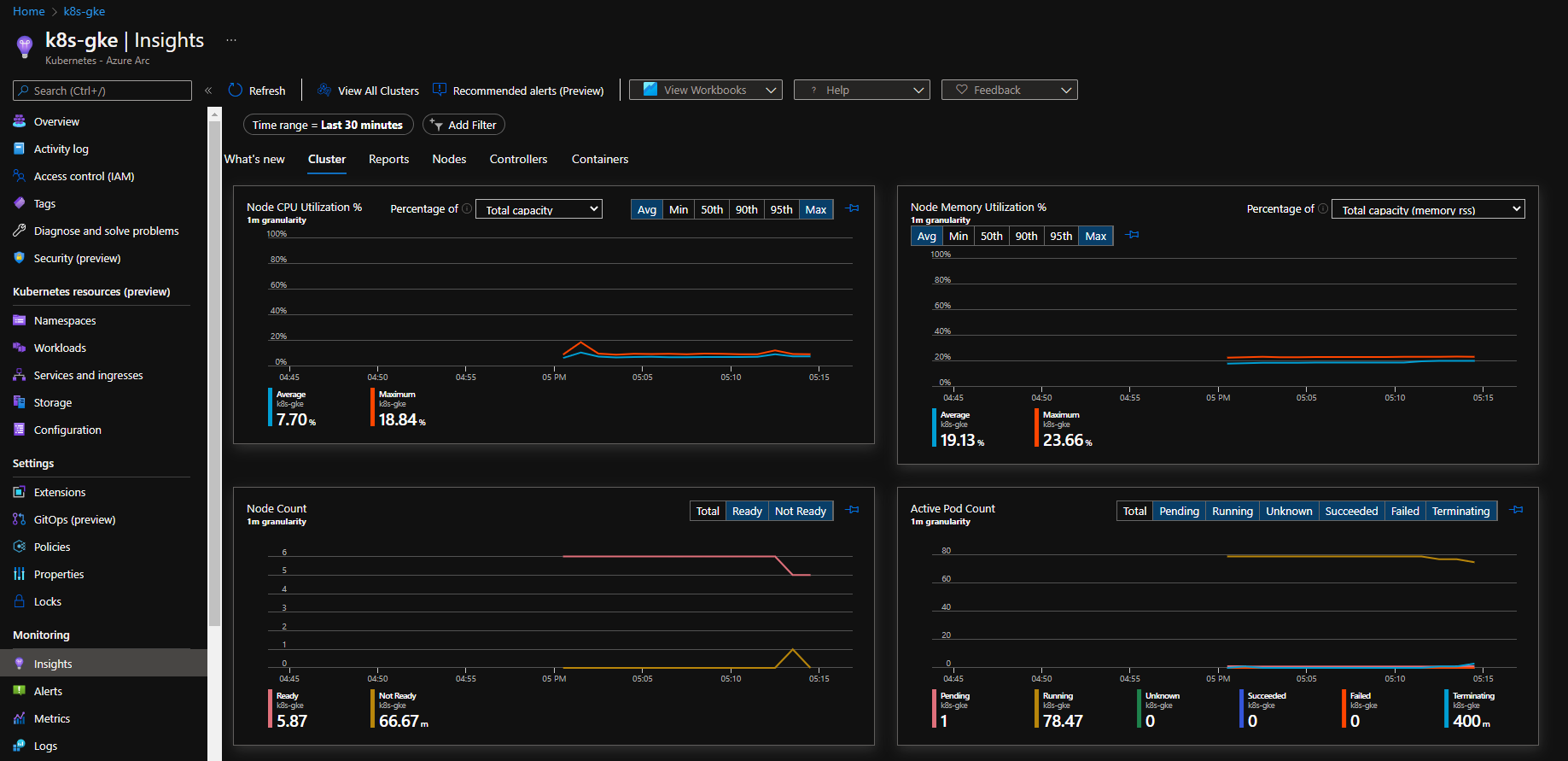

Azure Arc-enabled Kubernetes provides centralized management for all these Kubernetes environments without requiring us to move them to a single cloud provider or host them on Azure. It extends Azure management capabilities to these clusters, such as Azure Monitor, which lets us collect metrics and logs, run analytical queries, and receive alerts on anything from disk usage to possible security breaches.

In this article, we look closely at how connecting existing Kubernetes clusters to Azure Arc enables us to host Azure App Services, and the data services they rely on, virtually anywhere. This includes any CNCF-certified Kubernetes cluster as well as Azure’s own Kubernetes offerings, and Microsoft maintains an extensive list of validated distributions.

Introducing the Concept of a Custom Location

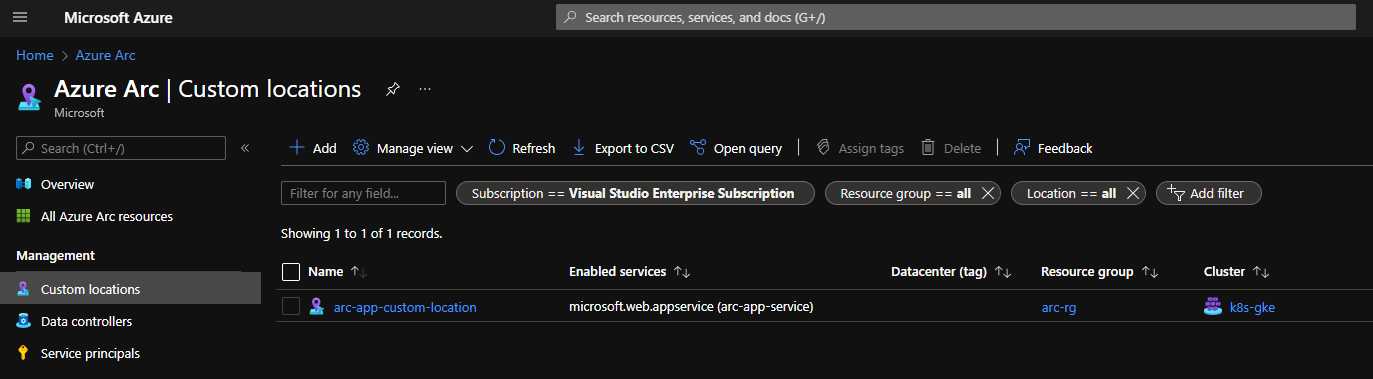

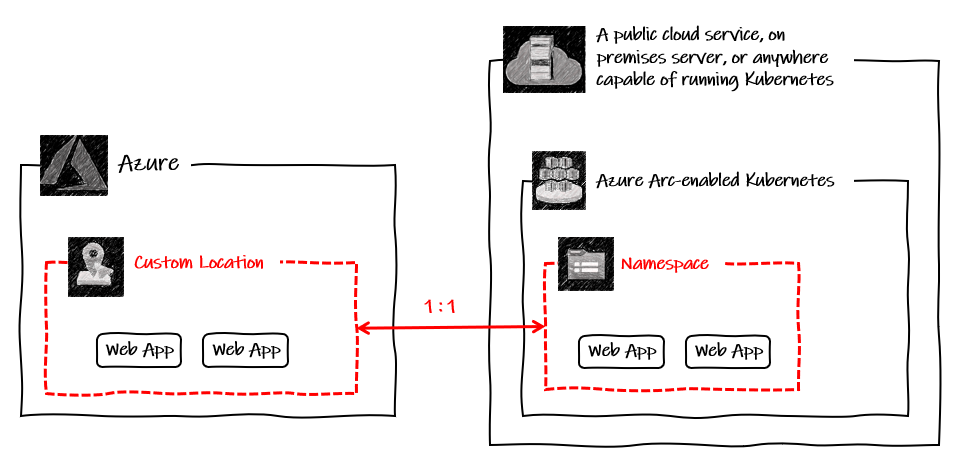

Whenever we provision new Azure resources, we must specify a location. However, Azure Arc lets us host turnkey applications on other cloud providers and on-premises, where Azure’s own regions do not apply, so we need custom locations.

From an Azure perspective, a custom location is just another resource type. Conceptually, it provides Azure with a way to manage a resource hosted on another cloud in the same way as native resources in Azure regions.

A custom location maps one-to-one to a namespace within an Azure Arc-enabled Kubernetes cluster, abstracting away the underlying cross-cloud infrastructure. This gives developers and administrators a consistent target for deploying resources.
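To make the one-to-one mapping concrete, here is a minimal shell sketch, assuming the `customlocation` Azure CLI extension is installed and a cluster extension (such as the App Service extension) already exists on the connected cluster. The subscription ID, custom location name, namespace, and extension ID are hypothetical placeholders, and the `run` helper only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Minimal sketch: a custom location binds one Azure resource to one
# namespace on an Arc-enabled cluster. All names and IDs are placeholders.

# Print a command instead of executing it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Build the ARM resource ID of an Arc-connected Kubernetes cluster.
# $1 = subscription ID, $2 = resource group, $3 = cluster name
connected_cluster_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Kubernetes/connectedClusters/%s\n' \
    "$1" "$2" "$3"
}

# Create a custom location that targets exactly one namespace.
# $1 = resource group, $2 = custom location name, $3 = namespace,
# $4 = connected cluster resource ID, $5 = cluster extension resource ID
create_custom_location() {
  run az customlocation create \
    --resource-group "$1" \
    --name "$2" \
    --namespace "$3" \
    --host-resource-id "$4" \
    --cluster-extension-ids "$5"
}

# Example (hypothetical values):
# CLUSTER_ID=$(connected_cluster_id "<subscription_id>" arc-rg k8s-gke)
# create_custom_location arc-rg my-custom-location my-namespace "$CLUSTER_ID" "<extension_id>"
```

With this setup, anything later deployed to `my-custom-location` lands in `my-namespace` on the cluster; targeting a second namespace would mean creating a second custom location.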

Although the cluster is hosted outside of Azure, we can still integrate it with Application Insights for monitoring.

Native Azure Services Hosted Outside Azure

Whether hosted in another cloud or on-premises, connecting a Kubernetes environment to Azure Arc grants us access to an infrastructure that supports a growing list of native Azure services. We simply point our deployments to the custom location, and they deploy to our Kubernetes cluster.

At the time of writing, the supported services include:

- Event Grid (in preview)

- App Services, including Web Apps, Function Apps, and Logic Apps (we take a closer look at Function App and Web App examples in the following articles in the series)

- Data Services, including Azure SQL Managed Instance and PostgreSQL Hyperscale

This is extremely significant. It means that we can deploy an Azure Function App to an on-premises Kubernetes cluster that we manage in-house!

These services deploy seamlessly, as though Azure were hosting them. In doing so, we bring them under the umbrella of the Azure management services that we already use with confidence. This helps us implement a consistent approach in all hosting environments.

Let’s consider two examples: access control and policy.

Access Controls

Combined with Azure role-based access control (RBAC), an administrator can grant a developer access to deploy apps to a particular namespace in a cluster (hosted on-premises, for example) without giving the developer details of the underlying cluster.
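As a minimal sketch of that scenario, the role assignment below is scoped to a single custom location rather than to the whole cluster. The developer's principal ID and the choice of the built-in Contributor role are illustrative assumptions; a narrower role may suit your organization better. The `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Minimal sketch: scope an Azure role assignment to one custom location.
# The principal ID and role name are hypothetical examples.

# Print a command instead of executing it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Build the ARM resource ID of a custom location.
# $1 = subscription ID, $2 = resource group, $3 = custom location name
custom_location_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.ExtendedLocation/customLocations/%s\n' \
    "$1" "$2" "$3"
}

# Grant a role at the given scope only.
# $1 = assignee (user, group, or service principal ID), $2 = role, $3 = scope
grant_role() {
  run az role assignment create --assignee "$1" --role "$2" --scope "$3"
}

# Example (hypothetical values):
# SCOPE=$(custom_location_id "<subscription_id>" arc-rg my-custom-location)
# grant_role "<developer_object_id>" Contributor "$SCOPE"
```

Because the scope is the custom location's resource ID, the developer never needs credentials for, or details of, the underlying cluster.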

Azure Policy

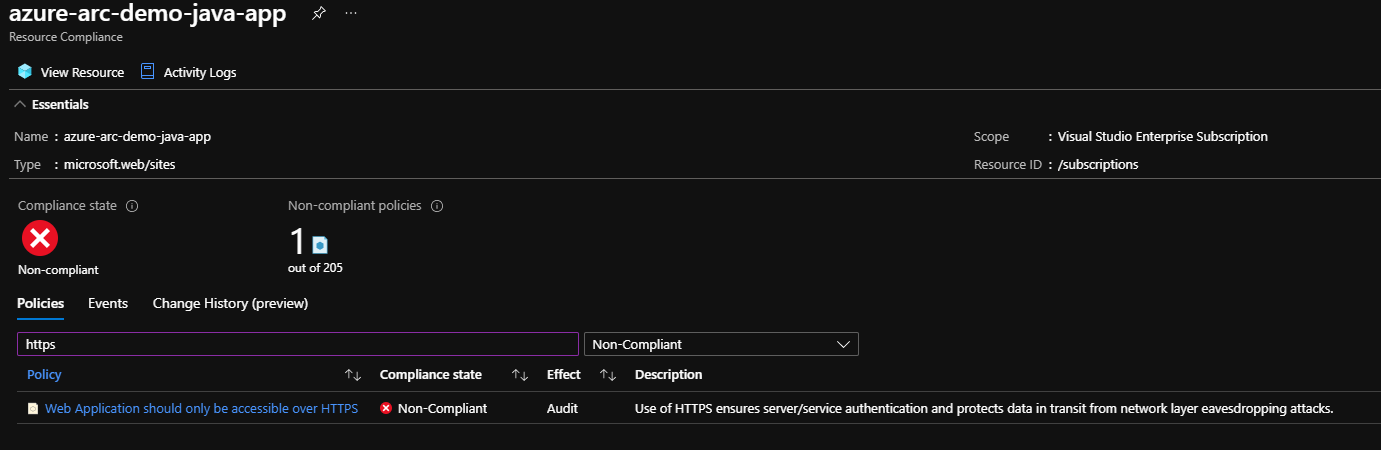

One of the reasons we love the cloud is the speed with which we can start up a necessary resource. However, we must still maintain some control, for example to prevent someone with access to our organization’s Azure environment from provisioning expensive premium services. We likely also want to enforce rules on regions, data governance, and naming conventions.

Fortunately, Azure Policy helps us manage these concerns. For example, if we have a policy that requires HTTPS for all web applications, that policy now applies to every service, whether it is hosted in Azure or runs through Azure Arc on another cloud provider or on-premises.
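A minimal sketch of assigning such a policy at resource-group scope, so that it covers Azure-hosted and Arc-hosted apps in that group alike. The policy definition ID is a placeholder you would look up in your own tenant (for example, the built-in HTTPS-only definition for App Service), and the assignment name is hypothetical. As before, `run` only prints commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Minimal sketch: assign a policy to a resource group and summarize
# compliance. Definition ID and assignment name are placeholders.

# Print a command instead of executing it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# $1 = assignment name, $2 = policy definition name or ID, $3 = scope
assign_policy() {
  run az policy assignment create --name "$1" --policy "$2" --scope "$3"
}

# Summarize policy compliance for every resource in a resource group.
# $1 = resource group
summarize_compliance() {
  run az policy state summarize --resource-group "$1"
}

# Example (hypothetical values):
# assign_policy require-https "<https_only_definition_id>" \
#   "/subscriptions/<subscription_id>/resourceGroups/arc-rg"
# summarize_compliance arc-rg
```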

The screenshot at the start of this section shows a warning in the Azure portal indicating that a Java web application running in Google Cloud is non-compliant with an HTTPS-only policy.

Note: We will deploy this Java app using Azure Arc in the third article of this series.

Data Services

It’s common for organizations to have accumulated hundreds, if not thousands, of databases over many years, hosted on bare metal, virtual machines, clouds, and data centers. These organizations could benefit greatly from bringing that data under Azure Arc-enabled data services such as Azure SQL Managed Instance and PostgreSQL Hyperscale.

Azure Arc-enabled SQL Managed Instance lets organizations bring existing SQL Server workloads under Azure management with minimal changes, while keeping data where it must reside to maintain data sovereignty. It shares a common code base with the latest stable version of SQL Server, so most features are identical, and it offers all the standard Azure Arc benefits described above. We can then use Azure services with our SQL Server instances, such as Log Analytics, Azure Security Center, and SQL environment health checks, which periodically validate configurations for areas such as performance tuning and security.

Prepare to Deploy Azure Functions to Google Cloud

The next article in this series deploys Python Azure Functions to an Arc-enabled Kubernetes cluster. Here are the necessary prerequisites:

Check that you have Azure CLI, version 2.15.0 or above:

az --version

Check that you have Helm, version 3.0 or above:

helm version
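If you script the prerequisite checks, a minimal sketch like the one below fails early when a tool is too old. It assumes `az version` (which reports the CLI version under the `azure-cli` key) and Helm 3's `helm version --template` flag; the `version_ge` comparison is a simple numeric, component-by-component check written for this example, not an official tool.

```shell
#!/bin/sh
# Minimal sketch: compare installed tool versions against minimums.

# Return 0 if dotted version $1 >= $2, comparing numeric components in
# order and treating missing components as 0 (so 3.0 == 3.0.0).
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

# $1 = minimum Azure CLI version, $2 = minimum Helm version
check_prereqs() {
  az_ver=$(az version --query '"azure-cli"' --output tsv) || return 1
  helm_ver=$(helm version --template '{{.Version}}') || return 1
  helm_ver=${helm_ver#v}     # "v3.8.0" -> "3.8.0"
  helm_ver=${helm_ver%%+*}   # drop any "+build" suffix
  if ! version_ge "$az_ver" "$1"; then
    echo "Azure CLI $az_ver is older than $1" >&2
    return 1
  fi
  if ! version_ge "$helm_ver" "$2"; then
    echo "Helm $helm_ver is older than $2" >&2
    return 1
  fi
  echo "OK: Azure CLI $az_ver, Helm $helm_ver"
}

# Example: check_prereqs 2.15.0 3.0.0
```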

Ensure that the CLI extensions are up to date:

az extension add --upgrade -n connectedk8s

az extension add --upgrade -n k8s-configuration

Log in to your Azure account:

az login

Set the context to the desired subscription:

az account set -s <subscription_id>

Create a resource group (must be West Europe or East US during the Arc preview):

az group create --name arc-rg --location westeurope
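Because the preview only accepts two regions, a minimal sketch can refuse any other location before calling Azure. The region list comes from the step above; the function names are hypothetical, and `run` only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Minimal sketch: guard resource group creation against unsupported
# preview regions (westeurope and eastus, per the step above).

# Print a command instead of executing it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# $1 = Azure region name as used by the CLI
is_preview_region() {
  case "$1" in
    westeurope|eastus) return 0 ;;
    *) return 1 ;;
  esac
}

# $1 = resource group name, $2 = region
create_arc_rg() {
  if ! is_preview_region "$2"; then
    echo "Region '$2' is not westeurope or eastus" >&2
    return 1
  fi
  run az group create --name "$1" --location "$2"
}

# Example: create_arc_rg arc-rg westeurope
```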

Register the resource providers:

az provider register --namespace Microsoft.Kubernetes --wait

az provider register --namespace Microsoft.KubernetesConfiguration --wait

az provider register --namespace Microsoft.ExtendedLocation --wait
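The three registrations follow the same pattern, so a minimal sketch can loop over them and then report each provider’s state, which should read `Registered` once complete. The `run` helper is a hypothetical convenience that only prints commands unless `DRY_RUN=0` is set; `show_provider_states` always calls Azure.

```shell
#!/bin/sh
# Minimal sketch: register the Arc resource providers and report status.

# Print a command instead of executing it unless DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Space-separated on purpose: the loops below rely on word splitting.
ARC_PROVIDERS="Microsoft.Kubernetes Microsoft.KubernetesConfiguration Microsoft.ExtendedLocation"

register_providers() {
  for ns in $ARC_PROVIDERS; do
    run az provider register --namespace "$ns" --wait || return 1
  done
}

# Print "<namespace>: <registrationState>" for each provider.
show_provider_states() {
  for ns in $ARC_PROVIDERS; do
    printf '%s: ' "$ns"
    az provider show --namespace "$ns" --query registrationState --output tsv
  done
}

# Example: DRY_RUN=0 register_providers && show_provider_states
```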

In this series of articles, we are working with Google Cloud, so you need the Google Cloud SDK to follow along.

Follow the guidance on the Azure Arc Jumpstart site for creating a new GCP project, then run the following commands to create a Kubernetes cluster in Google Cloud:

gcloud container clusters create azure-arc-demo-gke-cluster --zone europe-west2-a --tags=arc-demo-node --enable-autoscaling --min-nodes=5 --max-nodes=8

gcloud compute addresses create demo-cluster-endpoint-ip --region europe-west2

gcloud compute addresses list --filter="name=demo-cluster-endpoint-ip"

The third command lists the static external IP address you just reserved for the cluster. Keep a copy of it close by, as you will need it in the next article.
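Rather than copying the address by hand, a minimal sketch can read it with `gcloud compute addresses describe` and save it to a file. The output file name is a hypothetical choice, and the `is_ipv4` check is a simple sanity test written for this example.

```shell
#!/bin/sh
# Minimal sketch: fetch the reserved address and keep a copy on disk.

# Return 0 if $1 looks like a dotted-quad IPv4 address.
is_ipv4() {
  printf '%s\n' "$1" | awk -F. '
    NF == 4 {
      for (i = 1; i <= 4; i++)
        if ($i !~ /^[0-9]+$/ || $i + 0 > 255) exit 1
      exit 0
    }
    { exit 1 }'
}

# $1 = optional output file (defaults to the hypothetical endpoint-ip.txt)
save_endpoint_ip() {
  out=${1:-endpoint-ip.txt}
  ip=$(gcloud compute addresses describe demo-cluster-endpoint-ip \
         --region europe-west2 --format='value(address)') || return 1
  if ! is_ipv4 "$ip"; then
    echo "Unexpected address value: '$ip'" >&2
    return 1
  fi
  printf '%s\n' "$ip" > "$out"
  echo "Saved $ip to $out"
}

# Example: save_endpoint_ip
```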

Connect the cluster to Azure Arc:

az connectedk8s connect --name k8s-gke --resource-group arc-rg
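Onboarding can take a few minutes. A minimal polling sketch, assuming the connected cluster resource reports a `connectivityStatus` of `Connected` once its agents have checked in; the attempt count and delay in the example are arbitrary.

```shell
#!/bin/sh
# Minimal sketch: poll a command until its output equals an expected value.

# $1 = expected output, $2 = max attempts, $3 = seconds between attempts,
# remaining arguments = command to run on each attempt.
wait_until_output() {
  expected=$1; attempts=$2; delay=$3
  shift 3
  i=1
  while [ "$i" -le "$attempts" ]; do
    if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
      return 0
    fi
    # Sleep only between attempts, not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example: wait up to about five minutes for the cluster to connect.
# wait_until_output Connected 30 10 \
#   az connectedk8s show --name k8s-gke --resource-group arc-rg \
#   --query connectivityStatus --output tsv
```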

Check Kubernetes connectivity. Are all your nodes ready?

kubectl get nodes

Check the resources created during onboarding. Is the azure-arc namespace active?

kubectl get namespace

Are all the pods in that namespace running?

kubectl get pods -n azure-arc
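The three manual checks above can be combined into a single pass/fail function. This is a minimal sketch, assuming kubectl’s current context points at the Arc-connected cluster and that `kubectl get ... --no-headers` prints the default columns (STATUS is the second field for nodes and the third for pods).

```shell
#!/bin/sh
# Minimal sketch: automate the node, namespace, and pod checks above.

# Read `kubectl get nodes --no-headers` lines on stdin; print the name of
# every node whose STATUS column (field 2) is not exactly "Ready".
nodes_not_ready() {
  awk '$2 != "Ready" { print $1 }'
}

# Read `kubectl get pods --no-headers` lines on stdin; print the name of
# every pod whose STATUS column (field 3) is neither Running nor Completed.
pods_not_running() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

check_arc_onboarding() {
  # Fail fast if kubectl cannot reach the cluster (for example, wrong context).
  nodes=$(kubectl get nodes --no-headers) || return 1
  [ -n "$nodes" ] || { echo "No nodes found"; return 1; }
  bad_nodes=$(printf '%s\n' "$nodes" | nodes_not_ready)
  if [ -n "$bad_nodes" ]; then
    echo "Nodes not Ready: $bad_nodes"
    return 1
  fi

  phase=$(kubectl get namespace azure-arc -o jsonpath='{.status.phase}') || return 1
  if [ "$phase" != "Active" ]; then
    echo "azure-arc namespace phase is '$phase', expected 'Active'"
    return 1
  fi

  pods=$(kubectl get pods -n azure-arc --no-headers) || return 1
  [ -n "$pods" ] || { echo "No pods found in azure-arc"; return 1; }
  bad_pods=$(printf '%s\n' "$pods" | pods_not_running)
  if [ -n "$bad_pods" ]; then
    echo "Pods not Running in azure-arc: $bad_pods"
    return 1
  fi

  echo "All Arc onboarding checks passed"
}

# Example: check_arc_onboarding
```

If you prefer to block until the agents start rather than check once, `kubectl wait --for=condition=Ready pods --all -n azure-arc --timeout=300s` is an alternative.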

Next Steps

Now that you have an understanding of how you can run cloud-native Azure apps anywhere — even outside of Azure — let’s walk through a step-by-step example.

Once you’ve completed the preparation steps in the previous section, continue to the next article to learn how to deploy Azure Functions to an Arc-enabled Kubernetes cluster running in another cloud.

To learn how to manage, govern, and secure your Kubernetes clusters across on-premises, edge, and cloud environments with Azure Arc Preview, check out the Azure webinar series Manage Kubernetes Anywhere with Azure Arc Preview.