Sometimes developers feel like managers want everything under the sun completed right now. They face a dilemma: take the time to do it right, going over budget and past the deadline, or make something quickly, on time and on budget, even if it’s not perfect.

This choice has a hidden cost: technical debt. A quick and easy solution often triggers hidden costs down the road as developers, and users, discover errors that need fixing. A longer-term solution often becomes outdated, making maintenance challenging. Either way, today’s solutions rack up technical debt with more and more work for tomorrow.

In this article, we’ll look a little deeper into technical debt and explore some solutions for developers to dig themselves out of the hole.

Origins of Technical Debt

Before we dive into technical details, let’s review what technical debt is, where it originates, and how it influences the development process. For simplicity, we’ll look at two software project development scenarios, each leading to technical debt in a different way.

In the first scenario, an army of developers, assembled just for this project, must deliver on time despite mounting requirements and pressing deadlines. Technical debt appears and grows when, toward the end of the project, developers are left with no choice but to cut corners to survive.

Under pressing conditions, it may not be possible to spend enough time on code quality. Developers may complete features faster by copying and pasting fragments of code more often, either manually or using code generators.

The project goes live, but widespread code duplication obscures program logic and hides dependencies, preventing developers from making changes efficiently. Eventually, developers spend more and more time debugging after adding each new feature. After a series of successful project stages, managers are surprised that the development team can’t deliver subsequent milestones on time.

Everyone points fingers, but the real problem is that code quality has fallen below the critical mark of effective project maintainability. The growing cost of compensating for low code quality resembles interest payments on growing "technical debt".

In the second scenario, a small team of dedicated developers works on a project that runs for many years, sometimes decades. Working in a relatively relaxed environment, developers take time to properly design code for optimal structure and maintainability. The system design meets or exceeds the standards that existed years ago, and debugging is usually no problem since developers know the code by heart.

However, technically, the project becomes frozen in time. It accepts a few minor technology upgrades over its lifetime, but a major upgrade usually requires a complete rewrite. Technical debt develops from a different angle: the growing cost of compensating for old technology, such as finding and training skilled staff, securing vendor support, and, ultimately, the inability to implement new requirements due to technical limitations.

Both scenarios exhibit increasing "technical" expenses, which do not directly add any value and only service the growing debt. This virtual debt is called "technical debt".

Would better staff retention, outsourcing, or more time for developers to think have helped in the first scenario? Would frequently adding fresh technical people to the team have helped in the second? Management professionals debate and analyse the pros and cons of these moves.

Is there a "magic pill" that works for both projects? Each scenario is unique, and there isn’t one solution for all. You need to ask: despite its technical issues, does the existing code hold substantial value? If you launch a replacement project, will the number of hours spent on development and meetings be exceptionally high? Are parts of the logic not covered by documentation? Does the productivity of many people critically depend on the problematic code?

If the answer is yes, it is worth considering code transformation before trying other options. When planned carefully, code transformation has the potential to reduce technical debt. Code transformation is better known as "refactoring" or "rewriting", and you can do it manually for a small amount of code. For a large number of lines, the cost of manual transformation becomes comparable to, or exceeds, the cost of a replacement project.

Is there a solution for larger projects? Automated code transformation is not widely known although it has existed for decades. Familiar mostly to computer language researchers, automated code transformation is a true gem residing in the very heart of computer science. Discovering this gem is our focus in this article.

Basics of Compiler Theory

Compiling and automated code transformation are different names for the same process. Computer language compilation, a process well known to programmers, is actually the transformation of one computer language into another. Initially designed for direct conversion between two languages, like assembly to machine code, modern compilation technology increasingly uses a third "intermediate" language. Widely known examples include C# to Microsoft Intermediate Language (MSIL) to machine code and TypeScript to JavaScript to machine code.

Compiler Theory provides building blocks to design any computer language transformation, including transformation of code created with the assistance of "copy-paste" into maintainable logic which reuses code. Another example is rewriting "old technology" code into a modern language and platform while adhering to optimal coding practices required by today’s architectures.

It sounds unbelievable, but these tasks have been solved before. From the perspective of Compiler Theory, they are nothing more than "compiler optimizations": rewriting elements of code in a more efficient, optimized way while preserving their behavior.

To design and implement a specialized compiler to perform these "optimizations" on an indebted project, we need to arm ourselves with the basics of Compiler Theory. The task of transforming a project can grow incredibly complex.

At the heart of a compiler is a parser. While you can develop parsers in many ways, not all of them result in a compiler that remains maintainable as support for new transformations is continually added. In other words, if you do not follow certain rules from the beginning, a missing foundation will limit the height of the building.

Therefore, we’ll start with a scientific foundation laid by Edsger Dijkstra when he co-wrote one of the first compilers for Algol 60. Algol was among the first languages that could not be parsed easily. The Algol compiler paved the way for computer language evolution not restricted by parser limitations, which influenced later languages such as Pascal and Basic and their respective compilers. Today, Dijkstra’s method is standard for computer language compilers, and has found a way into computer-assisted human speech recognition. It is useful in any type of transformation task.

Simple ideas are good ones, and Compiler Theory is no exception. The main idea, breaking complex tasks into simpler ones, is accommodated by splitting compilation into two stages, parsing and code generation, with each stage consisting of multiple steps.

The first step of the parsing stage converts a stream of characters into a stream of tokens. The second step converts a stream of tokens into an object tree. An optional enrichment or ‘data mining’ third step collects coding statistics and patterns for performance optimization and improving target code maintainability. This article illustrates fact discovery using artificial intelligence (AI) in the form of a tiny ‘expert system’.

In the second stage, code generation, the optimized and enriched object model is either consumed directly for code emission or transformed into another object tree that suits an existing code generator for the target language.
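As a rough illustration of the two stages, the sketch below tokenizes a small arithmetic expression, builds an object tree from the tokens, and then emits target code (here, postfix notation). It is not taken from the article's repository: every name in it (`TinyCompiler`, `Tokenize`, `Parse`, `Emit`, and the node records) is invented for this example, and it supports only digits, `+`, and `*`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical object model: a sketch of the stages, not a production design.
public abstract record Node;
public record Number(string Text) : Node;
public record Binary(Node Left, char Op, Node Right) : Node;

public static class TinyCompiler
{
    // Parsing, step 1: stream of characters -> stream of tokens.
    public static List<string> Tokenize(string source)
    {
        var tokens = new List<string>();
        int i = 0;
        while (i < source.Length)
        {
            char c = source[i];
            if (char.IsWhiteSpace(c)) { i++; }
            else if (char.IsDigit(c))
            {
                int start = i;
                while (i < source.Length && char.IsDigit(source[i])) i++;
                tokens.Add(source.Substring(start, i - start));
            }
            else if (c == '+' || c == '*') { tokens.Add(c.ToString()); i++; }
            else throw new FormatException($"Unexpected character '{c}' at position {i}");
        }
        return tokens;
    }

    // Parsing, step 2: stream of tokens -> object tree ('*' binds tighter than '+').
    public static Node Parse(List<string> tokens)
    {
        int pos = 0;
        Node tree = ParseSum(tokens, ref pos);
        if (pos != tokens.Count) throw new FormatException("Unexpected trailing token");
        return tree;
    }

    private static Node ParseSum(List<string> tokens, ref int pos)
    {
        Node left = ParseProduct(tokens, ref pos);
        while (pos < tokens.Count && tokens[pos] == "+")
        {
            pos++;
            left = new Binary(left, '+', ParseProduct(tokens, ref pos));
        }
        return left;
    }

    private static Node ParseProduct(List<string> tokens, ref int pos)
    {
        Node left = ParseNumber(tokens, ref pos);
        while (pos < tokens.Count && tokens[pos] == "*")
        {
            pos++;
            left = new Binary(left, '*', ParseNumber(tokens, ref pos));
        }
        return left;
    }

    private static Node ParseNumber(List<string> tokens, ref int pos)
    {
        if (pos >= tokens.Count || !char.IsDigit(tokens[pos][0]))
            throw new FormatException("Expected a number");
        return new Number(tokens[pos++]);
    }

    // Code generation: object tree -> target code (postfix notation).
    public static string Emit(Node node) => node switch
    {
        Number n => n.Text,
        Binary b => $"{Emit(b.Left)} {Emit(b.Right)} {b.Op}",
        _ => throw new ArgumentException("Unknown node type")
    };
}
```

Calling `TinyCompiler.Emit(TinyCompiler.Parse(TinyCompiler.Tokenize("1 + 2 * 3")))` returns `1 2 3 * +`. Because each stage only depends on the output of the previous one, an enrichment step could be slotted in between `Parse` and `Emit` without touching either.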

Introduction to Rewriting and .NET Compiler Platform (Roslyn)

Code rewriting, or transformation, replaces certain syntax elements with improved ones using better technology. This can range from a spot rewrite, illustrated in this article, to a complete rewrite, in which old-technology source code is transformed into modern-technology source code. In either case, it is important to identify the source and destination syntax of the transformation: in other words, what must be transformed into what.

For a technology rewrite, you must carefully select the target architecture and platform, or sometimes create them by extending one of the modern platforms, to equivalently and compactly support features available in the source platform. You usually must also create a full source platform syntax parser and object modeller. Source object coverage may need to extend beyond source code and cover additional metadata such as storage and user interface.

For a spot rewrite, depending on the source language, you may not need to create a compiler. For example, when transforming C#, a rewriter can build upon the existing .NET Compiler Platform.

To do this, open the Visual Studio 2019 installer and install the "Visual Studio extension development" workload, with the optional ".NET Compiler Platform SDK" component selected.

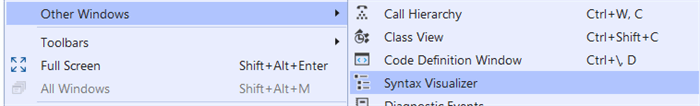

The C# syntax parser converts characters of source code into tokens and objects and returns a "syntax tree". You can display this objectified source code as you type in the Syntax Visualizer window within Visual Studio (View > Other Windows > Syntax Visualizer).

If the "Syntax Visualizer" window remains blank after you place the cursor in code, modify any character in the source and undo the modification to trigger a refresh. Each node in the tree represents a physical or logical element in the syntax.

The .NET Compiler Platform (nicknamed Roslyn) also provides a syntax generator. Simply calling the ".ToString()" method on a node in the tree returns that node spelled in C#. The ability to convert arbitrary strings into syntax nodes and back simplifies rewriting, since new syntax usually contains elements of the old syntax.
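A minimal sketch of that round trip, assuming the Microsoft.CodeAnalysis.CSharp NuGet package is referenced. The class and method names (`RoundTripDemo`, `ReplaceExpression`) are invented for this example; the Roslyn calls are `SyntaxFactory.ParseStatement`, `SyntaxFactory.ParseExpression`, and `WithExpression`.

```csharp
using System;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;

public static class RoundTripDemo
{
    // Parses an expression statement, swaps its expression for one parsed
    // from a string, and returns the result spelled as C#.
    public static string ReplaceExpression(string statementText, string newExpressionText)
    {
        // String -> syntax node. The cast throws if the text is not an
        // expression statement such as "Operation1(list);".
        var statement = (ExpressionStatementSyntax)SyntaxFactory.ParseStatement(statementText);
        ExpressionSyntax replacement = SyntaxFactory.ParseExpression(newExpressionText);

        // Nodes are immutable, so WithExpression returns a modified copy.
        ExpressionStatementSyntax rewritten = statement.WithExpression(replacement);

        // Syntax node -> string. ToString() leaves out the node's leading and
        // trailing trivia (whitespace, comments); ToFullString() includes it.
        return rewritten.ToString();
    }
}
```

For instance, `RoundTripDemo.ReplaceExpression("Operation1(list);", "Operation2(list)")` returns `Operation2(list);`. The original semicolon token is reused, which is the sense in which new syntax contains elements of the old.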

Practical C# Code Rewriting

To start, clone the source code repository from GitHub.

In this rewriting example, the original code contains semi-repeating logic, created by copying and pasting and modified in-place to suit requirements. The programmer’s intention was to retrieve a list from the database and apply a limited set and variety of post-processing operations to the list before using the list in code.

Performance metrics indicate the operations consume a significant amount of time. However, it’s not easy to cache the results since the set of operations varies between code locations. The first location applies operations one and two, while the second location applies operations two and three. We’ll assume the order of operations doesn’t matter:

    public static void ShowExample()
    {
        // First location: operations one and two
        var list = GetListFromSomewhere("HELLO");
        Operation1(list);
        Operation2(list);

        // Second location: operations two and three
        var list2 = GetListFromSomewhere("BONJOUR" + ObtainSuffix("PARIS"));
        Operation2(list2);
        Operation3(list2);
    }

We can improve several aspects of the code. If the set of required operations is passed as a parameter to the routine that retrieves the list from the database, the routine can cache the post-processed results, which improves performance. Code maintainability improves by shifting from procedural to declarative programming, which is achieved by replacing the sequence of procedure calls with enumerated processing options.
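To make the target of the rewrite concrete, here is one possible shape for the declarative version. It is a sketch under assumptions: the `ListOperations` enum, the two-parameter overload of `GetListFromSomewhere`, the `ListSource` class, and the `LoadCount` counter are all hypothetical and may not match the article's repository. A `[Flags]` enum fits because the order of operations is assumed not to matter.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical processing options; each operation is one bit so they can be combined.
[Flags]
public enum ListOperations
{
    None = 0,
    Operation1 = 1,
    Operation2 = 2,
    Operation3 = 4
}

public static class ListSource
{
    // Cache keyed by keyword and requested operations, so each distinct
    // combination is retrieved and post-processed only once.
    private static readonly Dictionary<(string, ListOperations), List<string>> Cache = new();

    // Counts how often the expensive path runs; present only to make caching observable.
    public static int LoadCount { get; private set; }

    public static IReadOnlyList<string> GetListFromSomewhere(string keyword, ListOperations operations)
    {
        var key = (keyword, operations);
        if (Cache.TryGetValue(key, out var cached)) return cached;

        LoadCount++;
        var list = new List<string> { keyword };   // stand-in for the database query
        if (operations.HasFlag(ListOperations.Operation1)) list.Add("operation 1 applied");
        if (operations.HasFlag(ListOperations.Operation2)) list.Add("operation 2 applied");
        if (operations.HasFlag(ListOperations.Operation3)) list.Add("operation 3 applied");

        Cache[key] = list;
        return list;
    }

    // The earlier example, restated declaratively (suffix lookup omitted for brevity).
    public static void ShowExample()
    {
        var list = GetListFromSomewhere("HELLO", ListOperations.Operation1 | ListOperations.Operation2);
        var list2 = GetListFromSomewhere("BONJOUR", ListOperations.Operation2 | ListOperations.Operation3);
    }
}
```

The result is returned as `IReadOnlyList<string>` because cached lists are shared between callers; a caller that needs to modify the list would copy it first.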

The first step is to convert the string representing the original source code into an object tree:

    public static void Main(string[] args)
    {
        // Parse the original source code into a Roslyn syntax tree
        var tree = CSharpSyntaxTree.ParseText(@"
            public class Sample
            {
                public void ShowExample()
                {
                    var list = GetListFromSomewhere(""HELLO"");
                    Operation1(list);
                    Operation2(list);
                    // process list
                    // ...
                }
            }");
        // ... Main continues with the steps shown below

Next, to optimize the code, the rewriter may require access to information in the preceding and following nodes, or other information collected from the tree. Because the rewriter visits nodes one at a time, code analysis is performed in advance by gathering facts of intention from the code. Put simply, a fact of intention is what the programmer really wanted to do. It is the "higher truth" behind the code, which allows the original code to be reliably replaced with a better equivalent.

Gathering facts of intention benefits from statistical methods and artificial intelligence. In this example, we use a tiny "expert system", an early form of AI, consisting of a Language Integrated Query (LINQ) expression followed by a scan of the surrounding nodes in the syntax tree:

    var originalTreeRoot = tree.GetRoot();
    var detectedFacts = CodeAnalyzer.GatherFacts(originalTreeRoot);

The CodeAnalyzer class detects candidate nodes for transformation by searching the syntax tree using LINQ. It focuses on invocations of "GetListFromSomewhere" and checks the following sibling nodes to identify which operations were applied. The output is the list of facts, for example "node XYZ retrieved list with given keyword and applied such and such operations". Notice that the "keyword" passed to GetListFromSomewhere is not necessarily a string literal; it can be any syntax expression returning a string. You don’t need to know the Roslyn object model by heart to write the GatherFacts query below: you can obtain most of it by inspecting a node in the "Quick Watch" window and copying the expression:

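    class CodeAnalyzer
    {
        public static List<GenericFact> GatherFacts(SyntaxNode syntaxRoot)
        {
            // Candidate nodes: local declarations initialized by a call to GetListFromSomewhere.
            // Pattern matching skips other declarations instead of throwing on a failed cast.
            var keyNodes = syntaxRoot.DescendantNodes().OfType<LocalDeclarationStatementSyntax>()
                .Where(m => m.Declaration.Variables[0].Initializer?.Value is InvocationExpressionSyntax invocation
                    && invocation.Expression is IdentifierNameSyntax name
                    && name.Identifier.ValueText == "GetListFromSomewhere")
                .ToArray();

            var detectedFacts = new List<GenericFact>();
            // ...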
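The sibling scan mentioned above is not shown in this excerpt. The following is one way it could be sketched, assuming that an "operation" is any single-argument call on the declared variable that directly follows the declaration in the same block. The `SiblingScanner` class and `FollowingOperations` method are invented names; the repository's implementation may work differently.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;

public static class SiblingScanner
{
    // Returns the statements that directly follow 'declaration' in the same block
    // and pass the declared variable as their only argument, e.g. "Operation1(list);".
    public static List<ExpressionStatementSyntax> FollowingOperations(
        LocalDeclarationStatementSyntax declaration)
    {
        var result = new List<ExpressionStatementSyntax>();
        if (declaration.Parent is not BlockSyntax block) return result;

        string variableName = declaration.Declaration.Variables[0].Identifier.ValueText;
        int index = block.Statements.IndexOf(declaration);

        foreach (StatementSyntax statement in block.Statements.Skip(index + 1))
        {
            if (statement is ExpressionStatementSyntax { Expression: InvocationExpressionSyntax call } operation
                && call.ArgumentList.Arguments.Count == 1
                && call.ArgumentList.Arguments[0].Expression is IdentifierNameSyntax argument
                && argument.Identifier.ValueText == variableName)
            {
                result.Add(operation);
            }
            else
            {
                // Stop at the first statement that does not fit the pattern.
                break;
            }
        }
        return result;
    }
}
```

Stopping at the first non-matching statement is a deliberately conservative choice: anything in between might read or change the list, so operations after it are left alone rather than folded into the same fact.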

Once facts are detected, they are used to fill the node replacement dictionary. This dictionary contains a new rewritten node for a group of old nodes in the original tree identified as "fact".

    // Maps each original node to its replacement; null means "remove this node"
    Dictionary<SyntaxNode, SyntaxNode> nodeRewrites = new Dictionary<SyntaxNode, SyntaxNode>();

    foreach (var intention in detectedFacts)
    {
        nodeRewrites.Add(intention.KeyNode, intention.GetRewrittenNode());

        foreach (var nodeToRemove in intention.OtherNodes)
        {
            nodeRewrites.Add(nodeToRemove, null);
        }
    }

Roslyn syntax trees are immutable: they cannot be modified directly. Instead, rewriting is accommodated by implementing a class (SimpleNodeRewriter) that inherits from CSharpSyntaxRewriter. The syntax tree node rewriter visits all nodes in the tree. While on a node, it has a choice of leaving the node unmodified or creating and returning a replacement node. Produce the final code by calling the ToFullString() method on the root node of the transformed tree:

    var rewriter = new SimpleNodeRewriter(nodeRewrites);
    var transformedTreeRoot = rewriter.Visit(originalTreeRoot);
    Console.WriteLine(transformedTreeRoot.ToFullString());
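The rewriter class itself is not listed in this excerpt. A dictionary-driven rewriter could look roughly like the sketch below, which is a guess at the general shape and not the repository's code; the name `DictionaryRewriter` is invented to keep the two apart. It relies on two Roslyn behaviors: `CSharpSyntaxRewriter.Visit` can be overridden, and a null result for an element of a syntax list (such as a statement in a block) drops that element.

```csharp
using System.Collections.Generic;
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp;

// Hypothetical rewriter driven by a node replacement dictionary.
public class DictionaryRewriter : CSharpSyntaxRewriter
{
    private readonly Dictionary<SyntaxNode, SyntaxNode> _rewrites;

    public DictionaryRewriter(Dictionary<SyntaxNode, SyntaxNode> rewrites)
    {
        _rewrites = rewrites;
    }

    public override SyntaxNode Visit(SyntaxNode node)
    {
        // A node listed in the dictionary is swapped for its replacement.
        // A null replacement removes the node when it is a list element,
        // such as a statement inside a block.
        if (node != null && _rewrites.TryGetValue(node, out var replacement))
        {
            return replacement;
        }

        // Any other node is rebuilt from its (possibly rewritten) children.
        return base.Visit(node);
    }
}
```

The dictionary lookup works because the rewriter is handed the same node instances that the analysis step collected from the original tree. Replacement nodes parsed from plain strings carry no indentation or line breaks of their own, so in practice they may need trivia copied from the node they replace, for example with `WithTriviaFrom`.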

The console displays the rewritten source code.

Next Steps

We’ve examined the causes of technical debt and why it is a problem, and looked at some solutions, including rewriting code based on Compiler Theory. With these methods, you can change your own code, helping reduce your technical debt so you can focus on future product enhancements.

This article originally appeared as a guest submission on ContentLab.