Introduction

Ray is a framework with simple and universal APIs for building innovative AI applications. BigDL is an open-source framework for building scalable end-to-end AI on distributed Big Data. BigDL leverages Ray and its native libraries to support advanced AI use cases such as AutoML and Automated Time Series Analysis.

In this blog post, we introduce some of the core components of BigDL and show how BigDL uses Ray and its native libraries to build its underlying infrastructure (such as RayOnSpark and AutoML), and how that infrastructure helps users build AI applications such as Automated Time Series Analysis using Project Chronos.

RayOnSpark: Seamlessly run Ray applications on top of Apache Spark

Ray is an open-source distributed framework for easily running emerging AI applications such as deep reinforcement learning and automated machine learning. BigDL integrates Ray into big data preprocessing pipelines through RayOnSpark, which has already been used to build several advanced end-to-end AI applications in areas such as AutoML and Chronos. RayOnSpark runs Ray programs on top of Apache Spark on big data clusters (e.g., an Apache Hadoop* or Kubernetes* cluster), so objects such as in-memory DataFrames can be streamed directly into Ray applications. With RayOnSpark, users can try emerging AI applications on their existing big data clusters in a production environment, and integrate Ray applications into Big Data processing pipelines that run directly on in-memory DataFrames.

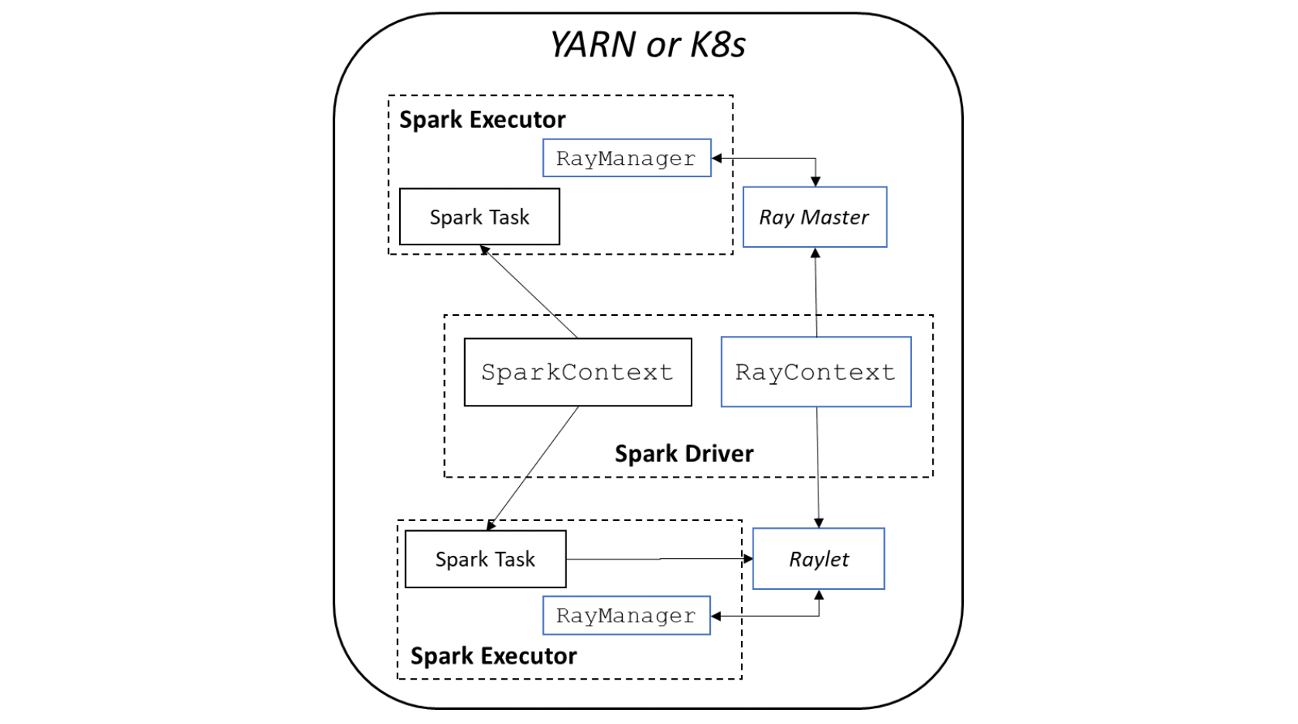

Figure 1: RayOnSpark Architecture

Figure 1 illustrates the architecture of RayOnSpark. In a standard Spark application, a Spark program runs on the driver node and creates a SparkSession with a SparkContext object, which is responsible for launching multiple Spark executors on a cluster to run Spark jobs. In RayOnSpark, the Spark driver program additionally creates a RayContext object, which automatically launches Ray processes alongside each Spark executor across the same cluster. RayContext also creates a RayManager inside each Spark executor to manage the Ray processes (e.g., automatically shutting them down when the program exits). The code block below demonstrates how users can write Ray code directly inside standard Spark applications after initializing RayOnSpark.

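    import ray
    from bigdl.orca import init_orca_context
    from bigdl.orca.ray import RayContext

    # Start Spark on YARN; replace each "..." with values for your cluster.
    sc = init_orca_context(cluster_mode="yarn", cores=..., memory=..., num_nodes=...)
    # Launch Ray processes alongside the Spark executors.
    ray_ctx = RayContext(sc, object_store_memory=..., ...)
    ray_ctx.init()

    # A Ray actor: a stateful worker that lives in its own process.
    @ray.remote
    class Counter(object):
        def __init__(self):
            self.n = 0

        def increment(self):
            self.n += 1
            return self.n

    # Create five actors and call increment() on each of them.
    counters = [Counter.remote() for _ in range(5)]
    ray.get([c.increment.remote() for c in counters])

    # Shut down Ray first, then Spark.
    ray_ctx.stop()
    sc.stop()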

Figure 2: Sample code of RayOnSpark

AutoML (orca.automl): Tune AI applications effortlessly using Ray Tune

Hyperparameter optimization (HPO) is important for data scientists who need a machine learning or deep learning model to meet goals such as accuracy and performance. However, manual tuning can be time-consuming and may not explore the search space thoroughly enough, while HPO in a distributed environment can be difficult to implement. BigDL introduces an AutoML capability (via orca.automl) built on top of Ray Tune to make life easier for data scientists.

What is orca.automl?

In many cases, data scientists prefer to prototype, debug, and tune their AI applications on a laptop. If the same code can then be moved intact to a cluster and simply run, end-to-end productivity improves greatly.

BigDL’s Orca project helps users seamlessly scale their code from a laptop to a big data cluster. Furthermore, BigDL’s orca.automl leverages RayOnSpark and Ray Tune to provide a distributed hyperparameter tuning API called AutoEstimator. As Ray Tune is framework agnostic, AutoEstimator is suitable for both PyTorch and TensorFlow models. Users can tune their models in a consistent manner on a laptop, local server, K8s cluster, Hadoop/YARN cluster, etc.

With these features, orca.automl in BigDL can be used to automatically explore the search space (including models, hyperparameters, etc.) for many AI applications. As an example, we have implemented AutoXGBoost (XGBoost with HPO) using BigDL’s orca.automl to automatically fit and optimize XGBoost models. Compared to a similar solution on an NVIDIA A100, training with AutoXGBoost is ~1.7x faster, and the final model is more accurate. Please see the blog for more information. You may also refer to the orca.automl User Guide for design details, and to the AutoXGBoost Quick Start or Auto Tuning for arbitrary models for hands-on practice.
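To make the idea of exploring a search space concrete, here is a minimal, standard-library-only sketch of random search. It is not the orca.automl or Ray Tune API: the search space, the `toy_validation_loss` function, and all names are hypothetical stand-ins for "train a model with this configuration and score it on validation data".

```python
import math
import random


def sample_config(rng):
    """Draw one hypothetical trial configuration from the search space."""
    return {
        # Log-uniform sample between 1e-4 and 1e-1.
        "learning_rate": 10 ** rng.uniform(-4, -1),
        # Integer sample from 2 to 8, both ends included.
        "max_depth": rng.randint(2, 8),
    }


def toy_validation_loss(config):
    """Stand-in for training a model and scoring it on validation data."""
    lr_term = (math.log10(config["learning_rate"]) + 2) ** 2
    depth_term = 0.1 * (config["max_depth"] - 5) ** 2
    return lr_term + depth_term


def random_search(n_trials, seed=0):
    """Evaluate n_trials random configurations and keep the best one."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        config = sample_config(rng)
        trials.append((toy_validation_loss(config), config))
    # The trial with the lowest loss wins.
    return min(trials, key=lambda trial: trial[0])


best_loss, best_config = random_search(n_trials=20)
print(best_loss, best_config)
```

In a distributed setting the trials are independent of each other, which is what makes it natural to spread them across a cluster.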

Chronos: Build Automated Time Series Analysis using AutoTS on Ray

We have also developed a framework for Automated Time Series Analysis, known as Project Chronos. It uses orca.automl to tune hyperparameters during the automated analysis.

Why do we need Chronos?

Time series (TS) analysis is now widely used in many real-world applications (such as network quality analysis in Telecom, log analysis for data center operations, predictive maintenance for high-value equipment, etc.) and is becoming more and more important. Accurate forecasting and detection have become the most sought-after tasks and have proven to be huge challenges for traditional approaches. Deep learning methods often treat time series forecasting and detection as a sequence modeling problem and have recently been applied to these problems with many successes.

On the other hand, building machine learning applications for time series forecasting and detection can be a laborious and knowledge-intensive process. Hyperparameter setting, preprocessing, and feature engineering may each become the bottleneck for a dedicated deep learning model. To provide an efficient, powerful, and easy-to-use time series analysis toolkit, we launched Project Chronos, a framework for building large-scale time series analysis applications. Because it is built on top of Ray Tune, Ray Train, and RayOnSpark, Chronos supports both AutoML and distributed training.

Chronos architecture introduction

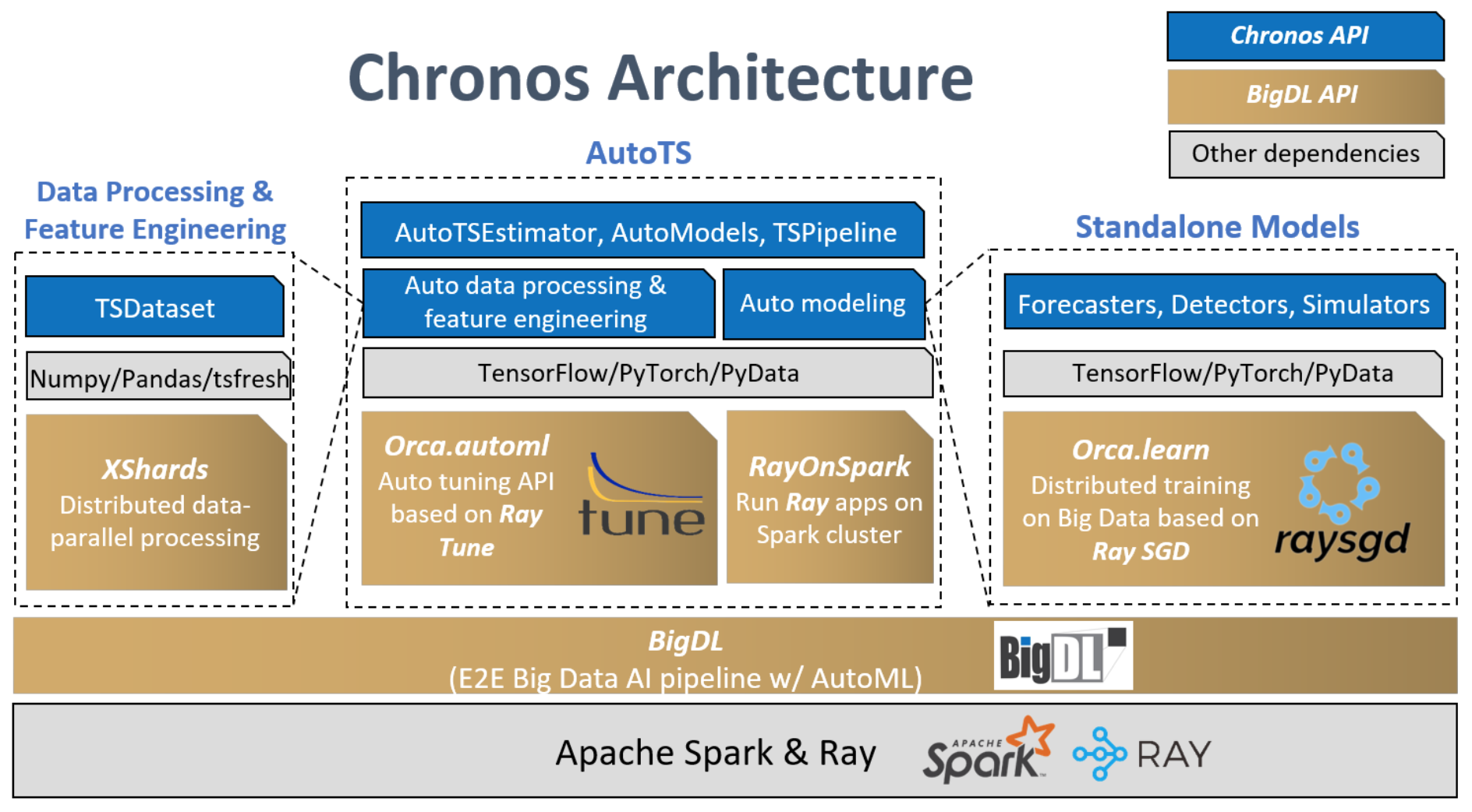

Chronos features several (10+) built-in deep learning and machine learning models for time series forecasting, detection, and simulation as well as many (70+) data processing and feature engineering utils. Users can call standalone algorithms and models (Forecasters, Detectors, Simulators) themselves to acquire the highest flexibility or use our highly integrated and scalable and automated workflow for time series (AutoTS). The inferencing process has also been optimized in a number of ways which includes integrating ONNX runtimec.

The following figure illustrates Chronos's architecture on the top of BigDL and Ray. This section focuses on the AutoTS component. The AutoTS framework uses Ray Tune as a hyper-parameter search engine (running on top of RayOnSpark). For auto data processing, the search engine selects the best lookback value for a prediction task. For auto feature engineering, the search engine selects the best subset of features from a set of features that are automatically generated by various feature generation tools (e.g., tsfresh). For auto modeling, the search engine searches for hyper-parameters such as hidden dim, learning rate, etc.

Figure 3: Project Chronos architecture
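As a rough illustration of the three search dimensions described above (lookback, feature subset, and model hyperparameters), the sketch below samples a few trial configurations using only the standard library. It does not use the Chronos or Ray Tune API; the feature names, value ranges, and the `sample_autots_trial` helper are all hypothetical.

```python
import random

# Hypothetical candidate features, e.g. names produced by a feature generator.
CANDIDATE_FEATURES = ["hour", "day_of_week", "is_weekend", "month"]


def sample_autots_trial(rng):
    """Sample one trial covering the three search dimensions."""
    # Keep each candidate feature with probability 0.5.
    features = [f for f in CANDIDATE_FEATURES if rng.random() < 0.5]
    return {
        "lookback": rng.randint(50, 100),        # auto data processing
        "selected_features": features,           # auto feature engineering
        "hidden_dim": rng.choice([16, 32, 64]),  # auto modeling
        "learning_rate": 10 ** rng.uniform(-4, -2),
    }


rng = random.Random(42)
for _ in range(3):
    print(sample_autots_trial(rng))
```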

Chronos hands-on example for AutoTS workflow

The code below illustrates the training and inferencing process of a time series forecasting pipeline using Chronos's highly integrated AutoTS workflow. The workflow starts with the TSDataset API, which handles typical time series processing (e.g., imputing and scaling) and feature generation.

    import pandas as pd
    from sklearn.preprocessing import StandardScaler
    from bigdl.chronos.data import TSDataset

    df = pd.read_csv("table.csv")

    # Build train/validation/test datasets from a single DataFrame.
    tsdata_train, tsdata_val, tsdata_test = TSDataset.from_pandas(
        df,
        dt_col="StartTime",
        target_col="AvgRate",
        with_split=True,
        val_ratio=0.1,
    )

    standard_scaler = StandardScaler()
    for tsdata in [tsdata_train, tsdata_val, tsdata_test]:
        # Fit the scaler on the training set only, then reuse it for the others.
        tsdata.gen_dt_feature()\
              .impute(mode="last")\
              .scale(standard_scaler, fit=(tsdata is tsdata_train))
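The two preprocessing steps in this loop can be sketched in plain Python. This is only an illustration, assuming that `mode="last"` fills a missing value with the most recent observed one and that standard scaling subtracts the mean and divides by the (population) standard deviation; the helper names and sample values are hypothetical and are not part of the TSDataset API.

```python
import math


def impute_last(values):
    """Replace each missing value (None) with the most recent observed value."""
    filled, last = [], None
    for v in values:
        if v is None:
            v = last  # stays None if nothing has been observed yet
        filled.append(v)
        last = v
    return filled


def fit_standard_scaler(values):
    """Return (mean, std) computed from the given values only."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var)


def scale(values, mean, std):
    """Standardize values with previously fitted statistics."""
    return [(v - mean) / std for v in values]


train = impute_last([10.0, None, 14.0, 12.0])
test = impute_last([11.0, None, 13.0])

mean, std = fit_standard_scaler(train)  # statistics come from train only
train_scaled = scale(train, mean, std)
test_scaled = scale(test, mean, std)    # reuses the training statistics
```

Fitting the scaler on the training split only, as `fit=(tsdata is tsdata_train)` does above, keeps information from the validation and test splits out of the preprocessing statistics.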

Users can then initialize an AutoTSEstimator by specifying the model (a built-in model name, or a model creation function for a third-party model), the lookback, and the horizon. The AutoTSEstimator runs the search procedure on top of Ray Tune; each run generates several trials at a time (each with a different combination of hyperparameters and subset of features) and distributes them across the Ray cluster. After all trials complete, the best set of hyperparameters, the optimized model, and the data processing procedure are retrieved according to the target metrics and composed into the resulting TSPipeline.

    from bigdl.chronos.autots import AutoTSEstimator
    import bigdl.orca.automl.hp as hp

    auto_estimator = AutoTSEstimator(model='tcn',
                                     past_seq_len=hp.randint(50, 100),  # lookback (searched)
                                     future_seq_len=1)                  # horizon

    ts_pipeline = auto_estimator.fit(data=tsdata_train,
                                     validation_data=tsdata_val)
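To show what lookback and horizon mean for the training data, here is a small standalone sketch that slices a series into (past, future) samples. It is not how Chronos implements this internally; `roll_windows` is a hypothetical helper written for illustration.

```python
def roll_windows(series, lookback, horizon):
    """Split a series into (past, future) pairs of the given lengths."""
    samples = []
    for start in range(len(series) - lookback - horizon + 1):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        samples.append((past, future))
    return samples


series = [1, 2, 3, 4, 5, 6]
for past, future in roll_windows(series, lookback=3, horizon=1):
    print(past, "->", future)
```

A larger lookback gives the model more history per sample but yields fewer samples from the same series, which is one reason the best value is worth searching for.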

The TSPipeline can be used for prediction, evaluation and incremental fitting.

    y_pred = ts_pipeline.predict(tsdata_test)
    test_mse = ts_pipeline.evaluate(tsdata_test, metrics=['mse'])
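For reference, the `'mse'` metric is the mean squared error between true and predicted values. A minimal sketch of the formula follows; the helper and sample numbers are illustrative only and are not taken from Chronos.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between truth and prediction."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]))
```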

For detailed information, the Chronos user guide is a great place to start.

5G Network Time Series Analysis using Chronos AutoTS

Chronos has been adopted widely in many areas, such as Telecommunication and AIOps. Capgemini Engineering leverages the Chronos AutoML workflow and inferencing optimization in their 5G Medium Access Controller (MAC) to realize cognitive capabilities as part of Intelligent RAN Controller nodes. In their tasks, Chronos is used to forecast UE’s mobility to assist the MAC scheduler in efficient link adaptation on two key KPIs. With Chronos AutoTS, Capgemini engineers changed their model to our built-in TCN model and enlarged the lookback value, which increased the AI accuracy by 55%. For detailed information, please refer to the white paper.

Conclusion

In this article, we introduced how BigDL leverages Ray and its libraries to build scalable AI applications for big data (using RayOnSpark), improve end-to-end AI development productivity (using AutoML on top of Ray Tune), and build domain-specific AI use cases such as Automated Time Series Analysis with Project Chronos. BigDL also adopts Ray in other areas; for example, Ray Train is being used in the BigDL Orca project to seamlessly scale out single-node Python notebooks across large data clusters. We are also exploring other use cases, such as recommendation systems and reinforcement learning, which will leverage the AutoML capabilities built on top of Ray.