This is Part 5 of a 6-part series that demonstrates how to take a monolithic Python application and gradually modernize both the application and its data using Azure tools and services. This article shows how to configure a new cloud database with Azure Cosmos DB API for MongoDB, migrate our Azure Database for PostgreSQL data to it, and connect our app to the new MongoDB database.

We learned how to move our local PostgreSQL data into a cloud-hosted database in the previous articles of this six-part series. We modified the app connection to use Azure Database for PostgreSQL instead of local PostgreSQL. We recreated the database schema and the data on the cloud by running a migration command.

In this article, we'll configure a new cloud database with Azure Cosmos DB API for MongoDB, migrate our data from Azure Database for PostgreSQL, and connect our app to the new MongoDB database. Then, we’ll run the Django app locally while connected to the Azure Cosmos DB database to ensure everything still works.

Azure Cosmos DB offers many advantages over a traditional database. It’s a globally distributed multi-model database natively supporting JSON-like documents, key-value stores, graphs, and column-family (tabular) data models. Developers benefit from Azure Cosmos DB's multiple APIs, which let existing drivers and tools for MongoDB, SQL, Cassandra, Gremlin (graph), and Azure Table storage connect to it.

Businesses benefit from Azure Cosmos DB’s high availability, throughput, consistency, and low-latency reads and writes. It also offers straightforward autoscaling and features to effectively handle disaster recovery.

The MongoDB API for Azure Cosmos DB automatically indexes all the data without needing schema and index management, making it easy to use Cosmos DB with the familiar MongoDB experience.

MongoDB and traditional relational databases make different trade-offs. MongoDB allows rich object models, secondary indexes, replication, high availability, native aggregation, and schema-less models. On the other hand, relational database management systems (RDBMS) like PostgreSQL excel at atomic transaction support, joins, and data security.

Get your application running by following this tutorial’s steps. Alternatively, download and open this GitHub repository folder to see what the code looks like at the end of this fifth article.

Upgrading the Project

We need to declare the package versions required for our new code to run. So, open the requirements.txt file. Replace its contents with the following:

# requirements.txt file

asgiref==3.5.0

Django==3.1.4

django-cors-headers==3.10.1

djangorestframework==3.11.0

djongo==1.3.6

gunicorn==20.0.4

pymongo==3.12.1

pytz==2019.3

sqlparse==0.2.4

django-cors-middleware==1.3.1

django-extensions==3.1.5

psycopg2==2.8.6

psycopg2-binary==2.8.6

PyJWT==1.4.2

six==1.10.0

Then create a new virtual environment (if you haven’t yet) using this command:

> python -m venv .venv

Next, activate the .venv virtual environment using this command:

> .venv\Scripts\activate

Then install the requirements using this command:

(.venv) > pip install -r requirements.txt

Creating a Cosmos DB MongoDB Account

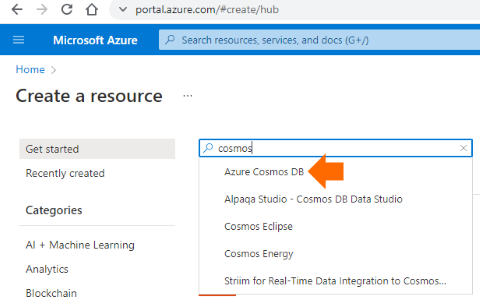

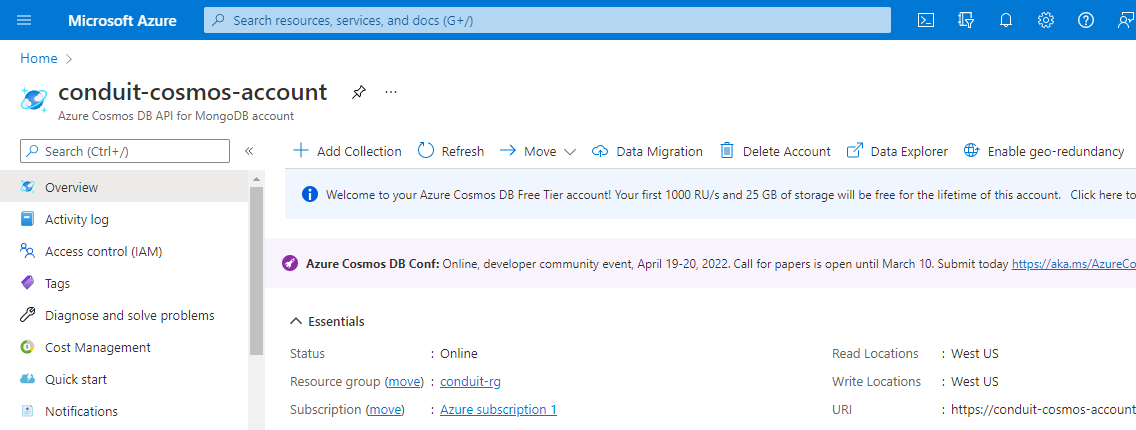

Sign in to the Azure portal in a new browser window. Then, search for “Azure Cosmos DB”:

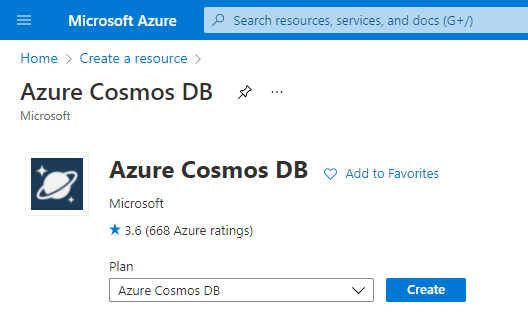

Select the Azure Cosmos DB plan and click Create.

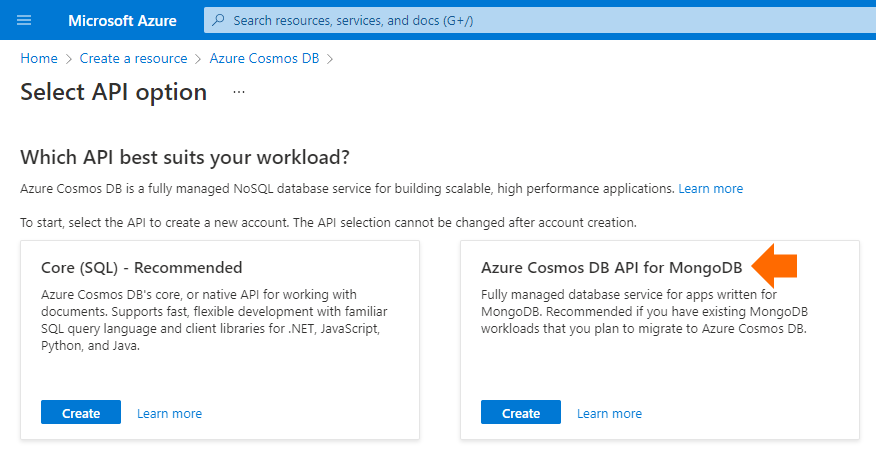

On the Select API option page, select Azure Cosmos DB API for MongoDB > Create.

The API determines the type of account to create. Select Azure Cosmos DB API for MongoDB because you’ll make a database and a series of collections that work with MongoDB.

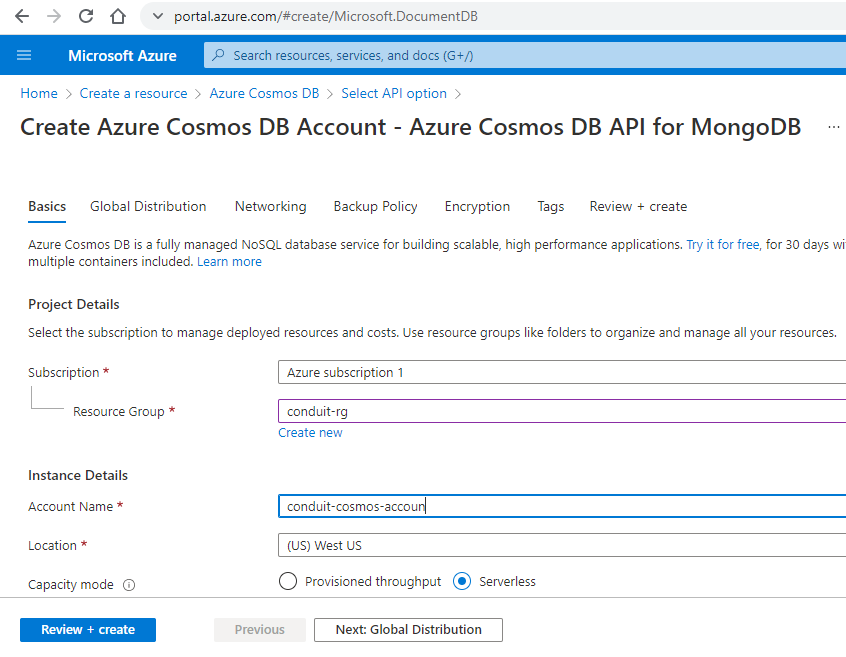

On the Create Azure Cosmos DB Account page, enter the settings for the new Azure Cosmos DB account, like the Resource Group and Account Name, then click Review + create.

Next, wait a few minutes until Azure creates the account, then click the Go to Resource link. The portal displays your new Cosmos DB account’s overview.

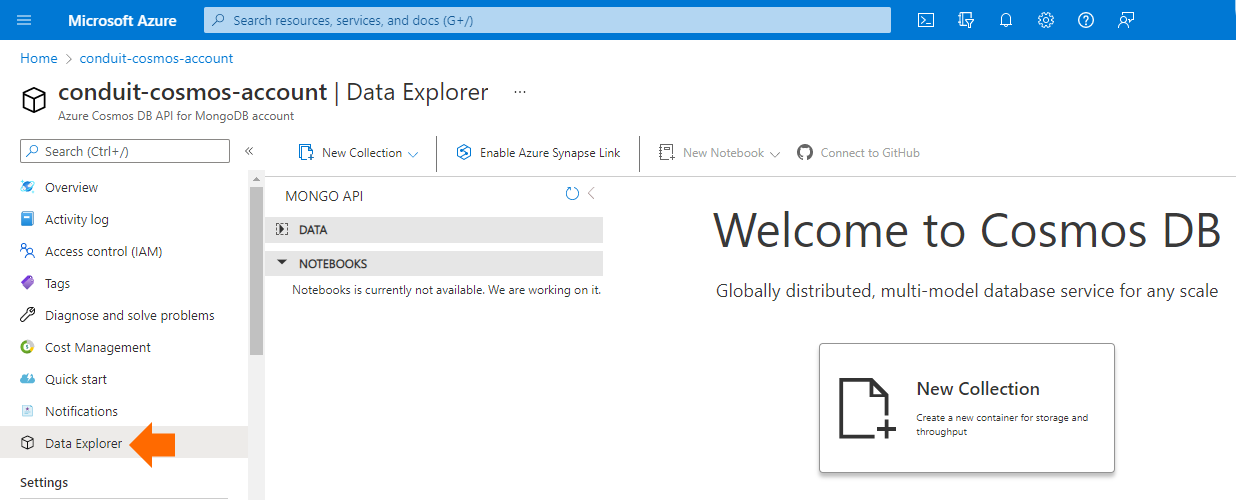

We still need to create the MongoDB database. So, click Data Explorer on the left menu:

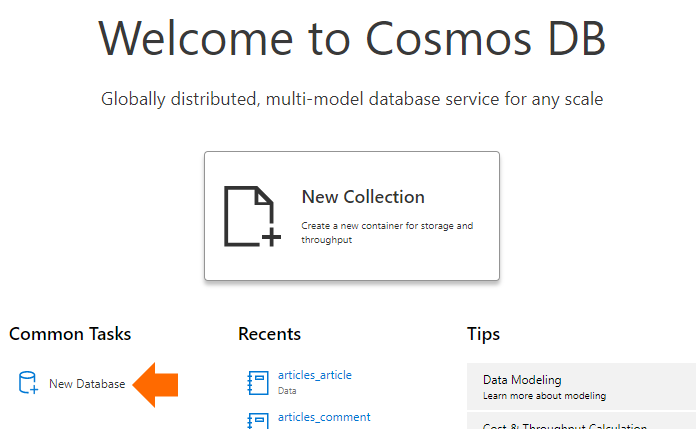

Then click the New Database link on the center panel:

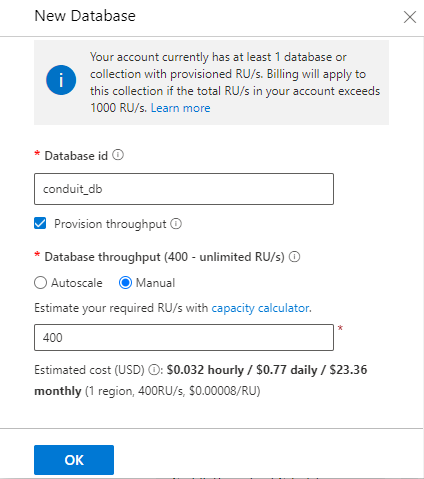

Name the database “conduit_db”. Configure the Database throughput as Manual, then click OK to save the database.

Connecting to a Cosmos DB Account and Applying Migrations

How challenging is it to change our Python application’s database configuration, which currently points to a relational database, to the new document-based MongoDB database? Surprisingly, it’s relatively easy when we have the right tools.

Fortunately, some clever people have created Djongo, a database mapper for Django applications connecting to MongoDB databases.

Djongo enables you to use MongoDB as a backend database for your Django project without changing the Django object-relational mapping (ORM). Djongo translates each SQL query string that Django generates into a MongoDB query document, greatly simplifying the process of replacing an RDBMS with MongoDB. As a result, most Django features, models, and other components work as-is.
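To make the idea of translating a SQL query into a MongoDB query document more concrete, here is a small conceptual sketch. It is not Djongo's actual code: the function name to_mongo_query and its arguments are hypothetical, and it only handles equality conditions and ordering.

```python
# Conceptual illustration only; this is NOT how Djongo is implemented.
# It shows the general shape of a SQL-to-MongoDB mapping for a trivial query.

def to_mongo_query(where_equals, order_by=()):
    """Map simple equality conditions and ORDER BY columns to PyMongo-style arguments.

    where_equals: dict of column -> value (SQL: WHERE column = value AND ...)
    order_by: column names; a leading "-" means descending (SQL: ORDER BY ... DESC)
    """
    filter_doc = dict(where_equals)
    sort_spec = [
        (column.lstrip("-"), -1 if column.startswith("-") else 1)
        for column in order_by
    ]
    return filter_doc, sort_spec


# SQL: SELECT * FROM articles_tag WHERE slug = 'python'
#      ORDER BY created_at DESC, updated_at DESC
filter_doc, sort_spec = to_mongo_query(
    {"slug": "python"}, order_by=["-created_at", "-updated_at"]
)
print(filter_doc)  # {'slug': 'python'}
print(sort_spec)   # [('created_at', -1), ('updated_at', -1)]
```

With PyMongo, a filter document and sort specification like these would typically be passed to collection.find(filter_doc).sort(sort_spec).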

Compared to traditional Django ORM, Djongo enables you to develop and evolve your app models quickly. Since MongoDB is a schemaless database, MongoDB doesn’t expect you to redefine the schema every time you redefine your model.

Also, with Djongo, you no longer need a database migration each time a model changes. Just define ENFORCE_SCHEMA: False in your database configuration. Djongo manages collections transparently for developers, as it knows how to emit PyMongo commands to create MongoDB collections implicitly.

Make sure you’re running in the virtual environment and install Djongo with the following command:

(.venv) > pip install djongo

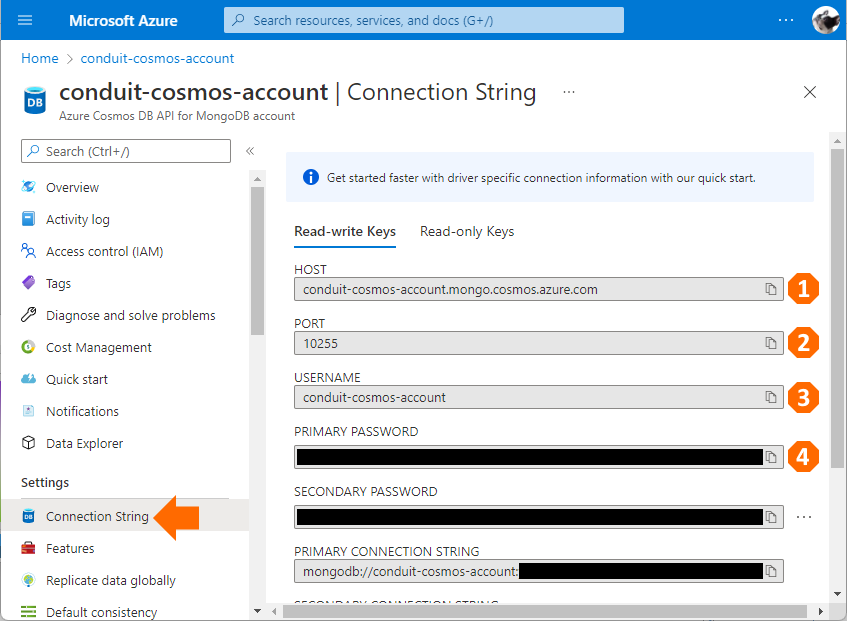

Now go back to the Azure Portal, click the Connection String menu, and copy the contents of these four fields from your account: HOST, PORT, USERNAME, and PRIMARY PASSWORD.

Next, edit the \conduit\settings.py file and replace the DATABASES constant with the settings below:

DATABASES = {

'default': {

'ENGINE': 'djongo',

'ENFORCE_SCHEMA': False,

'NAME': 'conduit_db',

'CLIENT': {

'host': '<<YOUR-ACCOUNT-HOST>>',

'port': 10255,

'username': '<<YOUR-ACCOUNT-USERNAME>>',

'password': '<<YOUR-ACCOUNT-PASSWORD>>',

'authMechanism': 'SCRAM-SHA-1',

'ssl': True,

'tlsAllowInvalidCertificates': True,

'retryWrites': False

}

}

}
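If you would rather not paste the account credentials into settings.py, one option is to read them from environment variables. The following is a minimal sketch under that assumption: the helper name cosmos_client_settings and the variable names COSMOS_HOST, COSMOS_PORT, COSMOS_USERNAME, and COSMOS_PASSWORD are hypothetical, not part of Djongo or Azure.

```python
import os


def cosmos_client_settings(env=os.environ):
    """Build the Djongo CLIENT dictionary from environment variables.

    The variable names are an assumption made for this sketch.
    A missing host, username, or password raises KeyError early.
    """
    return {
        "host": env["COSMOS_HOST"],
        "port": int(env.get("COSMOS_PORT", "10255")),
        "username": env["COSMOS_USERNAME"],
        "password": env["COSMOS_PASSWORD"],
        "authMechanism": "SCRAM-SHA-1",
        "ssl": True,
        # Mirrors the setting used in this article.
        "tlsAllowInvalidCertificates": True,
        "retryWrites": False,
    }


# Example with explicit values instead of the real environment:
example = cosmos_client_settings({
    "COSMOS_HOST": "example-host",
    "COSMOS_USERNAME": "example-user",
    "COSMOS_PASSWORD": "example-password",
})
print(example["port"])  # 10255
```

In settings.py, the 'CLIENT' entry of DATABASES could then be set to cosmos_client_settings().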

Then modify the CORS_ORIGIN_WHITELIST constant to include the http scheme in the localhost addresses:

CORS_ORIGIN_WHITELIST = (

'http://0.0.0.0:4000',

'http://localhost:4000',

'http://localhost:8080'

)

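Now apply the Django migrations against the new database:

(.venv) > python manage.py migrate

The output should end with lines like these:

Applying auth.0002_alter_permission_name_max_length... OK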

Applying auth.0003_alter_user_email_max_length... OK

Applying auth.0004_alter_user_username_opts... OK

Applying auth.0005_alter_user_last_login_null... OK

Applying auth.0006_require_contenttypes_0002... OK

Applying auth.0007_alter_validators_add_error_messages... OK

Applying auth.0008_alter_user_username_max_length... OK

Applying authentication.0001_initial... OK

Applying admin.0001_initial... OK

Applying admin.0002_logentry_remove_auto_add... OK

Applying admin.0003_logentry_add_action_flag_choices... OK

Applying profiles.0001_initial... OK

Applying articles.0001_initial... OK

Applying articles.0002_comment... OK

Applying articles.0003_auto_20160828_1656... OK

Applying auth.0009_alter_user_last_name_max_length... OK

Applying auth.0010_alter_group_name_max_length... OK

Applying auth.0011_update_proxy_permissions... OK

Applying auth.0012_alter_user_first_name_max_length... OK

Applying profiles.0002_profile_follows... OK

Applying profiles.0003_profile_favorites... OK

Applying sessions.0001_initial... OK

Exporting Data from Azure Database for PostgreSQL to CSV Files

We could just create an empty MongoDB database and start working without any data. However, remember that we've been working on the same PostgreSQL database for several articles, and we don't want to lose this data. In the real world, you would also need to migrate existing data from the PostgreSQL database to the MongoDB database. Let's do that now, step-by-step, but on a smaller scale.

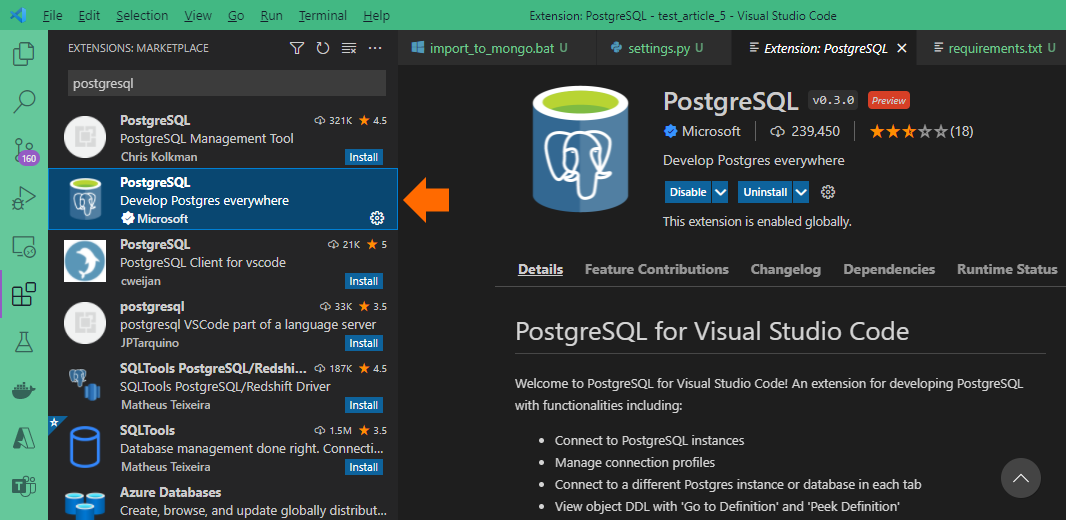

Open Visual Studio Code, click the Extensions tab, and look for PostgreSQL. Install Microsoft’s PostgreSQL extension for VS Code:

After installing the PostgreSQL extension, you’ll be able to connect to your Azure Database for PostgreSQL instance, run queries against the database, and save results as JSON, CSV, or Excel.

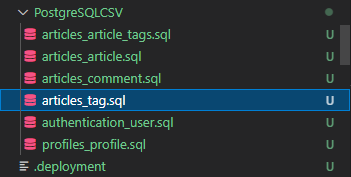

Now, open your project files and create a new folder named PostgreSQLCSV, then create the following SQL files with the respective contents:

PostgreSQLCSV\articles_tag.sql

SELECT

id as "id.int32()",

to_char(created_at, 'yyyy-mm-dd HH24:MI:SS') as "created_at.date_ms(yyyy-MM-dd H:mm:ss)",

to_char(updated_at, 'yyyy-mm-dd HH24:MI:SS') as "updated_at.date_ms(yyyy-MM-dd H:mm:ss)",

tag as "tag.string()",

slug as "slug.string()"

FROM articles_tag;

PostgreSQLCSV\authentication_user.sql

SELECT

id as "id.int32()",

password as "password.string()",

is_superuser as "is_superuser.boolean()",

username as "username.string()",

email as "email.string()",

is_active as "is_active.boolean()",

is_staff as "is_staff.boolean()",

to_char(created_at, 'yyyy-mm-dd HH24:MI:SS') as "created_at.date_ms(yyyy-MM-dd H:mm:ss)",

to_char(updated_at, 'yyyy-mm-dd HH24:MI:SS') as "updated_at.date_ms(yyyy-MM-dd H:mm:ss)"

FROM authentication_user;

PostgreSQLCSV\profiles_profile.sql

SELECT

id as "id.int32()",

to_char(created_at, 'yyyy-mm-dd HH24:MI:SS') as "created_at.date_ms(yyyy-MM-dd H:mm:ss)",

to_char(updated_at, 'yyyy-mm-dd HH24:MI:SS') as "updated_at.date_ms(yyyy-MM-dd H:mm:ss)",

bio as "bio.string()",

image as "image.string()",

user_id as "user_id.int32()"

FROM profiles_profile;

PostgreSQLCSV\articles_article.sql

SELECT

id as "id.int32()",

to_char(created_at, 'yyyy-mm-dd HH24:MI:SS') as "created_at.date_ms(yyyy-MM-dd H:mm:ss)",

to_char(updated_at, 'yyyy-mm-dd HH24:MI:SS') as "updated_at.date_ms(yyyy-MM-dd H:mm:ss)",

slug as "slug.string()",

title as "title.string()",

description as "description.string()",

body as "body.string()",

author_id as "author_id.int32()"

FROM articles_article;

PostgreSQLCSV\articles_article_tags.sql

SELECT

id as "id.int32()",

article_id as "article_id.int32()",

tag_id as "tag_id.int32()"

FROM articles_article_tags;

PostgreSQLCSV\articles_comment.sql

SELECT

id as "id.int32()",

to_char(created_at, 'yyyy-mm-dd HH24:MI:SS') as "created_at.date_ms(yyyy-MM-dd H:mm:ss)",

to_char(updated_at, 'yyyy-mm-dd HH24:MI:SS') as "updated_at.date_ms(yyyy-MM-dd H:mm:ss)",

body as "body.string()",

article_id as "article_id.int32()",

author_id as "author_id.int32()"

FROM articles_comment;

Each query targets a specific table from our PostgreSQL database. Note that the column names also include the data type. This naming format will help us later since we can provide the MongoDB import tool with these data types.
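Because all six queries follow the same naming pattern, they could also be generated rather than typed by hand. The sketch below shows one possible way; the helpers select_item and build_export_query are hypothetical names, and the two date patterns are copied from the queries above.

```python
# Patterns copied from the queries in this article.
DATE_SQL_FORMAT = "yyyy-mm-dd HH24:MI:SS"   # PostgreSQL to_char pattern
DATE_IMPORT_FORMAT = "yyyy-MM-dd H:mm:ss"   # pattern inside the date_ms(...) suffix


def select_item(column, kind):
    """Return one SELECT item whose alias carries the mongoimport type.

    kind is "int32", "string", "boolean", or "date".
    """
    if kind == "date":
        return (
            f"to_char({column}, '{DATE_SQL_FORMAT}') "
            f'as "{column}.date_ms({DATE_IMPORT_FORMAT})"'
        )
    return f'{column} as "{column}.{kind}()"'


def build_export_query(table, columns):
    """Build the full export query for a table from (column, kind) pairs."""
    items = ",\n".join(select_item(column, kind) for column, kind in columns)
    return f"SELECT\n{items}\nFROM {table};"


print(build_export_query(
    "articles_article_tags",
    [("id", "int32"), ("article_id", "int32"), ("tag_id", "int32")],
))
```

The printed query matches the articles_article_tags.sql file above, apart from the blank lines between items.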

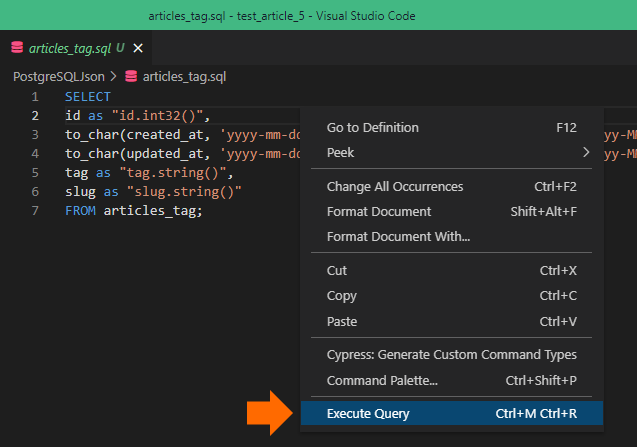

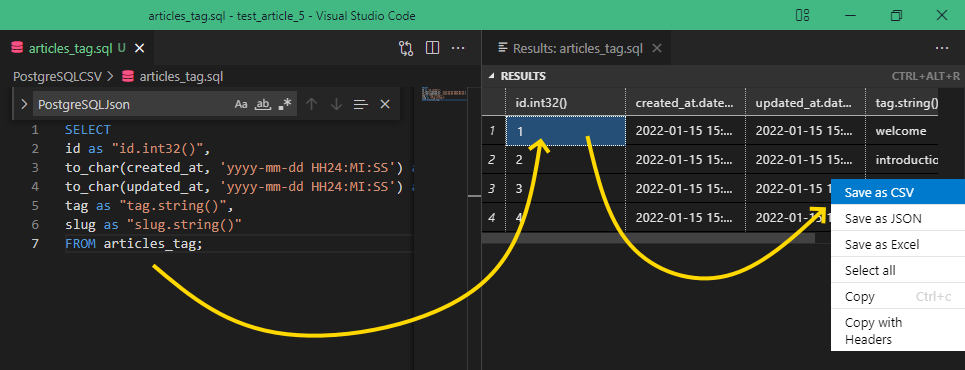

We’ll run each query above using the PostgreSQL extension for VS Code. Execute the following four steps for each SQL query:

- Locate and select the SQL query file inside the PostgreSQLCSV folder:

- Open the SQL file in the central panel, double-click the query, and click the Execute Query menu:

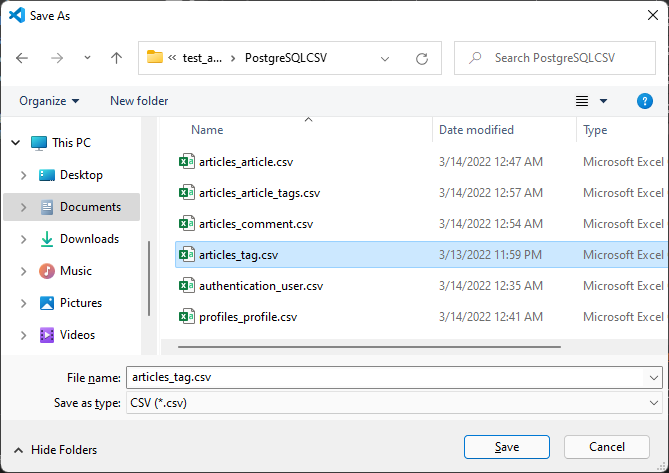

- You’ll notice a right window with the resulting table view. Right-click a cell within the table and click the Save as CSV menu option:

- Finally, save the file with the same name as the query, replacing the SQL extension with CSV (for example, articles_tag.sql becomes articles_tag.csv). Save the resulting file in the same PostgreSQLCSV folder.
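Before importing, it can be worth checking that each exported CSV file kept its typed column names in the header row. The following sketch does that with the standard library only; parse_typed_header is a hypothetical helper name, and the sample CSV text is made up for illustration.

```python
import csv
import io
import re

# Matches headers such as "id.int32()" or "created_at.date_ms(yyyy-MM-dd H:mm:ss)".
TYPED_COLUMN = re.compile(r"^(?P<name>\w+)\.(?P<type>\w+)\((?P<arg>.*)\)$")


def parse_typed_header(csv_text):
    """Return (column name, type) pairs from the first row of a CSV export.

    Raises ValueError if any column lacks a type suffix.
    """
    header = next(csv.reader(io.StringIO(csv_text)))
    parsed = []
    for column in header:
        match = TYPED_COLUMN.match(column)
        if match is None:
            raise ValueError(f"column {column!r} has no type suffix")
        parsed.append((match["name"], match["type"]))
    return parsed


# Made-up sample resembling articles_tag.csv:
sample = (
    "id.int32(),created_at.date_ms(yyyy-MM-dd H:mm:ss),tag.string()\n"
    "1,2021-01-01 10:00:00,python\n"
)
print(parse_typed_header(sample))
# [('id', 'int32'), ('created_at', 'date_ms'), ('tag', 'string')]
```

To check a real export, read the file's contents (for example with open(path, newline="").read()) and pass the text to parse_typed_header.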

Creating a Composite Index on an articles_tag Collection

Some queries in our Conduit Django app (such as those against the articles_tag collection) use an Order By clause on multiple fields within that collection. Our app won’t be able to perform such queries until we create a single composite index that covers those fields.

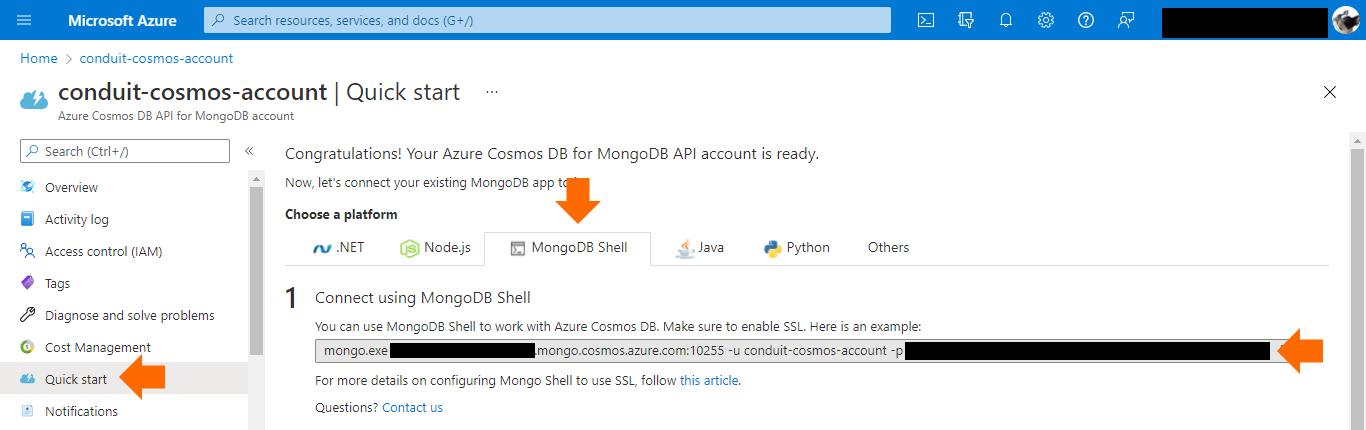

So, go to your Conduit Cosmos DB account and click the Quick start menu item on the left panel. Then, go to the MongoDB Shell tab and copy the line under Connect using MongoDB Shell:

Now paste that line to your terminal and run it:

> mongo.exe <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --ssl --sslAllowInvalidCertificates

Next, run the show databases command:

globaldb:PRIMARY> show databases

conduit_db 0.000GB

Then select the conduit_db database:

globaldb:PRIMARY> use conduit_db

switched to db conduit_db

Create a composite index on the articles_tag collection covering the created_at and updated_at fields:

globaldb:PRIMARY> db.articles_tag.createIndex({created_at:1,updated_at:1})

{

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 3,

"numIndexesAfter" : 4,

"ok" : 1

}
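The same index could also be created from Python with PyMongo, which is already listed in requirements.txt. This is a minimal sketch, assuming you have a PyMongo collection object for articles_tag; the helper names get_collection and ensure_composite_index are hypothetical.

```python
def get_collection(uri, db_name, collection_name):
    """Open a PyMongo collection from a connection string (imported lazily)."""
    from pymongo import MongoClient
    return MongoClient(uri)[db_name][collection_name]


def ensure_composite_index(collection, field_names):
    """Create one ascending composite index that covers all given fields.

    PyMongo's create_index accepts a list of (field, direction) pairs,
    where 1 means ascending. It returns the name of the index.
    """
    keys = [(name, 1) for name in field_names]
    return collection.create_index(keys)


# Usage, assuming a valid Cosmos DB connection string in `uri`:
#   tags = get_collection(uri, "conduit_db", "articles_tag")
#   ensure_composite_index(tags, ["created_at", "updated_at"])
```

Calling create_index again for an index that already exists with the same keys is generally harmless, so the helper can be run more than once.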

Importing CSV Files into a Cosmos DB MongoDB Database

Now it’s time to import the CSV files we previously exported from PostgreSQL into MongoDB collections. First, locate and install MongoDB Database Tools for your system.

Next, run the mongoimport command-line tool to convert the CSV files into MongoDB documents under your Cosmos DB account. Note that you must run mongoimport for each of the six files below:

articles_tag.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection articles_tag --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\articles_tag.csv --writeConcern="{w:0}"

authentication_user.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection authentication_user --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\authentication_user.csv --writeConcern="{w:0}"

profiles_profile.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection profiles_profile --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\profiles_profile.csv --writeConcern="{w:0}"

articles_article.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection articles_article --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\articles_article.csv --writeConcern="{w:0}"

articles_comment.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection articles_comment --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\articles_comment.csv --writeConcern="{w:0}"

articles_article_tags.csv

mongoimport --host <<YOUR-COSMOSDB-HOST>>:10255 -u <<YOUR-COSMOSDB-USER>> -p <<YOUR-COSMOSDB-PASSWORD>> --db conduit_db --collection articles_article_tags --ssl --type csv --headerline --columnsHaveTypes --file PostgreSQLCSV\articles_article_tags.csv --writeConcern="{w:0}"
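Since the six commands differ only in the collection name, they could be driven from a short Python script instead of being typed one by one. The sketch below builds the same argument list as the commands above and passes it to mongoimport through subprocess; the function names mongoimport_args and import_all are hypothetical, and the script assumes mongoimport is on your PATH.

```python
import os
import subprocess

# Same collections and order as the commands in this article.
COLLECTIONS = [
    "articles_tag",
    "authentication_user",
    "profiles_profile",
    "articles_article",
    "articles_comment",
    "articles_article_tags",
]


def mongoimport_args(host, user, password, collection, folder="PostgreSQLCSV"):
    """Build the mongoimport argument list for one CSV file."""
    return [
        "mongoimport",
        "--host", f"{host}:10255",
        "-u", user,
        "-p", password,
        "--db", "conduit_db",
        "--collection", collection,
        "--ssl",
        "--type", "csv",
        "--headerline",
        "--columnsHaveTypes",
        "--file", os.path.join(folder, f"{collection}.csv"),
        # No shell is involved, so the quotes around {w:0} are not needed here.
        "--writeConcern={w:0}",
    ]


def import_all(host, user, password):
    """Run mongoimport once per collection; stop at the first failure."""
    for collection in COLLECTIONS:
        subprocess.run(mongoimport_args(host, user, password, collection), check=True)


# Usage, with your own account values:
#   import_all("<<YOUR-COSMOSDB-HOST>>", "<<YOUR-COSMOSDB-USER>>", "<<YOUR-COSMOSDB-PASSWORD>>")
```

Passing the arguments as a list avoids shell quoting issues with the password and the write concern value.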

Running the Python App Locally

Open your terminal. Then, run this command in your virtual environment:

(.venv) PS > python manage.py runserver

Django version 3.1.4, using settings 'conduit.settings'

Starting development server at http://127.0.0.1:8000/

Quit the server with CTRL-BREAK.

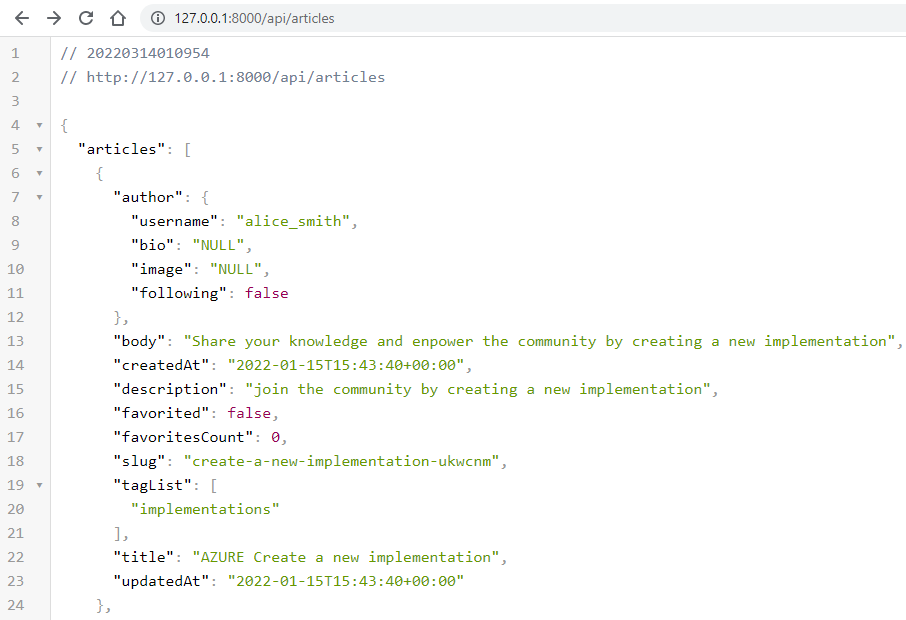

Open your browser now. Go to http://127.0.0.1:8000/api/articles to check the articles coming from the MongoDB database hosted in your Cosmos DB account:

Finally, open http://127.0.0.1:8000/api/tags to get the tag list.
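The same two checks can be scripted instead of done in the browser. This is a minimal sketch using only the standard library; fetch_json is a hypothetical helper, and it makes no assumption about the shape of the JSON that the app returns.

```python
import json
from urllib.request import urlopen


def fetch_json(url, opener=urlopen):
    """Fetch a URL and decode the response body as JSON.

    `opener` is injectable so the function can be exercised without a server.
    """
    with opener(url) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage, while `python manage.py runserver` is running:
#   print(fetch_json("http://127.0.0.1:8000/api/articles"))
#   print(fetch_json("http://127.0.0.1:8000/api/tags"))
```

If either call raises an HTTP or connection error, the development server output in the terminal is usually the quickest place to look for the cause.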

Next Steps

In this article, we modified our Python app to use a MongoDB database hosted in a new Azure Cosmos DB account.

We started by creating a new Azure Cosmos DB API for MongoDB account. Then we installed Djongo, which maps Django models to MongoDB documents so that we don't have to write PyMongo code by hand. With Djongo, replacing the relational database came down to adjusting the app’s database configuration. Finally, we exported the PostgreSQL data to CSV files and imported them into MongoDB collections with mongoimport.

So far, we’ve explored how to modernize an app by moving its data and code to the cloud. In the final article of this series, we’ll discuss how to start making the app more cloud-native. We’ll demonstrate how to begin moving the legacy app’s functionality into Azure function-based microservices. We’ll pick some existing functionality from the application and show how to move it into an Azure Function written in Python. Continue to the following article to learn how.

To learn more about how to build and deploy your Python apps in the cloud, check out Python App Development - Python on Azure.