In the seventh part of this tutorial series on developing PHP on Docker, we will set up a CI (Continuous Integration) pipeline to run code quality tools and tests on Github Actions and Gitlab Pipelines.

All code samples are publicly available in my Docker PHP Tutorial repository on github.

You can find the branch for this tutorial at part-7-ci-pipeline-docker-php-gitlab-github.

Published Parts of the Docker PHP Tutorial

If you want to follow along, please subscribe to the RSS feed or via email to get automatic notifications when the next part comes out. :)

Introduction

CI is short for Continuous Integration and to me mostly means running the code quality tools and tests of a codebase in an isolated environment (preferably automatically). This is particularly important when working in a team, because the CI system acts as the final gatekeeper before features or bugfixes are merged into the main branch.

I initially learned about CI systems when I dipped my toes into the open source water. Back in the day, I used Travis CI for my own projects and replaced it with Github Actions at some point. At ABOUT YOU, we started out with a self-hosted Jenkins server and then moved on to Gitlab CI as a fully managed solution (though we use custom runners).

Recommended Reading

This tutorial builds on top of the previous parts. I'll do my best to cross-reference the corresponding articles when necessary, but I would still recommend doing some upfront reading on:

And as a nice-to-know:

Approach

In this tutorial, I'm going to explain how to make our existing docker setup work with Github Actions and Gitlab CI/CD Pipelines. As I'm a big fan of a "progressive enhancement" approach, we will ensure that all necessary steps can be performed locally through make. This has the additional benefit of keeping a single source of truth (the Makefile) which will come in handy when we set up the CI system on two different providers (Github and Gitlab).

The general process will look very similar to the one for local development:

- build the docker setup

- start the docker setup

- run the qa tools

- run the tests

You can see the final results in the CI setup section, including the concrete yml files and links to the repositories, see:

On a code level, we will treat CI as an environment, configured through the env variable ENV. So far we only used ENV=local and we will extend that to also use ENV=ci. The necessary changes are explained after the concrete CI setup instructions in the sections:

Try It Yourself

To get a feeling for what's going on, you can start by executing the local CI run:

git checkout part-7-ci-pipeline-docker-php-gitlab-github

# Initialize make

make make-init

# Execute the local CI run

bash .local-ci.sh

This should give you a similar output as presented in the Execution example.

CI Setup

General CI Notes

Initialize make for CI

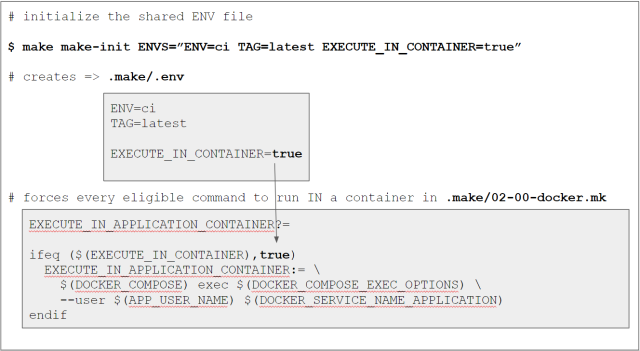

As a very first step, we need to "configure" the codebase to operate for the ci environment. This is done through the make-init target as explained later in more detail in the Makefile changes section via:

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

$ make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

Created a local .make/.env file
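To make the effect of the ENVS argument more tangible, here is a minimal sketch of what such an initialization could do. I'm assuming that each KEY=value pair simply ends up on its own line in the env file; the function name write_env_file is hypothetical and not part of the repository, the actual logic lives in the Makefile.

```shell
# Hypothetical helper (not part of the repository): write every KEY=value
# pair of a space-separated string to its own line of an env file.
write_env_file() {
    local envs="$1"      # e.g. "ENV=ci TAG=latest"
    local target="$2"    # e.g. ".make/.env"
    local pairs pair

    # Split the string on whitespace into an array
    read -r -a pairs <<< "$envs"

    mkdir -p "$(dirname "$target")"
    : > "$target"        # create or truncate the file

    for pair in "${pairs[@]}"; do
        printf '%s\n' "$pair" >> "$target"
    done

    echo "Created a local $target file"
}
```

It could be called as write_env_file "ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true" .make/.env, which would leave three lines in the file.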

ENV=ci ensures that we:

TAG=latest is just a simplification for now because we don't do anything with the images yet. In an upcoming tutorial, we will push them to a container registry for later usage in production deployments and then set the TAG to something more meaningful (like the build number).

EXECUTE_IN_CONTAINER=true forces every make command that uses a RUN_IN_*_CONTAINER setup to run in a container. This is important, because the Gitlab runner will actually run in a docker container itself. Without the explicit setting, this would cause any affected target to omit the $(DOCKER_COMPOSER) exec prefix.

GPG_PASSWORD=12345678 is the password for the secret gpg key as mentioned in Add a password-protected secret gpg key.
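The decision behind EXECUTE_IN_CONTAINER can be sketched in plain bash. This is only an illustration under my own assumptions: the function name command_prefix is hypothetical, and I'm assuming that an unset flag falls back to "only use the exec prefix when we are not already inside a container". The real logic lives in the Makefile.

```shell
# Hypothetical sketch (not the actual Makefile logic): decide which prefix
# a command needs in order to be executed in the given docker service.
command_prefix() {
    local execute_in_container="$1"   # value of EXECUTE_IN_CONTAINER, may be empty
    local inside_container="$2"       # "true" if the caller already runs in a container
    local service="$3"                # name of the docker compose service

    # Assumption: without an explicit setting, fall back to auto-detection
    if [ -z "$execute_in_container" ]; then
        if [ "$inside_container" = "true" ]; then
            execute_in_container="false"
        else
            execute_in_container="true"
        fi
    fi

    if [ "$execute_in_container" = "true" ]; then
        echo "docker compose exec -T $service"
    else
        echo ""
    fi
}
```

On a runner that is itself a container, command_prefix "" "true" application prints an empty prefix, whereas command_prefix "true" "true" application still yields the docker compose exec prefix. That second case is the behavior we want for CI.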

wait-for-service.sh

I'll explain the "container is up and running but the underlying service is not" problem for the mysql service and how we can solve it with a health check later in this article at Adding a health check for mysql. On purpose, we don't want docker compose to take care of the waiting because we can make "better use of the waiting time" and will instead implement it ourselves with a simple bash script located at .docker/scripts/wait-for-service.sh:

name=$1
max=$2
interval=$3

# Print a usage hint and abort if no service name was given
if [ -z "$name" ]; then
    echo "Usage example: bash wait-for-service.sh mysql 5 1"
    exit 1
fi

# Defaults: check up to 30 times, 1 second apart
[ -z "$max" ] && max=30
[ -z "$interval" ] && interval=1

echo "Waiting for service '$name' to become healthy, checking every $interval second(s) for max. $max times"

i=0
while true; do
    i=$((i + 1))
    echo "[$i/$max] ..."
    # Ask docker for the health status of the container matching $name
    status=$(docker inspect --format "{{json .State.Health.Status }}" "$(docker ps --filter name="$name" -q)")
    if echo "$status" | grep -q '"healthy"'; then
        echo "SUCCESS"
        break
    fi
    if [ "$i" -ge "$max" ]; then
        echo "FAIL"
        exit 1
    fi
    sleep "$interval"
done

This script waits for a docker service (passed as $name) to become "healthy" by checking the .State.Health.Status info of the docker inspect command.

CAUTION: The script uses $(docker ps --filter name="$name" -q) to determine the id of the container, i.e., it will "match" all running containers against the $name. This would fail if there is more than one matching container, so you must ensure that $name is specific enough to identify one single container uniquely.
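If you want to guard against the "more than one matching container" case explicitly, a small check like the following could be put in front of the docker inspect call. This is a sketch and not part of the original script; the function name require_single_container is made up for illustration.

```shell
# Hypothetical helper: read container ids (one per line) from stdin and
# print the id only if exactly one container matched.
require_single_container() {
    local name="$1"
    local ids=()
    local id

    while IFS= read -r id || [ -n "$id" ]; do
        if [ -n "$id" ]; then
            ids+=("$id")
        fi
    done

    if [ "${#ids[@]}" -ne 1 ]; then
        echo "Expected exactly 1 container matching '$name', found ${#ids[@]}" >&2
        return 1
    fi

    echo "${ids[0]}"
}

# Intended usage (requires a running docker daemon):
# container_id=$(docker ps --filter name="$name" -q | require_single_container "$name") || exit 1
```

The helper only inspects the list of ids it receives, so it can be tried out without docker by piping in a few fake ids.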

The script will check up to $max times at an interval of $interval seconds. See these answers on the "How do I write a retry logic in script to keep retrying to run it up to 5 times?" question for the implementation of the retry logic. To check the health of the mysql service up to 5 times with 1 second between each try, it can be called via:

bash wait-for-service.sh mysql 5 1

Output:

$ bash wait-for-service.sh mysql 5 1

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 5 times

[1/5] ...

[2/5] ...

[3/5] ...

[4/5] ...

[5/5] ...

FAIL

# OR

$ bash wait-for-service.sh mysql 5 1

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 5 times

[1/5] ...

[2/5] ...

SUCCESS
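The retry logic itself is independent of docker. As a minimal sketch (the function name retry is my own choice and does not exist in the repository), it can be extracted into a generic helper that runs an arbitrary command up to a maximum number of times:

```shell
# Hypothetical generic helper: run a command up to $max times and
# sleep $interval seconds between the attempts.
retry() {
    local max="$1"
    local interval="$2"
    shift 2               # everything that is left is the command to run
    local i=0

    while true; do
        i=$((i + 1))
        echo "[$i/$max] ..."
        if "$@"; then
            echo "SUCCESS"
            return 0
        fi
        if [ "$i" -ge "$max" ]; then
            echo "FAIL"
            return 1
        fi
        sleep "$interval"
    done
}
```

A call like retry 5 1 some_check would then produce the same kind of output as shown above, where some_check stands for any command that exits with 0 once the service is ready.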

The problem of "container dependencies" isn't new and there are already some existing solutions out there, e.g.:

But unfortunately, all of them operate by checking the availability of a host:port combination and in the case of mysql that didn't help, because the container was up, the port was reachable but the mysql service in the container was not.

Setup for a "local" CI run

As mentioned under Approach, we want to be able to perform all necessary steps locally and I created a corresponding script at .local-ci.sh:

set -e

make docker-down ENV=ci || true

start_total=$(date +%s)

cp secret-protected.gpg.example secret.gpg

docker version

docker compose version

cat /etc/*-release || true

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

start_docker_build=$(date +%s)

make docker-build

end_docker_build=$(date +%s)

mkdir -p .build && chmod 777 .build

start_docker_up=$(date +%s)

make docker-up

end_docker_up=$(date +%s)

make gpg-init

make secret-decrypt-with-password

start_qa=$(date +%s)

make qa || FAILED=true

end_qa=$(date +%s)

start_wait_for_containers=$(date +%s)

bash .docker/scripts/wait-for-service.sh mysql 30 1

end_wait_for_containers=$(date +%s)

start_test=$(date +%s)

make test || FAILED=true

end_test=$(date +%s)

end_total=$(date +%s)

echo "Build docker: " `expr $end_docker_build - $start_docker_build`

echo "Start docker: " `expr $end_docker_up - $start_docker_up `

echo "QA: " `expr $end_qa - $start_qa`

echo "Wait for containers: " `expr $end_wait_for_containers - $start_wait_for_containers`

echo "Tests: " `expr $end_test - $start_test`

echo "---------------------"

echo "Total: " `expr $end_total - $start_total`

make make-init

make docker-down ENV=ci || true

if [ "$FAILED" == "true" ]; then echo "FAILED"; exit 1; fi

echo "SUCCESS"
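The script above takes a timestamp before and after each step and subtracts them at the end. The same idea can be sketched as a small wrapper function. Note that timed is a hypothetical name, that it uses bash arithmetic instead of expr, and that it prints the runtime right after the command instead of collecting all timers at the end:

```shell
# Hypothetical helper: run a command, print how many seconds it took
# and pass on the exit code of the command.
timed() {
    local label="$1"
    shift
    local start end status

    start=$(date +%s)
    "$@"
    status=$?
    end=$(date +%s)

    echo "$label: $((end - start))"
    return "$status"
}
```

With this helper, a step could be written as timed "Build docker" make docker-build.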

Run Details

- As a preparation step, we first ensure that no outdated ci containers are running (this is only necessary locally, because runners on a remote CI system will start "from scratch"):

make docker-down ENV=ci || true

- We take some time measurements to understand how long certain parts take via:

start_total=$(date +%s)

to store the current timestamp

- We need the secret gpg key in order to decrypt the secrets and simply copy the password-protected example key (in the actual CI systems, the key will be configured as a secret value that is injected in the run):

# STORE GPG KEY

cp secret-protected.gpg.example secret.gpg

- I like printing some debugging info in order to understand which exact circumstances we're dealing with (tbh, this is mostly relevant when setting the CI system up or making modifications to it):

# DEBUG

docker version

docker compose version

cat /etc/*-release || true

- For the docker setup, we start with initializing the environment for ci:

# SETUP DOCKER

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

then build the docker setup:

make docker-build

and finally add a .build/ directory to collect the build artifacts:

mkdir -p .build && chmod 777 .build

- Then, the docker setup is started:

# START DOCKER

make docker-up

and gpg is initialized so that the secrets can be decrypted:

make gpg-init

make secret-decrypt-with-password

We don't need to pass a GPG_PASSWORD to secret-decrypt-with-password because we have set it up in the previous step as a default value via make-init.

- Once the application container is running, the qa tools are run by invoking the qa make target.

# QA

make qa || FAILED=true

The || FAILED=true part makes sure that the script will not be terminated if the checks fail. Instead, the fact that a failure happened is "recorded" in the FAILED variable so that we can evaluate it at the end. We don't want the script to stop here because we want the following steps to be executed as well (e.g., the tests).

- To mitigate the "mysql is not ready" problem, we will now apply the wait-for-service.sh script:

# WAIT FOR CONTAINERS

bash .docker/scripts/wait-for-service.sh mysql 30 1

- Once mysql is ready, we can execute the tests via the test make target and apply the same || FAILED=true workaround as for the qa tools:

# TEST

make test || FAILED=true

- Finally, all the timers are printed:

# RUNTIMES

echo "Build docker: " `expr $end_docker_build - $start_docker_build`

echo "Start docker: " `expr $end_docker_up - $start_docker_up `

echo "QA: " `expr $end_qa - $start_qa`

echo "Wait for containers: " `expr $end_wait_for_containers - $start_wait_for_containers`

echo "Tests: " `expr $end_test - $start_test`

echo "---------------------"

echo "Total: " `expr $end_total - $start_total`

- We clean up the resources (this is only necessary when running locally, because the runner of a CI system would be shut down anyway):

# CLEANUP

make make-init

make docker-down ENV=ci || true

- And finally, evaluate if any error occurred when running the qa tools or the tests:

# EVALUATE RESULTS

if [ "$FAILED" == "true" ]; then echo "FAILED"; exit 1; fi

echo "SUCCESS"
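The "record the failure and evaluate it at the end" pattern from the steps above can be tried out in isolation. In the following sketch, run_qa and run_test are hypothetical stand-ins for make qa and make test, with run_qa simulating a failing run:

```shell
# Hypothetical stand-ins for "make qa" and "make test"
run_qa()   { echo "qa tools ran"; return 1; }   # simulates failing qa tools
run_test() { echo "tests ran";    return 0; }   # simulates passing tests

run_pipeline() {
    local failed=""

    # A failing command on the left side of "||" does not abort the
    # script (not even with "set -e"), the failure is only recorded.
    run_qa   || failed="true"
    run_test || failed="true"

    if [ "$failed" = "true" ]; then
        echo "FAILED"
        return 1
    fi
    echo "SUCCESS"
}
```

Calling run_pipeline still executes the tests even though the qa step failed, and only afterwards reports FAILED with a non-zero exit code.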

Execution Example

Executing the script via:

bash .local-ci.sh

yields the following (shortened) output:

$ bash .local-ci.sh

Container dofroscra_ci-redis-1 Stopping

# Stopping all other `ci` containers ...

# ...

Client:

Cloud integration: v1.0.22

Version: 20.10.13

# Print more debugging info ...

# ...

Created a local .make/.env file

ENV=ci TAG=latest DOCKER_REGISTRY=docker.io DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_ci --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose-php-base.yml build php-base

#1 [internal] load build definition from Dockerfile

# Output from building the docker containers

# ...

ENV=ci TAG=latest DOCKER_REGISTRY=docker.io DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_ci --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose.local.ci.yml -f ./.docker/docker-compose/docker-compose.ci.yml up -d

Network dofroscra_ci_network Creating

# Starting all `ci` containers ...

# ...

"C:/Program Files/Git/mingw64/bin/make" -s gpg-import GPG_KEY_FILES="secret.gpg"

gpg: directory '/home/application/.gnupg' created

gpg: keybox '/home/application/.gnupg/pubring.kbx' created

gpg: /home/application/.gnupg/trustdb.gpg: trustdb created

gpg: key D7A860BBB91B60C7: public key "Alice Doe protected

<alice.protected@example.com>" imported

# Output of importing the secret and public gpg keys

# ...

"C:/Program Files/Git/mingw64/bin/make" -s git-secret ARGS="reveal -f -p 12345678"

git-secret: done. 1 of 1 files are revealed.

"C:/Program Files/Git/mingw64/bin/make" -j 8 -k --no-print-directory

--output-sync=target qa-exec NO_PROGRESS=true

phplint done took 4s

phpcs done took 4s

phpstan done took 8s

composer-require-checker done took 8s

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 30 times

[1/30] ...

SUCCESS

PHPUnit 9.5.19 #StandWithUkraine

........ 8 / 8 (100%)

Time: 00:03.077, Memory: 28.00 MB

OK (8 tests, 15 assertions)

Build docker: 12

Start docker: 2

QA: 9

Wait for containers: 3

Tests: 5

---------------------

Total: 46

Created a local .make/.env file

Container dofroscra_ci-application-1 Stopping

Container dofroscra_ci-mysql-1 Stopping

# Stopping all other `ci` containers ...

# ...

SUCCESS

Setup for Github Actions

If you are completely new to Github Actions, I recommend starting with the official Quickstart Guide for GitHub Actions and the Understanding GitHub Actions article. In short:

- Github Actions are based on so-called Workflows

- Workflows are yaml files that live in the special .github/workflows directory in the repository

- a Workflow can contain multiple Jobs

- each Job consists of a series of Steps

- each Step has either a run: element that represents a command that is executed by a new shell, or a uses: element that references a reusable action

The Workflow File

Github Actions are triggered automatically based on the files in the .github/workflows directory. I have added the file .github/workflows/ci.yml with the following content:

name: CI build and test

on:
  # automatically run for pull request and for pushes to branch
  # "part-7-ci-pipeline-docker-php-gitlab-github"
  # @see https://stackoverflow.com/a/58142412/413531
  push:
    branches:
      - part-7-ci-pipeline-docker-php-gitlab-github
  pull_request: {}
  # enable to trigger the action manually
  # @see https://github.blog/changelog/2020-07-06-github-actions-manual-triggers-with-workflow_dispatch/
  # CAUTION: there is a known bug that makes the "button to trigger the run"
  # not show up
  # @see https://github.community/t/workflow-dispatch-workflow-not-showing-in-actions-tab/130088/29
  workflow_dispatch: {}

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1

      - name: start timer
        run: |
          echo "START_TOTAL=$(date +%s)" > $GITHUB_ENV

      - name: STORE GPG KEY
        run: |
          # Note: make sure to wrap the secret in double quotes (")
          echo "${{ secrets.GPG_KEY }}" > ./secret.gpg

      - name: SETUP TOOLS
        run: |
          DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}
          # install docker compose
          # @see https://docs.docker.com/compose/cli-command/#install-on-linux
          # @see https://github.com/docker/compose/issues/8630#issuecomment-1073166114
          mkdir -p $DOCKER_CONFIG/cli-plugins
          curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-$(uname -m) -o $DOCKER_CONFIG/cli-plugins/docker-compose
          chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

      - name: DEBUG
        run: |
          docker compose version
          docker --version
          cat /etc/*-release

      - name: SETUP DOCKER
        run: |
          make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=${{ secrets.GPG_PASSWORD }}"
          make docker-build
          mkdir .build && chmod 777 .build

      - name: START DOCKER
        run: |
          make docker-up
          make gpg-init
          make secret-decrypt-with-password

      - name: QA
        run: |
          # Run the tests and qa tools but only store the error
          # instead of failing immediately
          # @see https://stackoverflow.com/a/59200738/413531
          make qa || echo "FAILED=qa" >> $GITHUB_ENV

      - name: WAIT FOR CONTAINERS
        run: |
          # We need to wait until mysql is available.
          bash .docker/scripts/wait-for-service.sh mysql 30 1

      - name: TEST
        run: |
          make test || echo "FAILED=test $FAILED" >> $GITHUB_ENV

      - name: RUNTIMES
        run: |
          echo `expr $(date +%s) - $START_TOTAL`

      - name: EVALUATE
        run: |
          # Check if $FAILED is NOT empty
          if [ ! -z "$FAILED" ]; then echo "Failed at $FAILED" && exit 1; fi

      - name: upload build artifacts
        uses: actions/upload-artifact@v3
        with:
          name: build-artifacts
          path: ./.build

The steps are essentially the same as explained before at Run details for the local run. Some additional notes:

- I want the Action to be triggered automatically only when I push to branch part-7-ci-pipeline-docker-php-gitlab-github OR when a pull request is created (via pull_request). In addition, I want to be able to trigger the Action manually on any branch (via workflow_dispatch).

on:
  push:
    branches:
      - part-7-ci-pipeline-docker-php-gitlab-github
  pull_request: {}
  workflow_dispatch: {}

For a real project, I would let the action only run automatically on long-living branches like main or develop. The manual trigger is helpful if you just want to test your current work without putting it up for review. CAUTION: There is a known issue that "hides" the "Trigger workflow" button to trigger the action manually.

- A new shell is started for each run: instruction, thus we must store our timer in the "global" environment by writing it to the file referenced by $GITHUB_ENV:

- name: start timer
  run: |
    echo "START_TOTAL=$(date +%s)" > $GITHUB_ENV

This will be the only timer we use, because the job uses multiple steps that are timed automatically - so we don't need to take timestamps manually:

- The gpg key is configured as an encrypted secret named GPG_KEY and is stored in ./secret.gpg. The value is the content of the secret-protected.gpg.example file:

- name: STORE GPG KEY
  run: |
    echo "${{ secrets.GPG_KEY }}" > ./secret.gpg

Secrets are configured in the Github repository under Settings > Secrets > Actions at:

https://github.com/$user/$repository/settings/secrets/actions

e.g.

https://github.com/paslandau/docker-php-tutorial/settings/secrets/actions

- The ubuntu-latest image doesn't contain the docker compose plugin, thus we need to install it manually:

- name: SETUP TOOLS
  run: |
    DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}
    mkdir -p $DOCKER_CONFIG/cli-plugins
    curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-$(uname -m) -o $DOCKER_CONFIG/cli-plugins/docker-compose
    chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

- For the make initialization, we need the second secret named GPG_PASSWORD - which is configured as 12345678 in our case, see Add a password-protected secret gpg key:

- name: SETUP DOCKER
  run: |
    make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=${{ secrets.GPG_PASSWORD }}"

- Because the runner will be shut down after the run, we need to move the build artifacts to a permanent location, using the actions/upload-artifact@v3 action:

- name: upload build artifacts
  uses: actions/upload-artifact@v3
  with:
    name: build-artifacts
    path: ./.build

You can download the artifacts in the Run overview UI:
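To get a better feeling for how values "survive" between steps, the $GITHUB_ENV mechanism can be simulated locally. The following is only a rough sketch under my own assumptions: run_step and github_env are hypothetical names, each step is simulated by a fresh bash process, and the KEY=value lines of the file are loaded before the step runs. The sketch uses >> throughout so that entries from earlier steps are kept.

```shell
# Stand-in for the file that Github provides via $GITHUB_ENV
github_env="$(mktemp)"

# Hypothetical helper: run a snippet in a fresh shell, similar to the
# way each "run:" instruction is executed in its own shell.
run_step() {
    GITHUB_ENV="$github_env" bash -c '
        # Load the KEY=value lines written by earlier steps
        while IFS="=" read -r key value; do
            if [ -n "$key" ]; then
                export "$key=$value"
            fi
        done < "$GITHUB_ENV"
        eval "$1"
    ' _ "$1"
}

# Simulated steps: "false" stands in for a failing "make qa" / "make test"
run_step 'echo "START_TOTAL=$(date +%s)" >> "$GITHUB_ENV"'
run_step 'false || echo "FAILED=qa" >> "$GITHUB_ENV"'
run_step 'false || echo "FAILED=test $FAILED" >> "$GITHUB_ENV"'
run_step 'echo "Failed at $FAILED"'   # prints: Failed at test qa
```

The last step sees FAILED=test qa, because the later line in the file overrides the earlier one, while the value written by the QA step was already available when the TEST step ran.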

Setup for Gitlab Pipelines

If you are completely new to Gitlab Pipelines, I recommend starting with the official Get started with GitLab CI/CD guide. In short:

- the core concept of Gitlab Pipelines is the Pipeline

- it is defined in the yaml file .gitlab-ci.yml that lives in the root of the repository

- a Pipeline can contain multiple Stages

- each Stage consists of a series of Jobs

- each Job contains a script section

  - the script section consists of a series of shell commands

Due to technical constraints, this article is capped at 40000 characters. Read the full content at CI Pipelines for dockerized PHP Apps with Github & Gitlab [Tutorial Part 7].