Here we take the data returned by Form Recognizer and save it to Dataverse so the Power App from the first article in the series can use it, and save it to an Azure SQL database so other apps and services and work with it.

In the previous two articles, we’ve set up a Power Apps application to upload images to a Dataverse database. Using Azure Event Hub, Functions, and Form Recognizer, we extracted text from images using OCR. Setting up AI to help the accounting department save time was painless.

In this final article of the three-part series, we'll write the extracted data to Dataverse to use in our Power Apps application. We’ll also send the data to a SQL database to be available for other applications across the organization.

Creating a Dataverse Details Table

Before we can write the data from Form Recognizer to Dataverse, we need to create a new table to store our extracted data. So, go to Dataverse and create a new table. Name it something like "FormDetail" and leave all other defaults.

When the table is ready, add two additional columns. Name the first column "TextData," give it the Data type "Text," make it required, and edit the length to be 1,000 in Advanced options.

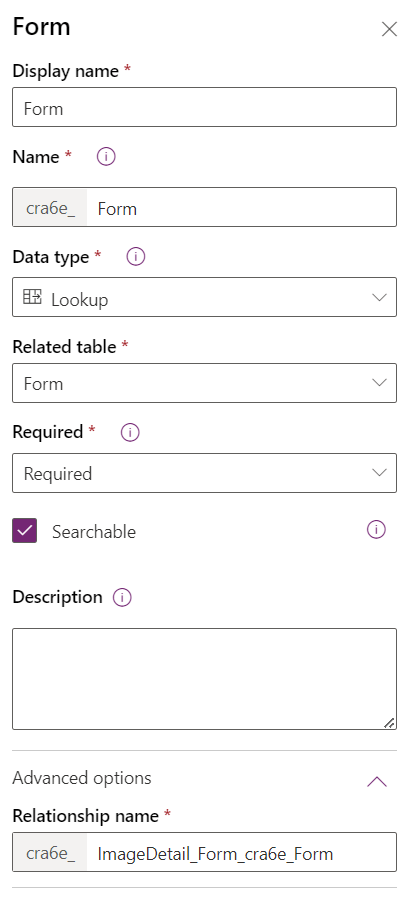

The second column is slightly different because it will link our "Form" table to the "FormDetail" table. That way, we know which details belong to which form.

Name the column "Form" because it will store a link to the form. Pick "Lookup" for Data type, which makes the Related table field visible. Pick "Form" for Related table. Also, make the column required.

Once you’ve created the column, save your table before returning to your table overview.

Writing Back to Dataverse

Writing back to Dataverse — especially connecting the form to the form details — can be challenging.

We’ll need to POST an object with the properties from Dataverse and their corresponding values. Doing so is straightforward, except for the Form property, which is a lookup. We’ll need to post this property in the form of [property name]@odata.bind. Since this isn’t a valid property name in C#, we need to create the JSON manually or use Dictionary<string, object> to generate the JSON. We'll do the latter.

We can now loop through the lines from Form Recognizer and insert them one by one. Since we don’t know what all the text means yet, we’ll just use the text as both name and value. The @odata.bind is tricky because the Form property must have an uppercase F, unlike everything else we’ve done so far.

Place this code in your application’s code:

foreach (var value in result.Value)

{

foreach (var line in value.Lines)

{

var postResult = await client.PostAsJsonAsync($"{prefix}_{detailsTableName}", new List<object>

{

new Dictionary<string, object>

{

{ $"{prefix}_name", line.Text },

{ $"{prefix}_textdata", line.Text },

{ $"{prefix}_Form@odata.bind", $"/{prefix}_{tableName}({primaryEntityId})" }

}

});

postResult.EnsureSuccessStatusCode();

}

}

Since we’ve already fetched data from Dataverse, we can reuse the HTTP client. We already have access, so other than the uppercase F, there’s not much to see.

Showing Data in Power Apps

Next, we’ll show the data in Power Apps.

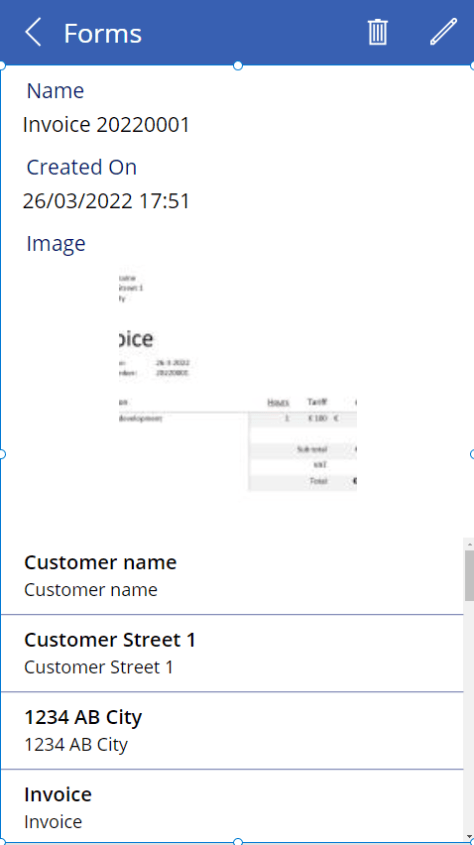

Go to your Power App designer and open the detail screen. Go to Insert in the ribbon bar at the top and add a vertical gallery. Drag the gallery to the bottom of the form, under the image.

After that, we’ll need to set the gallery source. You can do this in the formula field at the top or in the Items field in the Advanced Properties tab. If you check the Item field of DetailForm1, you’ll see "BrowserGallery1.Selected," the selected item in the browse screen’s browser gallery. We’ll use this item in the new gallery, so set the Items field to "BrowseGallery1.Selected.FormDetails."

Next, in Properties, change the gallery layout to "Title and subtitle." In Fields, set the name and text data fields to "title" and "subtitle," respectively.

When you run your Function app and upload a picture of an invoice (a dummy invoice is available in this article’s source code, its text should appear in your form after a slight delay.

Extracting an Invoice

Unfortunately, this extracted text lacks meaning. Let’s fix that.

We could train Form Recognizer to recognize specific values on specific forms, like driver’s licenses, invoices, and business cards. Form Recognizer has a few pre-trained models that work with English and Spanish.

Change this line in your application’s code:

var operation = await recognizer.StartRecognizeContentAsync(stream);

to:

var operation = await recognizer.StartRecognizeInvoicesAsync(stream);

The response changes, too, so we need to rewrite our foreach loop a little:

foreach (var value in result.Value)

{

foreach (var field in value.Fields)

{

var postResult = await client.PostAsJsonAsync($"{prefix}_{detailsTableName}", new List<object>

{

new Dictionary<string, object>

{

{ $"{prefix}_name", field.Key },

{ $"{prefix}_textdata", field.Value.ValueData?.Text },

{ $"{prefix}_Form@odata.bind", $"/{prefix}_{tableName}({primaryEntityId})" }

}

});

var resultContext = await postResult.Content.ReadAsStringAsync();

postResult.EnsureSuccessStatusCode();

}

}dw

We now have fields instead of lines. A field has a key suitable for the Dataverse Name field. We can get the value using field.Value.ValueData.Text. Notice that ValueData can be null, so we use a null-propagator here.

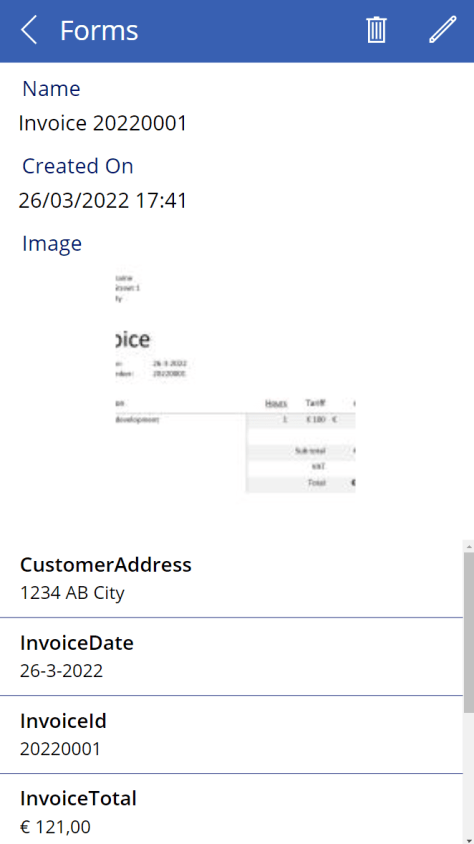

Now, if we upload the same invoice, we get helpful data.

As the screenshot shows, Form Recognizer recognized the customer address, invoice date, invoice ID, and total. The other fields include the subtotal and taxes.

You should probably clean the data or use columns rather than rows that you can update on your invoice. But you can use this valuable data as you see fit.

You now know how to write data to Dataverse and use it in a Power App.

Creating an Azure SQL Database

We’ll next write our Form Recognizer results to an Azure SQL database. First, we need to create the database.

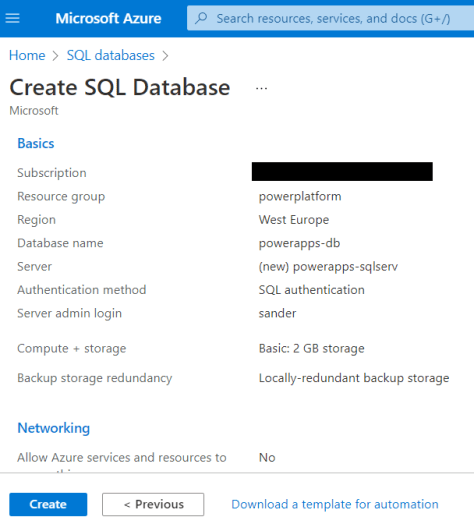

Go to the Azure Portal and find your SQL databases. Create a new one and put it into the resource group you’ve been using throughout this series. Name the database something like "powerapps-db."

You’ll probably want to create a new SQL Server as well. Enter any globally-unique server name, pick your locations, and enter a username and password. For the service and compute tier, choose either the serverless plan or the DTU-based basic tier. Also, pick locally-redundant backup storage. Leave all the other defaults. Then, click Create.

Deployment may take a couple of minutes.

When Azure deploys the database, go to your SQL Server, find the firewall settings, and add your client IP address to the allowed IP address list.

Next, go to your SQL Database in the Azure Portal and find its query editor. You could use SQL Server Management Studio’s more robust editor instead. Log in using your username and password and create two tables using the following query:

CREATE TABLE dbo.Form

(

[Id] [int] IDENTITY(1,1) NOT NULL,

[Name] nvarchar NOT NULL,

[Base64Image] nvarchar NOT NULL,

CONSTRAINT [PK_Form] PRIMARY KEY CLUSTERED (Id)

)

GO

CREATE TABLE dbo.FormDetail

(

[Id] [int] IDENTITY(1,1) NOT NULL,

[Name] nvarchar NOT NULL,

[TextData] nvarchar NOT NULL,

[FormId] INT NOT NULL,

CONSTRAINT [PK_FormDetail] PRIMARY KEY CLUSTERED (Id)

)

GO

ALTER TABLE dbo.FormDetail WITH CHECK ADD CONSTRAINT [FK_FormDetail_Form] FOREIGN KEY(FormId)

REFERENCES dbo.Form (Id)

GO

We can now write to these tables from our Azure Function.

Writing to Azure SQL

There are a couple of ways to write data to Azure SQL. We’ll use Entity Framework (EF).

Start by installing the Microsoft.EntityFrameworkCore.SqlServer NuGet package using the NuGet package manager or NuGet CLI. There are tools to generate an EF model based on an existing database, and you can opt for code-first database generation. But, for this simple example, we’ll type out our database in code. For simplicity, we’ll not inject our DbContext, and we’ll paste the connection string directly into the code.

You can find your connection string in your Azure SQL Database resource. Don’t forget to enter your password into the string.

Add these classes to your application:

public class Form

{

public int Id { get; set; }

public string Name { get; set; }

public string Base64Image { get; set; }

public ICollection<FormDetail> FormDetails { get; set; }

}

public class FormDetail

{

public int Id { get; set; }

public string Name { get; set; }

public string TextData { get; set; }

public int FormId { get; set; }

}

public class ApplicationDbContext : DbContext

{

public DbSet<Form> Forms { get; set; }

protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)

{

optionsBuilder.UseSqlServer("<Your connection string>"

base.OnConfiguring(optionsBuilder);

}

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

base.OnModelCreating(modelBuilder);

modelBuilder.Entity<Form>().ToTable(nameof(Form));

}

}

Saving to Azure is now easy.

string name = JsonConvert.DeserializeObject<dynamic>(content)[$"{prefix}_name"];

var form = new Form

{

Base64Image = image,

Name = name,

FormDetails = result.Value.SelectMany(v => v.Fields.Select(f => new FormDetail

{

Name = f.Key,

TextData = f.Value.ValueData?.Text

})).ToList()

};

context.Forms.Add(form);

await context.SaveChangesAsync();

We’ve added the name column to the Dataverse OData GET $select parameter so we can use it here. We can now copy the complete form and the form data we just extracted.

You can test whether the data was inserted into your database by going to Azure and running SELECT * FROM FormDetail in the query editor.

Find the complete code on GitHub.

Conclusion

Fusion development offers the best of both worlds.

Power Apps and Dataverse enable citizen developers to create complex applications with relative ease. Meanwhile, experienced developers can harness the full power of the Azure platform via a programming language without worrying about users’ detailed front-end wishlist.

We’ve created an app that lets users upload pictures of invoices using Power Apps in this series. We then extracted meaningful information from that data using Azure Form Recognizer. We’ve finally stored the data in Dataverse and an Azure SQL database for our application and other applications to access.

Our hypothetical citizen developer in the accounting department quickly got the standard functions they needed by creating a no-code app to gather invoices. Our hypothetical experienced developer then jumped in to connect all the moving parts and harness Azure’s AI capabilities, making the app more robust.

The accounting department saves time by automatically extracting invoice information and keeping it in a database. Best of all, they can play with the user interface, customizing it to suit their needs. And, if they need more capabilities in the future, they can always use Power Apps to add those functions.

Power Apps is especially well-suited for fusion development. It uses Microsoft Azure Cloud, making it easy for developers to connect to and extend the Power App with Azure’s many solutions, including artificial intelligence. Explore Power Apps and other Azure cloud tools to get inspired and create your own AI solutions.

To learn more about build a complex, fully functional solution that combines Power Apps with Azure services, check out the e-book Fusion development approach to building apps using Power Apps.