This article documents the features of a .NET wrapper for the FFmpeg libraries. It describes the architecture and core classes, and includes sample applications along with the results of their execution.


There have been many attempts to create .NET wrappers for the FFmpeg libraries. Most of the implementations I have seen simply call ffmpeg.exe with prepared arguments and parse its output. More capable ones provide a few basic classes, exposed as COM objects or regular C# classes, that perform one or a few ffmpeg tasks, but their functionality is very limited and falls well short of what full access to the ffmpeg libraries gives you in C++. I have also seen a wrapper implemented entirely in “unsafe” .NET code, so the calling C# code must be marked “unsafe” as well, and the wrapper functions are full of pointers such as “void *” and similar types. Such code is awkward to use even from C#, let alone from other languages such as VB.NET.

The current .NET wrapper represents all structures exported by the ffmpeg libraries, together with the APIs that operate on them, as objects usable from managed code in C#, VB.NET or even Delphi.NET. It is not limited to calling certain APIs on a couple of predefined objects: every API is exposed as a method. You may well ask how that is possible and why it was not done before; this documentation answers both questions.

This article describes the .NET wrapper of the FFmpeg libraries. It covers the basic architecture along with how the wrapper was built and which issues were solved during implementation. It contains many code samples for different uses of the FFmpeg libraries. Each sample works and is accompanied by a description and its output. It should be interesting for those who want to start learning the ffmpeg library as well as for those who plan to use it in production. Even readers who are sure they know the ffmpeg library from the C++ side will find this information useful. And if you are looking for a way to integrate the full power of the ffmpeg libraries directly into your .NET application, you are on the right track.

The first part describes the wrapper library architecture, main features, basic class design, the issues that appeared during implementation and how they were solved. It also introduces the base classes of the library and describes their fields and purposes. It contains code snippets and samples in either C++/CLI or C#. The second part covers the core wrapper classes for most FFmpeg library structures. The documentation of each class contains a basic description and one or more usage samples; the code samples in this part are in C#. Part three introduces some advanced topics of the library. The additional documentation describes the example C# projects, which are .NET wrapper ports of the native C++ FFmpeg examples. There are also a few sample projects of my own which, I think, FFmpeg users will find interesting. The last part describes how to build the project and contains license information and distribution notes.

The current implementation supports FFmpeg libraries from version 4.2.2 and higher. By “higher” I mean that it handles differences in newer versions through preprocessor definitions and/or dynamic library linking. For example, the native FFmpeg libraries have definitions that control which APIs are exposed and which fields each structure contains, and the current implementation handles that properly.

To say that implementing the current .NET wrapper was not simple is to say nothing. The library is able to exist and work properly thanks to its architecture. The project is written in C++/CLI and compiled as a standalone .NET DLL which can be added as a reference to .NET applications written in any language. This documentation explains why C++/CLI was chosen.

The library contains a number of h/cpp files, most of which correspond to an underlying imported FFmpeg DLL:

| File | Contents |
| --- | --- |
| AVCore.h/cpp | Contains core classes, enumerations and structures. Also contains imported classes and enumerations from AVUtil library |
| AVCodec.h/cpp | Contains classes and enumerations for wrapper from AVCodec Library |
| AVFormat.h/cpp | Contains classes and enumerations for wrapper from AVFormat Library |
| AVFilter.h/cpp | Contains classes and enumerations for wrapper from AVFilter Library |
| AVDevice.h/cpp | Contains classes and enumerations for wrapper from AVDevice Library |
| SWResample.h/cpp | Contains classes and enumerations for wrapper from SWResample Library |
| SWScale.h/cpp | Contains classes and enumerations for wrapper from SWScale Library |
| Postproc.h/cpp | Contains classes and enumerations for wrapper from Postproc Library |

This topic describes the main benefits and the design of the major parts of the library architecture, from base class design to core aspects and resolved issues. Along with C#, this part also contains code snippets in C++/CLI taken from the wrapper library implementation.

Most classes in the wrapper library are based on a structure or enumeration in the FFmpeg libraries. A class has the same name as its underlying native structure. For example, the managed class AVPacket represents the AVPacket structure of libavcodec: it contains all fields of the structure along with the related methods. The fields are implemented not as regular structure fields but as managed properties:

public ref class AVPacket : public AVBase

, public ICloneable

{

private:

Object^ m_pOpaque;

AVBufferFreeCB^ m_pFreeCB;

internal:

AVPacket(void * _pointer,AVBase^ _parent);

public:

AVPacket();

AVPacket(int _size);

AVPacket(IntPtr _data,int _size);

AVPacket(IntPtr _data,int _size,AVBufferFreeCB^ free_cb,Object^ opaque);

AVPacket(AVBufferRef^ buf);

AVPacket(AVPacket^ _packet);

~AVPacket();

public:

property int _StructureSize { virtual int get() override; }

public:

property AVBufferRef^ buf { AVBufferRef^ get(); }

property Int64 pts { Int64 get(); void set(Int64); }

property Int64 dts { Int64 get(); void set(Int64); }

...

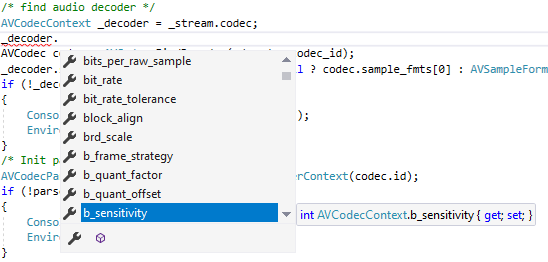

A class which relies on a native structure inherits from the AVBase class. Such classes wrap the pointer to the underlying structure. Exposing structure fields as properties hides the internals and makes it possible to support different versions of ffmpeg by encapsulating the implementation. For example, in certain ffmpeg versions some fields are hidden in the structure and accessed via the Options API. The “b_sensitivity” field of AVCodecContext is one of them: it is present in the structure only when the FF_API_PRIVATE_OPT definition is non-zero:

#if FF_API_PRIVATE_OPT

attribute_deprecated

int b_sensitivity;

#endif

In the wrapper library you see only the property; internally it chooses how to access the value:

int FFmpeg::AVCodecContext::b_sensitivity::get()

{

__int64 val = 0;

if (AVERROR_OPTION_NOT_FOUND == av_opt_get_int(m_pPointer, "b_sensitivity", 0, &val))

{

#if FF_API_PRIVATE_OPT

val = ((::AVCodecContext*)m_pPointer)->b_sensitivity;

#endif

}

return (int)val;

}

void FFmpeg::AVCodecContext::b_sensitivity::set(int value)

{

if (AVERROR_OPTION_NOT_FOUND ==

av_opt_set_int(m_pPointer, "b_sensitivity", (int64_t)value, 0))

{

#if FF_API_PRIVATE_OPT

((::AVCodecContext*)m_pPointer)->b_sensitivity = (int)value;

#endif

}

}

At the same time, in C# code:

In some versions of FFmpeg a field may not be present at all, for example “refcounted_frames” of AVCodecContext:

int FFmpeg::AVCodecContext::refcounted_frames::get()

{

__int64 val = 0;

if (AVERROR_OPTION_NOT_FOUND ==

av_opt_get_int(m_pPointer, "refcounted_frames", 0, &val))

{

#if (LIBAVCODEC_VERSION_MAJOR < 59)

val = ((::AVCodecContext*)m_pPointer)->refcounted_frames;

#endif

}

return (int)val;

}

void FFmpeg::AVCodecContext::refcounted_frames::set(int value)

{

if (AVERROR_OPTION_NOT_FOUND ==

av_opt_set_int(m_pPointer, "refcounted_frames", (int64_t)value, 0))

{

#if (LIBAVCODEC_VERSION_MAJOR < 59)

((::AVCodecContext*)m_pPointer)->refcounted_frames = (int)value;

#endif

}

}

You probably agree that this is convenient: there is no need to worry about what happens in the background. Such an implementation is known as the Facade pattern.
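The fallback logic above can be modeled without FFmpeg at all. The following is a minimal sketch in plain C++, not the wrapper's code: `NativeContext`, `opt_get_int`, `opt_set_int`, `ContextFacade` and the `SKETCH_HAS_LEGACY_FIELD` macro are hypothetical stand-ins for the native structure, the options API and the version macro.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Hypothetical switch that plays the role of a macro such as FF_API_PRIVATE_OPT.
#define SKETCH_HAS_LEGACY_FIELD 1

// Hypothetical stand-in for a native structure.
struct NativeContext {
    std::map<std::string, std::int64_t> options;  // models the option store
#if SKETCH_HAS_LEGACY_FIELD
    int b_sensitivity = 0;                        // models the deprecated direct field
#endif
};

// Models an option getter: returns false when the option is unknown.
bool opt_get_int(const NativeContext& ctx, const std::string& name, std::int64_t* out) {
    auto it = ctx.options.find(name);
    if (it == ctx.options.end()) return false;
    *out = it->second;
    return true;
}

// Models an option setter: returns false when the option is unknown.
bool opt_set_int(NativeContext& ctx, const std::string& name, std::int64_t value) {
    auto it = ctx.options.find(name);
    if (it == ctx.options.end()) return false;
    it->second = value;
    return true;
}

// Facade: callers see one accessor pair regardless of where the value lives.
class ContextFacade {
public:
    explicit ContextFacade(NativeContext& ctx) : ctx_(ctx) {}

    int b_sensitivity() const {
        std::int64_t val = 0;
        if (!opt_get_int(ctx_, "b_sensitivity", &val)) {
#if SKETCH_HAS_LEGACY_FIELD
            val = ctx_.b_sensitivity;  // fall back to the struct member
#endif
        }
        return static_cast<int>(val);
    }

    void set_b_sensitivity(int value) {
        if (!opt_set_int(ctx_, "b_sensitivity", value)) {
#if SKETCH_HAS_LEGACY_FIELD
            ctx_.b_sensitivity = value;  // fall back to the struct member
#endif
        }
    }

private:
    NativeContext& ctx_;
};
```

The caller only ever uses `b_sensitivity()` and `set_b_sensitivity()`; whether the value ends up in the option store or in the struct member is decided inside the facade.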

If you know the FFmpeg APIs, you will agree that they include functions for allocating structures, and that these functions can differ for every structure. In the wrapper classes, all objects have constructors, so users do not need to think about which API to use. Moreover, a constructor can encapsulate a sequence of FFmpeg API calls to perform full object initialization. Different FFmpeg allocation APIs, as well as different ways of initializing an object, are exposed as separate constructors with different arguments. As an example, here are the constructors of the AVPacket object:

public:

AVPacket();

AVPacket(int _size);

AVPacket(IntPtr _data,int _size);

AVPacket(IntPtr _data,int _size,AVBufferFreeCB^ free_cb,Object^ opaque);

AVPacket(AVBufferRef^ buf);

AVPacket(AVPacket^ _packet);

There is a special internal constructor which is not exposed outside of the library. Most objects have one. It is used for the internal object management described later in this document:

internal:

AVPacket(void * _pointer,AVBase^ _parent);

Users also do not need to worry about releasing memory and freeing object data. This is all done in object destructors and, internally, in finalizers. In C#, the destructor is exposed automatically as the IDisposable interface of the object. So, once an object is no longer needed, it is good to call its Dispose method to clear all dependencies and free the allocated memory. Users do not have to think about which FFmpeg API to call to free data and deallocate object resources, as that is all handled by the wrapper library. If the user forgets to call Dispose, the object and its memory will be freed by the finalizer, but it is good practice to call Dispose explicitly in code.

public class OutputStream

{

public AVStream st;

public AVCodecContext enc;

public long next_pts;

public int samples_count;

public AVFrame frame;

public AVFrame tmp_frame;

public float t, tincr, tincr2;

public SwsContext sws_ctx;

public SwrContext swr_ctx;

}

static void close_stream(AVFormatContext oc, OutputStream ost)

{

if (ost.enc != null) ost.enc.Dispose();

if (ost.frame != null) ost.frame.Dispose();

if (ost.tmp_frame != null) ost.tmp_frame.Dispose();

if (ost.sws_ctx != null) ost.sws_ctx.Dispose();

if (ost.swr_ctx != null) ost.swr_ctx.Dispose();

}

Keep in mind that objects such as AVPacket or AVFrame can contain buffers which are allocated internally by the ffmpeg API and which must be released separately (see the av_packet_unref and av_frame_unref APIs). For that purpose those objects expose a method named Free, so don’t forget to call it to avoid memory leaks.

Some FFmpeg library definitions and enumerations are exposed as .NET enum classes, which makes it easy to find out which values can be set in certain structure fields. For example, the AV_CODEC_FLAG_*, AV_CODEC_FLAG2_* or AV_CODEC_CAP_* definitions:

#define AV_CODEC_CAP_DR1 (1 << 1)

#define AV_CODEC_CAP_TRUNCATED (1 << 3)

These definitions are exposed as a .NET enum for easier access from code:

[Flags]

public enum class AVCodecCap : UInt32

{

DR1 = (1 << 1),

TRUNCATED = (1 << 3),

...

In C#, this looks like:

Some enumerations or definitions from FFmpeg are implemented as regular .NET classes. In that case, the class also exposes useful methods related to the enumeration type:

public value class AVSampleFormat

{

public:

static const AVSampleFormat NONE = ::AV_SAMPLE_FMT_NONE;

static const AVSampleFormat U8 = ::AV_SAMPLE_FMT_U8;

static const AVSampleFormat S16 = ::AV_SAMPLE_FMT_S16;

static const AVSampleFormat S32 = ::AV_SAMPLE_FMT_S32;

static const AVSampleFormat FLT = ::AV_SAMPLE_FMT_FLT;

static const AVSampleFormat DBL = ::AV_SAMPLE_FMT_DBL;

static const AVSampleFormat U8P = ::AV_SAMPLE_FMT_U8P;

static const AVSampleFormat S16P = ::AV_SAMPLE_FMT_S16P;

static const AVSampleFormat S32P = ::AV_SAMPLE_FMT_S32P;

static const AVSampleFormat FLTP = ::AV_SAMPLE_FMT_FLTP;

static const AVSampleFormat DBLP = ::AV_SAMPLE_FMT_DBLP;

protected:

int m_nValue;

public:

AVSampleFormat(int value);

explicit AVSampleFormat(unsigned int value);

protected:

public:

property String^ name { String^ get() { return ToString(); } }

property int bytes_per_sample { int get(); }

property bool is_planar { bool get(); }

Such classes expose everything required to work with them as value types, including implicit type conversions:

public:

static operator int(AVSampleFormat a) { return a.m_nValue; }

static explicit operator unsigned int(AVSampleFormat a)

{ return (unsigned int)a.m_nValue; }

static operator AVSampleFormat(int a) { return AVSampleFormat(a); }

static explicit operator AVSampleFormat(unsigned int a)

{ return AVSampleFormat((int)a); }

internal:

static operator ::AVSampleFormat(AVSampleFormat a)

{ return (::AVSampleFormat)a.m_nValue; }

static operator AVSampleFormat(::AVSampleFormat a)

{ return AVSampleFormat((int)a); }
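The same idea, an integer-backed value type with named constants, helper properties and conversions, can be sketched in plain C++. This is only an illustration under my own assumptions: `NativeSampleFormat` and `SampleFormat` are hypothetical names, and the four formats listed are a shortened stand-in for the real enumeration.

```cpp
#include <string>

// Hypothetical native enumeration standing in for something like ::AVSampleFormat.
enum NativeSampleFormat {
    NATIVE_FMT_NONE = -1,
    NATIVE_FMT_U8,
    NATIVE_FMT_S16,
    NATIVE_FMT_U8P,
    NATIVE_FMT_S16P
};

class SampleFormat {
public:
    // Named constants of the wrapper's own type.
    static const SampleFormat NONE, U8, S16, U8P, S16P;

    SampleFormat(int value) : value_(value) {}  // implicit conversion from int
    operator int() const { return value_; }     // implicit conversion to int
    explicit operator unsigned int() const { return static_cast<unsigned int>(value_); }

    // Helper "properties" derived from the stored value.
    int bytes_per_sample() const {
        switch (value_) {
            case NATIVE_FMT_U8:  case NATIVE_FMT_U8P:  return 1;
            case NATIVE_FMT_S16: case NATIVE_FMT_S16P: return 2;
            default: return 0;
        }
    }
    bool is_planar() const {
        return value_ == NATIVE_FMT_U8P || value_ == NATIVE_FMT_S16P;
    }
    std::string name() const {
        switch (value_) {
            case NATIVE_FMT_U8:   return "u8";
            case NATIVE_FMT_S16:  return "s16";
            case NATIVE_FMT_U8P:  return "u8p";
            case NATIVE_FMT_S16P: return "s16p";
            default: return "";
        }
    }

private:
    int value_;
};

const SampleFormat SampleFormat::NONE(NATIVE_FMT_NONE);
const SampleFormat SampleFormat::U8(NATIVE_FMT_U8);
const SampleFormat SampleFormat::S16(NATIVE_FMT_S16);
const SampleFormat SampleFormat::U8P(NATIVE_FMT_U8P);
const SampleFormat SampleFormat::S16P(NATIVE_FMT_S16P);
```

Because the conversions to and from `int` are implicit, such a value can be passed wherever the native API expects the raw enumeration value, while the conversion to `unsigned int` stays explicit to avoid accidental sign changes.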

The library contains special static classes which identify the libraries in use: their version, build configuration and license:

public ref class LibAVCodec

{

private:

static bool s_bRegistered = false;

private:

LibAVCodec();

public:

static property UInt32 Version { UInt32 get(); }

static property String^ Configuration { String^ get(); }

static property String^ License { String^ get(); }

public:

static void Register(AVCodec^ _codec);

static void RegisterAll();

};

Each imported FFmpeg library has a similar class with the fields described above.

Those library classes can contain static initialization methods which relay to internal calls of APIs such as avcodec_register_all, av_register_all and others. These methods may be called manually by the user, but the library calls them internally when needed. APIs which are deprecated and may not be exported by the FFmpeg library are linked dynamically. How that is done is described later in this document.

Some classes or enumerations override the string conversion method “ToString()”. In that case, it returns the string produced by the related FFmpeg API, giving a readable description of the type or structure content:

String^ FFmpeg::AVSampleFormat::ToString()

{

auto p = av_get_sample_fmt_name((::AVSampleFormat)m_nValue);

return p != nullptr ? gcnew String(p) : nullptr;

}

The following example relies on that method to get the default string representation of the sample format value:

var fmt = AVSampleFormat.FLT;

Console.WriteLine("AVSampleFormat: {0} {1}",(int)fmt,fmt);

As you may know, most FFmpeg APIs return an integer value, which may be an error code, the amount of data processed, or have some other meaning. To make the returned value easier to interpret, the wrapper library has a class named AVRESULT. It plays the same role as the AVERROR macro in FFmpeg, but is designed as a class with useful fields and .NET conveniences. Because it is a value type at its core, it can be used as an integer without explicit casting. The class overrides the ToString() method, so an error description string can be obtained from a returned API value and used directly in string formatting:

ret = oc.WriteHeader();

if (ret < 0) {

Console.WriteLine("Error occurred when opening output file: {0}\n",(AVRESULT)ret);

Environment.Exit(1);

}

The error description string helps to understand the result of a method call:

Console.WriteLine(AVRESULT.ENOMEM.ToString());

If the AVRESULT type is used in code, the result string is visible in the debugger:

If we have an integer result and know that it is an error, casting it to AVRESULT shows the error description in the variable view while debugging:

The class also exposes predefined error values which can be used in code:

static AVRESULT CheckPointer(IntPtr p)

{

if (p == IntPtr.Zero) return AVRESULT.ENOMEM;

return AVRESULT.OK;

}

Some methods are already designed to return AVRESULT objects; other types can easily be cast to it.

FFmpeg::AVRESULT FFmpeg::AVCodecParameters::FromContext(AVCodecContext^ codec)

{

return avcodec_parameters_from_context(

((::AVCodecParameters*)m_pPointer),(::AVCodecContext*)codec->_Pointer.ToPointer());

}

FFmpeg::AVRESULT FFmpeg::AVCodecParameters::CopyTo(AVCodecContext^ context)

{

return avcodec_parameters_to_context(

(::AVCodecContext*)context->_Pointer.ToPointer(),

(::AVCodecParameters*)m_pPointer);

}

FFmpeg::AVRESULT FFmpeg::AVCodecParameters::CopyTo(AVCodecParameters^ dst)

{

return avcodec_parameters_copy(

(::AVCodecParameters*)dst->_Pointer.ToPointer(),

((::AVCodecParameters*)m_pPointer));

}
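To show why an integer-like result type is convenient, here is a minimal sketch in plain C++. It is not the AVRESULT implementation: the `Result` class and its members are hypothetical, and the sketch assumes the common convention that errors are negated errno values, using the standard `std::strerror` for the description.

```cpp
#include <cerrno>
#include <cstring>
#include <string>

// Hypothetical result value: behaves like an int, but can describe itself.
class Result {
public:
    Result(int code = 0) : code_(code) {}      // implicit from int
    operator int() const { return code_; }     // implicit to int

    bool failed() const { return code_ < 0; }

    // Assumption of this sketch: an error is stored as a negated errno value.
    static Result from_errno(int e) { return Result(-e); }

    std::string to_string() const {
        if (code_ >= 0) return "Success (" + std::to_string(code_) + ")";
        return std::strerror(-code_);          // platform-specific description text
    }

private:
    int code_;
};
```

A function returning `Result` can still be used in expressions such as `if (ret < 0)`, and the same value produces a readable message when it is logged.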

Both your own component and the internals of the FFmpeg library report information to the application through the av_log API. The same logging functionality is available through the static AVLog class.

if ((ret = AVFormatContext.OpenInput(out ifmt_ctx, in_filename)) < 0) {

AVLog.log(AVLogLevel.Error, string.Format("Could not open input file {0}", in_filename));

goto end;

}

It also lets you set up your own callback for receiving log messages:

static void LogCallback(AVBase avcl, int level, string fmt, IntPtr vl)

{

Console.WriteLine(fmt);

}

static void Main(string[] args)

{

AVLog.Callback = LogCallback;

...
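The callback idea itself is simple and can be sketched in plain C++. The `Log` class, `LogLevel` enumeration and their members below are hypothetical names for illustration only; they are not the wrapper's AVLog API.

```cpp
#include <functional>
#include <string>
#include <utility>

// Hypothetical log levels for the sketch.
enum class LogLevel { Error, Warning, Info };

// Hypothetical static logger with a replaceable sink.
class Log {
public:
    using Callback = std::function<void(LogLevel, const std::string&)>;

    // Install a sink; an empty callback makes log() a no-op.
    static void set_callback(Callback cb) { callback() = std::move(cb); }

    // Forward the message to the installed sink, if any.
    static void log(LogLevel level, const std::string& message) {
        if (callback()) callback()(level, message);
    }

private:
    // Function-local static avoids initialization-order problems.
    static Callback& callback() {
        static Callback cb;
        return cb;
    }
};
```

An application installs its sink once, for example a lambda that writes to the console, and every later `Log::log` call is routed to it.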

Most native FFmpeg structure objects have hidden properties which can be accessed only with the av_opt_* APIs. The wrapper library provides this capability as well, through the AVOptions class. Initially it was designed as a static object, but it was later reimplemented as a regular class. It can be constructed from an AVBase object or from a plain pointer argument.

AVOptions options = new AVOptions(abuffer_ctx);

options.set("channel_layout", INPUT_CHANNEL_LAYOUT.ToString(), AVOptSearch.CHILDREN);

options.set("sample_fmt", INPUT_FORMAT.name, AVOptSearch.CHILDREN);

options.set_q("time_base", new AVRational( 1, INPUT_SAMPLERATE ), AVOptSearch.CHILDREN);

options.set_int("sample_rate", INPUT_SAMPLERATE, AVOptSearch.CHILDREN);

The class exposes all the useful methods for working with the options API. It can also enumerate options:

AVOptions options = new AVOptions(ifmt_ctx);

foreach (AVOption o in options)

{

Console.WriteLine(o.name);

}

Another frequently used structure in the FFmpeg API is AVDictionary. It is a key-value collection which can be an input or an output of certain functions. To such functions you pass initialization options as a dictionary object, and on return you get all available options or the options which were not used by the function. The wrapper manages that structure pointer at the place where such an API is called: internally the structure pointer is simply replaced. So users just need to free the returned dictionary object in the regular way described earlier. For example, take this FFmpeg API:

int avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options);

And related implementation in the wrapper library:

AVRESULT Open(AVCodec^ codec, AVDictionary^ options);

AVRESULT Open(AVCodec^ codec);

According to the FFmpeg API, the dictionary passes options in, and on return it is replaced by a new dictionary object containing the options which were not found. In the wrapper, only the structure object which was passed as an argument is used. It works in the regular way, and the user deals with only one object. Even if the internal API call fails, the user needs to free only the dictionary object which was created initially:

AVDictionary dct = new AVDictionary();

dct.SetValue("preset", "veryfast");

s.id = (int)_output.nb_streams - 1;

bFailed = !(s.codec.Open(_codec, dct));

dct.Dispose();
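The pointer replacement described above can be modeled in a few lines of plain C++. This is a sketch under my own assumptions, not the wrapper's code: `NativeDict`, `native_open`, `Dictionary` and `open_with` are hypothetical, and the fake native call treats only the "preset" key as recognized.

```cpp
#include <cstddef>
#include <map>
#include <string>

// Hypothetical stand-in for a native dictionary structure.
using NativeDict = std::map<std::string, std::string>;

// Models a native call taking `NativeDict **options`: recognized entries are
// consumed and *options is replaced by a new dictionary holding the leftovers.
int native_open(NativeDict** options) {
    if (options == nullptr || *options == nullptr) return 0;
    NativeDict* leftovers = new NativeDict();
    for (const auto& kv : **options) {
        if (kv.first != "preset") (*leftovers)[kv.first] = kv.second;
    }
    delete *options;       // the old dictionary is gone...
    *options = leftovers;  // ...and the caller's pointer now refers to the new one
    return 0;
}

// Wrapper object: owns exactly one native pointer at any time.
class Dictionary {
public:
    Dictionary() : ptr_(new NativeDict()) {}
    ~Dictionary() { delete ptr_; }
    Dictionary(const Dictionary&) = delete;
    Dictionary& operator=(const Dictionary&) = delete;

    void set(const std::string& key, const std::string& value) { (*ptr_)[key] = value; }
    bool contains(const std::string& key) const { return ptr_->count(key) != 0; }
    std::size_t size() const { return ptr_->size(); }

    // The native side receives the address of the internal pointer, so a
    // replaced dictionary is adopted by this same wrapper object.
    NativeDict** native_address() { return &ptr_; }

private:
    NativeDict* ptr_;
};

int open_with(Dictionary& options) { return native_open(options.native_address()); }
```

After `open_with` returns, the same `Dictionary` object holds the unused options, and destroying it frees whichever native dictionary it currently points to.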

As mentioned, structure classes contain the fields of the related structure and methods which are generated from the FFmpeg API and relate to that structure. Classes can also contain helper methods which extend the functionality of FFmpeg. For example, the AVFrame class has helper methods for converting between a .NET Bitmap object and the AVFrame structure:

public:

System::Drawing::Bitmap^ ToBitmap();

public:

static String^ GetColorspaceName(AVColorSpace val);

public:

static AVFrame^ FromImage(System::Drawing::Bitmap^ _image,AVPixelFormat _format);

static AVFrame^ FromImage(System::Drawing::Bitmap^ _image);

static AVFrame^ FromImage(System::Drawing::Bitmap^ _image,

AVPixelFormat _format,bool bDeleteBitmap);

static AVFrame^ FromImage(System::Drawing::Bitmap^ _image, bool bDeleteBitmap);

static AVFrame^ ConvertVideoFrame(AVFrame^ _source,AVPixelFormat _format);

static System::Drawing::Bitmap^ ToBitmap(AVFrame^ _frame);

Those methods encapsulate the whole colorspace conversion and any other required sequence of calls. Moreover, it is possible to wrap an existing Bitmap object without copying or allocating new image data. In that case, an internal helper object is created which is freed together with the parent AVFrame. How that mechanism is implemented is described later in this document.

All FFmpeg structure classes inherit from the AVBase class, the root class of every FFmpeg object. It contains common fields and helper methods. The main tasks of AVBase are object management and memory management.

As mentioned, AVBase is the base class for every exposed structure of the FFmpeg library, and it contains the fields which are common to all objects: the structure pointer and the structure size. The structure size may not be initialized unless the structure is allocated directly, so users should not rely on that field. The pointer can be cast to the raw structure type to access additional fields or raw data directly:

AVBase avbase = new AVPacket();

Console.WriteLine("0x{0:x}",avbase._Pointer);

If a structure can be allocated with the common allocation method, its structure size may be non-zero, and if the structure has been allocated, the related property is set to true:

AVBase avbase = new AVRational(1,1);

Console.WriteLine("0x{0:x} StructureSize: {1} Allocated {2}",

avbase._Pointer,

avbase._StructureSize,

avbase._IsAllocated

);

If a class object is returned from a method, we can make sure it is valid by checking that its pointer field is non-zero. The _IsValid property of the AVBase class performs the same check:

ost.frame = alloc_picture(c.pix_fmt, c.width, c.height);

if (!ost.frame._IsValid) {

Console.WriteLine("Could not allocate video frame\n");

Environment.Exit(1);

}

I decided to add the “_” prefix to such internal properties, which are not related to the FFmpeg structure, so the user knows that they are not structure fields.

One method available for inspecting an object and its fields is TraceValues(). It uses .NET Reflection to enumerate the available properties of the object together with their values. If using .NET Reflection is a concern for your code, that code should be excluded from the assembly. Note, however, that the TraceValues code works only in the Debug build configuration. An example of calling the method:

var r = new AVRational(25, 1);

r.TraceValues();

r.Dispose();

There are also a couple of protected methods which can help you build derived classes for your own needs. I will point out a few that may be of interest:

void ValidateObject();

That method checks whether an object is disposed or not, and if disposed then it throws an ObjectDisposedException exception.

bool _EnsurePointer();

bool _EnsurePointer(bool bThrow);

Those two methods check whether the structure pointer is non-zero, including the case when the object is disposed. They are better suited for internal use than the _IsValid property described earlier.

void AllocPointer(int _size);

This is a helper method for allocating the structure pointer. Usually the value of the _StructureSize field is passed as the argument, but that is not a requirement. The method allocates memory for the structure pointer field _Pointer, sets up the destructor and sets the allocation flag of the object, _IsAllocated.

if (!base._EnsurePointer(false))

{

base.AllocPointer(_StructureSize);

}

Other protected methods of the class are described in objects management or memory management topics.

As already described, the structure pointer in the AVBase class points to a real FFmpeg structure. But each structure can be allocated and freed with different FFmpeg APIs. Moreover, once a structure has been created and initialized, additional destruction or uninitialization methods may need to be called before the object is actually freed. For example, these are the AVCodecContext allocation and free APIs:

AVCodecContext *avcodec_alloc_context3(const AVCodec *codec);

void avcodec_free_context(AVCodecContext **avctx);

At the same time the AVPacket object, along with its allocation and free APIs, has an API for unreferencing the underlying buffer, which should be called before freeing the structure.

void av_packet_unref(AVPacket *pkt);

For that reason, the AVBase class holds pointers to the APIs which perform structure destruction and free the actual structure memory:

typedef void TFreeFN(void *);

typedef void TFreeFNP(void **);

TFreeFN * m_pFree;

TFreeFN * m_pDescructor;

TFreeFNP * m_pFreep;

There are two types of free API pointers because different FFmpeg structures take different arguments in the APIs that free and properly destroy the underlying object. If one of them is set, the other one is not used: the two pointers are mutually exclusive. The destructor API, if set, is called before the API that frees the structure. Here is how those pointers are initialized internally for the AVPacket class:

FFmpeg::AVPacket::AVPacket()

: AVBase(nullptr,nullptr)

, m_pOpaque(nullptr)

, m_pFreeCB(nullptr)

{

m_pPointer = av_packet_alloc();

m_pFreep = (TFreeFNP*)av_packet_free;

m_pDescructor = (TFreeFN*)av_packet_unref;

av_init_packet((::AVPacket*)m_pPointer);

((::AVPacket*)m_pPointer)->data = nullptr;

((::AVPacket*)m_pPointer)->size = 0;

}

Those pointers can be changed internally depending on which object methods are called. So, in some cases an object is given a different free function pointer or a different destructor depending on its state. This allows the object to properly destroy allocated data and clean up used memory.
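The release order (destructor first, then exactly one of the two free functions) can be sketched in plain C++. This is a simplified model under my own assumptions, not the AVBase implementation: `NativeHandle`, `Packet` and the `packet_*` functions are hypothetical. Unlike the listing above, which casts the native functions to the pointer types, the demo functions here are declared with the exact signatures so that no function pointer cast is needed.

```cpp
using FreeFn  = void(void*);    // frees a structure given its pointer
using FreepFn = void(void**);   // frees a structure and nulls the caller's pointer

// Owns a native pointer together with the functions that release it.
class NativeHandle {
public:
    NativeHandle(void* p, FreeFn* destructor, FreeFn* free_fn, FreepFn* freep_fn)
        : ptr_(p), destructor_(destructor), free_(free_fn), freep_(freep_fn) {}
    ~NativeHandle() { reset(); }
    NativeHandle(const NativeHandle&) = delete;
    NativeHandle& operator=(const NativeHandle&) = delete;

    void* get() const { return ptr_; }

    void reset() {
        if (ptr_ == nullptr) return;
        if (destructor_) destructor_(ptr_);   // release inner resources first
        if (freep_) freep_(&ptr_);            // pointer-to-pointer style free...
        else if (free_) free_(ptr_);          // ...or plain-pointer style, never both
        ptr_ = nullptr;
    }

private:
    void*    ptr_;
    FreeFn*  destructor_;
    FreeFn*  free_;
    FreepFn* freep_;
};

// Hypothetical native API used only to exercise the handle.
struct Packet { int* data; };
static int g_unref_calls = 0;
static int g_free_calls = 0;

void* packet_alloc() { return new Packet{nullptr}; }

void packet_unref(void* p) {               // drops the inner buffer only
    Packet* pkt = static_cast<Packet*>(p);
    delete pkt->data;
    pkt->data = nullptr;
    ++g_unref_calls;
}

void packet_free(void** pp) {              // frees the structure itself
    delete static_cast<Packet*>(*pp);
    *pp = nullptr;
    ++g_free_calls;
}
```

Constructing `NativeHandle h(p, packet_unref, nullptr, packet_free)` mirrors the AVPacket case: the buffer is unreferenced first and the structure is then freed through the pointer-to-pointer function.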

Each wrapper library object can belong to another object, just as an underlying structure field can contain a pointer to another FFmpeg structure. For example, the AVCodecContext structure contains the codec field which points to an AVCodec structure, the av_class field which points to an AVClass structure, and others; AVFormatContext has similar fields: iformat, oformat, pb and others. Moreover, structure fields may not be read-only and can be changed by the user, so the code needs to handle that. The related properties are designed as follows:

public ref class AVFormatContext : public AVBase

{

public:

static property AVClass^ Class { AVClass^ get(); }

protected:

AVIOInterruptDesc^ m_pInterruptCB;

internal:

AVFormatContext(void * _pointer,AVBase^ _parent);

public:

AVFormatContext();

public:

property AVClass^ av_class { AVClass^ get(); }

property AVInputFormat^ iformat { AVInputFormat^ get(); void set(AVInputFormat^); }

property AVOutputFormat^ oformat { AVOutputFormat^ get(); void set(AVOutputFormat^); }

Internally, the child object of such a structure is created without owning any allocated data, since initially the object is part of the parent. So disposing of that object makes no sense. But if such an object is set by the user, which means it was originally allocated as an independent object, then the parent holds a reference to the child until it is replaced via the property or the parent itself is released. In that case, disposing of the child object does not free the actual object; it just decrements the internal reference counter. In this manner, one object can be created and set via a property on different parents, and in all cases that single object is used without copying and is disposed automatically once all parents are freed. In other words, the child object is actually disposed only when it no longer belongs to any parent. So an object can be created manually, created from a pointer as part of a parent object, or set as a pointer property of another object, and in every case it is released properly. This is handled by two reference counters: one is used for in-object access and the other for calls from outside the object. Once both reach zero, the object is freed.
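The sharing behavior can be illustrated with a small plain C++ sketch. It deliberately simplifies the design to a single reference counter instead of the two counters described above, and all names (`RefCounted`, `Format`, `Context`, `g_live_formats`) are hypothetical.

```cpp
// Minimal intrusive reference counting; the creator holds the first reference.
class RefCounted {
public:
    void add_ref() { ++refs_; }
    // Drops one reference; deletes the object when the last one is gone.
    void release() { if (--refs_ == 0) delete this; }
protected:
    RefCounted() : refs_(1) {}
    virtual ~RefCounted() {}
private:
    int refs_;
};

static int g_live_formats = 0;  // counts living Format objects for the demo

// Hypothetical child object that can be shared by several parents.
class Format : public RefCounted {
public:
    static Format* create() { return new Format(); }
protected:
    Format() { ++g_live_formats; }
    ~Format() override { --g_live_formats; }
};

// Hypothetical parent with a settable child property.
class Context {
public:
    Context() : format_(nullptr) {}
    ~Context() { set_format(nullptr); }
    Context(const Context&) = delete;
    Context& operator=(const Context&) = delete;

    void set_format(Format* f) {
        if (f) f->add_ref();              // take the new reference first
        if (format_) format_->release();  // then drop the previous child
        format_ = f;
    }
    Format* format() const { return format_; }

private:
    Format* format_;
};
```

When the creator releases its own reference, which corresponds to calling Dispose on the child, the object stays alive as long as at least one parent still refers to it.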

Most child objects are created when the program accesses the property for the first time. The object is then put into the children collection, and if the program accesses the same property again, the previously created object from that collection is returned, so no new instance is created. There are helper templates in the AVBase class for child instance creation:

internal:

template <class T>

static T^ _CreateChildObject(const void * p, AVBase^ _parent)

{ return _CreateChildObject((void*)p,_parent); }

template <class T>

static T^ _CreateChildObject(void * p,AVBase^ _parent) {

if (p == nullptr) return nullptr;

T^ o = (_parent != nullptr ? (T^)_parent->GetObject((IntPtr)p) : nullptr);

if (o == nullptr) o = gcnew T(p,_parent); return o;

}

template <class T>

T^ _CreateObject(const void * p) { return _CreateChildObject<T>(p,this); }

template <class T>

T^ _CreateObject(void * p) { return _CreateChildObject<T>(p,this); }

The children collection is freed once the main object is released. The class contains helper methods for accessing child objects in the collection:

protected:

AVBase^ GetObject(IntPtr p);

bool AddObject(IntPtr p,AVBase^ _object);

bool AddObject(AVBase^ _object);

void RemoveObject(IntPtr p);

The most interesting method here is AddObject, which associates a child AVBase object with a specified pointer. If that pointer is already associated with another object, that object is replaced and disposed.
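A pointer-keyed child cache of this kind can be sketched in plain C++ as follows. The names `Wrapper`, `StreamWrapper`, `child`, `add_child` and `remove_child` are hypothetical, and `std::shared_ptr` stands in for managed references; this is an illustration of the get-or-create idea, not the library's code.

```cpp
#include <cstddef>
#include <map>
#include <memory>
#include <utility>

class Wrapper {
public:
    explicit Wrapper(void* native) : native_(native) {}
    virtual ~Wrapper() {}
    void* native() const { return native_; }

    // Return the cached wrapper for `p`, creating it on first access.
    template <class T>
    std::shared_ptr<T> child(void* p) {
        if (p == nullptr) return nullptr;
        auto it = children_.find(p);
        if (it != children_.end()) return std::static_pointer_cast<T>(it->second);
        auto created = std::make_shared<T>(p);
        children_[p] = created;
        return created;
    }

    // Associate `object` with `p`; a previous association is replaced and its
    // reference dropped.
    void add_child(void* p, std::shared_ptr<Wrapper> object) {
        children_[p] = std::move(object);
    }
    void remove_child(void* p) { children_.erase(p); }
    std::size_t child_count() const { return children_.size(); }

private:
    void* native_;
    std::map<void*, std::shared_ptr<Wrapper>> children_;  // freed with the parent
};

// Hypothetical child wrapper type.
class StreamWrapper : public Wrapper {
public:
    explicit StreamWrapper(void* p) : Wrapper(p) {}
};
```

Two accesses with the same native pointer return the same wrapper instance, which is what keeps property reads cheap and object identity stable.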

Each AVBase object can hold allocated memory for certain properties, internal data or other needs. That memory is stored in a named collection belonging to the object. If a memory pointer is recreated, it is replaced in the collection. Once the object is freed, all of its allocated memory is freed as well.

Generic::SortedList<String^,IntPtr>^ m_ObjectMemory;

There are a couple of methods for memory manipulation in the class:

IntPtr GetMemory(String^ _key);

void SetMemory(String^ _key,IntPtr _pointer);

IntPtr AllocMemory(String^ _key,int _size);

IntPtr AllocString(String^ _key,String^ _value);

IntPtr AllocString(String^ _key,String^ _value,bool bUnicode);

void FreeMemory(String^ _key);

bool IsAllocated(String^ _key);

Those methods help to allocate and free memory and to check whether it is allocated. Access by name makes it possible to control the allocation for a specific property:

void FFmpeg::AVCodecContext::subtitle_header::set(array<byte>^ value)

{

if (value != nullptr && value->Length > 0)

{

((::AVCodecContext*)m_pPointer)->subtitle_header_size = value->Length;

((::AVCodecContext*)m_pPointer)->subtitle_header =

(uint8_t *)AllocMemory("subtitle_header",value->Length).ToPointer();

Marshal::Copy(value,0,

(IntPtr)((::AVCodecContext*)m_pPointer)->subtitle_header,value->Length);

}

else

{

FreeMemory("subtitle_header");

((::AVCodecContext*)m_pPointer)->subtitle_header_size = 0;

((::AVCodecContext*)m_pPointer)->subtitle_header = nullptr;

}

}
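
The idea of a named memory collection can be sketched in standard C++ as follows. The class NamedMemory is hypothetical and uses malloc/free instead of the FFmpeg allocator; it only shows that allocating under an existing key releases the previous block and that everything is released with the owner.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <map>
#include <string>

// Sketch of a per-object store of named allocations (hypothetical class).
class NamedMemory {
public:
    NamedMemory() = default;
    NamedMemory(const NamedMemory&) = delete;
    NamedMemory& operator=(const NamedMemory&) = delete;
    // Everything still registered is released together with the owner.
    ~NamedMemory() {
        for (auto& entry : blocks_) std::free(entry.second);
    }

    // Allocates `size` bytes under `key`, releasing any block already stored there.
    void* alloc(const std::string& key, std::size_t size) {
        assert(size > 0);
        release(key);
        void* p = std::malloc(size);
        if (p != nullptr) blocks_[key] = p;
        return p;
    }
    // Returns the block stored under `key`, or nullptr if there is none.
    void* get(const std::string& key) const {
        auto it = blocks_.find(key);
        return it == blocks_.end() ? nullptr : it->second;
    }
    bool is_allocated(const std::string& key) const { return blocks_.count(key) != 0; }
    // Frees the block stored under `key`; does nothing for an unknown key.
    void release(const std::string& key) {
        auto it = blocks_.find(key);
        if (it == blocks_.end()) return;
        std::free(it->second);
        blocks_.erase(it);
    }
    std::size_t count() const { return blocks_.size(); }

private:
    std::map<std::string, void*> blocks_;
};
```

With such a store, a property setter like the one above only needs to remember the key ("subtitle_header" in that case) and does not have to track the previous pointer itself.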


Memory is allocated with the av_malloc API of the FFmpeg library. There is also a static collection of allocated memory. That collection is used by the static memory allocation methods provided by the AVBase class:

IntPtr AllocMemory(String^ _key,int _size);

IntPtr AllocString(String^ _key,String^ _value);

IntPtr AllocString(String^ _key,String^ _value,bool bUnicode);

void FreeMemory(String^ _key);

bool IsAllocated(String^ _key);

The static memory collection is located in a special AVMemory class. That class is the base both for AVMemPtr, which represents a pointer to allocated memory, and for AVBase, which is the base for all imported structures and, as mentioned, may require memory allocation. Static memory is freed automatically once all objects which may use it are disposed.

In FFmpeg structures, there are a lot of fields which represent arrays of data of different types. Those arrays can be of fixed size, terminated by a specified value, arrays of arrays or arrays of other structures. Each kind can be implemented individually, but there are also some common implementations. For example, the array of streams in the format context is implemented as its own class with an enumerator and an indexed property.

ref class AVStreams

: public System::Collections::IEnumerable

, public System::Collections::Generic::IEnumerable<AVStream^>

{

private:

ref class AVStreamsEnumerator

: public System::Collections::IEnumerator

, public System::Collections::Generic::IEnumerator<AVStream^>

{

protected:

AVStreams^ m_pParent;

int m_nIndex;

public:

AVStreamsEnumerator(AVStreams^ streams);

~AVStreamsEnumerator();

public:

virtual bool MoveNext();

virtual property AVStream^ Current { AVStream^ get (); }

virtual void Reset();

virtual property Object^ CurrentObject

{ virtual Object^ get() sealed = IEnumerator::Current::get; }

};

protected:

AVFormatContext^ m_pParent;

internal:

AVStreams(AVFormatContext^ _parent) : m_pParent(_parent) {}

public:

property AVStream^ default[int] { AVStream^ get(int index)

{ return m_pParent->GetStream(index); } }

property int Count { int get() { return m_pParent->nb_streams; } }

public:

virtual System::Collections::IEnumerator^ GetEnumerator() sealed =

System::Collections::IEnumerable::GetEnumerator

{ return gcnew AVStreamsEnumerator(this); }

public:

virtual System::Collections::Generic::IEnumerator<AVStream^>^

GetEnumeratorGeneric() sealed =

System::Collections::Generic::IEnumerable<AVStream^>::GetEnumerator

{

return gcnew AVStreamsEnumerator(this);

}

};

In that case, we have an array of pointers to another structure class, AVStream, and each call of the indexed property creates a child object of the parent AVFormatContext object, as described in the object management topic.

FFmpeg::AVStream^ FFmpeg::AVFormatContext::GetStream(int idx)

{

if (((::AVFormatContext*)m_pPointer)->nb_streams <=

(unsigned int)idx || idx < 0) return nullptr;

auto p = ((::AVFormatContext*)m_pPointer)->streams[idx];

return _CreateObject<AVStream>((void*)p);

}

In .NET, that looks just like regular property and array access:

var _input = AVFormatContext.OpenInputFile(@"test.avi");

if (_input.FindStreamInfo() == 0)

{

for (int i = 0; i < _input.streams.Count; i++)

{

Console.WriteLine("Stream: {0} {1}",i,_input.streams[i].codecpar.codec_type);

}

}

There are also cases where you need to set an array of AVBase objects as a property. In that situation, each object is put into the parent object's collection, so that object can be disposed safely. Along with the main structure array, the property which holds the count of objects in that array is modified internally. The previously allocated objects and the array memory itself are also freed. The next code shows how that is done:

void FFmpeg::AVFormatContext::chapters::set(array<AVChapter^>^ value)

{

{

int nCount = ((::AVFormatContext*)m_pPointer)->nb_chapters;

::AVChapter ** p = (::AVChapter **)((::AVFormatContext*)m_pPointer)->chapters;

if (p)

{

while (nCount-- > 0)

{

RemoveObject((IntPtr)*p++);

}

}

((::AVFormatContext*)m_pPointer)->nb_chapters = 0;

((::AVFormatContext*)m_pPointer)->chapters = nullptr;

FreeMemory("chapters");

}

if (value != nullptr && value->Length > 0)

{

::AVChapter ** p = (::AVChapter **)AllocMemory

("chapters",value->Length * (sizeof(::AVChapter*))).ToPointer();

if (p)

{

((::AVFormatContext*)m_pPointer)->chapters = p;

((::AVFormatContext*)m_pPointer)->nb_chapters = value->Length;

for (int i = 0; i < value->Length; i++)

{

// store the native pointer first, so that the child is registered under it

*p = (::AVChapter*)value[i]->_Pointer.ToPointer();

AddObject((IntPtr)*p++,value[i]);

}

}

}

}

In most cases with read-only arrays, where array values do not need to be changed, the property returns a simple .NET array:

array<FFmpeg::AVSampleFormat>^ FFmpeg::AVCodec::sample_fmts::get()

{

List<AVSampleFormat>^ _array = nullptr;

const ::AVSampleFormat * _pointer = ((::AVCodec*)m_pPointer)->sample_fmts;

if (_pointer)

{

_array = gcnew List<AVSampleFormat>();

while (*_pointer != -1)

{

_array->Add((AVSampleFormat)*_pointer++);

}

}

return _array != nullptr ? _array->ToArray() : nullptr;

}

Such arrays are used in the regular .NET way:

var codec = AVCodec.FindDecoder(AVCodecID.MP3);

Console.Write("{0} Formats [ ",codec.long_name);

foreach (var fmt in codec.sample_fmts)

{

Console.Write("{0} ",fmt);

}

Console.WriteLine("]");
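
The conversion of a value-terminated native array into a managed one follows a simple pattern, which can be sketched in standard C++. The helper name copy_terminated is an assumption for illustration; unlike the property above, it returns an empty container rather than a null reference when the native pointer is null.

```cpp
#include <cassert>
#include <vector>

// Copies a C array that ends with `terminator` into a vector.
// A null pointer yields an empty vector.
template <typename T>
std::vector<T> copy_terminated(const T* p, T terminator) {
    std::vector<T> out;
    if (p == nullptr) return out;
    while (*p != terminator) out.push_back(*p++);
    return out;
}
```

The caller has to know the terminator used by the specific field; for the sample format list shown above it is -1.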

For other cases of array access, the wrapper library implements the base class AVArrayBase. It is inherited from the AVBase class and accesses memory chunks with a specified item size. The class also holds the number of elements in the array.

public ref class AVArrayBase : public AVBase

{

protected:

bool m_bValidate;

int m_nItemSize;

int m_nCount;

protected:

AVArrayBase(void * _pointer,AVBase^ _parent,int nItemSize,int nCount)

: AVBase(_pointer,_parent) , m_nCount(nCount),

m_nItemSize(nItemSize), m_bValidate(true) { }

AVArrayBase(void * _pointer,AVBase^ _parent,

int nItemSize,int nCount,bool bValidate)

: AVBase(_pointer,_parent) , m_nCount(nCount),

m_nItemSize(nItemSize), m_bValidate(bValidate) { }

protected:

void ValidateIndex(int index) { if (index < 0 || index >= m_nCount)

throw gcnew ArgumentOutOfRangeException(); }

void * GetValue(int index)

{

if (m_bValidate) ValidateIndex(index);

return (((LPBYTE)m_pPointer) + m_nItemSize * index);

}

void SetValue(int index,void * value)

{

if (m_bValidate) ValidateIndex(index);

memcpy(((LPBYTE)m_pPointer) + m_nItemSize * index,value,m_nItemSize);

}

public:

property int Count { int get() { return m_nCount; } }

};

That class is the untyped base. The main template for typed arrays is the AVArray class. It is inherited from AVArrayBase and provides an indexed property for the array elements. Along with it, the class supports enumerators. This class has specializations for some types, like AVMemPtr and IntPtr, to give proper access to array elements. The template classes are used in most cases in the library, as they give direct access to the underlying array memory without any .NET marshaling or copying. For example, the class AVFrame/AVPicture has the properties:

property AVArray<AVMemPtr^>^ data { AVArray<AVMemPtr^>^ get(); }

property AVArray<int>^ linesize { AVArray<int>^ get(); }

In turn, the AVMemPtr class can access the memory directly through its properties, as arrays of different types, which helps with modifying or reading data. The next example shows how to access the frame data:

var frame = AVFrame.FromImage((Bitmap)Bitmap.FromFile(@"image.jpg"),

AVPixelFormat.YUV420P);

for (int j = 0; j < frame.height; j++)

{

for (int i = 0; i < frame.linesize[0]; i++)

{

Console.Write("{0:X2}",frame.data[0][i + j * frame.linesize[0]]);

}

Console.WriteLine();

}

frame.Dispose();
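
A typed, bounds-checked view over raw memory, in the spirit of AVArrayBase and AVArray, can be sketched in standard C++ as shown below. ArrayView is a hypothetical name; the sketch always validates the index, while the wrapper class makes validation optional.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <stdexcept>
#include <type_traits>

// Typed view over memory owned by someone else; nothing is copied on creation.
template <typename T>
class ArrayView {
    static_assert(std::is_trivially_copyable<T>::value, "items are copied as raw bytes");

public:
    ArrayView(void* memory, std::size_t count)
        : base_(static_cast<unsigned char*>(memory)), count_(count) {
        assert(memory != nullptr || count == 0);
    }
    std::size_t count() const { return count_; }

    // Reads item `index` directly from the underlying memory.
    T get(std::size_t index) const {
        check(index);
        T value;
        std::memcpy(&value, base_ + index * sizeof(T), sizeof(T));
        return value;
    }
    // Writes item `index` directly into the underlying memory.
    void set(std::size_t index, const T& value) {
        check(index);
        std::memcpy(base_ + index * sizeof(T), &value, sizeof(T));
    }

private:
    void check(std::size_t index) const {
        if (index >= count_) throw std::out_of_range("ArrayView index");
    }
    unsigned char* base_;
    std::size_t count_;
};
```

Two views of different item types can be created over the same buffer, which is how one memory block can be read as bytes, shorts or floats without copying.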

Initially, all properties in the wrapper library which operate with pointers were designed with the IntPtr type. But working with memory in .NET directly through IntPtr is not easy, and an application may need to change picture data, generate an image or perform some processing of audio and video. To make that easier, the AVMemPtr memory helper class was introduced. It also contains an implicit casting operator, which allows it to be used in the same methods that take an IntPtr argument in .NET. The basic usage of this class as a regular IntPtr value is demonstrated in the next C# example:

[DllImport("msvcrt.dll", EntryPoint = "strcpy")]

public static extern IntPtr strcpy(IntPtr dest, IntPtr src);

static void Main(string[] args)

{

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = new AVMemPtr(256);

strcpy(s, p);

Marshal.FreeCoTaskMem(p);

Console.WriteLine("AVMemPtr string: \"{0}\"", Marshal.PtrToStringAnsi(s));

s.Dispose();

}

In this example, we allocate memory holding the given text. After the C++ strcpy API, which performs a zero-terminated string copy, is executed through its .NET wrapper, the text is copied into the pointer allocated by the AVMemPtr object. Finally, we display the string value stored there.

Memory allocation and release in the class are done with the FFmpeg APIs av_malloc and av_free. The class has comparison operators for different types. AVMemPtr can also be cast directly from an IntPtr structure. Along with that, the class can access data as a regular byte array:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = p;

int idx = 0;

Console.Write("AVMemPtr data: ");

while (s[idx] != 0) Console.Write(" {0}",(char)s[idx++]);

Console.Write("\n");

Marshal.FreeCoTaskMem(p);

So we have allocated pointer data as the IntPtr type and easily accessed it directly, byte by byte. AVMemPtr also handles the addition and subtraction operators: they give another AVMemPtr object which points to the address with the resulting offset:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = p;

Console.Write("AVMemPtr data: ");

while ((byte)s != 0) { Console.Write(" {0}", (char)((byte)s)); s += 1; }

Console.Write("\n");

Marshal.FreeCoTaskMem(p);

Each addition or subtraction creates a new instance of AVMemPtr, but internally the base data pointer stays the same, even if the data was allocated by the main instance and that instance is disposed. This is done by counting references to the main instance. For example, this code works correctly:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = new AVMemPtr(256);

strcpy(s, p);

Marshal.FreeCoTaskMem(p);

AVMemPtr s1 = s + 3;

s.Dispose();

Console.WriteLine("AVMemPtr string: \"{0}\"",Marshal.PtrToStringAnsi(s1));

s1.Dispose();
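
One way to get this behaviour in standard C++ is the aliasing constructor of std::shared_ptr: the derived pointer shares the reference count of the whole buffer but stores a different address. The sketch below is an analogy under that assumption; OffsetPtr is a hypothetical class and the wrapper's internal implementation may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <memory>
#include <utility>

// Pointer into a shared buffer: the buffer lives until the last pointer is gone.
class OffsetPtr {
public:
    // Allocates a zero-filled buffer owned jointly by every pointer derived from this one.
    explicit OffsetPtr(std::size_t size)
        : ptr_(static_cast<unsigned char*>(std::calloc(size, 1)),
               [](unsigned char* p) { std::free(p); }) {}

    // Creates a new pointer with an offset; it shares ownership of the same buffer.
    OffsetPtr operator+(std::ptrdiff_t offset) const {
        assert(ptr_ != nullptr);
        // Aliasing constructor: same reference count, different stored address.
        return OffsetPtr(std::shared_ptr<unsigned char>(ptr_, ptr_.get() + offset));
    }
    OffsetPtr operator-(std::ptrdiff_t offset) const { return *this + (-offset); }

    unsigned char* get() const { return ptr_.get(); }
    long owners() const { return ptr_.use_count(); }
    // Gives up this instance's share; the buffer stays alive while others hold it.
    void dispose() { ptr_.reset(); }

    // Two instances are equal when they point to the same address.
    friend bool operator==(const OffsetPtr& a, const OffsetPtr& b) {
        return a.get() == b.get();
    }

private:
    explicit OffsetPtr(std::shared_ptr<unsigned char> p) : ptr_(std::move(p)) {}
    std::shared_ptr<unsigned char> ptr_;
};
```

Disposing the original instance only drops its share of the buffer, so a pointer created with an offset remains usable. Equality is decided by the address and not by object identity.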

In the next code, we can see that the s, s0 and s1 objects are different instances, but the comparison operators determine equality by the data pointer:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = p;

AVMemPtr s1 = s + 1;

AVMemPtr s0 = s1 - 1;

Console.WriteLine(" s {0} s1 {1} s0 {2}", s,s1,s0);

Console.WriteLine(" s == s1 {0}, s == s0 {1},s0 == s1 {2}",

(s == s1),(s == s0),(s0 == s1));

Console.WriteLine(" {0}, {1}, {2}",

object.ReferenceEquals(s,s1), object.ReferenceEquals(s,s0),

object.ReferenceEquals(s0,s1));

Marshal.FreeCoTaskMem(p);

The AVMemPtr class also contains helper methods and properties which may be useful: some determine whether the data is allocated and the size of the buffer in bytes, and a debug helper method dumps the pointer data to a file or Stream.

The main feature of this class is the ability to represent data as arrays of different types. This is done as different properties of AVArray templates:

property AVArray<byte>^ bytes { AVArray<byte>^ get(); }

property AVArray<short>^ shorts { AVArray<short>^ get(); }

property AVArray<int>^ integers { AVArray<int>^ get(); }

property AVArray<float>^ floats { AVArray<float>^ get(); }

property AVArray<double>^ doubles { AVArray<double>^ get(); }

property AVArray<IntPtr>^ pointers { AVArray<IntPtr>^ get(); }

property AVArray<unsigned int>^ uints { AVArray<unsigned int>^ get(); }

property AVArray<unsigned short>^ ushorts { AVArray<unsigned short>^ get(); }

property AVArray<RGB^>^ rgb { AVArray<RGB^>^ get(); }

property AVArray<RGBA^>^ rgba { AVArray<RGBA^>^ get(); }

property AVArray<AYUV^>^ ayuv { AVArray<AYUV^>^ get(); }

property AVArray<YUY2^>^ yuy2 { AVArray<YUY2^>^ get(); }

property AVArray<UYVY^>^ uyvy { AVArray<UYVY^>^ get(); }

So it is easy to fill the data of an AVFrame object, for example, audio samples of IEEE float or signed short type:

var samples = frame.data[0].shorts;

for (int j = 0; j < c.frame_size; j++)

{

samples[2 * j] = (short)(int)(Math.Sin(t) * 10000);

for (int k = 1; k < c.channels; k++)

samples[2 * j + k] = samples[2 * j];

t += tincr;

}

Moreover, as you can see, there are some arrays with specific pixel format structures: RGB, RGBA, AYUV, YUY2 and UYVY. These properties are the intended way to address pixels in those formats, which is described later.

FFmpeg APIs for accessing lists of resources, for example enumerating the existing input or output formats, use a special type of API: iterators. The new API iterates with an opaque argument, while the old one uses the previous element as a reference:

const AVCodec *av_codec_iterate(void **opaque);

And the old one:

AVCodec *av_codec_next(const AVCodec *c);

Initially, the wrapper library was designed to use those APIs in the same manner as they are present in FFmpeg, but later the implementation was changed to use the IEnumerable and IEnumerator interfaces. That made it possible to enumerate with the C# foreach statement instead of calling the API directly:

foreach (AVCodec codec in AVCodec.Codecs)

{

if (codec.IsDecoder()) Console.WriteLine(codec);

}

The code above can be written in a couple of other ways. Most enumerator objects expose a Count property and an indexing operator:

var codecs = AVCodec.Codecs;

for (int i = 0; i < codecs.Count; i++)

{

if (codecs[i].IsDecoder()) Console.WriteLine(codecs[i]);

}

It is also possible to use the old way by calling the API wrapper method:

AVCodec codec = null;

while (null != (codec = AVCodec.Next(codec)))

{

if (codec.IsDecoder()) Console.WriteLine(codec);

}

There is no need to worry whether the FFmpeg libraries expose only one of the APIs av_codec_iterate or av_codec_next. In the wrapper library, all FFmpeg APIs which are marked as deprecated are linked dynamically, so it checks at runtime which API to use. How that is implemented is described later in a separate topic.
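
How an opaque-cursor iterator can be turned into an ordinary collection is sketched below in standard C++. The function item_iterate and the table kItems are hypothetical; they only mimic the calling convention of av_codec_iterate, where the caller keeps a cursor that starts as a null pointer and the function returns null at the end of the list.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical registry standing in for a library's internal table of items.
static const char* const kItems[] = {"alpha", "beta", "gamma"};

// C-style iterator: the cursor is owned by the caller and is opaque to it.
// Here it simply stores the index of the next item.
const char* item_iterate(void** opaque) {
    assert(opaque != nullptr);
    const std::uintptr_t index = reinterpret_cast<std::uintptr_t>(*opaque);
    if (index >= sizeof(kItems) / sizeof(kItems[0])) return nullptr;
    *opaque = reinterpret_cast<void*>(index + 1);
    return kItems[index];
}

// Collects every item, so that callers get a size, indexing and range-based for.
std::vector<const char*> all_items() {
    std::vector<const char*> out;
    void* cursor = nullptr;  // a null cursor means "start from the beginning"
    while (const char* item = item_iterate(&cursor)) out.push_back(item);
    return out;
}
```

Collecting the items once is what makes a Count property and an indexer cheap to provide on top of an API that only knows how to step forward.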

The AVArray classes implemented in the library also expose an enumerator interface:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = new AVMemPtr(256);

strcpy(s, p);

Marshal.FreeCoTaskMem(p);

var array = s.bytes;

foreach (byte c in array)

{

if (c == 0) break;

Console.Write("{0} ",(char)c);

}

Console.Write("\n");

s.Dispose();

In the example above, the data length is known because the memory was allocated while creating the AVMemPtr object. But enumeration may not work when arrays are created dynamically or without size initialization, as the enumerators are then not able to determine the total number of elements. I limit that functionality to avoid out-of-bounds crashes and other exceptions. For example, the next code will not work, as the data size of the AVMemPtr object is 0 due to the conversion from an IntPtr pointer into AVMemPtr; compare it with the previous example:

var p = Marshal.StringToCoTaskMemAnsi("some text");

AVMemPtr s = p;

var array = s.bytes;

foreach (byte c in array)

{

if (c == 0) break;

Console.Write("{0} ",(char)c);

}

Console.Write("\n");

s.Dispose();

In that case, the data of the AVMemPtr object points correctly to the text line, but as the size is unknown, the enumeration is not available.

The wrapper library properly handles enumeration of the data field of the AVPicture/AVFrame classes. That is done with an internal function which detects the size of the object field. So the next code example works correctly:

AVFrame frame = new AVFrame();

frame.Alloc(AVPixelFormat.BGR24, 320, 240);

frame.MakeWritable();

var rgb = frame.data[0].rgb;

foreach (RGB c in rgb)

{

c.Color = Color.AliceBlue;

}

var bmp = frame.ToBitmap();

bmp.Save(@"test.bmp");

bmp.Dispose();

frame.Dispose();

Lots of classes in the library support enumerators. Along with enumerating codecs as in the examples above, enumerator types are used for enumerating input and output formats, parsers, filters, devices and other library resources.

As already mentioned, the AVMemPtr class can provide data access as arrays of some colorspace types like RGB24, RGBA, AYUV, YUY2 or UYVY. Those colorspaces are designed as structures with the ability to access color components. The base class of those structures is AVColor. It contains basic operators, helper properties and methods. For example, it supports assigning a regular color value and overrides the string representation:

AVFrame frame = new AVFrame();

frame.Alloc(AVPixelFormat.BGRA, 320, 240);

frame.MakeWritable();

var rgb = frame.data[0].rgba;

rgb[0].Color = Color.DarkGoldenrod;

Console.Write("{0} {1}",rgb[0].ToString(),rgb[0].Color);

frame.Dispose();

Along with that, the class provides access to color components and implicit conversion into the IntPtr type. Internally, the class also supports colorspace conversions from RGB into YUV and from YUV into RGB.

var ayuv = frame.data[0].ayuv;

ayuv[0].Color = Color.DarkGoldenrod;

Console.Write("{0} {1}",ayuv[0].ToString(),ayuv[0].Color);

In the code above, the same color as in the previous example is set in the AYUV colorspace, so internally it is converted from ARGB into AYUV.

The conversion results differ slightly from the original value, but that is acceptable. By default, the BT.601 conversion matrix is used. It is possible to change the matrix coefficients by calling the SetColorspaceMatrices static method of the AVColor class. Additionally, the class contains static methods for converting colorspace components:

Color c = Color.DarkGoldenrod;

int y = 0, u = 0, v = 0;

int r = 0, g = 0, b = 0;

AVColor.RGBToYUV(c.R,c.G,c.B, ref y, ref u, ref v);

AVColor.YUVToRGB(y,u,v, ref r, ref g, ref b);

Console.Write("src: [R: {0} G: {1} B: {2}] dst: [R: {3} G: {4} B: {5}]",c.R,c.G,c.B,r,g,b);
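
To show why a round trip through YUV can differ slightly from the source color, here is a minimal C++ sketch of an RGB/YUV conversion with the commonly used full-range BT.601 coefficients. It is an assumption that these match the wrapper's defaults; the library may use a limited-range variant or integer arithmetic, and the type and function names below are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstdlib>

struct Rgb { std::uint8_t r, g, b; };
struct Yuv { std::uint8_t y, u, v; };

// Rounds to the nearest integer and clamps into the 0..255 range.
static std::uint8_t clamp_byte(double x) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, std::round(x))));
}

// Full-range BT.601: luma from weighted RGB, chroma centered at 128.
Yuv rgb_to_yuv(Rgb c) {
    return {
        clamp_byte( 0.299    * c.r + 0.587    * c.g + 0.114    * c.b),
        clamp_byte(-0.168736 * c.r - 0.331264 * c.g + 0.5      * c.b + 128.0),
        clamp_byte( 0.5      * c.r - 0.418688 * c.g - 0.081312 * c.b + 128.0)};
}

// Inverse transform of rgb_to_yuv.
Rgb yuv_to_rgb(Yuv c) {
    const double u = c.u - 128.0;
    const double v = c.v - 128.0;
    return {
        clamp_byte(c.y + 1.402 * v),
        clamp_byte(c.y - 0.344136 * u - 0.714136 * v),
        clamp_byte(c.y + 1.772 * u)};
}
```

Each direction rounds to 8 bits per component, so a value that goes to YUV and back can be off by a small amount; that rounding is the source of the small differences mentioned above.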

Keep in mind that a YUY2 or UYVY element controls 2 pixels, so setting the Color property affects both of them. To control each pixel separately, use the “Y0” and “Y1” class properties to change the luma values.
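
The reason is the memory layout of packed 4:2:2 formats: two neighbouring pixels have their own luma but share one pair of chroma values. A minimal C++ sketch of a YUY2 element follows; the struct and function names are hypothetical and only illustrate the layout.

```cpp
#include <cassert>
#include <cstdint>

// One YUY2 element: 4 bytes describe 2 horizontally adjacent pixels.
struct Yuy2 {
    std::uint8_t y0;  // luma of the left pixel
    std::uint8_t u;   // chroma shared by both pixels
    std::uint8_t y1;  // luma of the right pixel
    std::uint8_t v;   // chroma shared by both pixels
};
static_assert(sizeof(Yuy2) == 4, "element must be tightly packed");

// Setting a color writes the shared chroma, so both pixels change.
void set_color(Yuy2& p, std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    p.y0 = y;
    p.y1 = y;
    p.u = u;
    p.v = v;
}

// Only the brightness can be changed per pixel: index 0 is left, 1 is right.
void set_luma(Yuy2& p, int index, std::uint8_t y) {
    assert(index == 0 || index == 1);
    (index == 0 ? p.y0 : p.y1) = y;
}
```

UYVY holds the same four components in the order U, Y0, V, Y1.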

The wrapper library contains lots of classes, each of which, as mentioned, represents one of the FFmpeg structures, exposing its data fields as properties along with related methods. This topic describes most of those classes but not all of them. The descriptions contain basic usage examples of those classes, without describing class fields, as they are the same as in the original FFmpeg libraries. All code samples are workable, but some code parts skip calling the Dispose() method for global objects. It is also required to check the error results of method calls, and you should do it in your code, but in the samples that is skipped to reduce code size.

AVPacket is the wrapper class around the AVPacket structure of the FFmpeg libavcodec library. It has different constructors with the ability to pass your own buffer, along with a callback to detect when the buffer is freed. After every successful return from any decoding or encoding operation, it is required to call the Free method to unreference the underlying buffer. The class contains static methods to create AVPacket objects from .NET arrays of different types. The Dump method allows you to save packet data into a file or stream.

var f = AVFrame.FromImage((Bitmap)Bitmap.FromFile(@"image.jpg"),AVPixelFormat.YUV420P);

var c = new AVCodecContext(AVCodec.FindEncoder(AVCodecID.MPEG2VIDEO));

c.bit_rate = 400000;

c.width = f.width;

c.height = f.height;

c.time_base = new AVRational(1, 25);

c.framerate = new AVRational(25, 1);

c.pix_fmt = f.format;

c.Open(null);

AVPacket pkt = new AVPacket();

bool got_packet = false;

while (!got_packet)

{

c.EncodeVideo(pkt,f,ref got_packet);

}

pkt.Dump(@"pkt.bin");

pkt.Free();

pkt.Dispose();

AVPicture is the class wrapped around the related structure from old versions of the FFmpeg libavcodec library. In the current implementation of the wrapper library, it is supported for all versions of FFmpeg. The class contains methods for the av_image_* APIs of the FFmpeg library, as well as pointers to data and plane sizes. AVFrame inherits from this class, so it is recommended to use AVFrame directly instead.

AVFrame is the class for the AVFrame structure of the FFmpeg libavutil library. It is used as input for encoding audio or video data, or as output from the decoding process. It can handle audio and video data. After every successful return from any decoding or encoding operation, it is required to call the Free method to unreference the underlying buffer.

var _input = AVFormatContext.OpenInputFile(@"test.mp4");

if (_input.FindStreamInfo() == 0) {

int idx = _input.FindBestStream(AVMediaType.VIDEO, -1, -1);

AVCodecContext decoder = _input.streams[idx].codec;

var codec = AVCodec.FindDecoder(decoder.codec_id);

if (decoder.Open(codec) == 0) {

AVPacket pkt = new AVPacket();

AVFrame frame = new AVFrame();

int index = 0;

while ((bool)_input.ReadFrame(pkt)) {

if (pkt.stream_index == idx) {

bool got_frame = false;

int ret = decoder.DecodeVideo(frame, ref got_frame, pkt);

if (got_frame) {

var bmp = AVFrame.ToBitmap(frame);

bmp.Save(string.Format(@"image{0}.png",++index));

bmp.Dispose();

frame.Free();

}

}

pkt.Free();

}

}

}

Frame objects can be initialized from an existing .NET Bitmap object. In the code below, the frame is created from the image and converted into the YUV 420 planar colorspace format.

var frame = AVFrame.FromImage((Bitmap)Bitmap.FromFile(@"image.jpg"),

AVPixelFormat.YUV420P);

It is also possible to wrap around existing bitmap data. In that case, there is no need to specify the target pixel format as the second argument. The pixel format of such frame data will be BGRA, and the image data will not be copied into a newly allocated buffer. Internally, however, a child class object for the wrapped image data is created, which is freed once the actual frame is disposed.

There are also some helper methods which can be used for converting video frames:

var src = AVFrame.FromImage((Bitmap)Bitmap.FromFile(@"image.jpg"));

var frame = AVFrame.ConvertVideoFrame(src,AVPixelFormat.YUV420P);

And conversion back into .NET Bitmap object:

var bmp = AVFrame.ToBitmap(frame);

bmp.Save(@"image.png");

AVCodec implements managed access to the AVCodec structure of the FFmpeg libavcodec library. As recommended, it exposes only the public fields of the underlying structure. The class describes a codec registered in the library.

var codec = AVCodec.FindEncoder("libx264");

Console.WriteLine("{0}",codec);

The class is able to enumerate existing codecs:

foreach (AVCodec codec in AVCodec.Codecs)

{

Console.WriteLine(codec);

}

AVCodecContext implements managed access to the AVCodecContext structure of the FFmpeg libavcodec library. The object is used for encoding or decoding video and audio data.

var c = new AVCodecContext(AVCodec.FindEncoder("libx264"));

c.bit_rate = 400000;

c.width = 352;

c.height = 288;

c.time_base = new AVRational(1, 25);

c.framerate = new AVRational(25, 1);

c.gop_size = 10;

c.max_b_frames = 1;

c.pix_fmt = AVPixelFormat.YUV420P;

c.Open(null);

Console.WriteLine(c);

In the example above, we create a context object and initialize the H264 video encoder with a resolution of 352x288, 25 frames per second and a 400 kbps bit rate.

The next sample demonstrates opening a file and initializing the decoder for the video stream:

AVFormatContext fmt_ctx;

AVFormatContext.OpenInput(out fmt_ctx, @"test.mp4");

fmt_ctx.FindStreamInfo();

var s = fmt_ctx.streams[fmt_ctx.FindBestStream(AVMediaType.VIDEO)];

var c = new AVCodecContext(AVCodec.FindDecoder(s.codecpar.codec_id));

s.codecpar.CopyTo(c);

c.Open(null);

Console.WriteLine(c);

The class contains methods for initializing the structure and for decoding or encoding audio and video.

AVCodecParser is the class for accessing the AVCodecParser structure of the FFmpeg libavcodec library from managed code. The structure describes registered codec parsers. The class is mostly used for enumerating the parsers registered in the library:

foreach (AVCodecParser parser in AVCodecParser.Parsers)

{

for (int i = 0; i < parser.codec_ids.Length; i++)

{

Console.Write("{0} ", parser.codec_ids[i]);

}

}

AVCodecParserContext is the class for performing bitstream parsing. It is wrapped around the AVCodecParserContext structure of the FFmpeg libavcodec library. It is created for the specified codec and contains different variations of the Parse method.

var decoder = new AVCodecContext(AVCodec.FindDecoder(AVCodecID.MP3));

decoder.sample_fmt = AVSampleFormat.FLTP;

if (decoder.Open(null) == 0) {

var parser = new AVCodecParserContext(decoder.codec_id);

AVPacket pkt = new AVPacket();

AVFrame frame = new AVFrame();

var f = fopen(@"test.mp3", "rb");

const int buffer_size = 1024;

IntPtr data = Marshal.AllocCoTaskMem(buffer_size);

int data_size = fread(data, 1, buffer_size, f);

while (data_size > 0) {

int ret = parser.Parse(decoder, pkt, data, data_size);

if (ret < 0) break;

data_size -= ret;

if (pkt.size > 0) {

bool got_frame = false;

decoder.DecodeAudio(frame,ref got_frame,pkt);

if (got_frame) {

var p = frame.data[0].floats;

for (int i = 0; i < frame.nb_samples; i++) {

Console.Write((char)(((p[i] + 1.0) * 54) + 32));

}

frame.Free();

}

pkt.Free();

}

memmove(data, data + ret, data_size);

data_size += fread(data + data_size, 1, buffer_size - data_size, f);

}

Marshal.FreeCoTaskMem(data);

fclose(f);

}

The example demonstrates reading data from a raw MP3 file, parsing it in chunks, decoding it and printing the clamped sample data to the output. The example uses wrappers of the unmanaged APIs fopen, fread, fclose and memmove. Declarations of such functions can be found in other samples in this document.
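
The buffer handling in that loop (consume some bytes from the front, move the remainder to the beginning, refill the free tail) is independent of FFmpeg and can be sketched in standard C++. The names pump, ReadFn and ConsumeFn are hypothetical. Unlike the sample above, the sketch also stops when the consumer makes no progress, to avoid looping forever.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <functional>
#include <vector>

// read(dst, max_bytes) returns the number of bytes produced, 0 at end of input.
using ReadFn = std::function<std::size_t(unsigned char* dst, std::size_t max_bytes)>;
// consume(src, size) returns how many leading bytes were used, negative on error.
using ConsumeFn = std::function<int(const unsigned char* src, std::size_t size)>;

// Feeds the whole input through a fixed-size buffer; returns the bytes consumed.
std::size_t pump(const ReadFn& read, const ConsumeFn& consume, std::size_t capacity) {
    assert(capacity > 0);
    std::vector<unsigned char> buffer(capacity);
    std::size_t size = read(buffer.data(), capacity);
    std::size_t total = 0;
    while (size > 0) {
        const int used = consume(buffer.data(), size);
        if (used <= 0) break;  // error, or no progress was made
        assert(static_cast<std::size_t>(used) <= size);
        total += static_cast<std::size_t>(used);
        size -= static_cast<std::size_t>(used);
        // Move the unconsumed tail to the front of the buffer.
        std::memmove(buffer.data(), buffer.data() + used, size);
        // Top the buffer up with fresh input.
        size += read(buffer.data() + size, capacity - size);
    }
    return total;
}
```

In the MP3 sample, the role of consume is played by the Parse call and the role of read by fread.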

AVRational implements managed access to the AVRational structure of the FFmpeg libavutil library. It contains all methods which are related to that structure.

AVRational r = new AVRational(25,1);

Console.WriteLine("{0}, {1}",r.ToString(),r.inv_q().ToString());

AVFormatContext implements managed access to the AVFormatContext structure of the FFmpeg libavformat library. The class contains methods for operating with output or input media data.

var ic = AVFormatContext.OpenInputFile(@"test.mp4");

ic.DumpFormat(0,null,false);

The most common use case of the class is getting audio or video packets from input or writing such packets into output. The next sample demonstrates reading MP3 audio packets from an AVI file and saving them into a separate file.

var ic = AVFormatContext.OpenInputFile(@"1.avi");

if (0 == ic.FindStreamInfo()) {

int idx = ic.FindBestStream(AVMediaType.AUDIO);

if (idx >= 0

&& ic.streams[idx].codecpar.codec_id == AVCodecID.MP3) {

AVPacket pkt = new AVPacket();

bool append = false;

while (ic.ReadFrame(pkt) == 0) {

if (pkt.stream_index == idx) {

pkt.Dump(@"out.mp3",append);

append = true;

}

pkt.Free();

}

pkt.Dispose();

}

}

ic.Dispose();

Another main task, as mentioned, is outputting packets. The next example demonstrates writing a static picture to an MP4 file with H264 encoding for 10 seconds:

string filename = @"test.mp4";

var oc = AVFormatContext.OpenOutput(filename);

var frame = AVFrame.FromImage((Bitmap)Bitmap.FromFile(@"image.jpg"),

AVPixelFormat.YUV420P);

var codec = AVCodec.FindEncoder(AVCodecID.H264);

var ctx = new AVCodecContext(codec);

var st = oc.AddStream(codec);

st.id = oc.nb_streams-1;

st.time_base = new AVRational( 1, 25 );

ctx.bit_rate = 400000;

ctx.width = frame.width;

ctx.height = frame.height;

ctx.time_base = st.time_base;

ctx.framerate = ctx.time_base.inv_q();

ctx.pix_fmt = frame.format;

ctx.Open(null);

st.codecpar.FromContext(ctx);

if ((int)(oc.oformat.flags & AVfmt.NOFILE) == 0) {

oc.pb = new AVIOContext(filename,AvioFlag.WRITE);

}

oc.DumpFormat(0, filename, true);

oc.WriteHeader();

AVPacket pkt = new AVPacket();

int idx = 0;

bool flush = false;

while (true) {

flush = (idx > ctx.framerate.num * 10 / ctx.framerate.den);

frame.pts = idx++;

bool got_packet = false;

if (0 > ctx.EncodeVideo(pkt, flush ? null : frame, ref got_packet)) break; // negative result means error

if (got_packet) {

pkt.RescaleTS(ctx.time_base, st.time_base);

oc.WriteFrame(pkt);

pkt.Free();

continue;

}

if (flush) break;

}

oc.WriteTrailer();

pkt.Dispose();

frame.Dispose();

ctx.Dispose();

oc.Dispose();

And the application output results:

AVIOContext implements managed access to the AVIOContext structure of the FFmpeg libavformat library. The class contains helper methods for writing and reading data from byte streams. The most interesting usage of this class is the ability to set up custom reading or writing callbacks. To demonstrate how to use those features, let's modify the previous code sample by replacing the AVIOContext creation code:

AVMemPtr ptr = new AVMemPtr(20 * 1024);

OutputFile file = new OutputFile(filename);

if ((int)(oc.oformat.flags & AVfmt.NOFILE) == 0) {

oc.pb = new AVIOContext(ptr,1,file,null,OutputFile.WritePacket,OutputFile.Seek);

}

So now we create a context with write and seek callbacks defined. Those callbacks are defined in the OutputFile class of the example:

class OutputFile

{

IntPtr stream = IntPtr.Zero;

public OutputFile(string filename) {

stream = fopen(filename, "w+b");

}

~OutputFile() {

IntPtr s = Interlocked.Exchange(ref stream, IntPtr.Zero);

if (s != IntPtr.Zero) fclose(s);

}

public static int WritePacket(object opaque, IntPtr buf, int buf_size) {

return fwrite(buf, 1, buf_size, (opaque as OutputFile).stream);

}

public static int Seek(object opaque, long offset, AVSeek whence) {

return fseek((opaque as OutputFile).stream, (int)offset, (int)whence);

}

[DllImport("msvcrt.dll", EntryPoint = "fopen")]

public static extern IntPtr fopen(

[MarshalAs(UnmanagedType.LPStr)] string filename,

[MarshalAs(UnmanagedType.LPStr)] string mode);

[DllImport("msvcrt.dll")]

public static extern int fwrite(IntPtr buffer, int size, int count, IntPtr stream);

[DllImport("msvcrt.dll")]

public static extern int fclose(IntPtr stream);

[DllImport("msvcrt.dll")]

public static extern int fseek(IntPtr stream, int offset, int origin); // the C long used by msvcrt is 32-bit

}

By running the sample, you get the same output file and the same results as in the previous topic, but now you can control each write and seek of the data.
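
The callback mechanism itself is a common C pattern: the producer receives a function pointer together with an opaque pointer and hands that pointer back on every call, so the callback can find its own state. Below is a minimal C++ sketch of the pattern. The WriteCallback type, the write_all function and the MemorySink struct are hypothetical and do not call FFmpeg; the callback signature only mirrors the WritePacket method shown above.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Callback shape assumed for this sketch.
using WriteCallback = int (*)(void* opaque, const std::uint8_t* buf, int buf_size);

// User-side sink: appends everything it receives to a vector.
struct MemorySink {
    std::vector<std::uint8_t> bytes;

    static int write(void* opaque, const std::uint8_t* buf, int buf_size) {
        auto* self = static_cast<MemorySink*>(opaque);  // recover our own state
        self->bytes.insert(self->bytes.end(), buf, buf + buf_size);
        return buf_size;  // number of bytes accepted
    }
};

// Hypothetical producer standing in for the library: it knows nothing about the
// sink type and only forwards the opaque pointer with each chunk of data.
int write_all(const std::uint8_t* data, int size, int chunk,
              void* opaque, WriteCallback callback) {
    assert(chunk > 0 && callback != nullptr);
    int written = 0;
    while (written < size) {
        const int n = (size - written < chunk) ? size - written : chunk;
        const int ret = callback(opaque, data + written, n);
        if (ret <= 0) return ret;  // propagate the error, or stop when nothing is accepted
        written += ret;
    }
    return written;
}
```

Because the sink is selected only by the pair of callback and opaque pointer, the same producer can write to a file, to memory or to a network socket without any change.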

The class also contains some static helper methods. It can check URLs and enumerate supported protocols:

Console.Write("Output Protocols: \n");

var protocols = AVIOContext.EnumProtocols(true);

foreach (var s in protocols) {

Console.Write("{0} ",s);

}

AVOutputFormat implements managed access to the AVOutputFormat structure of the FFmpeg libavformat library. The structure describes the parameters of an output format supported by the libavformat library. The class contains an enumerator to see all available formats:

foreach (AVOutputFormat format in AVOutputFormat.Formats)

{

Console.WriteLine(format.ToString());

}

The class has no public constructor, but it can be accessed with the enumerator or with static methods:

var fmt = AVOutputFormat.GuessFormat(null,"test.mp4",null);

Console.WriteLine(fmt.long_name);

AVInputFormat implements managed access to the AVInputFormat structure of the FFmpeg libavformat library. The structure describes the parameters of an input format supported by the libavformat library. The class also contains an enumerator to see all available formats:

foreach (AVInputFormat format in AVInputFormat.Formats)

{

Console.WriteLine(format.ToString());

}

The class has no public constructor. Instances can be obtained from static methods:

var fmt = AVInputFormat.FindInputFormat("avi");

Console.WriteLine(fmt.long_name);

It is also possible to detect the format of a given buffer, again through static methods:

var f = fopen(@"test.mp4", "rb");

AVProbeData data = new AVProbeData();

data.buf_size = 1024;

data.buf = Marshal.AllocCoTaskMem(data.buf_size);

data.buf_size = fread(data.buf,1,data.buf_size,f);

var fmt = AVInputFormat.ProbeInputFormat(data,true);

Console.WriteLine(fmt.ToString());

Marshal.FreeCoTaskMem(data.buf);

data.Dispose();

fclose(f);

This class implements managed access to the AVStream structure of the FFmpeg libavformat library. The class has no public constructor; instances are accessed through the streams property of AVFormatContext.

var input = AVFormatContext.OpenInputFile(@"test.mp4");

if (input.FindStreamInfo() == 0){

for (int idx = 0; idx < input.streams.Count; idx++)

{

Console.WriteLine("Stream [{0}]: {1}",idx,

input.streams[idx].codecpar.codec_type);

}

}

input.DumpFormat(0,null,false);

Streams can be created with the AVFormatContext AddStream method.

var oc = AVFormatContext.OpenOutput(@"test.mp4");

var codec = AVCodec.FindEncoder(AVCodecID.H264);

var c = new AVCodecContext(codec);

var st = oc.AddStream(codec);

st.id = oc.nb_streams-1;

st.time_base = new AVRational( 1, 25 );

c.codec_id = codec.id;

c.bit_rate = 400000;

c.width = 352;

c.height = 288;

c.time_base = st.time_base;

c.gop_size = 12;

c.pix_fmt = AVPixelFormat.YUV420P;

c.Open(codec);

st.codecpar.FromContext(c);

oc.DumpFormat(0, null, true);

This class implements managed access to the AVDictionary collection type of the FFmpeg libavutil library. It is a name-value collection of strings and provides methods, enumerators and properties for accessing them. The FFmpeg API has no dedicated function for removing a particular entry from the collection, and the wrapper library mirrors that. The AVDictionary class supports enumeration of its key-value entries, and it supports copying its data through the ICloneable interface.

AVDictionary dict = new AVDictionary();

dict.SetValue("Key1","Value1");

dict.SetValue("Key2","Value2");

dict.SetValue("Key3","Value3");

Console.Write("Number of elements: \"{0}\"\nIndex of \"Key2\": \"{1}\"\n",

dict.Count,dict.IndexOf("Key2"));

Console.Write("Keys:\t");

foreach (string key in dict.Keys) {

Console.Write(" \"" + key + "\"");

}

Console.Write("\nValues:\t");

for (int i = 0; i < dict.Values.Count; i++) {

Console.Write(" \"" + dict.Values[i] + "\"");

}

Console.Write("\nValue[\"Key1\"]:\t\"{0}\"\nValue[2]:\t\"{1}\"\n",

dict["Key1"],dict[2]);

AVDictionary cloned = (AVDictionary)dict.Clone();

Console.Write("Cloned Entries: \n");

foreach (AVDictionaryEntry ent in cloned) {

Console.Write("\"{0}\"=\"{1}\"\n", ent.key, ent.value);

}

cloned.Dispose();

dict.Dispose();

The result of executing the code above is:

AVOptions is a helper class that exposes access to the options of library objects. As already mentioned, instances can be created from pointers or from AVBase-derived objects. An example of enumerating the options of an existing object:

var ctx = new AVCodecContext(AVCodec.FindEncoder(AVCodecID.H264));

Console.WriteLine("{0} Options:",ctx);

var options = new AVOptions(ctx);

for (int i = 0; i < options.Count; i++)

{

string value = "";

if (0 == options.get(options[i].name, AVOptSearch.None, out value))

{

Console.WriteLine("{0}=\"{1}\"\t'{2}'",options[i].name,value,options[i].help);

}

}

The class can enumerate option names and option parameters, and it can get or set the option values of an object.

var ctx = new AVCodecContext(AVCodec.FindEncoder(AVCodecID.H264));

var options = new AVOptions(ctx);

string initial = "";

options.get("b", AVOptSearch.None, out initial);

options.set_int("b", 100000, AVOptSearch.None);

string updated = "";

options.get("b", AVOptSearch.None, out updated);

Console.WriteLine("Initial=\"{0}\", Updated=\"{1}\"",initial,updated);

For compatibility with older versions of the wrapper library, some properties of the library classes internally set object options instead of accessing fields directly. The example below shows how this is done for the scale_sws_opts field of AVFilterGraph:

void FFmpeg::AVFilterGraph::scale_sws_opts::set(String^ value)

{

auto p = _CreateObject<AVOptions>(m_pPointer);

p->set("scale_sws_opts",value,AVOptSearch::None);

}

The available options can also be enumerated through the AVClass object of the library:

var options = AVClass.GetCodecClass().option;

for (int i = 0; i < options.Length; i++)

{

Console.WriteLine("\"{0}\"",options[i]);

}

As in FFmpeg, each context structure gives access to its AVClass object, so the following code produces the same results:

var ctx = new AVCodecContext(AVCodec.FindEncoder(AVCodecID.H264));

var options = ctx.av_class.option;

for (int i = 0; i < options.Length; i++)

{

Console.WriteLine("\"{0}\"",options[i]);

}

This class implements managed access to the AVBitStreamFilter type of the FFmpeg libavcodec library. It contains the filter name and the array of codec IDs to which the filter can be applied. All filters can be enumerated:

foreach (AVBitStreamFilter f in AVBitStreamFilter.Filters)

{

Console.WriteLine("{0}",f);

}

A filter can be looked up by name:

var f = AVBitStreamFilter.GetByName("h264_mp4toannexb");

Console.Write("{0} Codecs: ",f);

foreach (AVCodecID id in f.codec_ids)

{

Console.Write("{0} ",id);

}

This class implements managed access to the AVBSFContext structure of the FFmpeg libavcodec library. It is an instance of a bitstream filter and contains the methods for filtering operations.

An example of using the class is given below:

var input = AVFormatContext.OpenInputFile(@"test.mp4");

if (input.FindStreamInfo() == 0) {

int idx = input.FindBestStream(AVMediaType.VIDEO);

var ctx = new AVBSFContext(

AVBitStreamFilter.GetByName("h264_mp4toannexb"));

input.streams[idx].codecpar.CopyTo(ctx.par_in);

ctx.time_base_in = input.streams[idx].time_base;

if (0 == ctx.Init()) {

bool append = false;

AVPacket pkt = new AVPacket();

while (0 == input.ReadFrame(pkt)) {

if (pkt.stream_index == idx) {

ctx.SendPacket(pkt);

}

pkt.Free();

if (0 == ctx.ReceivePacket(pkt)) {

pkt.Dump(@"out.h264",append);

pkt.Free();

append = true;

}

}

pkt.Dispose();

}

ctx.Dispose();

}

The code above converts the H.264 video stream of the opened MP4 file into Annex B format (the format with start codes) and saves the resulting bitstream to a file. The resulting file can be played with VLC media player, the GraphEdit tool, or ffplay.

A hex dump of the file shows that the data is in Annex B format: every NAL unit is preceded by a 00 00 01 or 00 00 00 01 start code.
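The same check can be made programmatically. The sketch below is an illustration that does not use the wrapper: the AnnexB class and its FindNalUnits method are hypothetical names, and the code only relies on two facts of the H.264 byte stream format, namely the 00 00 01 start code prefix and the NAL unit type stored in the low five bits of the byte that follows it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: locates Annex B start codes in an H.264 byte stream.
public static class AnnexB
{
    // Returns (offset of the NAL header byte, nal_unit_type) for each start code.
    public static List<KeyValuePair<int, int>> FindNalUnits(byte[] data)
    {
        var result = new List<KeyValuePair<int, int>>();
        for (int i = 0; i + 3 < data.Length; i++)
        {
            // The 4-byte form 00 00 00 01 ends with the 3-byte form 00 00 01,
            // so matching the short form covers both.
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1)
            {
                int header = i + 3;
                int nalType = data[header] & 0x1F; // low 5 bits of the NAL header
                result.Add(new KeyValuePair<int, int>(header, nalType));
                i += 2; // continue right after the start code
            }
        }
        return result;
    }
}
```

Applied to the bytes of out.h264 (for example via File.ReadAllBytes), a stream that was converted correctly typically begins with NAL types 7 (SPS) and 8 (PPS) followed by slice NAL units such as type 5 (IDR).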

AVChannelLayout is a helper value class for the AV_CH_* audio channel mask definitions from the libavutil library. The class contains all available definitions as public static fields.

The class represents a 64-bit integer value and provides implicit conversion operators. It also contains helper methods that wrap FFmpeg library APIs, and it overrides the string representation of the value.

AVChannelLayout ch = AVChannelLayout.LAYOUT_5POINT1;

Console.WriteLine("{0} Channels: \"{1}\" Description: \"{2}\"",

ch,ch.channels,ch.description);

AVChannelLayout ex = ch.extract_channel(2);

Console.WriteLine("Extracted 2 Channel: {0} Index: \"{1}\" Name: \"{2}\"",

ex,ch.get_channel_index(ex),ex.name);

ch = AVChannelLayout.get_default_channel_layout(7);

Console.WriteLine("Default layout for 7 channels {0} "+

"Channels: \"{1}\" Description: \"{2}\"",

ch,ch.channels,ch.description);

The code above shows the basic operations of the class. All class methods are based on FFmpeg library APIs, so it is easy to understand how to use them. The result of executing the code above:

The wrapper library also has an AVChannels class, a separate static class that exports the FFmpeg APIs for AVChannelLayout.
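Because a channel layout of this kind is a 64-bit mask with one bit per speaker position, the operations demonstrated above reduce to bit arithmetic. The sketch below is an illustration only and does not call the wrapper: the ChannelMask class and its method names are hypothetical, while the bit values are those of the AV_CH_* constants in libavutil's channel_layout.h.

```csharp
using System;

// Hypothetical illustration of AV_CH_* mask arithmetic.
public static class ChannelMask
{
    public const ulong FRONT_LEFT = 0x1, FRONT_RIGHT = 0x2, FRONT_CENTER = 0x4,
                       LOW_FREQUENCY = 0x8, SIDE_LEFT = 0x200, SIDE_RIGHT = 0x400;

    // 5.1 layout: front left/right/center, LFE and the two side channels.
    public const ulong LAYOUT_5POINT1 = FRONT_LEFT | FRONT_RIGHT | FRONT_CENTER |
                                        LOW_FREQUENCY | SIDE_LEFT | SIDE_RIGHT;

    // Number of channels = number of set bits in the mask.
    public static int CountChannels(ulong layout)
    {
        int count = 0;
        while (layout != 0) { layout &= layout - 1; count++; }
        return count;
    }

    // Mask of the index-th channel (0-based, lowest bit first), or 0 if absent.
    public static ulong ExtractChannel(ulong layout, int index)
    {
        for (int bit = 0; bit < 64; bit++)
        {
            ulong mask = 1UL << bit;
            if ((layout & mask) != 0 && index-- == 0) return mask;
        }
        return 0;
    }

    // Position of a single channel inside the layout, or -1 if it is not part of it.
    public static int GetChannelIndex(ulong layout, ulong channel)
    {
        if ((layout & channel) == 0 || CountChannels(channel) != 1) return -1;
        return CountChannels(layout & (channel - 1)); // set bits below the channel bit
    }
}
```

For the 5.1 mask this gives six channels, with channel 2 being the front center and the left side channel sitting at index 4.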

AVPixelFormat is another helper value class, for the libavutil enumeration of the same name. It describes the pixel format of video data. The class contains all formats exposed by the original enumeration and is extended with methods and properties based on FFmpeg APIs. It handles implicit conversion from and to integer types.

AVPixelFormat fmt = AVPixelFormat.YUV420P;

byte[] bytes = BitConverter.GetBytes(fmt.codec_tag);

string tag = "";

for (int i = 0; i < bytes.Length; i++) { tag += (char)bytes[i]; }

Console.WriteLine("Format: {0} Name: {1} Tag: {2} Planes: {3} " +

"Components: {4} Bits Per Pixel: {5}",(int)fmt,

fmt.name,tag,fmt.planes, fmt.format.nb_components,

fmt.format.bits_per_pixel);

fmt = AVPixelFormat.get_pix_fmt("rgb32");

Console.WriteLine("{0} {1}",fmt.name, (int)fmt);

FFLoss loss = FFLoss.NONE;

fmt = AVPixelFormat.find_best_pix_fmt_of_2(AVPixelFormat.RGB24,

AVPixelFormat.RGB32,AVPixelFormat.RGB444BE,false,ref loss);

Console.WriteLine("{0} {1}",fmt.name, (int)fmt);

Executing the code above gives the following result:
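The bits-per-pixel value reported for a format follows from the component depths and the chroma subsampling of that format. The sketch below reproduces the arithmetic by hand and is not the wrapper's code: PixelMath and BitsPerPixel are hypothetical names, and the treatment of components 1 and 2 as the subsampled chroma planes is an assumption modeled on how libavutil describes planar YUV formats.

```csharp
using System;

// Hypothetical illustration of the bits-per-pixel arithmetic.
public static class PixelMath
{
    // depths: bit depth of each component; log2ChromaW/H: chroma subsampling shifts.
    public static int BitsPerPixel(int[] depths, int log2ChromaW, int log2ChromaH)
    {
        int log2Pixels = log2ChromaW + log2ChromaH; // pixels covered by one chroma sample
        int bits = 0;
        for (int c = 0; c < depths.Length; c++)
        {
            // Chroma components contribute once per block, the others once per pixel.
            int shift = (c == 1 || c == 2) ? 0 : log2Pixels;
            bits += depths[c] << shift;
        }
        return bits >> log2Pixels; // average number of bits per single pixel
    }
}
```

With three 8-bit components, 4:2:0 subsampling (both shifts equal to 1) gives 12 bits per pixel, 4:2:2 (shifts 1 and 0) gives 16, and no subsampling gives 24.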

AVSampleFormat is also a helper class for the libavutil enumeration of the same name. It describes the format of audio data. Like the other classes, it handles basic operations and exposes properties and methods implemented through FFmpeg APIs.

AVSampleFormat fmt = AVSampleFormat.FLTP;

Console.WriteLine("Format: {0}, Name: {1}, Planar: {2}, Bytes Per Sample: {3}",

(int)fmt,fmt.name,fmt.is_planar,fmt.bytes_per_sample);

fmt = AVSampleFormat.get_sample_fmt("s16");

var alt = fmt.get_alt_sample_fmt(false);

var pln = fmt.get_planar_sample_fmt();

Console.WriteLine("Format: {0} Alt: {1} Planar: {2}",fmt, alt, pln);

The FFmpeg APIs that operate on AVSampleFormat are also exported in a separate static class, AVSampleFmt.
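The difference between a packed format such as s16 and its planar counterpart is only the memory layout: packed data interleaves the channels sample by sample, while planar data keeps one buffer per channel. The following sketch illustrates this with plain arrays and does not use the wrapper; SampleLayout and ToPlanar are hypothetical names.

```csharp
using System;

// Hypothetical illustration of packed (interleaved) versus planar sample layout.
public static class SampleLayout
{
    // Splits interleaved 16-bit samples (L R L R ...) into one array per channel.
    public static short[][] ToPlanar(short[] interleaved, int channels)
    {
        if (channels <= 0 || interleaved.Length % channels != 0)
            throw new ArgumentException("Sample count must be a multiple of the channel count.");

        int samplesPerChannel = interleaved.Length / channels;
        var planes = new short[channels][];
        for (int c = 0; c < channels; c++)
            planes[c] = new short[samplesPerChannel];

        for (int i = 0; i < interleaved.Length; i++)
            planes[i % channels][i / channels] = interleaved[i];

        return planes;
    }
}
```

For stereo input 1, -1, 2, -2, 3, -3 the first plane holds 1, 2, 3 and the second holds -1, -2, -3.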