Introduction

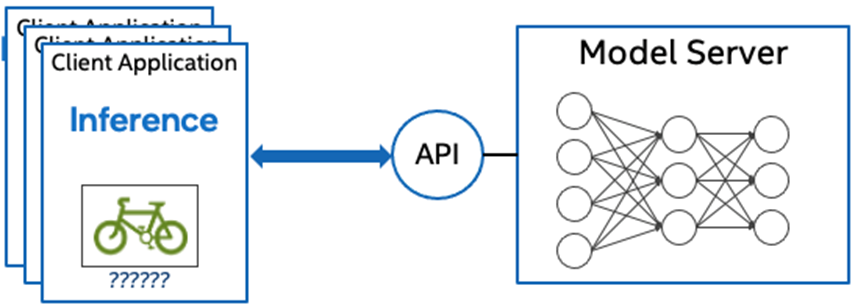

Model servers play a vital role in bringing AI models from development to production. Models are served via network endpoints which expose APIs to run predictions. These microservices abstract inference execution while providing scalability and efficient resource utilization.

In this blog, you will learn how to use key features of the OpenVINO™ Operator for Kubernetes. We will demonstrate how to deploy and use OpenVINO Model Server in two scenarios:

- Serving a single model

- Serving a pipeline of multiple models

Kubernetes is well suited to deploying model servers, but managing these resources can be challenging in larger-scale deployments. The OpenVINO Operator for Kubernetes makes this easier.

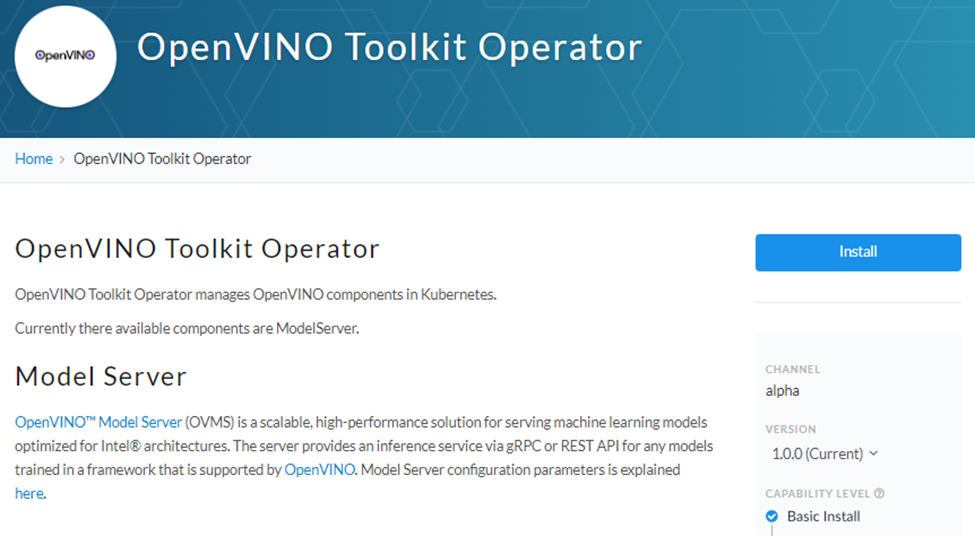

Install via OperatorHub

The OpenVINO Operator can be installed in a Kubernetes cluster from the OperatorHub. Just search for OpenVINO and click the ‘Install’ button.

Serve a Single OpenVINO Model in Kubernetes

Create a new instance of OpenVINO Model Server by defining a custom resource called ModelServer using the provided CRD. All parameters are explained here.

In the sample below, a fully functional model server is deployed along with a ResNet-50 image classification model pulled from Google Cloud storage.

kubectl apply -f https:

A successful deployment will create a service called ovms-sample.

kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
ovms-sample ClusterIP 10.98.164.11 <none> 8080/TCP,8081/TCP 5m30s

Now that the model is deployed and ready for requests, we can use the ovms-sample service with our Python client known as ovmsclient.

Send Inference Requests to the Service

The example below shows how to use the ovms-sample service inside the same Kubernetes cluster where it’s running. To create a client container, launch an interactive session to a pod with Python installed:

kubectl create deployment client-test --image=python:3.8.13 -- sleep infinity
kubectl exec -it $(kubectl get pod -o jsonpath="{.items[0].metadata.name}" -l app=client-test) -- bash
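Inside the cluster, the client reaches the model server through the DNS name of the Service. The short name `ovms-sample` resolves from pods in the same namespace; from another namespace, the fully qualified name is needed. The sketch below shows both forms. It assumes the `default` namespace and the default cluster domain `cluster.local`, neither of which is stated in this post, and `service_address` is a hypothetical helper rather than part of any library.

```python
def service_address(name, port, namespace=None, cluster_domain="cluster.local"):
    """Build a host:port string for a Kubernetes Service.

    With no namespace, return the short name, which resolves only from pods
    in the same namespace as the Service. Otherwise return the fully
    qualified name <service>.<namespace>.svc.<cluster-domain>.
    """
    host = name if namespace is None else f"{name}.{namespace}.svc.{cluster_domain}"
    return f"{host}:{port}"


# Port 8080 is taken from the `kubectl get service` output.
# The namespace "default" is an assumption.
same_namespace = service_address("ovms-sample", 8080)
cross_namespace = service_address("ovms-sample", 8080, namespace="default")
print(same_namespace)
print(cross_namespace)
```

Either string can be passed to the client as the service address, depending on where the client pod runs.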

From inside the client container, we will connect to the model server API endpoints. A simple curl command lists the served models with their version and status:

curl http:

{
  "resnet": {
    "model_version_status": [
      {
        "version": "1",
        "state": "AVAILABLE",
        "status": {
          "error_code": "OK",
          "error_message": "OK"
        }
      }
    ]
  }
}
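A script can use the same response to check that a model is ready before sending requests. The following is a minimal sketch that parses a copy of the payload shown above with the standard library only. `available_versions` is a hypothetical helper name, and the code assumes every entry follows the layout of this sample.

```python
import json

# Sample payload copied from the response shown above.
response_text = """
{
  "resnet": {
    "model_version_status": [
      {
        "version": "1",
        "state": "AVAILABLE",
        "status": {"error_code": "OK", "error_message": "OK"}
      }
    ]
  }
}
"""


def available_versions(payload):
    """Map each model name to the list of versions in the AVAILABLE state."""
    return {
        model: [
            entry["version"]
            for entry in info.get("model_version_status", [])
            if entry.get("state") == "AVAILABLE"
        ]
        for model, info in payload.items()
    }


ready = available_versions(json.loads(response_text))
print(ready)
```

An empty list for a model would mean that none of its versions is ready to serve yet.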

Additional REST API calls are described in the documentation.

Now let’s use the ovmsclient Python library to process an inference request. Create a virtual environment and install the client with pip:

python3 -m venv /tmp/venv

source /tmp/venv/bin/activate

pip install ovmsclient

Download a sample image of a zebra:

curl https:

The Python code below collects the model metadata using the ovmsclient library:

from ovmsclient import make_grpc_client

client = make_grpc_client("ovms-sample:8080")

model_metadata = client.get_model_metadata(model_name="resnet")

print(model_metadata)

The code above returns the following response:

{'model_version': 1, 'inputs': {'map/TensorArrayStack/TensorArrayGatherV3:0': {'shape': [-1, -1, -1, -1], 'dtype': 'DT_FLOAT'}}, 'outputs': {'softmax_tensor': {'shape': [-1, 1001], 'dtype': 'DT_FLOAT'}}}
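The metadata can drive the client code so that tensor names do not need to be hard-coded. The sketch below works on a copy of the dictionary shown above rather than a live server response. `single_name` is a hypothetical helper, and treating `-1` as a dynamic dimension is an assumption based on how the shapes are reported here.

```python
# Metadata dictionary copied from the response shown above.
model_metadata = {
    "model_version": 1,
    "inputs": {
        "map/TensorArrayStack/TensorArrayGatherV3:0": {
            "shape": [-1, -1, -1, -1],
            "dtype": "DT_FLOAT",
        }
    },
    "outputs": {"softmax_tensor": {"shape": [-1, 1001], "dtype": "DT_FLOAT"}},
}


def single_name(tensors):
    """Return the only tensor name, or raise if there is not exactly one."""
    if len(tensors) != 1:
        raise ValueError(f"expected one tensor, found {len(tensors)}")
    return next(iter(tensors))


input_name = single_name(model_metadata["inputs"])
output_name = single_name(model_metadata["outputs"])

# Assumption: a -1 entry marks a dimension that is not fixed.
input_shape = model_metadata["inputs"][input_name]["shape"]
dynamic_dims = [i for i, dim in enumerate(input_shape) if dim == -1]

# The last output dimension is the number of class scores per image.
num_classes = model_metadata["outputs"][output_name]["shape"][-1]
print(input_name, output_name, dynamic_dims, num_classes)
```

The resulting `input_name` is the key used for the `inputs` dictionary in a prediction request.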

Now create a simple Python script to classify the JPEG image of the zebra:

cat >> /tmp/predict.py <<EOL
from ovmsclient import make_grpc_client
import numpy as np

# Connect to the gRPC port of the ovms-sample service
client = make_grpc_client("ovms-sample:8080")

# Send the encoded JPEG bytes as the model input
with open("/tmp/zebra.jpeg", "rb") as f:
    data = f.read()
inputs = {"map/TensorArrayStack/TensorArrayGatherV3:0": data}
results = client.predict(inputs=inputs, model_name="resnet")

# The index of the highest score is the predicted class
print("Detected class:", np.argmax(results))
EOL
python /tmp/predict.py

Detected class: 341

The detected ImageNet class is 341, which represents `zebra`.
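Taking the arg max keeps only the single best class. To see how close the runners-up are, the scores can be ranked instead. The sketch below uses only the standard library and synthetic scores invented for illustration, not real model output. `top_k` is a hypothetical helper name.

```python
def top_k(scores, k=5):
    """Return the k (class_index, score) pairs with the highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Synthetic scores for illustration only: 1001 entries with a peak at 341.
scores = [0.0] * 1001
scores[341] = 0.9
scores[340] = 0.05
scores[10] = 0.01

best = top_k(scores, k=3)
print(best)
```

If `results` is a NumPy array of shape (1, 1001), which is an assumption about what `client.predict` returns for this model, then `top_k(results[0].tolist())` would rank the real scores.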

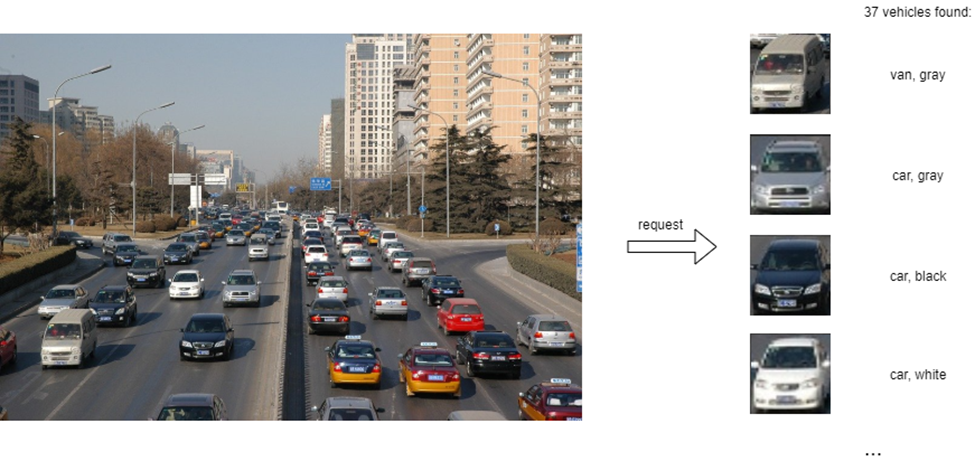

Serve a Multi-Model Pipeline

After running a simple use case of serving a single OpenVINO model, let’s explore the more advanced scenario of a multi-model vehicle analysis pipeline. This pipeline leverages the Directed Acyclic Graph feature in OpenVINO Model Server.

The remaining steps in this demo require the MinIO client binary `mc` and access to an S3-compatible bucket. See the MinIO quick start guide for more information.

First, prepare all dependencies using the vehicle analysis pipeline example below:

git clone https:

cd model_server/demos/vehicle_analysis_pipeline/python

make

The command above downloads the required models and builds a custom library to run the pipeline, then it places these files in the workspace directory. Copy the files to a shared S3-compatible storage accessible within the cluster. In the example below, the S3 server alias is mys3:

mc cp --recursive workspace/vehicle-detection-0202 mys3/models-repository/
mc cp --recursive workspace/vehicle-attributes-recognition-barrier-0042 mys3/models-repository/

mc ls -r mys3

43MiB models-repository/vehicle-attributes-recognition-barrier-0042/1/vehicle-attributes-recognition-barrier-0042.bin

118KiB models-repository/vehicle-attributes-recognition-barrier-0042/1/vehicle-attributes-recognition-barrier-0042.xml

7.1MiB models-repository/vehicle-detection-0202/1/vehicle-detection-0202.bin

331KiB models-repository/vehicle-detection-0202/1/vehicle-detection-0202.xml

To use the model server config file created earlier in workspace/config.json, we need to adjust the paths to the models and the custom node library. The commands below change the model paths to point to our S3 bucket and the custom node library path to the /config folder, which will be mounted from a Kubernetes ConfigMap.

sed -i 's/\/workspace\/vehicle-detection-0202/s3:\/\/models-repository\/vehicle-detection-0202/g' workspace/config.json
sed -i 's/\/workspace\/vehicle-attributes-recognition-barrier-0042/s3:\/\/models-repository\/vehicle-attributes-recognition-barrier-0042/g' workspace/config.json
sed -i 's/workspace\/lib/config/g' workspace/config.json
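To make the effect of these substitutions concrete, the sketch below applies the same three replacements in Python to a trimmed-down stand-in for the config file. The model and library names in the sample are hypothetical, and the real workspace/config.json generated by `make` contains more fields, including the pipeline definition.

```python
import json

# Hypothetical, trimmed-down config used only to illustrate the rewrite.
config_text = json.dumps(
    {
        "model_config_list": [
            {"config": {"name": "vehicle_detection",
                        "base_path": "/workspace/vehicle-detection-0202"}},
            {"config": {"name": "vehicle_attributes_recognition",
                        "base_path": "/workspace/vehicle-attributes-recognition-barrier-0042"}},
        ],
        "custom_node_library_config_list": [
            {"name": "object_detection_image_extractor",
             "base_path": "/workspace/lib/libcustom_node_model_zoo_intel_object_detection.so"}
        ],
    },
    indent=2,
)

# Same substitutions as the three sed commands, applied in the same order.
replacements = [
    ("/workspace/vehicle-detection-0202",
     "s3://models-repository/vehicle-detection-0202"),
    ("/workspace/vehicle-attributes-recognition-barrier-0042",
     "s3://models-repository/vehicle-attributes-recognition-barrier-0042"),
    ("workspace/lib", "config"),
]
for old, new in replacements:
    config_text = config_text.replace(old, new)

rewritten = json.loads(config_text)
model_paths = [m["config"]["base_path"] for m in rewritten["model_config_list"]]
library_path = rewritten["custom_node_library_config_list"][0]["base_path"]
print(model_paths)
print(library_path)
```

After the rewrite, the model paths point at the S3 bucket and the library path points into /config, where the ConfigMap is mounted.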

Next, add both the config file and the custom node library to a Kubernetes ConfigMap:

kubectl create configmap ovms-pipeline --from-file=config.json=workspace/config.json \
  --from-file=libcustom_node_model_zoo_intel_object_detection.so=workspace/lib/libcustom_node_model_zoo_intel_object_detection.so

Now we are ready to deploy the model server with the pipeline configuration. Use kubectl to apply the following ovms-pipeline.yaml configuration.

apiVersion: intel.com/v1alpha1
kind: ModelServer
metadata:
  name: ovms-pipeline
spec:
  image_name: openvino/model_server:latest
  deployment_parameters:
    replicas: 1
  models_settings:
    single_model_mode: false
    config_configmap_name: "ovms-pipeline"
  server_settings:
    file_system_poll_wait_seconds: 0
    log_level: "INFO"
  service_parameters:
    grpc_port: 8080
    rest_port: 8081
    service_type: ClusterIP
  models_repository:
    storage_type: "s3"
    aws_access_key_id: minioadmin
    aws_secret_access_key: minioadmin
    aws_region: us-east-1
    s3_compat_api_endpoint: http:

This creates a service for the model server:

kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ovms-pipeline ClusterIP 10.99.53.175 <none> 8080/TCP,8081/TCP 26m

To test the pipeline, we can use the same client container as the previous example with a single model. From inside the client container shell, download a sample image to analyze:

curl https:

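Then create a script that sends the image to the pipeline:

cat >> /tmp/pipeline.py <<EOL
from ovmsclient import make_grpc_client
import numpy as np

# Connect to the gRPC port of the ovms-pipeline service
client = make_grpc_client("ovms-pipeline:8080")

# Send the encoded JPEG bytes as the pipeline input
with open("/tmp/road1.jpg", "rb") as f:
    data = f.read()
inputs = {"image": data}
results = client.predict(inputs=inputs, model_name="multiple_vehicle_recognition")

# List the names of the outputs returned by the pipeline
print("Returned outputs:", results.keys())
EOL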

Run a prediction using the following command:

python /tmp/pipeline.py

Returned outputs: dict_keys(['colors', 'vehicle_coordinates', 'types', 'vehicle_images', 'confidence_levels'])

The sample code above only lists the pipeline outputs without interpreting the data. More complete client code samples for vehicle analysis are available on GitHub.

Conclusion

OpenVINO Model Server makes it easy to deploy and manage inference services in a Kubernetes environment. In this blog, we learned how to run predictions using the ovmsclient Python library with both a single model scenario and with multiple models using a DAG pipeline.

Learn more about the operator at https://github.com/openvinotoolkit/operator

Also check out other OpenVINO Model Server demos at https://docs.openvino.ai/2022.1/ovms_docs_demos.html

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more on the Performance Index site.

No product or component can be absolutely secure.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.