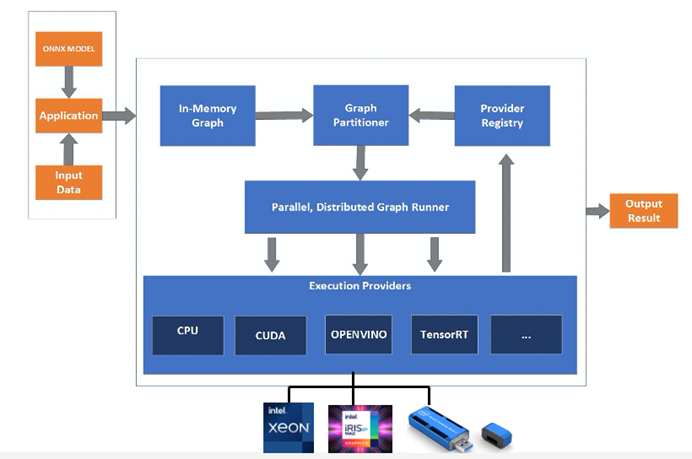

There is a need for greater interoperability in the AI tools community. Many people are working on great tools, but developers are often locked in to one framework or ecosystem. ONNX enables more of these tools to work together by allowing them to share models. ONNX is an open format built to represent machine learning and deep learning models. With ONNX, AI developers can more easily move models between state-of-the-art tools and choose the combination that is best for them. ONNX is supported by a large community of partners such as Microsoft, Meta open source, and Amazon Web Services.

ONNX is widely supported and can be found in many frameworks, tools, and hardware. Enabling interoperability between different frameworks and streamlining the path from research to production helps increase the speed of innovation in the AI community. ONNX helps solve the challenge of hardware dependency related to AI workloads and enables deploying AI models targeting key accelerators.

When we add hardware, like Intel® processors, to this mix, we’re able to take full advantage of the compute power of your laptop or desktop. Developers can now use the Intel® OpenVINO™ toolkit through ONNX Runtime to accelerate inference of ONNX models, which can be exported or converted from AI frameworks such as TensorFlow, PyTorch, Keras, and many more. The OpenVINO™ Execution Provider for ONNX Runtime lets you run inference on ONNX models through the ONNX Runtime APIs while using the OpenVINO™ toolkit as the backend. With the OpenVINO™ Execution Provider, ONNX Runtime delivers better inferencing performance on the same hardware compared to generic acceleration on Intel® CPU, GPU, and VPU. Best of all, you can get that better performance with just one line of code.
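As a rough illustration of that one line, the sketch below passes the provider name `OpenVINOExecutionProvider` to `onnxruntime.InferenceSession`. The helper names `choose_providers` and `create_session` and the model path are hypothetical, and keeping `CPUExecutionProvider` as a fallback is an assumption rather than something this post prescribes.

```python
from typing import List, Sequence

OPENVINO_EP = "OpenVINOExecutionProvider"
CPU_EP = "CPUExecutionProvider"


def choose_providers(available: Sequence[str]) -> List[str]:
    """Prefer the OpenVINO provider when present; always keep the CPU provider last."""
    providers = [OPENVINO_EP] if OPENVINO_EP in available else []
    providers.append(CPU_EP)
    return providers


def create_session(model_path: str):
    """Build an inference session for a hypothetical ONNX model file."""
    # Imported lazily so this module still loads where onnxruntime is missing.
    import onnxruntime as ort

    # The `providers` argument is the "one line" that selects the backend.
    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

Calling `create_session("model.onnx")` with your own model file would then run on the OpenVINO™ backend whenever that provider is available, and on the default CPU provider otherwise.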

Theory aside, as a developer you want installation to be quick and easy so that you can start using the package as soon as possible. Previously, getting the OpenVINO™ Execution Provider for ONNX Runtime onto your machine involved multiple installation steps. To make your life easier, we have launched the OpenVINO™ Execution Provider for ONNX Runtime on PyPI. Now a single pip install puts the OpenVINO™ Execution Provider for ONNX Runtime on your Linux or Windows machine.

In our previous blog, you learned about the OpenVINO™ Execution Provider for ONNX Runtime in depth and tested some of the object detection samples that we created for different programming languages (Python, C++, C#). Now it’s time to show how easy it is to install the OpenVINO™ Execution Provider for ONNX Runtime on your Linux or Windows machine and get the faster inference for your ONNX deep learning models that you’ve been waiting for.

How to Install

Download the onnxruntime-openvino Python package from PyPI onto your Linux or Windows machine by running the following command in your terminal:

pip install onnxruntime-openvino

On Windows, to use the OpenVINO™ Execution Provider for ONNX Runtime you must use Python 3.9 and also install the OpenVINO™ toolkit:

pip install openvino==2022.1

If you are a Windows user, also add the following lines of code to your application:

import openvino.utils as utils

utils.add_openvino_libs_to_path()
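If the same script has to run on both Linux and Windows, one option is to guard that call by platform. This is only a sketch: the wrapper name `add_openvino_libs_if_windows` is hypothetical, and skipping the step on Linux is an assumption based on the instructions above being addressed to Windows users.

```python
import sys


def add_openvino_libs_if_windows() -> bool:
    """Return True if the OpenVINO libraries were added to the search path."""
    if sys.platform != "win32":
        # Assumption: only Windows needs this extra step.
        return False
    # Needs the OpenVINO toolkit package (pip install openvino==2022.1).
    import openvino.utils as utils

    utils.add_openvino_libs_to_path()
    return True


# Call this once, before importing onnxruntime.
add_openvino_libs_if_windows()
```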

That’s all it takes to get OpenVINO™ Execution Provider for ONNX Runtime installed on your machine.
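One way to check that the installation worked is to ask ONNX Runtime which execution providers it can see. This is a minimal sketch; the function name `openvino_ep_status` is hypothetical and the returned strings are only illustrative.

```python
import importlib.util


def openvino_ep_status() -> str:
    """Report whether the OpenVINO Execution Provider is visible to ONNX Runtime."""
    if importlib.util.find_spec("onnxruntime") is None:
        return "onnxruntime is not installed"
    try:
        import onnxruntime as ort
    except ImportError:
        # The package is present but its native libraries failed to load.
        return "onnxruntime could not be imported"
    if "OpenVINOExecutionProvider" in ort.get_available_providers():
        return "OpenVINO Execution Provider is available"
    return "OpenVINO Execution Provider is not available"


print(openvino_ep_status())
```

On Windows, run the `add_openvino_libs_to_path()` lines shown earlier before this check.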

Samples

Now that you have the OpenVINO™ Execution Provider for ONNX Runtime installed on your Linux or Windows machine, it’s time to get your hands dirty with some Python samples. Test out the Python samples now and see how you can get a substantial performance boost with the OpenVINO™ Execution Provider.

What’s Next

Stay tuned for the new releases of OpenVINO™ Toolkit.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.