An introduction to LLVM compilers and the benefits of Intel's oneAPI Data Parallel C++ (DPC++) compiler that allows us to write code that works on all accelerator platforms using common programming standards.

LLVM is a free and open source compiler infrastructure originally built for C and C++. Although its name initially stood for Low Level Virtual Machine, LLVM is now a technology that deals with much more than just virtual machines. The name is no longer officially an initialism.

The central component in LLVM is a language-independent intermediate representation (IR). Compiler front ends translate source code into this IR, which serves as the middle layer of the compiler system: optimization passes transform it, and a back end then lowers it to assembly or object code optimized for a target machine. Alternatively, a just-in-time (JIT) compiler can translate the IR to binary machine code at runtime.
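As a small illustration of that flow, the function below is ordinary C++, and the comments list Clang and LLVM commands that stop at different stages. The file name `add.cpp` and the function are made up for this sketch, and the exact IR text depends on the Clang version and optimization level.

```cpp
// add.cpp (hypothetical file name used only for this sketch)
//
// Stop after the front end and write textual LLVM IR:
//   clang++ -S -emit-llvm add.cpp -o add.ll
// Lower that IR to assembly for the host machine with the LLVM static compiler:
//   llc add.ll -o add.s
// Or go straight from source to a native object file:
//   clang++ -c add.cpp -o add.o

// A tiny function whose IR is easy to read: the signed addition
// typically appears in add.ll as an `add nsw i32` instruction.
int add(int a, int b) {
    return a + b;
}
```

Reading `add.ll` next to `add.s` is a quick way to see where the language-independent layer ends and the target-specific layer begins.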

This article explores the benefits of LLVM-based compilers and their cross-architecture capabilities, and presents a few examples of Intel LLVM-based oneAPI compilers.

The History of LLVM

The LLVM project was started at the University of Illinois at Urbana-Champaign in 2000 by Vikram Adve and Chris Lattner. It was designed to be modular and quickly adapt to different compiler front ends and back ends.

Since its start, LLVM has grown from a research-focused project to the basis for many of today’s industry-standard commercial compilers. Compilers and tools that use LLVM now exist for numerous other languages, including Go, Haskell, OCaml, Rust, Scala, Nimrod (now Nim), JavaScript, Objective-C, C#, Fortran, and Python.

LLVM is used in a wide variety of systems, including linkers, assemblers, debuggers, and compilers, such as those in the Intel oneAPI toolkits. Intel previously built its compilers on its own proprietary compiler technology. However, Intel has embraced LLVM and now offers C/C++ and Fortran compilers using the infrastructure.

The Benefits of LLVM-Based Compilers

LLVM-based compilers are valuable to developers and commercial organizations in many ways. Let’s consider some of the benefits.

Shorter Build Times

LLVM is a production-quality compiler infrastructure that provides a framework for different compilers. Rather than writing the same components from scratch each time, we can use components already written and tested by the LLVM community in our LLVM-based compilers. This lets us spend more time focusing on the unique aspects of our projects and less time recreating code.

Easy Maintenance and Optimization

LLVM-based compilers are lightweight and easy to maintain. They compile code into native object files or into LLVM bitcode, a serialized form of the IR that can be compiled or interpreted later. They can also emit assembly code or generate binary executables as output. These compilers are used for embedded systems, where memory is limited and performance is critical, and they’ve also been used successfully on supercomputers to optimize execution speed on large workloads.

Because LLVM is a modular library, we’re able to use only the parts of LLVM we need. We can handpick which components and optimizations are used in our compiler. We can also insert our own optimizations into the pipeline if we need to.
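To make the idea of a handpicked pipeline concrete, here is a toy model in plain C++. It is not the LLVM API: `Instr`, `Program`, `Pass`, `fold_constants`, `mul_by_two_to_add`, and `run_pipeline` are all hypothetical names invented for this sketch. It only shows the shape of the idea, where each optimization is an independent pass and we choose which passes run and in what order.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Toy model only: these types are hypothetical and are not the LLVM API.
struct Instr {
    std::string op;       // "const", "arg", "add", or "mul"
    int value = 0;        // payload for "const"
    std::size_t lhs = 0;  // index of the left operand (for "add"/"mul")
    std::size_t rhs = 0;  // index of the right operand (for "add"/"mul")
};
using Program = std::vector<Instr>;
using Pass = std::function<void(Program&)>;

// A stock pass: replace add/mul of two constants with a single constant.
// Operands always precede their users, so folding cascades in one sweep.
void fold_constants(Program& p) {
    for (Instr& in : p) {
        if (in.op != "add" && in.op != "mul") continue;
        const Instr& a = p[in.lhs];
        const Instr& b = p[in.rhs];
        if (a.op != "const" || b.op != "const") continue;
        const int v = (in.op == "add") ? a.value + b.value : a.value * b.value;
        in = Instr{"const", v, 0, 0};
    }
}

// Our own pass, slotted into the pipeline: rewrite x * 2 as x + x.
void mul_by_two_to_add(Program& p) {
    for (Instr& in : p) {
        if (in.op != "mul") continue;
        const Instr& r = p[in.rhs];
        if (r.op == "const" && r.value == 2) {
            in = Instr{"add", 0, in.lhs, in.lhs};
        }
    }
}

// Run only the passes we picked, in the order we picked them.
void run_pipeline(Program& p, const std::vector<Pass>& passes) {
    for (const Pass& pass : passes) pass(p);
}
```

Calling `run_pipeline(p, {fold_constants, mul_by_two_to_add})` applies both passes, while leaving the second one out of the list skips it without touching any other code. Real LLVM pass managers are far richer, but the composition principle is the same.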

Flexibility

LLVM compilers are flexible enough to support programming languages with low-level (machine code) and high-level (human-readable code) features. In this way, we can create custom programming languages without creating our own compiler from scratch whenever we want to add new features or make changes to existing ones.

We can easily add new front ends and back ends for new languages and target architectures. Additionally, we can use the LLVM compiler infrastructure to build testing and analysis tools without modifying the compiler itself.

Portability

LLVM is designed to support many different back ends, meaning we can use it to generate machine code for our platform of choice. It also provides features like an assembler, disassembler, linker, and just-in-time (JIT) compiler for dynamic programming languages. By having our compiler’s front end emit LLVM IR rather than actual machine code, we can achieve better portability than if we were generating machine code directly from the front end.
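The portability argument can be sketched with a toy example: a front end produces one IR, and each target only needs its own lowering function. Everything here is hypothetical (`IrInstr`, `lower_three_operand`, `lower_two_operand`, and the pseudo-assembly they print); it is not LLVM's real IR or any real instruction set.

```cpp
#include <string>
#include <vector>

// Toy model only: a hypothetical three-address IR, not LLVM's real IR or API.
struct IrInstr {
    std::string op;   // "add" or "mul"
    std::string dst;  // destination virtual register
    std::string lhs;  // left operand
    std::string rhs;  // right operand
};

// Hypothetical back end A: a machine with three-operand instructions.
std::string lower_three_operand(const std::vector<IrInstr>& ir) {
    std::string out;
    for (const IrInstr& in : ir) {
        out += in.op + " " + in.dst + ", " + in.lhs + ", " + in.rhs + "\n";
    }
    return out;
}

// Hypothetical back end B: a machine with two-operand instructions.
// It copies lhs into dst, then applies the operation in place.
// Assumes dst is a fresh register that differs from rhs.
std::string lower_two_operand(const std::vector<IrInstr>& ir) {
    std::string out;
    for (const IrInstr& in : ir) {
        out += "mov " + in.dst + ", " + in.lhs + "\n";
        out += in.op + " " + in.dst + ", " + in.rhs + "\n";
    }
    return out;
}
```

The front end that built the `IrInstr` list never changes when a new target appears; only a new lowering function is added. That separation is what the IR buys us.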

Because the compiler runs on many different platforms, we can easily port any applications we develop with it to new platforms.

Intel oneAPI Compiler Options

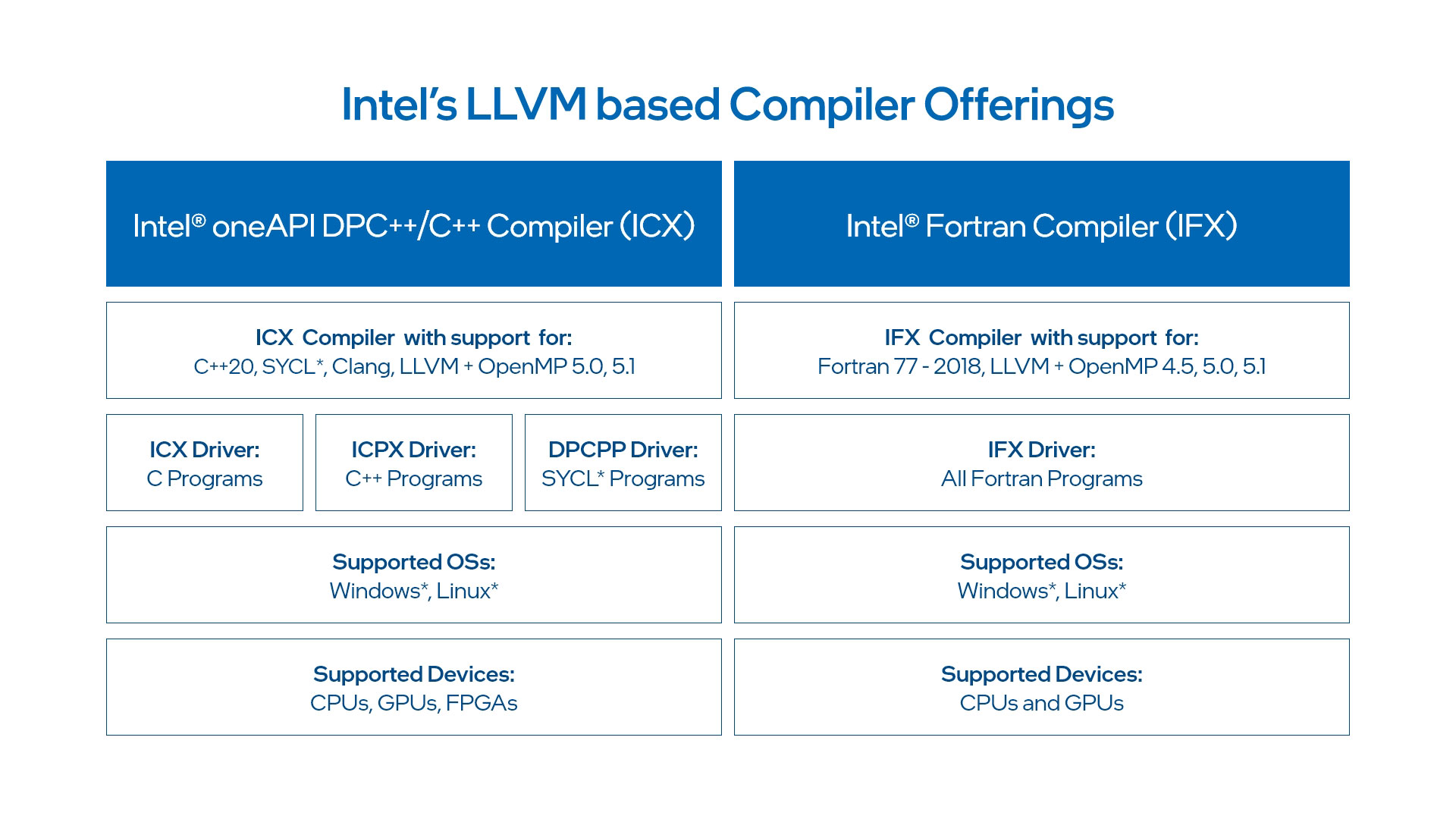

We can use different compilers to affect code generation and optimization. Two examples of LLVM-based oneAPI compilers are Intel oneAPI DPC++/C++ Compiler and Intel Fortran Compiler (IFX).

Intel oneAPI DPC++/C++ Compiler

oneAPI’s Data Parallel C++ (DPC++) is an Intel-led project that lets us write programs that execute across different computing systems without major, time-consuming code changes. Intel oneAPI DPC++/C++ Compiler is a single-source solution for compiling data-parallel C++ code for CPUs, GPUs, FPGAs, and other acceleration hardware. The compiler supports both Windows and Linux operating systems. It’s built on LLVM, uses the Clang front end, and supports C++, SYCL 2020, and OpenCL kernels in the same source file.

SYCL is an open standard from the Khronos Group. It provides single-source programming based on standard C++ for heterogeneous computing platforms.

Intel implements the SYCL specification (with some extensions) through DPC++. Many SYCL 2020 features were prototyped in the DPC++ compiler, which makes it a good choice for programmers working on SYCL 2020 projects. Intel uses DPC++ to target Intel GPUs, CPUs, and FPGAs.
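A minimal sketch of what single-source SYCL 2020 code can look like is shown below. The function name `vector_add` is made up for illustration. The sketch assumes the compiler predefines `SYCL_LANGUAGE_VERSION` when SYCL is enabled (for example, with `icpx -fsycl`), which is worth checking against your toolchain. When the macro is absent, the code falls back to a plain host loop so the file still builds with any C++ compiler.

```cpp
#include <cstddef>
#include <vector>

// Assumption: SYCL 2020 compilers define SYCL_LANGUAGE_VERSION when SYCL is
// enabled (for example `icpx -fsycl vector_add.cpp`; file name hypothetical).
#ifdef SYCL_LANGUAGE_VERSION
#include <sycl/sycl.hpp>
#endif

// Element-wise sum of two vectors (hypothetical helper for this sketch).
std::vector<float> vector_add(const std::vector<float>& a,
                              const std::vector<float>& b) {
    const std::size_t n = a.size() < b.size() ? a.size() : b.size();
    std::vector<float> c(n);
    if (n == 0) return c;
#ifdef SYCL_LANGUAGE_VERSION
    sycl::queue q;  // the runtime picks a default device (CPU, GPU, ...)
    {
        sycl::buffer<float> buf_a(a.data(), sycl::range<1>(n));
        sycl::buffer<float> buf_b(b.data(), sycl::range<1>(n));
        sycl::buffer<float> buf_c(c.data(), sycl::range<1>(n));
        q.submit([&](sycl::handler& h) {
            sycl::accessor in_a(buf_a, h, sycl::read_only);
            sycl::accessor in_b(buf_b, h, sycl::read_only);
            sycl::accessor out(buf_c, h, sycl::write_only, sycl::no_init);
            // The kernel body lives in the same source file as the host code.
            h.parallel_for(sycl::range<1>(n), [=](sycl::id<1> i) {
                out[i] = in_a[i] + in_b[i];
            });
        });
    }  // buffers go out of scope here, which copies the results back into c
#else
    // Host-only fallback when SYCL is not enabled.
    for (std::size_t i = 0; i < n; ++i) c[i] = a[i] + b[i];
#endif
    return c;
}
```

The host code and the device kernel share one translation unit and one set of types, which is the "single-source" property the SYCL standard describes.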

With the DPC++/C++ Compiler, based on the Clang front end and LLVM technology, we can write code that works across accelerator platforms using common programming standards. This helps lower the development cost by removing the need to maintain multiple development paths. It also simplifies porting our code across hardware platforms. In addition, the compiler supports OpenMP 5.0 and 5.1 TARGET offload to Intel GPU targets.
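For the OpenMP route, a hedged sketch of a TARGET offload region follows. The function `scale` and the file name in the comment are hypothetical. The compile line uses Intel's documented `-fiopenmp -fopenmp-targets=spir64` options, but check the flags against your compiler version. A compiler without OpenMP enabled simply ignores the pragma and runs the loop on the host.

```cpp
#include <cstddef>
#include <vector>

// Multiplies every element of `data` by `factor` (hypothetical helper).
//
// With an offload-capable build, for example
//   icpx -fiopenmp -fopenmp-targets=spir64 scale.cpp
// the loop can execute on a GPU. Without OpenMP support the pragma is
// ignored and the same loop runs serially on the CPU.
void scale(std::vector<double>& data, double factor) {
    double* p = data.data();
    const std::size_t n = data.size();
    // map(tofrom: ...) copies the array to the device and back again.
    #pragma omp target teams distribute parallel for map(tofrom: p[0:n])
    for (std::size_t i = 0; i < n; ++i) {
        p[i] *= factor;
    }
}
```

Because the directive is a pragma, the same source remains valid C++ on every platform, which is how one development path can serve both host-only and accelerated builds.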

The compiler contains three compiler drivers (icx, icpx, and dpcpp) to further simplify tailoring code for unique support requirements. These drivers compile and link C programs, C++ programs, and C++ programs with SYCL extensions, respectively. On Windows systems, the dpcpp SYCL extension support driver has a slightly different name: dpcpp-cl. Compiling with the dpcpp SYCL extensions provides added flexibility for future acceleration needs.

Intel Fortran Compiler (IFX)

ifx is a Fortran compiler that uses LLVM back-end compiler technology, but it’s based on the Intel® Fortran Compiler Classic front end and runtime libraries. It supports Fortran 77 and the later language standards up to Fortran 95, along with most features in Fortran 2003 through Fortran 2018. It also supports OpenMP 5.0/5.1 and OpenMP 4.5 offloading features and directives.

The Cross-Architecture Capabilities of LLVM-Based Compilers

Many compilers target a single architecture, like NVCC for NVIDIA GPUs. However, recent advances in the LLVM compiler infrastructure allow LLVM-based compilers to target multiple architectural domains. For example, the Clang C and C++ compiler uses the LLVM framework to generate executable code for CPUs. Clang can also generate executable code for different GPUs and FPGAs by targeting the appropriate runtime libraries.

LLVM-based compilers can also offload work to accelerators through dedicated back ends, such as the NVPTX back end for NVIDIA GPUs. These compilers have a front end that parses source code into an intermediate representation (IR) and a back end that produces machine code for the architectures the user selects. Rather than using independent resources for each accelerator, we can efficiently share resources such as memory between the host and devices using tools like Intel DPC++ Unified Shared Memory.
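Unified Shared Memory can be sketched as follows. The function `sum_of_squares` is hypothetical, while `sycl::malloc_shared` and `sycl::free` are SYCL 2020 USM calls. As an assumption, the SYCL path is compiled only when the compiler defines `SYCL_LANGUAGE_VERSION`; otherwise a host-only fallback keeps the sketch buildable anywhere. Shared allocations are an optional device capability, so the sketch treats a null pointer as failure.

```cpp
#include <cstddef>
#include <vector>

// Assumption: defined by the compiler when SYCL is enabled (e.g. `icpx -fsycl`).
#ifdef SYCL_LANGUAGE_VERSION
#include <new>
#include <sycl/sycl.hpp>
#endif

// Fills an array with i*i on the device, then sums it on the host.
long long sum_of_squares(std::size_t n) {
    if (n == 0) return 0;
    long long total = 0;
#ifdef SYCL_LANGUAGE_VERSION
    sycl::queue q;
    // One pointer that both host and device code can dereference.
    long long* data = sycl::malloc_shared<long long>(n, q);
    if (data == nullptr) throw std::bad_alloc();
    q.parallel_for(sycl::range<1>(n), [=](sycl::id<1> i) {
        const long long v = static_cast<long long>(i[0]);
        data[i[0]] = v * v;
    }).wait();  // wait before the host reads the shared memory
    for (std::size_t i = 0; i < n; ++i) total += data[i];
    sycl::free(data, q);
#else
    // Host-only fallback when SYCL is not enabled.
    std::vector<long long> data(n);
    for (std::size_t i = 0; i < n; ++i) {
        const long long v = static_cast<long long>(i);
        data[i] = v * v;
    }
    for (std::size_t i = 0; i < n; ++i) total += data[i];
#endif
    return total;
}
```

Compared with explicit buffers and accessors, the shared pointer removes the separate host and device copies from the source code; the runtime migrates the data as needed.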

GPU and FPGA offloading are enabled by default in several Intel oneAPI products:

- Intel oneAPI HPC Toolkit

- Intel oneAPI Base Toolkit

- Intel AI Analytics Toolkit

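The Clang compiler is separate from the GNU Compiler Collection (GCC): it pairs its own front end with the LLVM optimizer and back end rather than GCC’s code generator and optimization passes. However, Clang accepts many of the same command-line options as GCC, so it can often serve as a drop-in replacement in an existing GCC-based build.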

We can build applications with just one compiler and then offload portions of the application to run on GPUs or FPGAs, depending on the compute requirements. This lets us harness more appropriately specialized computing cores with minimal effort. For applications requiring graphics or AI accelerators, this is a particularly powerful way to avoid the intensive codebase revisions needed to parallelize for these platforms.

Additionally, we’re able to automate optimization tasks that would otherwise require significant manual work. We can rapidly scale our applications across multiple devices without hiring additional staff or spending time hand-optimizing the code for each device.

Final Thoughts

If you’re looking to develop use cases involving an XPU, or if you’re migrating code from existing GPUs and FPGAs to new architectures, LLVM compilers should be a critical component of your strategy. LLVM-based compilers offer shorter build times, flexibility, portability, and easy maintenance and optimization.

Intel oneAPI provides a standard programming model to simplify the development experience and enable software portability across diverse architectures.

Try oneAPI to learn more about its cross-architecture capabilities and experiment with its use in accelerating architectures. Intel also offers training in Data Parallel C++ to help developers make the most of oneAPI’s features.

Resources