Hi, welcome to an illustrated guide on using Docker with Intel’s OpenVINO™ integration with TensorFlow* to accelerate inference of your TensorFlow models on Intel hardware.

In this post, we start with the prebuilt images available on Docker Hub. Then we go through the exact commands you need to access an Object Detection notebook via Docker.

Let’s Begin!

In this post, we run OpenVINO™ integration with TensorFlow* on Windows. You will need Docker Desktop for Windows.

If you plan to use Ubuntu instead, refer to this link. It also provides instructions for building runtime images from the hosted Dockerfiles.

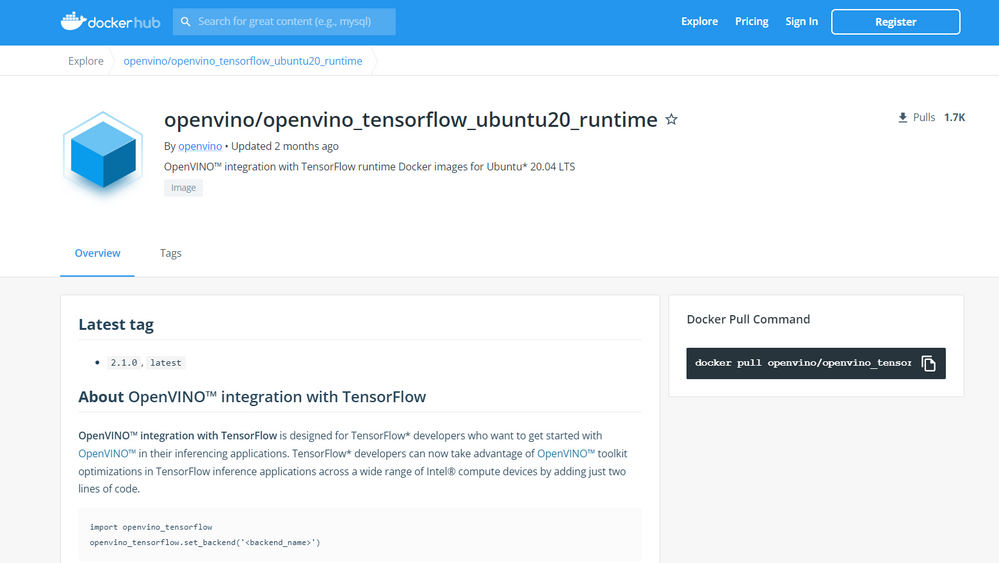

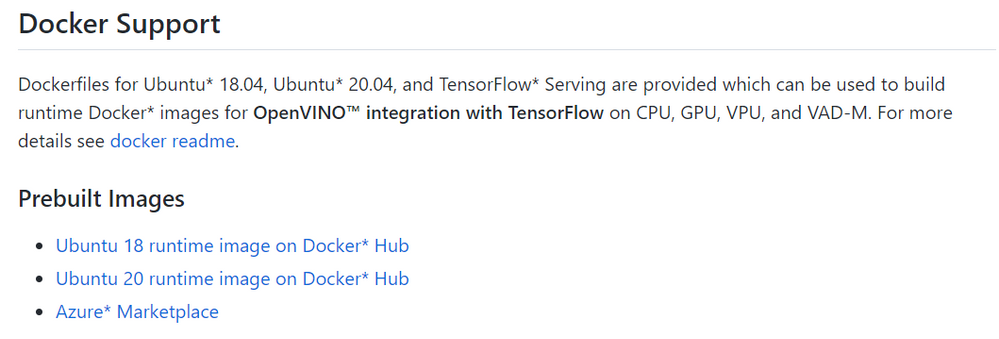

To start off, we simply pulled and ran the latest Docker image on our Intel® Core™ i7 CPU. To do this, open this link, scroll down to the "Docker Support" section, and select the Ubuntu prebuilt image of your choice.

Here, we go with the Ubuntu 20 prebuilt image.

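Next, run the following commands in Windows PowerShell. The first downloads the image. The second starts a container from it: `-it` attaches an interactive terminal, `--rm` removes the container when it stops, and `-p 8888:8888` publishes the container's port 8888 (where the Jupyter server listens) on your machine.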

- `docker pull openvino/openvino_tensorflow_ubuntu20_runtime`

- `docker run -it --rm -p 8888:8888 openvino/openvino_tensorflow_ubuntu20_runtime:latest`

When the container starts, you will notice two things:

- The OpenVINO™ environment is initialized.

- The server location and the URL of the hosted notebook are printed.

To access the notebook, copy the URL of the hosted notebook and paste it into your browser.
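Depending on the Jupyter version in the image, the printed URL may use the container's hostname (typically a short container ID) instead of `localhost`, and that hostname does not resolve from Windows. Because `docker run` published port 8888, pointing the same URL at `localhost` usually works. The Python sketch below automates that rewrite. It assumes the log contains a URL of the form `http://<host>:<port>/<path>?token=<token>`, and the `local_notebook_url` helper is hypothetical, not something shipped in the image.

```python
import re
from typing import Optional

# Matches a Jupyter-style URL such as "http://3f2a1b9c:8888/?token=abc" or
# "http://127.0.0.1:8888/lab?token=abc". The host part may contain spaces because
# some older Jupyter versions print "http://(3f2a1b9c or 127.0.0.1):8888/...".
_URL_RE = re.compile(
    r"https?://[^\n]*?:(?P<port>\d+)(?P<path>/[^\s?]*)\?token=(?P<token>[0-9A-Za-z]+)"
)


def local_notebook_url(log_text: str, host: str = "localhost",
                       port: Optional[int] = None) -> Optional[str]:
    """Return the first notebook URL found in log_text, rewritten to use `host`.

    The port printed in the log is the container's port; with -p 8888:8888 it is
    also the host port. Pass `port` if you mapped a different host port.
    Returns None when no URL with a token is found.
    """
    match = _URL_RE.search(log_text)
    if match is None:
        return None
    final_port = port if port is not None else int(match["port"])
    return f"http://{host}:{final_port}{match['path']}?token={match['token']}"


if __name__ == "__main__":
    # Hypothetical log line; paste the real one from your PowerShell window.
    sample = "    http://3f2a1b9c:8888/?token=0123abcd"
    print(local_notebook_url(sample))  # http://localhost:8888/?token=0123abcd
```

Passing `port=` is only needed if you published a different host port, for example with `-p 9999:8888`.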

Note: The Docker image hosts a Jupyter server with Image Classification and Object Detection samples that demonstrate the performance benefits of using OpenVINO™ integration with TensorFlow*.
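In the notebooks, the integration is switched on from Python. Here is a minimal sketch, assuming the `openvino_tensorflow` package that ships in the runtime image and the `list_backends()` and `set_backend()` functions described in the project README: it picks an available backend and activates it. The `choose_backend` helper and its CPU-first default are our own illustration, and the import is guarded so the snippet still runs on a machine where the package is not installed.

```python
import importlib.util
from typing import Sequence, Tuple


def choose_backend(available: Sequence[str],
                   preferred: Tuple[str, ...] = ("CPU",)) -> str:
    """Hypothetical helper: return the first preferred backend that is available,
    otherwise the first backend that was reported."""
    if not available:
        raise ValueError("no OpenVINO backends were reported")
    for name in preferred:
        if name in available:
            return name
    return available[0]


# Only touch openvino_tensorflow when it is installed (it ships in the runtime image),
# so this snippet also runs on a machine without the package.
if importlib.util.find_spec("openvino_tensorflow") is not None:
    import openvino_tensorflow as ovtf

    backend = choose_backend(ovtf.list_backends())
    ovtf.set_backend(backend)
    print("OpenVINO backend:", backend)
```

The project README describes the CPU as the default backend, so calling `set_backend()` matters mainly when other Intel devices are exposed to the container.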

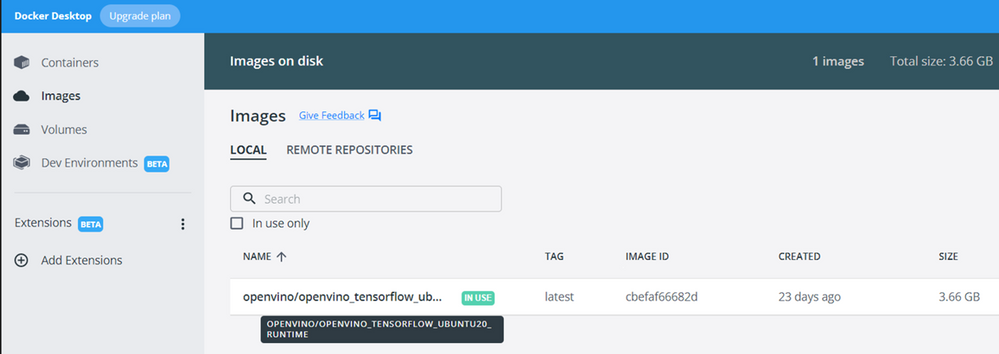

You can view the status of your images in Docker Desktop; it will look something like the screenshot above.

If you prefer, check out the video version of this post, which also walks through the steps to run the notebook, here. It shows the acceleration that OpenVINO™ integration with TensorFlow* adds while the model keeps performing well.

Video TL;DR: The video showcases a notebook that covers an object detection use case and demonstrates the acceleration of a YOLOv4 model trained on the COCO dataset. You can find this specific example on GitHub.
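If you want to put a number on the acceleration yourself, one simple approach is to time the same inference call with and without the integration. The Python sketch below is a generic timing helper, not code taken from the notebook: `run_inference` in the usage comment is a hypothetical stand-in for your model call, and the comment assumes `openvino_tensorflow` exposes a `disable()` switch for the baseline run. Warm-up calls are excluded from timing because the first calls typically include one-time setup such as graph conversion.

```python
import statistics
import time
from typing import Callable


def mean_latency(fn: Callable[[], object], runs: int = 20, warmup: int = 3) -> float:
    """Average wall-clock seconds per call of fn, after `warmup` untimed calls."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()  # untimed: early calls often include one-time setup
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)


def speedup(baseline_s: float, accelerated_s: float) -> float:
    """How many times faster the accelerated latency is than the baseline."""
    if accelerated_s <= 0:
        raise ValueError("accelerated latency must be positive")
    return baseline_s / accelerated_s


# Hypothetical usage; run_inference is your own zero-argument model call.
# Measure once with the integration disabled (e.g. openvino_tensorflow.disable()
# called before the model is loaded) and once with it enabled, then compare:
#   native_s = mean_latency(run_inference)   # integration disabled
#   ovtf_s = mean_latency(run_inference)     # integration enabled
#   print(f"speedup: {speedup(native_s, ovtf_s):.2f}x")
```

Averaging over several runs smooths out noise from other processes on the machine; for short models you may want to raise `runs`.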

Lastly, if you’re interested in exploring more notebooks with Docker and OpenVINO™ integration with TensorFlow*, here are links to some fantastic resources that you may enjoy. This post was heavily inspired by them.

Links

OpenVINO™ integration with TensorFlow* GitHub Homepage: https://github.com/openvinotoolkit/openvino_tensorflow

Prebuilt Images:

https://github.com/openvinotoolkit/openvino_tensorflow/tree/master/docker#prebuilt-images

Dockerfiles for OpenVINO™ integration with TensorFlow:

https://github.com/openvinotoolkit/openvino_tensorflow/tree/master/docker#openvino-integration-with-...

Dockerfiles for TensorFlow Serving with OpenVINO™ integration with TensorFlow:

https://github.com/openvinotoolkit/openvino_tensorflow/tree/master/docker#dockerfiles-for-tf-serving...

Notebooks to try:

https://github.com/openvinotoolkit/openvino_tensorflow/blob/master/examples/notebooks

We had a lot of fun making this post, so let us know in the comments whether it was helpful or what you would like to see in the next one. And as always, thanks for reading!


Notices & Disclaimers

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.