This article outlines the process of creating a custom model for object detection using YOLOv5 architecture. It covers setting up the training environment, obtaining a large annotated dataset, training the model, and using the custom model in CodeProject.AI Server. The article presents observations and improvements to achieve higher accuracy in object detection.

Introduction

Object Detection is a common application of Artificial Intelligence. In CodeProject.AI Server, we have added a module that uses the YOLOv5 architecture for object detection. It’s a brilliant system but using the 'standard’ YOLOv5 models means you are limited to the 80 classes available in the default models. To detect objects outside this default set, you need to train your own custom models.

While the YOLOv5 documentation walks you through the process of creating a new model, setting up a training session and producing good and accurate models is not as simple as they would lead you to believe.

This article will walk you through creating a custom model for the detection of backyard pests using the following steps:

- Setting up your training environment.

- Obtaining a sufficiently large and annotated collection of relevant and diverse images for training, testing, and validation of the model.

- Training your model, including critical steps in enabling GPU acceleration

- Using your custom model in CodeProject.AI Server

Some of my observations about the training process were unexpected, and I plan on expanding this article as I explore and improve the process and the resulting models.

There are a number of terms and metrics that are used to describe the performance of an AI Model. I will be using these to determine how 'good' a trained model is for the task for which it was trained. You can read about them in the Notes on Training Metrics.

Setting Up Your Training Environment

Hardware

The system I am using for my AI development and testing has the following specs:

| Spec | Value |

| CPU | 12th Gen Intel(R) Core(TM) i5-12400 2.50 GHz |

| RAM | 16 GB |

| GPU | NVidia GeForce RTX 3060 |

| GPU RAM | 12GB |

| Disk | 1 TB SSD |

| OS | Windows 11 |

Development IDE

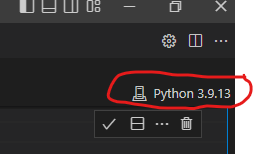

I am using Visual Studio Code as my development IDE as it runs on both Windows and Linux. I have this configured for Python development and am using a Python Jupyter Notebook to execute and record results. I am running Python 3.9.13.

Setup Project Folder

For my project, I created a directory c:\Dev\YoloV5_Training and opened it in Visual Studio Code. I then created a Python Notebook named Custom Model Training.ipynb. Having got this out of the way, let's get things going.

Setup a Python Virtual Environment

In order to not pollute the global Python installation, I will be creating a Virtual Environment for this project using the Python venv command. This virtual environment will be created in the venv sub-directory.

Run the following command only once.

!Python -m venv venv

Having done this, you need to tell Visual Studio Code that you want to use the virtual environment you just created. You do this by clicking on the Python Environment selector in the top-right of the Notebook document header.

Setup Tools for Dataset Creation

The most important part of training a custom YOLOv5, or any AI model, is obtaining a sufficiently large and varied set of annotated data with which to train the model. Fortunately, there are several dataset repositories available. One of these is Google's Open Images. This repository contains, for object detection, 16M bounding boxes for 600 object classes on 1.9M images.

In order to manage the selection of images and the creation of datasets from this repository, Google has worked with Voxel51 to integrate Open Images into their FiftyOne "open-source tool for building high-quality datasets and computer vision models." This tool includes both a Python package and UI for managing and viewing image datasets from a variety of sources, including Open Images.

In the attached Jupyter notebook you'll see, at the top, the following:

%pip install –upgrade pip

%pip install fiftyone

Setup the Model Training Tools

We will be using the tools supplied with the Ultralytics YOLOv5 GitHub Repository for training and validation of our models. We will clone a copy of this repository into our directory in the yolov5 subdirectory. Execute the following command:

!git clone https://github.com/ultralytics/yolov5

Setup the Dependencies

Did you set up the Virtual Environment for the notebook?

It is important that you set the Virtual Environment for the workbook so that you don't install packages into the Python global packages, potentially polluting other systems that rely on the global package store. In the upper-right of the of the Notebook, you can set the Virtual Environment used by Visual Studio Code to run Python from the Notebook. Select the Virtual Environment venv that was created earlier.

Then you can run one of the two following cells, depending on whether you have an Nvidia GPU or not.

# if running on a system with GPU

%pip install --upgrade pip

%pip install -r requirements-gpu.txt

%pip install ipywidgets

# if running on a system without GPU

%pip install --upgrade pip

%pip install -r requirements-cpu.txt

%pip install ipywidgets

Training a Model with a Small Dataset

Download a Subset from Open Images

Training a model from a large dataset with thousands or tens of thousands of images will take hours or days.

In order to evaluate the process of creating a dataset and training it, we will:

- create a dataset with a maximum of 1000 images each for Raccoons, Dogs, Cats, Squirrels, and Skunks each

- export the dataset to YOLOv5 format

- train the dataset and evaluate its performance metrics.

The intent of this dataset is mainly to detect if our friendly Trash Pandas are invading our garbage containers. We have added other classes than Raccoons to help ensure that the resulting model does not end up thinking that anything with four legs is a Raccoon.

Running the following script will download a subset of the Open Images dataset into our /users/<username>/.FiftyOne directory. In addition, it will create a number of subsets or the data and store this information in a MongoDb database in our /users/<username>/FiftyOne directory.

import fiftyone as fo

import fiftyone.zoo as foz

splits = ["train", "validation", "test"]

numSamples = 1000

seed = 42

if fo.dataset_exists("open-images-critters"):

fo.delete_dataset("open-images-critters")

dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes="Raccoon",

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-critters")

print(dataset)

if fo.dataset_exists("open-images-cats"):

fo.delete_dataset("open-images-cats")

cats_dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes="Cat",

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-cats")

dataset.merge_samples(cats_dataset)

if fo.dataset_exists("open-images-dogs"):

fo.delete_dataset("open-images-dogs")

dogs_dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes="Dog",

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-dogs")

dataset.merge_samples(dogs_dataset)

if fo.dataset_exists("open-images-squirrels"):

fo.delete_dataset("open-images-squirrels")

squirrels_dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes="Squirrel",

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-squirrels")

dataset.merge_samples(squirrels_dataset)

if fo.dataset_exists("open-images-skunks"):

fo.delete_dataset("open-images-skunks")

skunks_dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes="Skunk",

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-skunks")

dataset.merge_samples(skunks_dataset)

print(fo.list_datasets())

Export the Dataset to YOLOv5 Format

Before we can train the model using YOLOv5, we need to export the open-images-critters dataset to the correct format.

Fortunately, FiftyOne supplies the tools to perform this conversion. The following code will export the dataset to the datasets\critters subfolder. This will have the following structure:

import fiftyone as fo

export_dir = "datasets/critters"

label_field = "detections"

splits = ["train", "validation","test"]

classes = ["Raccoon", "Cat", "Dog", "Squirrel", "Skunk"]

dataset_or_view = fo.load_dataset("open-images-critters")

for split in splits:

split_view = dataset_or_view.match_tags(split)

split_view.export(

export_dir=export_dir,

dataset_type=fo.types.YOLOv5Dataset,

label_field=label_field,

split=split,

classes=classes,

)

Correct the dataset.yml file

The file datasets\critters\dataset.yaml is created during this process.

names:

- Raccoon

- Cat

- Dog

- Squirrel

- Skunk

nc: 5

path: c:\Dev\YoloV5_Training\datasets\critters

test: .\images\test\

train: .\images\train\

validation: .\images\validation\

The training code expects the label val rather than validation. Update the file to:

names:

- Raccoon

- Cat

- Dog

- Squirrel

- Skunk

nc: 5

path: c:\Dev\YoloV5_Training\datasets\critters

test: .\images\test\

train: .\images\train\

val: .\images\validation\

We are now ready to train the model.

Train the Small Dataset

To ensure that our process is correct, we will train a model with a small number of epochs (iterations). We will train using the standard yolov5s.pt model as a starting point with 50 epochs. The training results will be stored in train/critters/epochs50. In this directory, you will also find the resulting weights (model) as well as graphs and tables detailing the process and the resulting performance metrics.

Due to memory constraints, we had to reduce the batch size to 32. Make sure you have shut down any memory hogging applications such as Docker, otherwise, your training may stop with no warning.

Run the following script to do the training. This took 51 minutes on my machine.

!python yolov5/train.py --batch 32 --weights

yolov5s.pt --data datasets/critters/dataset.yaml --project train/critters

--name epochs50 --epochs 50

Once this is complete, the train/critters.epochs50 directory will contain a number of interesting files.

- results.csv which contains performance information for each epoch

- several PNG files with graphs for various performance metrics. The PR_curve.png and results.png are of particular interest.

- The PR_Curve.png shows the Precision vs. Recall curve for the best model.

- The results.png shows the values of the training metrics for each epoch of the training.

Precision/Recall Curve

Training Metrics

The resulting best.pt has a mAP@50 of 0.757 which is fairly good, but the low mAP@[0.5:0.95] 0f 0.55 indicates that will miss detecting some objects.

So let's verify that the model train\critters\epochs50\weights\best.pt actually will detect a Raccoon in an image. I will use the image datasets\critters\images\validation\8fbdeff053852ee7.jpg for this. You may wish to use a different image.

To get an idea of performance, run the detection twice. The first run has some setup resulting in longer inferencing times, so the second inference timing will be more representative.

Awww…who’s a cutey?

import torch

model2 = torch.hub.load('ultralytics/yolov5', 'custom', 'train/critters/epochs50/weights/best.pt', device="0")

result = model2("datasets/critters/images/validation/8fbdeff053852ee7.jpg")

result.print()

Using cache found in C:\Users\matth/.cache\torch\hub\ultralytics_yolov5_master

YOLOv5 2022-11-8 Python-3.9.13 torch-1.13.0+cu117 CUDA:0 (NVIDIA GeForce RTX 3060, 12288MiB)

Fusing layers...

Model summary: 157 layers, 7023610 parameters, 0 gradients, 15.8 GFLOPs

Adding AutoShape...

image 1/1: 768x1024 1 Raccoon

Speed: 13.0ms pre-process, 71.0ms inference, 4.0ms NMS per image at shape (1, 3, 480, 640)

Improve the Model

Considering that the standard yolov5s.pt model has an m_AP@50 of 0.568, our model's 0.757 appears to be impressive. However, previous runs of this process have shown that the generated model will miss some objects, particularly if they are small.

Improving the model's performance, according to the Ultralytics YOLOv5 documentation, should be done in two ways:

- increase the number of epochs to at least 300

- increase the number of images in the training set

We will do this in two stages so that we can see the effect each change impacts the performance metrics.

First, we will train with 300 epochs. In addition, we will use the model we just trained as a starting point. No sense wasting that work.

This will take several hours (5 hours on my machine), so you might want to do this in the evening.

!python yolov5/train.py --batch 32 --weights

train/critters/epochs50/weights/best.pt --data datasets/critters/dataset.yaml

--project train/critters --name epochs300 -

Once this is complete, the train/critters/epochs300 folder will contain the results of the training.

The performance can be seen in the PR_curve.png and results.png graphs.

Precision/Recall Curve

Training Metrics

The new value for the m_AP@50 is 0.777 which is an improvement of 2.6%. This improved mAP@[0.5:0.95] of 0.62 shows that the model will miss fewer objects.

Again, let's verify that it works.

import torch

model2 = torch.hub.load('ultralytics/yolov5', 'custom', 'train/critters/epochs300/weights/best.pt', device="0")

result = model2("datasets/critters/images/validation/8fbdeff053852ee7.jpg")

result.print()

Using cache found in C:\Users\matth/.cache\torch\hub\ultralytics_yolov5_master

YOLOv5 2022-11-8 Python-3.9.13 torch-1.13.0+cu117 CUDA:0 (NVIDIA GeForce RTX 3060, 12288MiB)

Fusing layers...

Model summary: 157 layers, 7023610 parameters, 0 gradients, 15.8 GFLOPs

Adding AutoShape...

image 1/1: 768x1024 1 Raccoon

Speed: 8.0ms pre-process, 71.4ms inference, 2.0ms NMS per image at shape (1, 3, 480, 640)

Training a Model with a Large Dataset

Having shown that we can train a custom YOLOv5 dataset and obtain reasonable performance with a small dataset, we want to try this with a larger dataset. We will then check to see if any additional performance merits the extra effort and time.

Download a Subset from Open Images

For this:

- create a dataset with a maximum of 25,000 total images for Raccoons, Dogs, Cats, Squirrels, and Skunks.

- export the dataset to YOLOv5 format

- train the dataset with 300 epochs starting with our last best.pt and evaluate its performance metrics.

As before, running the following script will download a subset of the Open Images dataset into our /users/<username>/.FiftyOne directory.

import fiftyone as fo

import fiftyone.zoo as foz

splits = ["train", "validation", "test"]

numSamples = 25000

seed = 42

if fo.dataset_exists("open-images-critters-large"):

fo.delete_dataset("open-images-critters-large")

dataset = foz.load_zoo_dataset(

"open-images-v6",

splits=splits,

label_types=["detections"],

classes=["Raccoon", "Dog", "Cat", "Squirrel", "Skunk"],

max_samples=numSamples,

seed=seed,

shuffle=True,

dataset_name="open-images-critters-large")

print(fo.list_datasets())

print(dataset)

Export the Large Dataset

As before, we will export the dataset to YOLOv5 format. We will save it in datasets/critters-large.

import fiftyone as fo

export_dir = "datasets/critters-large"

label_field = "detections"

splits = ["train", "validation","test"]

classes = ["Raccoon", "Cat", "Dog", "Squirrel", "Skunk"]

dataset_or_view = fo.load_dataset("open-images-critters-large")

for split in splits:

split_view = dataset_or_view.match_tags(split)

split_view.export(

export_dir=export_dir,

dataset_type=fo.types.YOLOv5Dataset,

label_field=label_field,

split=split,

classes=classes,

)

Correct the dataset.yml file

As before, the file datasets\critters-large\dataset.yaml is created during this process needs to be corrected.

names:

- Raccoon

- Cat

- Dog

- Squirrel

- Skunk

nc: 5

path: c:\Dev\YoloV5_Training\datasets\critters-large

test: .\images\test\

train: .\images\train\

validation: .\images\validation\

The training code expects the label val rather than validation. Update the file to:

names:

- Raccoon

- Cat

- Dog

- Squirrel

- Skunk

nc: 5

path: c:\Dev\YoloV5_Training\datasets\critters-large

test: .\images\test\

train: .\images\train\

val: .\images\validation\

We are now ready to train the model.

Train with the Larger Dataset

Again, we need to reduce the batch size due to memory constrains. In this case, I set it to 24.

!python yolov5/train.py --batch 24 --weights

train/critters/epochs300/weights/best.pt --data

datasets/critters-large/dataset.yaml --project train/critters-large --name

epochs300 --epochs 300 ^C

I had to stop the run after 15 hours. Fortunately, the training can be resumed. After each epoch, a best.pt file is created in the weights directory which, surprisingly enough, contains the best model found so far.

We can get the performance metrics for this model using the validation script.

!python yolov5/val.py --weights

train/critters-large/epochs300/weights/best.pt --data

datasets/critters-large/dataset.yaml --project val/critters --name large

--device 0

Once the validation is complete, the PR Curve, and other graphs, will be available in the val/critters/large folder.

We can already see that this model is much improved over the previous model and has a m_AP@50 of 0.811 or an additional 4.3%.

I can then restart the training using the --resume parameter on the training script:

!python yolov5/train.py --resume

train/critters-large/epochs300/weights/last.pt

Once this is complete, the train/critters-large/epochs300 folder will contain the results of the training.

The performance can be seen in the PR_curve.png graph.

Precision/Recall Curve

Training Metrics

The new value for the mAP@50 is 0.878 which is an additional improvement of 13% over the 300 epoch small model, or 16% over the small model with 50 epochs. In addition, the mAP@[0.5:0.95] of 0.75 indicates that the model will detect most objects.

Furthermore, the upward slope of the mAP graphs at the end of training indicate that additional training may further improve the model.

Again, let's verify that it works.

import torch

model2 = torch.hub.load('ultralytics/yolov5', 'custom', 'train/critters-large/epochs300/weights/best.pt', device="0")

result = model2("datasets/critters/images/validation/8fbdeff053852ee7.jpg")

result.print()

Validate the Model

The YOLOv5 code provides tools for validating the performance of the custom model. We will validate the model with and without image augmentation during inferencing.

Augmentation manipulates the image being inferenced so that multiple images with different modifications are supplied to the model. Of course, this means that there is more processing time required for any additional accuracy.

Run the following scripts at least twice as there is setup for the first inference.

!python yolov5/val.py --weights

train/critters-large/epochs300/weights/best.pt --data

datasets/critters-large/dataset.yaml --project val/critters-large --name

augmentee --device 0 --augment

!python yolov5/val.py --weights

train/critters-large/epochs300/weights/best.pt --data

datasets/critters-large/dataset.yaml --project val/critters-large --name not

The results of these validation runs are shown in the PR_curve.png graphs.

Normal Inferencing Precision/Recall Curve

Augmented Inferencing Precision/Recall Curve

- The unaugmented validation has mAP@50 of 0.877, a mAP@50:95 of 0.756, and took 6.4ms.

- The augmented validation has mAP@50 of 0.85, a mAP@50:95 of 0.734, and took 14.0ms.

This indicates that augmented inference does not improve the performance and in fact slightly reduces the performance and takes twice as long. So, at least for this model, augmented inferencing is not recommended.

Conclusions

We have shown that using readily available tools and data, it is a relatively simple, if time consuming task to:

- create a large, annotated dataset suitable for YOLOv5 training

- train and verify the operation of a custom YOLOv5 model

While I didn't go through the process here, I did try training with:

- freezing the all the layers but the last

- freezing the backbone (first 10 layers)

- hyper-parameter evolution

The first two did not result in any significant improvement, or actually decreased in performance.

The last just took too long and was terminated. This has been left for a future exercise.

Next Steps

For you, train a custom model to detect something you would find useful. This could be detecting:

- Amazon packages at you front door

- the presence of birds at your bird feeder and the species

- the School Bus arriving to pick up or drop off you children

- Guns, knives, and other weapons

- Face detection

- Face mask detection, or lack of face mask detection

The possibilities are endless.

For me, I would like to:

- evaluate modifications to the hyper-parameters to provide better and faster convergence on best models.

- evaluate the evolution of the hyper-parameters during training.

- as YOLOv5 now supports Classification and Segmentation, look at training and use of these types of models

- look at encapsulating the dataset creation and training process into a simple tool/UI.

Be on the lookout for follow up articles.

As always, comments, bug reports, and any improvement recommendations are always welcome.

Notes on Training Metrics

These are explained in detail at The Confusing Metrics of AP and mAP for Object Detection / Instance Segmentation and Mean Average Precision (mAP) Explained: Everything You Need to Know. I've summarized this here:

| Term | Formula | Description |

| Precision | Precision = Tp / (Tp + Fp) | A measurement of the probability of a positive result (object detection) is correct. |

| Recall | Recall = Tp /(Tp + Fn) | A measurement of the probability of a positive result when it should have been. |

| AP | The area under the Precision-Recall Curve | Average Precision |

| IoU | IoU = Ai / Au | Union over Intersection is a measure of the amount of overlap of two bounding boxes. When one of the bounding boxes is the Ground Truth and the other is the predicted value, the IoU is a measurement of the accuracy of the prediction. The larger the value, the more the predicted and Ground Truth values match. |

| mAP | mAP = 1/nc ∑ APi for i=1 to nc | Mean Average Precision. The average of the sum of the Average Precision for each class of object. This value is calculated for a particular value of IoU threshold for a positive value. |

| mAP@50 | | mAP for an IoU threshold of .5 for a positive prediction. |

| mAP@[0.5:0.95] | | The average of the mAP values for a number of IoU values between .5 and .95. Typically 10 intervals or a value of .05 is used. |

| Ground Truth | | The true value of the bounding box for the object. This is typically defined by the manual or automatic labeling process which defines the classes and bounding boxes for objects in an image. |

Where:

- Tp is the number of correct Positive results

- Fp is the number of incorrect Positive results

- Tn is the number of correct Negative results

- Fn is the number of incorrect Negative results.

- Ai is the area of Intersection of two bounding boxes

- Au is the area of the Union of two bounding boxes

- APi is the AP for class i

- nc is the number of classes