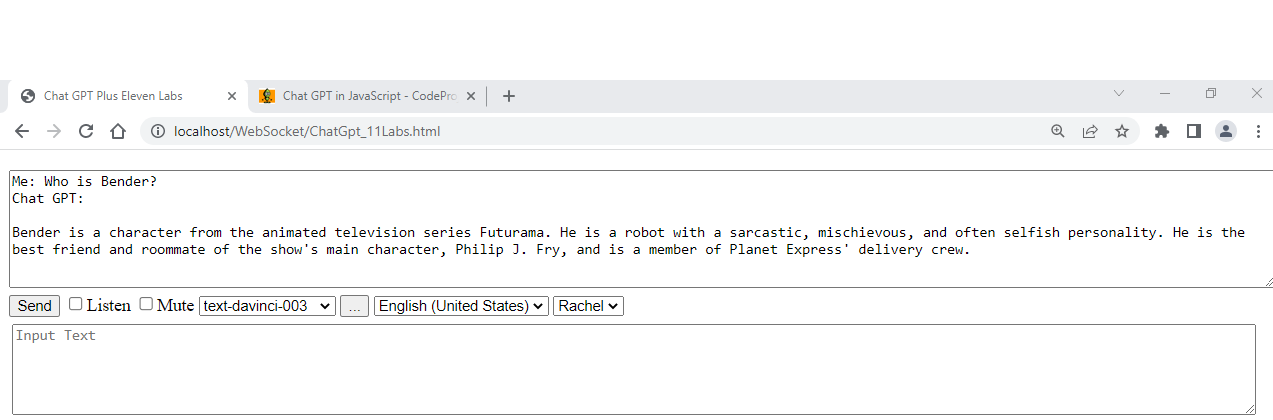

In this article, I build a web page that talks to Chat GPT using the browser's speech-to-text and Eleven Labs text-to-speech. The goal is to make Chat GPT answer in a human-like voice.

Introduction

This application is a JavaScript client for talking to Chat GPT. It demonstrates how to combine the Chat GPT API with the browser's speech-to-text and Eleven Labs text-to-speech. You talk to your browser, and your browser talks back to you in a human-like voice.

Background

This article is a sequel to my previous article, "Chat GPT in JavaScript".

Using the Code

- Get OPENAI_API_KEY from https://beta.openai.com/account/api-keys.

- Open ChatGpt_11Labs.js and add the API key to the first line.

- Get ELEVEN_LABS_API_KEY from https://beta.elevenlabs.io/speech-synthesis > Profile > API Key.

- Open ChatGpt_11Labs.js and add the API key to the second line.

Here is the code. It uses XMLHttpRequest to post JSON to OpenAI's endpoint, then posts Chat GPT's answer to the Eleven Labs text-to-speech endpoint, which returns audio that the page plays.
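The request flow can be sketched as two small pure functions, kept separate from the network calls so they are easy to check on their own. This is a minimal sketch, not the code the page uses: the names buildOpenAiRequest and extractReply are hypothetical, and the payload values simply mirror the ones in the listing.

```javascript
// Hypothetical helper: pick the endpoint and payload shape for a model id.
// Chat models take a "messages" array; older completion models take a "prompt".
function buildOpenAiRequest(sModel, sQuestion) {
  var bChat = sModel.indexOf("gpt-3.5-turbo") !== -1;
  if (bChat) {
    return {
      url: "https://api.openai.com/v1/chat/completions",
      body: { model: sModel, messages: [{ role: "user", content: sQuestion }] }
    };
  }
  return {
    url: "https://api.openai.com/v1/completions",
    body: { model: sModel, prompt: sQuestion, max_tokens: 2048, temperature: 0.5 }
  };
}

// Hypothetical helper: read the reply text from either response shape.
// Returns an empty string when the response carries no usable choice.
function extractReply(oJson) {
  if (!oJson || !oJson.choices || oJson.choices.length === 0) return "";
  var oChoice = oJson.choices[0];
  if (oChoice.text) return oChoice.text;
  if (oChoice.message && oChoice.message.content) return oChoice.message.content;
  return "";
}

// Usage: build a request, then parse a hand-written sample response.
var oRequest = buildOpenAiRequest("gpt-3.5-turbo", "Hello?");
var sReply = extractReply({ choices: [{ message: { content: "Hi there" } }] });
console.log(oRequest.url, sReply);
```

Keeping the payload logic apart from XMLHttpRequest means the endpoint choice and the response parsing can be tested without an API key.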

Code for ChatGpt_11Labs.js

var OPENAI_API_KEY = "";

var ELEVEN_LABS_API_KEY = "";

var sVoiceId = "21m00Tcm4TlvDq8ikWAM";

var bSpeechInProgress = false;

var oSpeechRecognizer = null

function OnLoad() {

// Hide the Listen checkbox when the browser has no speech recognition.

if (!("webkitSpeechRecognition" in window)) {

lblSpeak.style.display = "none";

}

GetVoiceList();

}

function ChangeLang(o) {

if (oSpeechRecognizer) {

oSpeechRecognizer.lang = selLang.value;

}

}

function GetVoiceList() {

var oHttp = new XMLHttpRequest();

oHttp.open("GET", "https://api.elevenlabs.io/v1/voices");

oHttp.setRequestHeader("Accept", "application/json");

oHttp.setRequestHeader("Content-Type", "application/json");

oHttp.setRequestHeader("xi-api-key", ELEVEN_LABS_API_KEY)

oHttp.onreadystatechange = function () {

if (oHttp.readyState === 4) {

var oJson = { voices: []};

try {

oJson = JSON.parse(oHttp.responseText);

} catch (ex) {

txtOutput.value += "Error: " + ex.message

}

for (var i = 0; i < oJson.voices.length; i++) {

selVoices.options[selVoices.length] = new Option(oJson.voices[i].name, oJson.voices[i].voice_id);

};

}

};

oHttp.send();

}

function SayIt() {

var s = txtMsg.value;

if (s == "") {

txtMsg.focus();

return;

}

TextToSpeech(s);

}

function TextToSpeech(s) {

if (chkMute.checked) return;

if (selVoices.length > 0 && selVoices.selectedIndex != -1) {

sVoiceId = selVoices.value;

}

spMsg.innerHTML = "Eleven labs text-to-speech...";

var oHttp = new XMLHttpRequest();

oHttp.open("POST", "https://api.elevenlabs.io/v1/text-to-speech/" + sVoiceId);

oHttp.setRequestHeader("Accept", "audio/mpeg");

oHttp.setRequestHeader("Content-Type", "application/json");

oHttp.setRequestHeader("xi-api-key", ELEVEN_LABS_API_KEY)

oHttp.onload = function () {

if (oHttp.readyState === 4) {

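spMsg.innerHTML = "";

// Do not try to play an error response as audio.

if (oHttp.status !== 200) {

spMsg.innerHTML = "Eleven Labs error: HTTP " + oHttp.status;

return;

}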

var oBlob = new Blob([this.response], { "type": "audio/mpeg" });

var audioURL = window.URL.createObjectURL(oBlob);

var audio = new Audio();

audio.src = audioURL;

audio.play();

}

};

var data = {

text: s,

voice_settings: { stability: 0, similarity_boost: 0 }

};

oHttp.responseType = "arraybuffer";

oHttp.send(JSON.stringify(data));

}

function SetModels() {

selModel.length = 0;

var oHttp = new XMLHttpRequest();

oHttp.open("GET", "https://api.openai.com/v1/models");

oHttp.setRequestHeader("Accept", "application/json");

oHttp.setRequestHeader("Content-Type", "application/json");

oHttp.setRequestHeader("Authorization", "Bearer " + OPENAI_API_KEY);

oHttp.onreadystatechange = function () {

if (oHttp.readyState === 4) {

var oJson = { data: [] };

try {

oJson = JSON.parse(oHttp.responseText);

} catch (ex) {

txtOutput.value += "Error: " + ex.message

}

var l = [];

for (var i = 0; i < oJson.data.length; i++) {

l.push(oJson.data[i].id);

};

l.sort();

for (var i = 0; i < l.length; i++) {

selModel.options[selModel.length] = new Option(l[i], l[i]);

};

for (var i = 0; i < selModel.length; i++) {

if (selModel.options[i].value == "text-davinci-003") {

selModel.selectedIndex = i;

break;

}

};

}

};

oHttp.send();

}

function Send() {

var sQuestion = txtMsg.value;

if (sQuestion == "") {

alert("Type in your question!");

txtMsg.focus();

return;

}

spMsg.innerHTML = "Chat GPT is thinking...";

var sUrl = "https://api.openai.com/v1/completions";

var sModel = selModel.value;

if (sModel.indexOf("gpt-3.5-turbo") != -1) {

sUrl = "https://api.openai.com/v1/chat/completions";

}

var oHttp = new XMLHttpRequest();

oHttp.open("POST", sUrl);

oHttp.setRequestHeader("Accept", "application/json");

oHttp.setRequestHeader("Content-Type", "application/json");

oHttp.setRequestHeader("Authorization", "Bearer " + OPENAI_API_KEY)

oHttp.onreadystatechange = function () {

if (oHttp.readyState === 4) {

spMsg.innerHTML = "";

var oJson = {}

if (txtOutput.value != "") txtOutput.value += "\n";

try {

oJson = JSON.parse(oHttp.responseText);

} catch (ex) {

txtOutput.value += "Error: " + ex.message

}

if (oJson.error && oJson.error.message) {

txtOutput.value += "Error: " + oJson.error.message;

} else if (oJson.choices) {

var s = "";

if (oJson.choices[0].text) {

s = oJson.choices[0].text;

} else if (oJson.choices[0].message) {

s = oJson.choices[0].message.content;

}

if (selLang.value != "en-US") {

var a = s.split("?\n");

if (a.length == 2) {

s = a[1];

}

}

if (s == "") {

// Show the placeholder instead of silently dropping it.

txtOutput.value += "Chat GPT: No response";

} else {

txtOutput.value += "Chat GPT: " + s;

TextToSpeech(s);

}

}

}

};

var iMaxTokens = 2048;

var sUserId = "1";

var dTemperature = 0.5;

var data = {

model: sModel,

prompt: sQuestion,

max_tokens: iMaxTokens,

user: sUserId,

temperature: dTemperature,

frequency_penalty: 0.0,

presence_penalty: 0.0,

stop: ["#", ";"]

}

if (sModel.indexOf("gpt-3.5-turbo") != -1) {

data = {

"model": sModel,

"messages": [

{

"role": "user",

"content": sQuestion

}

]

}

}

oHttp.send(JSON.stringify(data));

if (txtOutput.value != "") txtOutput.value += "\n";

txtOutput.value += "Me: " + sQuestion;

txtMsg.value = "";

}

function Mute(b) {

if (b) {

selVoices.style.display = "none";

} else {

selVoices.style.display = "";

}

}

function SpeechToText() {

if (oSpeechRecognizer) {

if (chkSpeak.checked) {

oSpeechRecognizer.start();

} else {

oSpeechRecognizer.stop();

}

return;

}

oSpeechRecognizer = new webkitSpeechRecognition();

oSpeechRecognizer.continuous = true;

oSpeechRecognizer.interimResults = true;

oSpeechRecognizer.lang = selLang.value;

oSpeechRecognizer.start();

oSpeechRecognizer.onresult = function (event) {

var interimTranscripts = "";

for (var i = event.resultIndex; i < event.results.length; i++) {

var transcript = event.results[i][0].transcript;

if (event.results[i].isFinal) {

txtMsg.value = transcript;

Send();

} else {

transcript = transcript.replace(/\n/g, "<br>");

interimTranscripts += transcript;

}

var oDiv = document.getElementById("idText");

oDiv.innerHTML = '<span style="color: #999;">' + interimTranscripts + '</span>';

}

};

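oSpeechRecognizer.onerror = function (event) {

// Surface recognition errors instead of ignoring them.

spMsg.innerHTML = "Speech recognition error: " + event.error;

};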

}
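The result handling in SpeechToText mixes two concerns: sending finished phrases and showing text that is still being recognized. As a rough sketch, the two can be separated with a helper that only sorts the transcripts. The name collectTranscripts is hypothetical, and the sample data below is a plain object standing in for the browser's result list, so it runs outside a browser.

```javascript
// Hypothetical helper: separate final phrases from interim text.
// "results" mimics the browser's result list: each entry has an isFinal
// flag, and entry[0].transcript holds the best alternative.
function collectTranscripts(results, iStart) {
  var aFinal = [];
  var sInterim = "";
  for (var i = iStart; i < results.length; i++) {
    var sTranscript = results[i][0].transcript;
    if (results[i].isFinal) {
      aFinal.push(sTranscript.trim());
    } else {
      sInterim += sTranscript;
    }
  }
  return { finals: aFinal, interim: sInterim };
}

// Usage with plain objects standing in for browser results.
var aFakeResults = [
  { 0: { transcript: "hello world" }, isFinal: true },
  { 0: { transcript: " how are" }, isFinal: false }
];
var oTranscripts = collectTranscripts(aFakeResults, 0);
console.log(oTranscripts.finals, oTranscripts.interim);
```

In the page, each entry in finals would be passed to Send(), and interim would be written to the idText element once after the loop rather than on every iteration.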

Code for the HTML page ChatGpt_11Labs.html

<!DOCTYPE html>

<html>

<head>

<title>Chat GPT Plus Eleven Labs</title>

<script src="ChatGpt_11Labs.js?v=6"></script>

</head>

<body onload="OnLoad()">

<div id="idContainer">

<textarea id="txtOutput" rows="10" style="margin-top: 10px; width: 100%;" placeholder="Output"></textarea>

<div>

<button type="button" onclick="Send()" id="btnSend">Send</button>

<button type="button" onclick="SayIt()" style="display: none">Say It</button>

<label id="lblSpeak"><input id="chkSpeak" type="checkbox" onclick="SpeechToText()" />Listen</label>

<label id="lblMute"><input id="chkMute" type="checkbox" onclick="Mute(this.checked)" />Mute</label>

<select id="selModel">

<option value="text-davinci-003">text-davinci-003</option>

<option value="text-davinci-002">text-davinci-002</option>

<option value="code-davinci-002">code-davinci-002</option>

<option value="gpt-3.5-turbo">gpt-3.5-turbo</option>

<option value="gpt-3.5-turbo-0301">gpt-3.5-turbo-0301</option>

</select>

<button type="button" onclick="SetModels()" id="btnSetModels" title="Load all models">...</button>

<select id="selLang" onchange="ChangeLang(this)">

<option value="en-US">English (United States)</option>

<option value="fr-FR">French (France)</option>

<option value="ru-RU">Russian (Russia)</option>

<option value="pt-BR">Portuguese (Brazil)</option>

<option value="es-ES">Spanish (Spain)</option>

<option value="de-DE">German (Germany)</option>

<option value="it-IT">Italian (Italy)</option>

<option value="pl-PL">Polish (Poland)</option>

<option value="nl-NL">Dutch (Netherlands)</option>

</select>

<select id="selVoices"></select>

<span id="spMsg"></span>

</div>

<textarea id="txtMsg" rows="5" wrap="soft" style="width: 98%; margin-left: 3px; margin-top: 6px" placeholder="Input Text"></textarea>

<div id="idText"></div>

</div>

</body>

</html>

Points of Interest

Not all browsers support speech-to-text. The code checks for webkitSpeechRecognition, which Chrome and Edge expose and Firefox does not, so the Listen checkbox is hidden in Firefox.
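The check can be made a little more general by also looking for the unprefixed SpeechRecognition name from the Web Speech API before falling back to the webkit-prefixed one. This is a minimal sketch: the helper name getSpeechRecognitionCtor is hypothetical, and it takes the window object as a parameter so it can be tried outside a browser.

```javascript
// Hypothetical helper: return the speech recognition constructor, or null
// when the given window-like object does not provide one.
function getSpeechRecognitionCtor(oWindow) {
  if (!oWindow) return null;
  if ("SpeechRecognition" in oWindow) return oWindow.SpeechRecognition;
  if ("webkitSpeechRecognition" in oWindow) return oWindow.webkitSpeechRecognition;
  return null;
}

// Usage with stand-in objects; in the page you would pass "window".
function FakeRecognizer() {}
var fnPrefixed = getSpeechRecognitionCtor({ webkitSpeechRecognition: FakeRecognizer });
var fnMissing = getSpeechRecognitionCtor({});
console.log(fnPrefixed === FakeRecognizer, fnMissing === null);
```

In OnLoad, a null result would hide lblSpeak; otherwise the returned constructor could be used in place of the hard-coded webkitSpeechRecognition.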

History

- 20th March, 2023: Version 1 created

- 24th May, 2023: Chat GPT4 support