The point of this implementation is that: 1) I need several caches to manage objects of different types, I want type safety, and I don't want numerous discrete instances but rather a container for all caches; 2) the caches will not be large; 3) the caches should exist throughout the lifetime of the application unless explicitly cleared; 4) there is no need for eviction/removal policies because these policies cannot be determined by the application; 5) the keyed cache collection and the caches it manages must be thread safe.

Introduction

In-memory caching in .NET usually uses instances of MemoryCache in the System.Runtime.Caching namespace, which supports callbacks when an entry is about to be removed, as well as eviction/removal policies based on an absolute or sliding expiration and/or the size of the cache. More sophisticated caching systems provide persistence, and some deal with distributed caches; neither feature is necessary for my requirements. One of the drawbacks of MemoryCache is that it returns an object, so getting a value of the desired type requires a cast or an extension method that does the cast; an example of such an extension method is given in the References. Oddly, MemoryCache does not implement a "clear all" method: you have to dispose of the cache and create it anew. It appears that Microsoft's implementation is coupled with the cache state, in particular whether it has been disposed of. Again, this is not a concern that I have: the cache might be cleared, but there's no reason to dispose of it entirely. Microsoft also has this warning regarding MemoryCache, at least for the old .NET 3.1 platform:

"Do not create MemoryCache instances unless it is required. If you create cache instances in client and Web applications, the MemoryCache instances should be created early in the application life cycle. You must create only the number of cache instances that will be used in your application, and store references to the cache instances in variables that can be accessed globally. For example, in ASP.NET applications, you can store the references in application state. If you create only a single cache instance in your application, use the default cache and get a reference to it from the Default property when you need to access the cache."

So it seems that MemoryCache, at least with regard to web applications, is intended to be used as a singleton, with the expectation that the cache will be large. I certainly intend to use the service described here as a "singleton", but to support several (under a dozen) small caches.
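To make the two MemoryCache drawbacks described above concrete, here is a minimal sketch, assuming the System.Runtime.Caching package (part of .NET Framework, a NuGet package on .NET Core and .NET 5+). The class and method names are hypothetical. It shows that Get returns object, so the caller must cast or pattern-match, and that "clearing" a named MemoryCache means disposing of it and creating a new one.

```csharp
using System;
using System.Runtime.Caching;

// Hypothetical helpers illustrating the MemoryCache drawbacks described above.
public static class MemoryCacheDrawbacks
{
    // MemoryCache.Get returns object, so the caller has to cast or pattern-match.
    public static int GetInt(MemoryCache cache, string key)
    {
        object cached = cache.Get(key);
        return cached is int n
            ? n
            : throw new InvalidOperationException($"No int is cached under '{key}'.");
    }

    // There is no "clear all" method, so one workaround is to dispose of the
    // instance and create a new one with the same name. This cannot be used for
    // MemoryCache.Default, because "Default" is a reserved cache name.
    public static MemoryCache Recreate(MemoryCache cache)
    {
        string name = cache.Name;
        cache.Dispose();
        return new MemoryCache(name);
    }
}
```

Any other code still holding a reference to the old instance is now holding a disposed cache, which is the coupling with cache state mentioned above.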

One of the drawbacks of my implementation (there are probably others, but this one sticks out like a sore thumb) is that getting a cached item requires the generic type parameters of both the key and the value, for example:

service.GetCachedItem<string, int>(CacheKey.Cache1, "test1");

If the key is always a string, the code could be simplified to only require the type of the cached value.
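A minimal sketch of that simplification, assuming every cache uses string keys. The class name StringKeyedCachingService is hypothetical, and it throws InvalidOperationException instead of the article's CachingServiceException so that it is self-contained:

```csharp
using System;
using System.Collections.Concurrent;

#nullable enable

// Hypothetical variant of the caching service in which every cache uses string keys,
// so only the value type R has to be supplied when getting an item.
public class StringKeyedCachingService<Q> where Q : notnull
{
    // Maps each cache key to a ConcurrentDictionary<string, R>, stored as object.
    private readonly ConcurrentDictionary<Q, object> caches = new();

    public void SetCachedItem<R>(Q type, string key, R value) => GetCache<R>(type)[key] = value;

    public R GetCachedItem<R>(Q type, string key)
    {
        var cache = GetCache<R>(type);

        if (!cache.TryGetValue(key, out R value))
        {
            throw new InvalidOperationException($"Key {key} does not exist in the cache {type}");
        }

        return value;
    }

    private ConcurrentDictionary<string, R> GetCache<R>(Q type)
    {
        // Create the cache on first use; "as" yields null if it holds a different value type.
        object cache = caches.GetOrAdd(type, _ => new ConcurrentDictionary<string, R>());

        return cache as ConcurrentDictionary<string, R>
            ?? throw new InvalidOperationException($"Cache {type} does not hold values of type {typeof(R).Name}.");
    }
}
```

With this shape, the earlier call becomes `service.GetCachedItem<int>(CacheKey.Cache1, "test1")`, at the cost of giving up non-string keys.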

The point of this implementation is that:

- I need several caches to manage objects of different types

- and I want type safety

- and I don't want numerous discrete instances but rather a container for all caches.

- The caches will not be large.

- The caches should exist throughout the lifetime of the application unless explicitly cleared.

- There is no need for eviction/removal policies because these policies cannot be determined by the application.

- The keyed cache collection and the caches it manages must be thread safe.

Hence, this article.

Implementation

There are some implementation details that are important to go over.

Thread Safety

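Thread safety is accomplished by using ConcurrentDictionary instances for both the keyed cache collection and each individual cache, so each individual lookup, insertion, and removal is atomic. Note, however, that a sequence of calls, such as TryGetValue followed by an indexer assignment, is not atomic as a whole; ConcurrentDictionary's GetOrAdd method exists for exactly that look-up-or-create case.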

Instantiating the Keyed Cache Collection

The service is of type CachingService<Q>, indicating that all caches are keyed by the type Q. For example, to instantiate the service using an int as the cache collection key:

private CachingService<int> service = new CachingService<int>();

As another example, this code declares a singleton service in ASP.NET Core, using an enum type:

builder.Services.AddSingleton<CachingService<CacheCollectionKey>>();

ASP.NET Core's dependency injection then passes it to controller and other service constructors, for example:

private CachingService<CacheCollectionKey> cachingService;

public RootController(CachingService<CacheCollectionKey> cachingService)
{
    this.cachingService = cachingService;
}
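The methods below all use a field named keyedCacheCollection whose declaration is not shown. Judging from how it is used (TryGetValue(type, out object typeCache), and values cast to IDictionary), a plausible shell for the class looks like the following sketch; the field type ConcurrentDictionary<Q, object> and the notnull constraint are assumptions, and only Count is filled in:

```csharp
using System.Collections;
using System.Collections.Concurrent;

#nullable enable

// Assumed shell of CachingService<Q>; the field declaration is not shown in the article.
public class CachingService<Q> where Q : notnull
{
    // Outer level: one entry per cache, keyed by Q (for example an enum value).
    // Inner level: each value is a ConcurrentDictionary<T, R>, stored as object
    // because different caches have different key and value types.
    private readonly ConcurrentDictionary<Q, object> keyedCacheCollection = new();

    // Same logic as the Count method described later.
    public int Count(Q type) =>
        keyedCacheCollection.TryGetValue(type, out var typeCache)
            ? ((IDictionary)typeCache).Count
            : 0;

    // SetCachedItem, GetCachedItem, RemoveCachedItem, the Clear methods and GetCache
    // would go here.
}
```

Storing each cache as object is what allows caches with different <T, R> types to share one container, and it is also why the service needs the runtime type checks discussed below.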

Set and Get a Cache's Key-Value

public void SetCachedItem<T, R>(Q type, T key, R value)
{
    var dict = GetCache<T, R>(type);
    Assert.NotNull<CachingServiceException>(dict, $"Cache of generic type <{typeof(T).Name}, {typeof(R).Name}> does not exist.");
    dict[key] = value;
}

public R GetCachedItem<T, R>(Q type, T key, Func<T, R> creator = null)
{
    var dict = GetCache<T, R>(type);
    Assert.NotNull<CachingServiceException>(dict, $"Cache of generic type <{typeof(T).Name}, {typeof(R).Name}> does not exist.");

    var hasKey = dict.TryGetValue(key, out R ret);
    Assert.That<CachingServiceException>(hasKey || creator != null, $"Key {key} does not exist in the cache {type}");

    if (!hasKey)
    {
        ret = creator(key);
        dict[key] = ret;
    }

    return ret;
}

When we are setting a key-value in a specific cache, the generic types are all inferred, for example:

service.SetCachedItem(CacheKey.Cache1, "test1", 1);

However, when we get a cached item, we must supply the key and value generic types, for example:

var n = service.GetCachedItem<string, int>(CacheKey.Cache1, "test1");

As discussed in the introduction, this is a bit of an annoyance, but it's better than an explicit cast or the as operator. Also note that:

- This method will assert if a cache already exists for the given key but its dictionary is not of type <T, R>.

- However, if no backing dictionary exists for that key in the collection, one of type <T, R> will be created.

- The method will assert if the cache does not contain the key and no creator function is provided.
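Because GetCachedItem calls TryGetValue and then, separately, the creator and the indexer, two threads that miss on the same key at the same time can both run the creator, and the two callers can end up with different instances. A hedged alternative, sketched below with a hypothetical class name and InvalidOperationException in place of CachingServiceException, routes the creator case through ConcurrentDictionary.GetOrAdd, which stores only one value per key. The creator may still run more than once under contention, so it should be free of side effects.

```csharp
using System;
using System.Collections.Concurrent;

#nullable enable

// Hypothetical sketch: GetCachedItem with the creator path routed through GetOrAdd.
public class CreatorCachingSketch<Q> where Q : notnull
{
    private readonly ConcurrentDictionary<Q, object> keyedCacheCollection = new();

    public R GetCachedItem<T, R>(Q type, T key, Func<T, R>? creator = null) where T : notnull
    {
        var dict = keyedCacheCollection.GetOrAdd(type, _ => new ConcurrentDictionary<T, R>())
                as ConcurrentDictionary<T, R>
            ?? throw new InvalidOperationException(
                $"Cache of generic type <{typeof(T).Name}, {typeof(R).Name}> does not exist.");

        if (creator != null)
        {
            // Returns the stored value, adding creator(key) only if the key is missing.
            return dict.GetOrAdd(key, creator);
        }

        return dict.TryGetValue(key, out R value)
            ? value
            : throw new InvalidOperationException($"Key {key} does not exist in the cache {type}");
    }
}
```

A second call with a different creator returns the value stored by the first call, because the creator only runs when the key is missing.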

Removing a Cached Key

public (bool removed, R val) RemoveCachedItem<T, R>(Q type, T key)
{
    var dict = GetCache<T, R>(type);
    Assert.NotNull<CachingServiceException>(dict, $"Cache of generic type <{typeof(T).Name}, {typeof(R).Name}> does not exist.");

    // TryRemove sets ret to default(R) when the key is not present.
    var removed = dict.TryRemove(key, out R ret);
    return (removed, ret);
}

This method returns a tuple indicating whether the key was removed from the specified cache, along with the value that was removed, if it existed. If the key does not exist, the returned value is default(R): for example, 0 for an int and null for reference types.
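Naming both tuple elements, as in (bool removed, R val), lets callers write result.val (as the RemoveItemFromCache1 unit test does) or deconstruct the result. The standalone helper below is a hypothetical illustration of that shape operating directly on a ConcurrentDictionary, not part of the service:

```csharp
using System.Collections.Concurrent;

#nullable enable

public static class RemoveSketch
{
    // Mirrors RemoveCachedItem: TryRemove reports whether the key was present and,
    // when it was not, leaves val set to default(R).
    public static (bool removed, R val) Remove<T, R>(ConcurrentDictionary<T, R> dict, T key)
        where T : notnull
    {
        bool removed = dict.TryRemove(key, out R val);
        return (removed, val);
    }
}
```

For example, `var (removed, val) = RemoveSketch.Remove(dict, "test1");` reads both results in one statement.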

Clear Caches

There are three ways to clear the caches depending on what you want to do:

- Clear the keyed cache collection.

- Clear all caches but preserve the keyed cache collection.

- Clear all entries in a specific cache.

public void ClearKeyedCache()
{
    keyedCacheCollection.Clear();
}

public void ClearAllCaches()
{
    // Each cache is a ConcurrentDictionary<T, R>, which implements the non-generic IDictionary
    // (Cast comes from System.Linq).
    foreach (var cache in keyedCacheCollection.Values.Cast<IDictionary>())
    {
        cache.Clear();
    }
}

public void ClearCache(Q type)
{
    if (keyedCacheCollection.TryGetValue(type, out object typeCache))
    {
        ((IDictionary)typeCache).Clear();
    }
}
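One practical difference between the first two options: ClearAllCaches empties each cache but keeps its ConcurrentDictionary<T, R>, so a cache key stays bound to its original key and value types, whereas ClearKeyedCache discards the dictionaries, so the next access can create a cache with different types. The sketch below demonstrates this with a hypothetical class whose GetCache, like the service's, returns null on a type mismatch (it uses GetOrAdd for brevity):

```csharp
using System.Collections;
using System.Collections.Concurrent;
using System.Linq;

#nullable enable

// Hypothetical sketch contrasting ClearAllCaches with ClearKeyedCache.
public class ClearSketch<Q> where Q : notnull
{
    private readonly ConcurrentDictionary<Q, object> keyedCacheCollection = new();

    // Returns null when the cache for this key was created with other generic types.
    public ConcurrentDictionary<T, R>? GetCache<T, R>(Q type) where T : notnull =>
        keyedCacheCollection.GetOrAdd(type, _ => new ConcurrentDictionary<T, R>())
            as ConcurrentDictionary<T, R>;

    // Drops every cache, including its <T, R> binding.
    public void ClearKeyedCache() => keyedCacheCollection.Clear();

    // Empties every cache but keeps each dictionary, and therefore its <T, R> binding.
    public void ClearAllCaches()
    {
        foreach (var cache in keyedCacheCollection.Values.Cast<IDictionary>())
        {
            cache.Clear();
        }
    }
}
```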

Get the Count of Items in a Cache

public int Count(Q type)
{
    int count = 0;

    if (keyedCacheCollection.TryGetValue(type, out object typeCache))
    {
        count = ((IDictionary)typeCache).Count;
    }

    return count;
}

Note that this method returns 0, rather than throwing an exception, if the keyed cache does not yet exist.

Internal: Create the Keyed Cache

Internally, we have a method that creates the keyed cache if it does not exist:

private ConcurrentDictionary<T, R> GetCache<T, R>(Q type)
{
    ConcurrentDictionary<T, R> dict;

    if (!keyedCacheCollection.TryGetValue(type, out object typeCache))
    {
        typeCache = new ConcurrentDictionary<T, R>();
        keyedCacheCollection[type] = typeCache;
        dict = typeCache as ConcurrentDictionary<T, R>;
    }
    else
    {
        dict = typeCache as ConcurrentDictionary<T, R>;
    }

    return dict;
}

This method returns the cache instance whether it created one or found an existing one. Because the values in keyedCacheCollection are typed as object, each one is cast to a ConcurrentDictionary<T, R> using the as operator, which returns null if the generic parameters <T, R> do not match those of the existing dictionary; hence the assertions in the code described earlier.
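The TryGetValue-then-assign sequence in GetCache is not atomic: if two threads ask for a cache that does not exist yet, both can create a dictionary, the later assignment replaces the earlier one, and items already written to the replaced dictionary are lost. A hedged alternative is ConcurrentDictionary.GetOrAdd, which stores at most one dictionary per key; the factory may still run more than once under contention, but every caller receives the stored instance. The class name below is hypothetical:

```csharp
using System.Collections.Concurrent;

#nullable enable

// Hypothetical sketch of GetCache built on GetOrAdd.
public class AtomicCacheCollection<Q> where Q : notnull
{
    private readonly ConcurrentDictionary<Q, object> keyedCacheCollection = new();

    // Returns the single stored dictionary for this cache key, creating it on first use.
    // As in the original method, the "as" cast yields null if the existing dictionary
    // was created with different <T, R> types.
    public ConcurrentDictionary<T, R>? GetCache<T, R>(Q type) where T : notnull
    {
        object typeCache = keyedCacheCollection.GetOrAdd(type, _ => new ConcurrentDictionary<T, R>());
        return typeCache as ConcurrentDictionary<T, R>;
    }
}
```

This version also removes the duplicated cast in the two branches of the original method.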

The Assert Class

I don't like "if" statements to determine whether to throw an exception, but I also want to throw a typed exception, so I have a simple helper class that I use everywhere:

using System;

namespace Clifton.Lib
{
    public static class Assert
    {
        public static void That<T>(bool condition, string msg) where T : Exception, new()
        {
            if (!condition)
            {
                var ex = Activator.CreateInstance(typeof(T), new object[] { msg }) as T;
                throw ex;
            }
        }

        public static void NotNull<T>(object obj, string msg) where T : Exception, new()
        {
            if (obj == null)
            {
                var ex = Activator.CreateInstance(typeof(T), new object[] { msg }) as T;
                throw ex;
            }
        }
    }
}

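Therefore, there is a specific exception class for this service. It needs both constructors: the parameterless one satisfies the new() constraint on the Assert methods, and the one taking a message is the constructor that Activator.CreateInstance invokes: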

public class CachingServiceException : Exception
{
    public CachingServiceException() { }
    public CachingServiceException(string message) : base(message) { }
}

Unit Tests

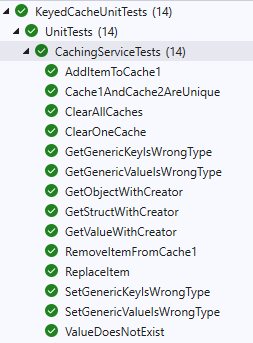

I think it's a good idea to have unit tests for this service, and it's a good way to "document" the usage. There are 14 unit tests:

Each unit test clears the keyed cache collection to start with a blank slate:

[TestClass]
public class CachingServiceTests
{
    private CachingService<CacheKey> service = new CachingService<CacheKey>();

    [TestInitialize]
    public void TestInitialize()
    {
        service.ClearKeyedCache();
    }

    ...

The service is declared as a field of the test class, and there is an enum for the two caches used in the tests:

public enum CacheKey
{
    Cache1 = 1,
    Cache2 = 2
}

Here are a couple of tests to illustrate how they are written. This one tests that clearing one cache does not affect another cache:

[TestMethod]
public void ClearOneCache()
{
    service.SetCachedItem(CacheKey.Cache1, "test1", 1);
    service.SetCachedItem(CacheKey.Cache2, "test2", "2");
    Assert.AreEqual(1, service.Count(CacheKey.Cache1));
    Assert.AreEqual(1, service.Count(CacheKey.Cache2));

    service.ClearCache(CacheKey.Cache1);
    Assert.AreEqual(0, service.Count(CacheKey.Cache1));
    Assert.AreEqual(1, service.Count(CacheKey.Cache2));
}

This one verifies that removing an item returns the removed item and that the item is in fact removed:

[TestMethod]
public void RemoveItemFromCache1()
{
    service.SetCachedItem(CacheKey.Cache1, "test1", 1);
    var item = service.RemoveCachedItem<string, int>(CacheKey.Cache1, "test1");
    Assert.IsTrue(item.removed);
    Assert.AreEqual(1, item.val);
    Assert.AreEqual(0, service.Count(CacheKey.Cache1));
}

Here, we test that getting a key that is not in the cache, without a creator function, throws a CachingServiceException:

[TestMethod, ExpectedException(typeof(CachingServiceException))]
public void ValueDoesNotExist()
{
    service.GetCachedItem<string, int>(CacheKey.Cache1, "1");
}

Here, we test the ability to create a value-type value and a reference-type value when the key does not exist in the cache:

[TestMethod]
public void GetStructWithCreator()
{
    var now = DateTime.Now;
    var n = service.GetCachedItem(CacheKey.Cache1, "1", key => now);
    Assert.AreEqual(now, n);
    Assert.AreEqual(1, service.Count(CacheKey.Cache1));
}

[TestMethod]
public void GetObjectWithCreator()
{
    // TestObject is a simple test class with an int Id property (its definition is not shown).
    var obj = new TestObject() { Id = 1 };
    var n = service.GetCachedItem(CacheKey.Cache1, "1", key => obj);
    Assert.AreEqual(obj, n);
    Assert.AreEqual(obj.Id, n.Id);
    Assert.AreEqual(1, service.Count(CacheKey.Cache1));
}

And there are tests to verify an exception is thrown on type mismatches between the key and value types of a particular cache:

[TestMethod, ExpectedException(typeof(CachingServiceException))]
public void GetGenericKeyIsWrongType()
{
    service.SetCachedItem(CacheKey.Cache1, "1", 1);
    service.GetCachedItem<int, int>(CacheKey.Cache1, 1);
}

[TestMethod, ExpectedException(typeof(CachingServiceException))]
public void GetGenericValueIsWrongType()
{
    service.SetCachedItem(CacheKey.Cache1, "1", 1);
    service.GetCachedItem<string, string>(CacheKey.Cache1, "1");
}

Conclusion

Ideally, the point of writing an article like this is so that readers can tell me why they wouldn't use this implementation and why perhaps MemoryCache is still a better solution, even given my reduced requirements. To review my lightweight requirements:

- I need several caches to manage objects of different types

- and I want type safety

- and I don't want numerous discrete instances but rather a container for all caches.

- The caches will not be large.

- The caches should exist throughout the lifetime of the application unless explicitly cleared.

- There is no need for eviction/removal policies because these policies cannot be determined by the application.

- The keyed cache collection and the caches it manages must be thread safe.

Certainly, there are arguments that can be made regarding this implementation:

- What's wrong with having discrete caches for each type?

- What's wrong with casting or as-ing the object returned by MemoryCache to the required type?

- What's wrong with using the extension method noted in the References below?

- What's wrong with disposing of the cache and recreating it to clear the cache contents?

- What's wrong with using MemoryCache?

The answer is, nothing really! My answers to these five questions, and the reasons for the code I presented here, are:

- I don't want discrete caches.

- I don't want to use a cast or as, though I do like Cynthia's solution, linked and posted in the References.

- Not being able to clear a cache without disposing of and recreating it does bother me.

- I don't need the expiration/eviction policies, and I'm only managing small sets of cached data.

I look forward to your feedback!

References

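Cynthia's AddOrGetExisting extension method for ObjectCache:

using System;
using System.Runtime.Caching;

// The class name is illustrative: an extension method must be declared in a static class.
public static class ObjectCacheExtensions
{
    public static T AddOrGetExisting<T>(this ObjectCache cache, string key, Func<(T item, CacheItemPolicy policy)> addFunc)
    {
        object cachedItem = cache.Get(key);

        if (cachedItem is T t)
            return t;

        (T item, CacheItemPolicy policy) = addFunc();
        cache.Add(key, item, policy);
        return item;
    }
}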

History

- 2nd April, 2023: Initial version