Just hook the library up to your platform's audio subsystem by implementing a few callbacks and enjoy simple polyphonic audio capabilities.

Introduction

I originally wrote htcw_sfx to handle audio for IoT. I made it very modular, but unfortunately that made it more complicated to use than I'd like.

To address that, I've written a simple class for playing audio on an IoT device that has an audio subsystem, such as I2S hardware.

Understanding this Mess

The player keeps a linked list of voices, along with each voice's state. It has generator functions for the different types of voices: wav files, and sine, square, sawtooth and triangle waves.

Each time it updates, it runs each of these functions in turn over a shared buffer. Each function adds its output to the values already in the buffer, which effectively mixes the voices. The same mechanism could be used to create filters, but I haven't implemented any yet.

Once the buffer has been filled, the player calls the flush callback to send it to the platform-specific audio layer.
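The cycle described above (clear the buffer, let each voice add into it, then flush) can be modeled in a few lines. This is a simplified sketch rather than the library's code: it assumes a single channel of unsigned 16-bit samples, and `demo_voice`, `demo_mix`, `demo_constant` and `demo_flush_fn` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical voice: a generator function plus its private state,
// chained into a singly linked list like the player's voices.
struct demo_voice {
    void (*fn)(uint16_t* buf, size_t frames, void* state);
    void* state;
    demo_voice* next;
};

typedef void (*demo_flush_fn)(const void* buf, size_t bytes, void* state);

// Zero the buffer, let every voice add into it, then hand it to the
// platform layer. Returns false when there was nothing to play.
bool demo_mix(demo_voice* first, uint16_t* buf, size_t frames,
              demo_flush_fn flush, void* flush_state) {
    memset(buf, 0, frames * sizeof(uint16_t));
    bool any = false;
    for (demo_voice* v = first; v != nullptr; v = v->next) {
        v->fn(buf, frames, v->state);
        any = true;
    }
    if (any && flush != nullptr) {
        flush(buf, frames * sizeof(uint16_t), flush_state);
    }
    return any;
}

// Example generator: adds a constant level, clamping at 65535 so that
// summed voices saturate instead of wrapping around.
void demo_constant(uint16_t* buf, size_t frames, void* state) {
    uint32_t level = *(const uint16_t*)state;
    for (size_t i = 0; i < frames; ++i) {
        uint32_t sum = buf[i] + level;
        buf[i] = (uint16_t)(sum > 65535u ? 65535u : sum);
    }
}
```

Clamping makes an overloaded mix saturate at the maximum value instead of wrapping around to a small one, which would be heard as a loud click.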

Using this Mess

The player itself is simple to use, but this walkthrough targets the ESP32 line. The included code works with an M5Stack Fire, an M5Stack Core2, or an AI-Thinker ESP32 Audio Kit 2.2. If you have a different device, you'll have to modify the project, but the player code itself will be pretty much the same regardless.

Include it and declare it:

```cpp
#include <player.hpp>

// 44.1kHz, 2 channels (stereo), 16 bits per sample
player sound(44100,2,16);
```

In your application's setup code, initialize it.

```cpp
if(!sound.initialize()) {
    printf("Sound initialization failure.\n");
    while(1);
}
```

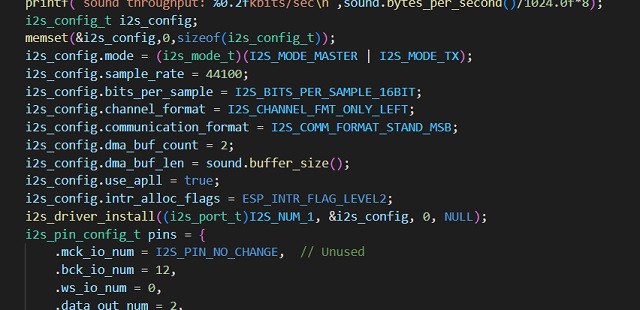

Next is where I like to initialize the platform specific audio layer. In this case, we'll be using the AI-Thinker ESP32 Audio Kit 2.2 as an example:

```cpp
i2s_config_t i2s_config;
memset(&i2s_config,0,sizeof(i2s_config_t));
// transmit-only I2S master
i2s_config.mode = (i2s_mode_t)(I2S_MODE_MASTER | I2S_MODE_TX);
i2s_config.sample_rate = 44100;
i2s_config.bits_per_sample = I2S_BITS_PER_SAMPLE_16BIT;
i2s_config.channel_format = I2S_CHANNEL_FMT_RIGHT_LEFT;
i2s_config.communication_format = I2S_COMM_FORMAT_STAND_MSB;
i2s_config.dma_buf_count = 2;
i2s_config.dma_buf_len = sound.buffer_size();
i2s_config.use_apll = true;
i2s_config.intr_alloc_flags = ESP_INTR_FLAG_LEVEL2;
i2s_driver_install((i2s_port_t)I2S_NUM_1, &i2s_config, 0, NULL);
// pin assignments for the AI-Thinker ESP32 Audio Kit 2.2
i2s_pin_config_t pins = {
    .mck_io_num = 0,
    .bck_io_num = 27,
    .ws_io_num = 26,
    .data_out_num = 25,
    .data_in_num = I2S_PIN_NO_CHANGE};
i2s_set_pin((i2s_port_t)I2S_NUM_1,&pins);
```

Next, set the callbacks. We need two in this case:

```cpp
sound.on_flush([](const void* buffer,size_t buffer_size,void* state){
    // portMAX_DELAY: wait as long as needed for DMA buffer space
    size_t written;
    i2s_write(I2S_NUM_1,buffer,buffer_size,&written,portMAX_DELAY);
});
sound.on_sound_disable([](void* state) {
    // silence the output when nothing is playing
    i2s_zero_dma_buffer(I2S_NUM_1);
});
```

This is all platform and hardware specific, but will look similar across different ESP32 MCUs. Note we use the sound library to compute the DMA buffer size.

Our two callbacks are straightforward: on_flush writes data to the I2S port. on_sound_disable zeroes out the I2S DMA buffer, silencing the output.

Now let's play a sine wave at 440Hz, 40% amplitude:

```cpp
voice_handle_t sin_handle = sound.sin(0,440,.4);
```

The first argument is the "port", an arbitrary numeric identifier that indicates which pipeline the sound will play on. If you don't need pipelines (which you usually won't), you can simply use 0. Ports are useful for grouping sounds together when you need to apply a filter to one set of sounds but not to others. All sounds with the same port identifier are on the same pipeline.

The second argument is the frequency, in Hz.

The third argument is the amplitude which is scaled between 0 and 1.

The return value is a handle that can be used to refer to the voice later, for example to stop it from playing after a bit.

Playing a wav file works similarly:

```cpp
voice_handle_t wav_handle = sound.wav(0,read_demo,nullptr,.4,true,seek_demo,nullptr);
```

The first argument is the port.

The second argument is the callback to read the next byte of the wav data.

The third argument is the state passed to the read callback.

The fourth argument is the amplitude modifier, indicating 40% in this case.

The fifth argument indicates if the sound is to loop.

The sixth argument is the seek callback (only used if looping).

The seventh argument is the state for the seek callback.

Let's take a look at those callback implementations:

```cpp
// test_data[] is the embedded wav file from test.hpp
size_t test_len = sizeof(test_data);
size_t test_pos = 0;

int read_demo(void* state) {
    if(test_pos>=test_len) {
        return -1; // end of stream
    }
    return test_data[test_pos++];
}

void seek_demo(unsigned long long pos, void* state) {
    test_pos = pos;
}
```

These are pretty straightforward. test_data[] comes from test.hpp and contains our wav data.

read_demo() returns the next value in test_data[] and increments the position (test_pos), or returns -1 if it is already at the end.

seek_demo() sets the position.
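The demo callbacks keep their position in globals, which limits you to one stream at a time. Since both callbacks receive a `state` pointer, a variant can carry the position along with the data instead. This is a sketch: `mem_stream`, `mem_read` and `mem_seek` are hypothetical names, and the signatures simply match `on_read_stream_callback` and `on_seek_stream_callback` from player.hpp.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical in-memory stream: the data plus a read cursor.
struct mem_stream {
    const uint8_t* data;
    size_t length;
    size_t pos;
};

// Matches on_read_stream_callback: the next byte (0-255), or -1 at the end.
// Reading through uint8_t keeps bytes >= 0x80 from turning negative.
int mem_read(void* state) {
    mem_stream* s = (mem_stream*)state;
    if (s->pos >= s->length) {
        return -1;
    }
    return s->data[s->pos++];
}

// Matches on_seek_stream_callback: moves the cursor, clamped to the end.
void mem_seek(unsigned long long pos, void* state) {
    mem_stream* s = (mem_stream*)state;
    s->pos = pos > s->length ? s->length : (size_t)pos;
}
```

You would then pass `mem_read` and `mem_seek`, each with the address of the same `mem_stream` as its state, to `wav()` in place of `read_demo`, `seek_demo` and their `nullptr` states.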

If we wanted to stop the wav file from playing, we could call:

```cpp
sound.stop(wav_handle);
```

If we wanted to stop all sounds:

```cpp
sound.stop();
```

You can also stop all sounds on a particular port by passing the port number to stop_port():

```cpp
sound.stop_port(0);
```

Nothing actually plays until you pump the player by calling update() repeatedly, for example from your main loop:

```cpp
sound.update();
```
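How often update() has to run follows from the constructor arguments. Each call renders `frame_count` frames (256 by default, per the constructor declaration in player.hpp), and the flushed buffer holds frames × channels × bytes per sample, the same expression initialize() uses to allocate it. The helper names below are hypothetical; this is just the arithmetic.

```cpp
#include <cassert>
#include <cstddef>

// Bytes handed to the flush callback per update().
size_t demo_buffer_bytes(size_t frame_count, unsigned channels, unsigned bit_depth) {
    return frame_count * channels * (bit_depth / 8);
}

// Minimum update() calls per second needed to keep the output fed.
double demo_updates_per_second(unsigned sample_rate, size_t frame_count) {
    return (double)sample_rate / (double)frame_count;
}

// How long one buffer lasts at the output, in milliseconds.
double demo_buffer_ms(unsigned sample_rate, size_t frame_count) {
    return 1000.0 * (double)frame_count / (double)sample_rate;
}
```

For the 44.1kHz, stereo, 16-bit player declared earlier, each buffer is 1024 bytes and lasts about 5.8 ms, so update() needs to run roughly 172 times per second. A flush callback that blocks on i2s_write() with portMAX_DELAY, as in the ESP32 example, should pace the loop for you.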

The above covers the basic functionality in broad strokes.

Coding this Mess

Now let's dive into how it was made. We'll cover player.cpp:

```cpp
#include <player.hpp>
#if __has_include(<Arduino.h>)
#include <Arduino.h>
#else
#include <inttypes.h>
#include <stddef.h>
#include <math.h>
#include <string.h>
#define PI (3.1415926535f)
#endif
```

This gets us our core includes and defines, which vary depending on whether Arduino is available (although the rest of the code is the same).

Now on to some private definitions we use in the implementation:

```cpp
constexpr static const float player_pi = PI;
constexpr static const float player_two_pi = player_pi*2.0f;

// one node in the voice list
typedef struct voice_info {
    unsigned short port;
    voice_function_t fn;
    void* fn_state;
    voice_info* next;
} voice_info_t;

// state for sine, square, sawtooth and triangle voices
typedef struct {
    float frequency;
    float amplitude;
    float phase;
    float phase_delta;
} waveform_info_t;

// state for wav voices
typedef struct wav_info {
    on_read_stream_callback on_read_stream;
    void* on_read_stream_state;
    on_seek_stream_callback on_seek_stream;
    void* on_seek_stream_state;
    float amplitude;
    bool loop;
    unsigned short channel_count;
    unsigned short bit_depth;
    unsigned long long start;
    unsigned long long length;
    unsigned long long pos;
} wav_info_t;
```

These structures indicate basic info about each voice, and the specific state for each type of voice.

Next come some helper functions for reading through the read callback from earlier. The callback returns one unsigned 8-bit value at a time (or -1 at the end of the stream), so these helpers build multibyte and signed values from it. I'll spare you the implementations since they aren't particularly important or complicated.

```cpp
static bool player_read32(on_read_stream_callback on_read_stream,
                          void* on_read_stream_state,
                          uint32_t* out) {...}
static bool player_read16(on_read_stream_callback on_read_stream,
                          void* on_read_stream_state,
                          uint16_t* out) {...}
static bool player_read8s(on_read_stream_callback on_read_stream,
                          void* on_read_stream_state,
                          int8_t* out) {...}
static bool player_read16s(on_read_stream_callback on_read_stream,
                           void* on_read_stream_state,
                           int16_t* out) {...}
static bool player_read_fourcc(on_read_stream_callback on_read_stream,
                               void* on_read_stream_state,
                               char* buf) {...}
```

The last function reads a "fourCC" value, a four-character identifier such as "RIFF" or "WAVE". These are commonly used as file format signatures, and wav files also use them to identify each chunk within the file.

Next we have the voice functions, which render voice data into a provided buffer in the specified format. The simple waveforms all use the following form. I'll show the full implementation once and omit it for the other functions, since their code is almost the same:
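The helper bodies are omitted above. WAV headers store multibyte integers little-endian, so a plausible implementation assembles them low byte first from the byte callback. The `demo_` names and this structure are assumptions, not the library's actual code.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

typedef int (*demo_read_cb)(void* state);  // same shape as on_read_stream_callback

// Little-endian 16-bit value; fails if the stream ends early.
bool demo_read16(demo_read_cb rd, void* st, uint16_t* out) {
    int lo = rd(st);
    if (lo < 0) return false;
    int hi = rd(st);
    if (hi < 0) return false;
    *out = (uint16_t)(lo | (hi << 8));
    return true;
}

// Little-endian 32-bit value, built from two 16-bit halves.
bool demo_read32(demo_read_cb rd, void* st, uint32_t* out) {
    uint16_t lo, hi;
    if (!demo_read16(rd, st, &lo) || !demo_read16(rd, st, &hi)) return false;
    *out = (uint32_t)lo | ((uint32_t)hi << 16);
    return true;
}

// Signed 16-bit sample: the same bytes, reinterpreted as two's complement.
bool demo_read16s(demo_read_cb rd, void* st, int16_t* out) {
    uint16_t u;
    if (!demo_read16(rd, st, &u)) return false;
    *out = (int16_t)u;
    return true;
}

// Four raw characters such as "RIFF" or "fmt " (not NUL-terminated).
bool demo_read_fourcc(demo_read_cb rd, void* st, char* buf) {
    for (int i = 0; i < 4; ++i) {
        int c = rd(st);
        if (c < 0) return false;
        buf[i] = (char)c;
    }
    return true;
}
```

Every helper reports failure rather than returning a partial value, which is what lets the header parser bail out cleanly on a truncated file.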

```cpp
static void sin_voice(const voice_function_info_t& info, void*state) {
    waveform_info_t* wi = (waveform_info_t*)state;
    for(size_t i = 0;i<info.frame_count;++i) {
        // map sin() from -1..1 into 0..1
        float f = (sinf(wi->phase) + 1.0f) * 0.5f;
        wi->phase+=wi->phase_delta;
        if(wi->phase>=player_two_pi) {
            wi->phase-=player_two_pi;
        }
        uint32_t samp = (uint32_t)roundf((f*wi->amplitude)*info.sample_max);
        switch(info.bit_depth) {
            case 8: {
                uint8_t* p = ((uint8_t*)info.buffer)+(i*info.channel_count);
                // add to each channel's existing value, clamping at the maximum
                for(unsigned int j = 0;j<info.channel_count;++j) {
                    uint32_t tmp = p[j]+samp;
                    if(tmp>info.sample_max) {
                        tmp = info.sample_max;
                    }
                    p[j]=(uint8_t)tmp;
                }
            }
            break;
            case 16: {
                uint16_t* p = ((uint16_t*)info.buffer)+(i*info.channel_count);
                for(unsigned int j = 0;j<info.channel_count;++j) {
                    uint32_t tmp = p[j]+samp;
                    if(tmp>info.sample_max) {
                        tmp = info.sample_max;
                    }
                    p[j]=(uint16_t)tmp;
                }
            }
            break;
            default:
            break;
        }
    }
}

static void sqr_voice(const voice_function_info_t& info, void*state) {...}
static void saw_voice(const voice_function_info_t& info, void*state) {...}
static void tri_voice(const voice_function_info_t& info, void*state) {...}
```

What we're doing above is computing a sine wave into f and then writing it out in the specified format to the supplied buffer. Currently, only 8 and 16 bit output is supported with these functions.
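The square, sawtooth and triangle bodies are omitted above. Assuming they follow the same pattern as sin_voice(), only the line that computes `f` changes: each maps the phase (0 to 2π) to a value between 0 and 1 before it is scaled by the amplitude and `sample_max`. These formulas are a sketch of one reasonable choice, not necessarily the author's, and the `demo_` names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

constexpr float demo_two_pi = 6.28318530718f;

// Square: high for the first half of the cycle, low for the second.
float demo_sqr(float phase) {
    return phase < demo_two_pi * 0.5f ? 1.0f : 0.0f;
}

// Sawtooth: ramps linearly from 0 to 1 over one cycle.
float demo_saw(float phase) {
    return phase / demo_two_pi;
}

// Triangle: rises from 0 to 1 over the first half, falls back over the second.
float demo_tri(float phase) {
    float t = phase / demo_two_pi;  // 0..1 through the cycle
    return t < 0.5f ? t * 2.0f : 2.0f - t * 2.0f;
}

// Sine, as in sin_voice(): shifted and scaled from -1..1 into 0..1.
float demo_sin(float phase) {
    return (std::sin(phase) + 1.0f) * 0.5f;
}
```

Keeping every shape in the 0 to 1 range is what lets all four share the same scaling and clamping code.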

We also have voice functions for wav files. To keep things fast and easy to implement, we have one function for each combination of wav and output format we support. I've provided one implementation and omitted the rest, like I did above:

```cpp
static void wav_voice_16_2_to_16_2(const voice_function_info_t& info, void*state) {
    wav_info_t* wi = (wav_info_t*)state;
    if(!wi->loop&&wi->pos>=wi->length) {
        return; // finished playing
    }
    uint16_t* dst = (uint16_t*)info.buffer;
    for(size_t i = 0;i<info.frame_count;++i) {
        int16_t i16;
        if(wi->pos>=wi->length) {
            if(!wi->loop) {
                break;
            }
            // rewind to the start of the sample data
            wi->on_seek_stream(wi->start,wi->on_seek_stream_state);
            wi->pos = 0;
        }
        for(unsigned int j=0;j<info.channel_count;++j) {
            if(!player_read16s(wi->on_read_stream,wi->on_read_stream_state,&i16)) {
                // the stream ended early: treat the data as finished
                wi->pos = wi->length;
                return;
            }
            wi->pos+=2;
            // signed to unsigned: offset by half the 16-bit range
            *dst+=(uint16_t)(((i16*wi->amplitude)+32768U));
            ++dst;
        }
    }
}

static void wav_voice_16_2_to_8_1(const voice_function_info_t& info, void*state) {...}
static void wav_voice_16_1_to_16_2(const voice_function_info_t& info, void*state) {...}
static void wav_voice_16_2_to_16_1(const voice_function_info_t& info, void*state) {...}
static void wav_voice_16_1_to_16_1(const voice_function_info_t& info, void*state) {...}
static void wav_voice_16_1_to_8_1(const voice_function_info_t& info, void*state) {...}
```

These functions use the read and seek callbacks to read through wav data and add it to the provided buffer.

Note that wav data is signed, but our internal data is unsigned.
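Converting a signed wav sample to the player's unsigned format is an offset by 32768, half the 16-bit range. The omitted variants also have to reduce the channel count or bit depth. A plausible approach, assumed here rather than taken from the source, is to average the two channels for mono output and keep the high byte for 8-bit output. The `demo_` names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Signed 16-bit (-32768..32767) to unsigned 16-bit (0..65535), scaled by amplitude.
uint16_t demo_s16_to_u16(int16_t s, float amplitude) {
    return (uint16_t)((int32_t)(s * amplitude) + 32768);
}

// Stereo to mono: average in a wider type so the sum can't overflow.
int16_t demo_stereo_to_mono(int16_t left, int16_t right) {
    return (int16_t)(((int32_t)left + (int32_t)right) / 2);
}

// Unsigned 16-bit to unsigned 8-bit: keep the most significant byte.
uint8_t demo_u16_to_u8(uint16_t u) {
    return (uint8_t)(u >> 8);
}
```

Under these assumptions, a 16-bit stereo to 8-bit mono routine would apply all three in order: average, offset, then shift.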

Next is our first linked list function - the one to add a voice:

```cpp
static voice_handle_t player_add_voice(unsigned short port,
                                       voice_handle_t* in_out_first,
                                       voice_function_t fn,
                                       void* fn_state,
                                       void*(allocator)(size_t)) {
    voice_info_t* pnew;
    if(*in_out_first==nullptr) {
        // empty list: the new voice becomes the head
        pnew = (voice_info_t*)allocator(sizeof(voice_info_t));
        if(pnew==nullptr) {
            return nullptr;
        }
        pnew->port = port;
        pnew->next = nullptr;
        pnew->fn = fn;
        pnew->fn_state = fn_state;
        *in_out_first = pnew;
        return pnew;
    }
    voice_info_t* v = (voice_info_t*)*in_out_first;
    if(v->port>port) {
        // lower port than the head: insert at the front
        pnew = (voice_info_t*)allocator(sizeof(voice_info_t));
        if(pnew==nullptr) {
            return nullptr;
        }
        pnew->port = port;
        pnew->next = v;
        pnew->fn = fn;
        pnew->fn_state = fn_state;
        *in_out_first = pnew;
        return pnew;
    }
    // find the last voice whose port is <= ours and insert after it
    while(v->next!=nullptr && v->next->port<=port) {
        v=v->next;
    }
    voice_info_t* vnext = v->next;
    pnew = (voice_info_t*)allocator(sizeof(voice_info_t));
    if(pnew==nullptr) {
        return nullptr;
    }
    pnew->port = port;
    pnew->next = vnext;
    pnew->fn = fn;
    pnew->fn_state = fn_state;
    v->next = pnew;
    // the handle is the new node, not the one we inserted after
    return pnew;
}
```

This takes a port (which I touched on briefly), a pointer to the handle of the first element in the list (which may be changed), the voice function for the new voice, the state for that function, and the allocator to use for memory, which allows for custom heaps.

From there, we simply do a sorted insert on port. This code should look straightforward to anyone who has implemented a linked list. Note that our function state must have already been allocated before this routine is called.
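The three branches in player_add_voice() (empty list, new head, insert after a node) can be folded into one by walking a pointer to the link that will change. This is an alternative sketch, not the library's code; `demo_node`, `demo_insert_sorted` and `demo_free_all` are hypothetical. Like the original, it places a new voice after any existing voices on the same port, so voices on a port run in the order they were added.

```cpp
#include <cassert>
#include <cstdlib>

struct demo_node {
    unsigned short port;
    demo_node* next;
};

// Sorted insert by port. `link` always points at the pointer we may rewrite:
// first the list head, then each node's next field.
demo_node* demo_insert_sorted(demo_node** head, unsigned short port) {
    demo_node** link = head;
    while (*link != nullptr && (*link)->port <= port) {
        link = &(*link)->next;
    }
    demo_node* n = (demo_node*)std::malloc(sizeof(demo_node));
    if (n == nullptr) {
        return nullptr;
    }
    n->port = port;
    n->next = *link;
    *link = n;
    return n;  // the new node serves as the handle
}

void demo_free_all(demo_node* head) {
    while (head != nullptr) {
        demo_node* next = head->next;
        std::free(head);
        head = next;
    }
}
```

Because `link` can point at the head pointer itself, inserting at the front needs no special case.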

Now the counterpart, to remove a voice:

```cpp
static bool player_remove_voice(voice_handle_t* in_out_first,
                                voice_handle_t handle,
                                void(deallocator)(void*)) {
    voice_info_t** pv = (voice_info_t**)in_out_first;
    voice_info_t* v = *pv;
    if(v==nullptr) {return false;}
    if(handle==v) {
        // removing the head of the list
        *pv = v->next;
        if(v->fn_state!=nullptr) {
            deallocator(v->fn_state);
        }
        deallocator(v);
        return true;
    }
    // find the node before the one to remove
    while(v->next!=nullptr && v->next!=handle) {
        v=v->next;
    }
    if(v->next==nullptr) {
        return false; // not in the list
    }
    voice_info_t* to_free = v->next;
    v->next = to_free->next;
    if(to_free->fn_state!=nullptr) {
        deallocator(to_free->fn_state);
    }
    deallocator(to_free);
    return true;
}
```

This again, should be straightforward to anyone who has implemented a linked list. The one thing we're doing extra here is freeing fn_state.

The following function is sort of a corollary to the previous one and allows you to remove all the voices on a particular port:

```cpp
static bool player_remove_port(voice_handle_t* in_out_first,
                               unsigned short port,
                               void(deallocator)(void*)) {
    voice_info_t* first = (voice_info_t*)(*in_out_first);
    voice_info_t* before = nullptr;
    // the list is sorted by port, so skip past lower ports
    while(first!=nullptr && first->port<port) {
        before = first;
        first = first->next;
    }
    if(first==nullptr || first->port>port) {
        return false; // nothing on this port
    }
    // free every voice on the port, starting with the first match
    voice_info_t* after = first;
    while(after!=nullptr && after->port==port) {
        void* to_free = after;
        if(after->fn_state!=nullptr) {
            deallocator(after->fn_state);
        }
        after=after->next;
        deallocator(to_free);
    }
    // link the remaining voices back together
    if(before!=nullptr) {
        before->next = after;
    } else {
        *in_out_first = after;
    }
    return true;
}
```

Now we finally get into the player class implementation itself, so let's switch to player.hpp for a moment to look at the definitions:

```cpp
typedef struct voice_function_info {
    void* buffer;
    size_t frame_count;
    unsigned int channel_count;
    unsigned int bit_depth;
    unsigned int sample_max;
} voice_function_info_t;

typedef void (*voice_function_t)(const voice_function_info_t& info, void* state);
typedef void* voice_handle_t;
typedef void (*on_sound_disable_callback)(void* state);
typedef void (*on_sound_enable_callback)(void* state);
typedef void (*on_flush_callback)(const void* buffer, size_t buffer_size, void* state);
typedef int (*on_read_stream_callback)(void* state);
typedef void (*on_seek_stream_callback)(unsigned long long pos, void* state);

class player final {
    voice_handle_t m_first;
    void* m_buffer;
    size_t m_frame_count;
    unsigned int m_sample_rate;
    unsigned int m_channel_count;
    unsigned int m_bit_depth;
    unsigned int m_sample_max;
    bool m_sound_enabled;
    on_sound_disable_callback m_on_sound_disable_cb;
    void* m_on_sound_disable_state;
    on_sound_enable_callback m_on_sound_enable_cb;
    void* m_on_sound_enable_state;
    on_flush_callback m_on_flush_cb;
    void* m_on_flush_state;
    void*(*m_allocator)(size_t);
    void*(*m_reallocator)(void*,size_t);
    void(*m_deallocator)(void*);
    player(const player& rhs)=delete;
    player& operator=(const player& rhs)=delete;
    void do_move(player& rhs);
    bool realloc_buffer();
public:
    player(unsigned int sample_rate = 44100,
           unsigned short channels = 2,
           unsigned short bit_depth = 16,
           size_t frame_count = 256,
           void*(allocator)(size_t)=::malloc,
           void*(reallocator)(void*,size_t)=::realloc,
           void(deallocator)(void*)=::free);
    player(player&& rhs);
    ~player();
    player& operator=(player&& rhs);
    bool initialized() const;
    bool initialize();
    void deinitialize();
    voice_handle_t sin(unsigned short port, float frequency, float amplitude = .8);
    voice_handle_t sqr(unsigned short port, float frequency, float amplitude = .8);
    voice_handle_t saw(unsigned short port, float frequency, float amplitude = .8);
    voice_handle_t tri(unsigned short port, float frequency, float amplitude = .8);
    voice_handle_t wav(unsigned short port,
                       on_read_stream_callback on_read_stream,
                       void* on_read_stream_state,
                       float amplitude = .8,
                       bool loop = false,
                       on_seek_stream_callback on_seek_stream = nullptr,
                       void* on_seek_stream_state=nullptr);
    voice_handle_t voice(unsigned short port,
                         voice_function_t fn,
                         void* state = nullptr);
    bool stop(voice_handle_t handle = nullptr);
    bool stop(unsigned short port);
    void on_sound_disable(on_sound_disable_callback cb, void* state=nullptr);
    void on_sound_enable(on_sound_enable_callback cb, void* state=nullptr);
    void on_flush(on_flush_callback cb, void* state=nullptr);
    size_t frame_count() const;
    bool frame_count(size_t value);
    unsigned int sample_rate() const;
    bool sample_rate(unsigned int value);
    unsigned short channel_count() const;
    bool channel_count(unsigned short value);
    unsigned short bit_depth() const;
    bool bit_depth(unsigned short value);
    size_t buffer_size() const;
    size_t bytes_per_second() {
        return m_sample_rate*m_channel_count*(m_bit_depth/8);
    }
    void update();
    template<typename T>
    T* allocate_voice_state() const {
        return (T*)m_allocator(sizeof(T));
    }
};
```

Now let's dive back into the implementation, starting with some boilerplate:

```cpp
void player::do_move(player& rhs) {
    m_first = rhs.m_first;
    rhs.m_first = nullptr;
    m_buffer = rhs.m_buffer;
    rhs.m_buffer = nullptr;
    m_frame_count = rhs.m_frame_count;
    rhs.m_frame_count = 0;
    m_sample_rate = rhs.m_sample_rate;
    m_channel_count = rhs.m_channel_count;
    m_bit_depth = rhs.m_bit_depth;
    m_sample_max = rhs.m_sample_max;
    m_sound_enabled = rhs.m_sound_enabled;
    m_on_sound_disable_cb = rhs.m_on_sound_disable_cb;
    rhs.m_on_sound_disable_cb = nullptr;
    m_on_sound_disable_state = rhs.m_on_sound_disable_state;
    m_on_sound_enable_cb = rhs.m_on_sound_enable_cb;
    rhs.m_on_sound_enable_cb = nullptr;
    m_on_sound_enable_state = rhs.m_on_sound_enable_state;
    m_on_flush_cb = rhs.m_on_flush_cb;
    rhs.m_on_flush_cb = nullptr;
    m_on_flush_state = rhs.m_on_flush_state;
    m_allocator = rhs.m_allocator;
    m_reallocator = rhs.m_reallocator;
    m_deallocator = rhs.m_deallocator;
}
```

This function implements the core of C++ move semantics. I don't provide a copy constructor or copy assignment operator, because copying a player would mean duplicating its buffer, its voice list, and each voice's state, and that state may hold callbacks and pointers that can't safely be shared.

Next we have the constructor and destructor. The constructor doesn't really do anything except assign or set all of the members to their initial values, while the destructor calls deinitialize().

```cpp
player::player(unsigned int sample_rate,
               unsigned short channel_count,
               unsigned short bit_depth,
               size_t frame_count,
               void*(allocator)(size_t),
               void*(reallocator)(void*,size_t),
               void(deallocator)(void*)) :
        m_first(nullptr),
        m_buffer(nullptr),
        m_frame_count(frame_count),
        m_sample_rate(sample_rate),
        m_channel_count(channel_count),
        m_bit_depth(bit_depth),
        m_sample_max(0),
        m_sound_enabled(false),
        m_on_sound_disable_cb(nullptr),
        m_on_sound_disable_state(nullptr),
        m_on_sound_enable_cb(nullptr),
        m_on_sound_enable_state(nullptr),
        m_on_flush_cb(nullptr),
        m_on_flush_state(nullptr),
        m_allocator(allocator),
        m_reallocator(reallocator),
        m_deallocator(deallocator)
{
}

player::~player() {
    deinitialize();
}
```

The next two definitions implement move semantics by delegating to do_move():

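```cpp
player::player(player&& rhs) {
    do_move(rhs);
}

player& player::operator=(player&& rhs) {
    if(this!=&rhs) {
        // release our own buffer and voices before taking over rhs's
        deinitialize();
        do_move(rhs);
    }
    return *this;
}
```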

The next group of methods handle initialization and deinitialization:

```cpp
bool player::initialized() const { return m_buffer!=nullptr;}

bool player::initialize() {
    if(m_buffer!=nullptr) {
        return true; // already initialized
    }
    m_buffer=m_allocator(m_frame_count*m_channel_count*(m_bit_depth/8));
    if(m_buffer==nullptr) {
        return false;
    }
    m_sample_max = powf(2,m_bit_depth)-1;
    m_sound_enabled = false;
    return true;
}

void player::deinitialize() {
    if(m_buffer==nullptr) {
        return;
    }
    stop(); // frees every voice and its state
    m_deallocator(m_buffer);
    m_buffer = nullptr;
}
```

initialize() allocates a buffer to hold the frames and sets m_sample_max to the maximum value for the bit depth. It also sets the initial state of the sound to disabled.

deinitialize() stops any playing sound which also frees the memory for the voices. It then deallocates the buffer that holds the frames.

The next methods handle creating our waveform voices when you call the appropriate method. They all work pretty much the same way, so they each delegate to the same helper method to do most of the heavy lifting:

```cpp
static voice_handle_t player_waveform(unsigned short port,
                                      unsigned int sample_rate,
                                      voice_handle_t* in_out_first,
                                      voice_function_t fn,
                                      float frequency,
                                      float amplitude,
                                      void*(allocator)(size_t),
                                      void(deallocator)(void*)) {
    waveform_info_t* wi = (waveform_info_t*)allocator(sizeof(waveform_info_t));
    if(wi==nullptr) {
        return nullptr;
    }
    wi->frequency = frequency;
    wi->amplitude = amplitude;
    wi->phase = 0;
    // radians to advance per sample
    wi->phase_delta = player_two_pi*wi->frequency/(float)sample_rate;
    voice_handle_t result = player_add_voice(port, in_out_first,fn,wi,allocator);
    if(result==nullptr) {
        deallocator(wi); // don't leak the state if the voice couldn't be added
    }
    return result;
}

voice_handle_t player::sin(unsigned short port, float frequency, float amplitude) {
    return player_waveform(port,m_sample_rate,&m_first,sin_voice,
                           frequency,amplitude,m_allocator,m_deallocator);
}

voice_handle_t player::sqr(unsigned short port, float frequency, float amplitude) {
    return player_waveform(port,m_sample_rate,&m_first,sqr_voice,
                           frequency,amplitude,m_allocator,m_deallocator);
}

voice_handle_t player::saw(unsigned short port, float frequency, float amplitude) {
    return player_waveform(port,m_sample_rate,&m_first,saw_voice,
                           frequency,amplitude,m_allocator,m_deallocator);
}

voice_handle_t player::tri(unsigned short port, float frequency, float amplitude) {
    return player_waveform(port,m_sample_rate,&m_first,tri_voice,
                           frequency,amplitude,m_allocator,m_deallocator);
}
```
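The phase increment computed in player_waveform() is one sample's worth of rotation, so a full cycle takes f_s / f samples. For the 440Hz example at 44.1kHz:

```latex
\Delta\phi = \frac{2\pi f}{f_s} = \frac{2\pi \cdot 440}{44100} \approx 0.0627\ \text{rad/sample},
\qquad
\frac{2\pi}{\Delta\phi} = \frac{f_s}{f} = \frac{44100}{440} \approx 100.2\ \text{samples per cycle}
```

Because a cycle is not a whole number of samples, the phase crosses 2π at a slightly different point each time. That is why sin_voice() subtracts 2π instead of resetting the phase to 0: the leftover fraction is kept, so the pitch doesn't drift.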

Now onto wav files. We have to read the RIFF chunks out of the header to get our wav start and stop points within the file, and that's what most of this routine does:

```cpp
voice_handle_t player::wav(unsigned short port,
                           on_read_stream_callback on_read_stream,
                           void* on_read_stream_state,
                           float amplitude,
                           bool loop,
                           on_seek_stream_callback on_seek_stream,
                           void* on_seek_stream_state) {
    if(on_read_stream==nullptr) {
        return nullptr;
    }
    // looping requires seeking back to the start of the sample data
    if(loop && on_seek_stream==nullptr) {
        return nullptr;
    }
    unsigned int sample_rate=0;
    unsigned short channel_count=0;
    unsigned short bit_depth=0;
    unsigned long long start=0;
    unsigned long long length=0;
    uint32_t size;
    uint32_t remaining;
    uint32_t pos;
    uint32_t t32 = 0;
    uint16_t t16 = 0;
    char buf[4];
    // RIFF header: "RIFF", size, "WAVE"
    if(!player_read_fourcc(on_read_stream,on_read_stream_state,buf) ||
            0!=memcmp("RIFF",buf,4)) {
        return nullptr;
    }
    pos = 4;
    if(!player_read32(on_read_stream,on_read_stream_state,&t32)) {
        return nullptr;
    }
    size = t32;
    pos+=4;
    // the RIFF size counts every byte after the size field, including "WAVE"
    remaining = size;
    if(!player_read_fourcc(on_read_stream,on_read_stream_state,buf) ||
            0!=memcmp("WAVE",buf,4)) {
        return nullptr;
    }
    pos+=4;
    remaining-=4;
    // walk the chunks until we reach "data"
    while(remaining>=8) {
        if(!player_read_fourcc(on_read_stream,on_read_stream_state,buf) ||
                !player_read32(on_read_stream,on_read_stream_state,&t32)) {
            return nullptr;
        }
        pos+=8;
        remaining-=8;
        const uint32_t chunk_size = t32;
        if(0==memcmp("data",buf,4)) {
            // the stream is now positioned at the first sample
            length = chunk_size;
            start = pos;
            break;
        }
        // chunks are padded to an even number of bytes
        uint32_t to_skip = chunk_size+(chunk_size&1);
        if(0==memcmp("fmt ",buf,4)) {
            if(chunk_size<16) {
                return nullptr;
            }
            // format tag: 1 means uncompressed PCM
            if(!player_read16(on_read_stream,on_read_stream_state,&t16) || t16!=1) {
                return nullptr;
            }
            if(!player_read16(on_read_stream,on_read_stream_state,&t16)) {
                return nullptr;
            }
            channel_count = t16;
            if(channel_count<1 || channel_count>2) {
                return nullptr;
            }
            if(!player_read32(on_read_stream,on_read_stream_state,&t32)) {
                return nullptr;
            }
            sample_rate = t32;
            if(sample_rate!=this->sample_rate()) {
                return nullptr;
            }
            // byte rate (32 bits) and block align (16 bits) aren't needed
            if(!player_read32(on_read_stream,on_read_stream_state,&t32) ||
                    !player_read16(on_read_stream,on_read_stream_state,&t16)) {
                return nullptr;
            }
            if(!player_read16(on_read_stream,on_read_stream_state,&t16)) {
                return nullptr;
            }
            bit_depth = t16;
            pos+=16;
            remaining-=16;
            // any extension bytes past the basic 16 are skipped below
            to_skip-=16;
        }
        if(to_skip>remaining) {
            return nullptr; // malformed chunk size
        }
        while(to_skip--) {
            if(0>on_read_stream(on_read_stream_state)) {
                return nullptr;
            }
            ++pos;
            --remaining;
        }
    }
    // no "data" chunk, an empty one, or no "fmt " chunk before it
    if(length==0 || channel_count==0) {
        return nullptr;
    }
    // pick the routine for this wav format and output format combination
    voice_function_t fn = nullptr;
    if(bit_depth==16 && m_bit_depth==16 && m_channel_count==2) {
        fn = channel_count==2?wav_voice_16_2_to_16_2:wav_voice_16_1_to_16_2;
    } else if(bit_depth==16 && m_bit_depth==16 && m_channel_count==1) {
        fn = channel_count==2?wav_voice_16_2_to_16_1:wav_voice_16_1_to_16_1;
    } else if(bit_depth==16 && m_bit_depth==8 && m_channel_count==1) {
        fn = channel_count==2?wav_voice_16_2_to_8_1:wav_voice_16_1_to_8_1;
    }
    if(fn==nullptr) {
        return nullptr; // unsupported combination
    }
    wav_info_t* wi = (wav_info_t*)m_allocator(sizeof(wav_info_t));
    if(wi==nullptr) {
        return nullptr;
    }
    wi->on_read_stream = on_read_stream;
    wi->on_read_stream_state = on_read_stream_state;
    wi->on_seek_stream = on_seek_stream;
    wi->on_seek_stream_state = on_seek_stream_state;
    wi->amplitude = amplitude;
    wi->bit_depth = bit_depth;
    wi->channel_count = channel_count;
    wi->loop = loop;
    wi->start = start;
    wi->length = length;
    wi->pos = 0;
    voice_handle_t res = player_add_voice(port,&m_first,fn,wi,m_allocator);
    if(res==nullptr) {
        m_deallocator(wi);
    }
    return res;
}
```

Like I said, most of this reads the RIFF header information out of the wav file and then marks where the sample data starts and how long it is. Note that we use different functions for different wav and output format combinations. This was much more straightforward and more performant than one general-purpose routine.
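The header walk can be exercised in isolation. The sketch below parses a WAV header held in memory, skips unknown chunks along with the pad byte RIFF adds after odd-sized chunks, and reports where the sample data starts. It is a simplified stand-in for the callback-driven code in wav(); `demo_wav_info` and `demo_parse_wav` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

struct demo_wav_info {
    uint16_t channels;
    uint32_t sample_rate;
    uint16_t bit_depth;
    size_t data_start;     // byte offset of the first sample
    uint32_t data_length;  // size of the data chunk in bytes
};

static uint32_t demo_le32(const uint8_t* p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

static uint16_t demo_le16(const uint8_t* p) {
    return (uint16_t)(p[0] | (p[1] << 8));
}

// Returns false unless the buffer is a PCM WAV with "fmt " before "data".
bool demo_parse_wav(const uint8_t* buf, size_t len, demo_wav_info* out) {
    if (len < 12 || memcmp(buf, "RIFF", 4) != 0 || memcmp(buf + 8, "WAVE", 4) != 0) {
        return false;
    }
    bool have_fmt = false;
    size_t pos = 12;
    while (pos + 8 <= len) {
        const uint8_t* id = buf + pos;
        uint32_t size = demo_le32(buf + pos + 4);
        pos += 8;
        if (memcmp(id, "fmt ", 4) == 0) {
            if (size < 16 || pos + 16 > len || demo_le16(buf + pos) != 1) {
                return false;  // too short, truncated, or not PCM
            }
            out->channels = demo_le16(buf + pos + 2);
            out->sample_rate = demo_le32(buf + pos + 4);
            out->bit_depth = demo_le16(buf + pos + 14);
            have_fmt = true;
        } else if (memcmp(id, "data", 4) == 0) {
            out->data_start = pos;
            out->data_length = size;
            return have_fmt;
        }
        // Next chunk: skip this chunk's body plus the pad byte after odd sizes.
        pos += size + (size & 1);
    }
    return false;
}
```

With a 3-byte LIST chunk ahead of "fmt ", as in a file with metadata, the pad byte is what keeps the parser aligned on the next chunk ID.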

We're skipping to update() because the code before it is trivial:

```cpp
void player::update() {
    const size_t buffer_size = m_frame_count*m_channel_count*(m_bit_depth/8);
    voice_info_t* first = (voice_info_t*)m_first;
    bool has_voices = false;
    // describe the buffer for the voice functions
    voice_function_info_t vinf;
    vinf.buffer = m_buffer;
    vinf.frame_count = m_frame_count;
    vinf.channel_count = m_channel_count;
    vinf.bit_depth = m_bit_depth;
    vinf.sample_max = m_sample_max;
    voice_info_t* v = first;
    // start from silence; each voice adds its output
    memset(m_buffer,0,buffer_size);
    while(v!=nullptr) {
        has_voices = true;
        v->fn(vinf, v->fn_state);
        v=v->next;
    }
    if(has_voices) {
        if(!m_sound_enabled) {
            if(m_on_sound_enable_cb!=nullptr) {
                m_on_sound_enable_cb(m_on_sound_enable_state);
            }
            m_sound_enabled = true;
        }
    } else {
        if(m_sound_enabled) {
            if(m_on_sound_disable_cb!=nullptr) {
                m_on_sound_disable_cb(m_on_sound_disable_state);
            }
            m_sound_enabled = false;
        }
    }
    if(m_sound_enabled && m_on_flush_cb!=nullptr) {
        m_on_flush_cb(m_buffer, buffer_size, m_on_flush_state);
    }
}
```

Here we fill in a voice_function_info_t describing the buffer, clear the buffer, and then loop through the voices, letting each one add its data. While doing so, we keep track of whether there are any voices at all. If there are voices and sound is disabled, we enable it; if there are none and sound is enabled, we disable it. Finally, if sound is enabled, we call the flush callback to send the data to the sound hardware.

History

- 8th April, 2023 - Initial submission

- 9th April, 2023 - Bugfixes