If a multitouch screen is available, the user can play music with Microtonal Fabric applications with ten fingers, play chords, glissando, and use other techniques in all combinations. Microtonal Fabric uses a unified approach to handle the musical on-screen keyboards and more. The present article offers an easy-to-use yet comprehensive API suitable not only for Microtonal Fabric but also for a wide class of applications.

Contents

Introduction

The Problem: Keyboard Keys are not Like Buttons!

Microtonal Fabric Applications Using Multitouch Control

Implementation

Multitouch

Usage Examples

Abstract Keyboard

Using Extra Data

Compatibility

Conclusions

Introduction

This is the fourth article of the series dedicated to musical study with on-screen keyboards, including microtonal ones:

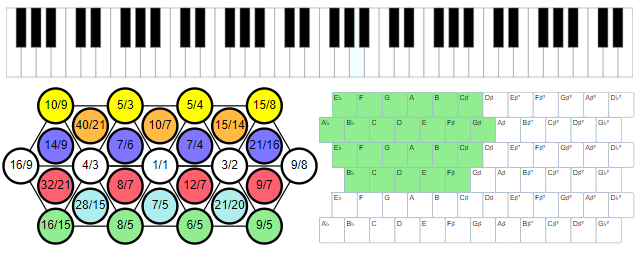

- Musical Study with Isomorphic Computer Keyboard

- Microtonal Music Study with Chromatic Lattice Keyboard

- Sound Builder, Web Audio Synthesizer

- Present article

The last three articles are devoted to the project named Microtonal Fabric, a microtonal music platform based on the Web Audio API. It is a framework for building universal or customized microtonal musical keyboard instruments, microtonal experiments and computing, music study, and teaching music lessons with possible remote options. The platform provides several applications executed in a Web browser using shared JavaScript components.

See also my page at the microtonal community Web site Xenharmonic Wiki. In addition to the Microtonal Fabric links, there are some useful links on different microtonal topics and personalities.

Most of the Microtonal Fabric applications allow the user to play music in a browser. If a multitouch screen is available, the user can play with ten fingers. The required features of the multitouch interface are not as trivial as they may seem at first glance. The present article explains the problem and the solution. It shows how the multitouch behavior is abstracted from the rest of the code, reused, and utilized by different types of on-screen keyboards.

In the approach discussed, the application of the present multitouch solution is not limited to musical keyboards. The view model of the keyboard looks like a collection of HTML or SVG elements with two states: “activated” (down) or “deactivated” (up). The states can be modified by the user or by software in many different ways. First, let’s consider the entire problem.

The Problem: Keyboard Keys are not Like Buttons!

So, why does the multitouch control of the keyboard present some problems? Well, mostly because inertia of thinking can lead us in the wrong direction.

The most common approach is to take the set of keys of a keyboard and attach an event handler to each one. It looks natural, but it cannot work.

Let’s see what playing with all ten fingers requires. On a screen, we have three kinds of area: 1) the area of a keyboard key, 2) the area of the keyboard not occupied by a key, and 3) the area outside the keyboard. When one or more fingers touch the screen inside the area of some key (case #1), a Touch object is created for each finger. A low-level touch event is invoked, but the semantic-level keyboard event that activates the key should be raised only if no other Touch object is already present in the area of the given key. Likewise, when a finger is removed from the screen, the semantic-level event that deactivates the key should be raised only if no other Touch objects remain in the area of the key.
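The activation rule above amounts to reference counting per key: the first touch entering the area of a key activates it, and the last touch leaving the area deactivates it. Here is a minimal, DOM-free sketch of that rule. It is not the Microtonal Fabric code; the class KeyTouchCounter and its callback onKeyEvent are hypothetical, and keys are represented by strings instead of HTML or SVG elements.

```javascript
// A minimal sketch of the "first touch in, last touch out" rule for one keyboard.
// Keys can be any values usable as Map keys (DOM elements, strings, ...).
class KeyTouchCounter {
    #counts = new Map(); // key -> number of touches currently inside its area

    constructor(onKeyEvent) {
        this.onKeyEvent = onKeyEvent; // hypothetical semantic-level callback: (key, on)
    }

    // A touch entered the area of the key (it started there or slid into it).
    enter(key) {
        const count = this.#counts.get(key) ?? 0;
        this.#counts.set(key, count + 1);
        if (count === 0) this.onKeyEvent(key, true); // first touch activates the key
    }

    // A touch left the area of the key (the finger was lifted or slid away).
    leave(key) {
        const count = this.#counts.get(key) ?? 0;
        if (count === 0) return; // the key was not held by any touch
        if (count > 1) {
            this.#counts.set(key, count - 1); // other touches still hold the key
            return;
        }
        this.#counts.delete(key);
        this.onKeyEvent(key, false); // last touch deactivates the key
    }
}

// Usage: two fingers on the same key produce exactly one "on" and one "off".
const counterLog = [];
const counter = new KeyTouchCounter((key, on) => counterLog.push(`${key}:${on ? "on" : "off"}`));
counter.enter("C4"); // first finger: C4 is activated
counter.enter("C4"); // second finger on the same key: no event
counter.leave("C4"); // one finger still holds the key: no event
counter.leave("C4"); // last finger lifted: C4 is deactivated
```

Where such counting lives is a design choice; it could, for example, wrap the semantic-level handler so that per-touch notifications are reduced to per-key activation changes.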

But this is not enough. The key activation can also change when a finger simply slides on the screen. When a sliding finger enters or leaves the area of some key, it can come from or move to any of the areas of types #1 to #3. Depending on the presence of other Touch objects in the area of the given key, this can also change the activation state of the key. Most typically, it happens when fingers slide across two or more keys, a technique known as glissando. And this is something that cannot be implemented when touch events are attached to each key separately.

Why? It’s easy to understand by comparison with pointer events. They include the events pointerleave and pointerout, which make perfect sense for a single pointer controlled by a mouse or a touchpad. However, there is nothing similar in touch events: a touch remains bound to the element where it started, and all subsequent touchmove and touchend events of this touch are dispatched to that element, even when the finger has moved onto another element. Keyboard keys do not behave like UI buttons at all. Despite the apparent similarity, they are totally different.

The only way to implement all the combinations of semantic-level multitouch events is to handle low-level touch events on some element containing all the keys. In the Microtonal Fabric code, this is the element representing the entire keyboard. Let’s see how it is implemented in the subsection Multitouch of the section Implementation.

Before looking at the implementation, the reader may want to look at the available Microtonal Fabric applications using multitouch control. For all the applications, live play is available. For each application, the live play URL can be found below.

Microtonal Fabric Applications Using Multitouch Control

Implementation

The idea is this: we need a separate unit abstracted from the set of UI elements representing the keyboard. We attach touch event handlers to the single HTML or SVG element representing the entire keyboard. The low-level touch events are then translated into semantic-level events that may or may not be related to the keyboard keys. To obtain the information on the keys from the user code, we use inversion of control: the unit does not know what a key is and asks the user code through callbacks.

The touch functionality is implemented by attaching the events to the element representing an entire keyboard with a single call to the function setMultiTouch. This function gets the key configuration information and invokes the semantic-level key events through three callback handlers. Let’s see how it works.
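The inversion of control described above can be illustrated with a DOM-free sketch. The tracking unit knows nothing about keys: it asks the user code whether an element is a key (elementSelector) and reports activation changes through a callback (elementHandler). This is a simplified illustration, not the Microtonal Fabric code. The function hitTest is a hypothetical stand-in for document.elementFromPoint, injected so that the logic can run outside a browser, and plain objects stand in for Touch objects and key elements.

```javascript
// DOM-free sketch of container-level touch tracking with inversion of control.
// hitTest(x, y) is a hypothetical stand-in for document.elementFromPoint.
const createTouchTracker = (hitTest, elementSelector, elementHandler) => {
    const activeByTouch = new Map(); // Touch.identifier -> key element held by that touch

    // The key under the touch, or null if the point is not over any key.
    const keyAt = touch => {
        const element = hitTest(touch.clientX, touch.clientY);
        return element != null && elementSelector(element) ? element : null;
    };

    // For touchstart and touchmove: compare the key under the touch with the held one.
    const update = touch => {
        const previous = activeByTouch.get(touch.identifier) ?? null;
        const current = keyAt(touch);
        if (previous === current) return; // same key, or still outside of keys
        if (previous !== null) elementHandler(previous, touch, false);
        if (current !== null) {
            activeByTouch.set(touch.identifier, current);
            elementHandler(current, touch, true);
        } else {
            activeByTouch.delete(touch.identifier);
        }
    };

    // For touchend: release the key held by the touch, if any.
    const release = touch => {
        const previous = activeByTouch.get(touch.identifier);
        if (previous === undefined) return;
        activeByTouch.delete(touch.identifier);
        elementHandler(previous, touch, false);
    };

    return { start: update, move: update, end: release };
};

// Usage: three 10-pixel-wide keys along x; any other point is not a key.
const keys = ["C4", "D4", "E4"].map(name => ({ isKey: true, name }));
const hitTest = x => (x >= 0 && x < 30 ? keys[Math.floor(x / 10)] : { isKey: false });
const glissandoLog = [];
const tracker = createTouchTracker(hitTest, element => element.isKey,
    (element, touch, on) => glissandoLog.push(`${element.name}:${on ? "on" : "off"}`));

// One finger slides across all three keys and is lifted outside of the keyboard.
tracker.start({ identifier: 1, clientX: 5, clientY: 0 });
tracker.move({ identifier: 1, clientX: 15, clientY: 0 });
tracker.move({ identifier: 1, clientX: 25, clientY: 0 });
tracker.move({ identifier: 1, clientX: 40, clientY: 0 });
tracker.end({ identifier: 1, clientX: 40, clientY: 0 });
// glissandoLog: C4:on, C4:off, D4:on, D4:off, E4:on, E4:off
```

The actual setMultiTouch differs in details. For example, it also remembers a non-key element under a touch, and it reports motion within the same key through a separate handler.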

Multitouch

The function setMultiTouch assumes the following UI model of the multitouch-sensitive area: a container HTML or SVG element containing one or more HTML or SVG child elements, which can be direct or indirect children.

The function accepts four arguments: container and three handlers.

- container: the HTML or SVG element handling multitouch events. If it is not specified, document is used.

- elementSelector: the handler used to select the relevant children of container. If it is not specified, setMultiTouch does nothing.

  Profile: element => bool

  If this handler returns false, the event is ignored. Essentially, the user code uses this handler to define which HTML or SVG elements are interpreted as the keys of the keyboard represented by container.

- elementHandler: the handler implementing the main keyboard functionality.

  Profile: (element, Touch touchObject, bool on, TouchEvent event) => undefined

  The handler implements the main functionality, for example, producing sounds in response to keyboard events. It accepts element, a Touch object, a Boolean on argument showing whether this is an “on” or “off” action, and the original TouchEvent. Typically, this handler calls a general semantic handler that can also be triggered in other ways, for example, through a computer keyboard or a mouse. In essence, it implements the action triggered when a keyboard key represented by element is activated or deactivated, depending on the value of on.

- sameElementHandler: the handler used to handle touch motion within the same element.

  Profile: (element, Touch touchObject, TouchEvent event) => undefined

“ui.components/multitouch.js”:

```javascript
"use strict";

const setMultiTouch = (
    container,
    elementSelector,
    elementHandler,
    sameElementHandler,
) => {

    if (!elementSelector)
        return;
    if (!container) container = document;

    // Non-passive listeners are required to call preventDefault(),
    // which suppresses scrolling and zooming on the keyboard.
    const assignEvent = (element, name, handler) => {
        element.addEventListener(name, handler,
            { passive: false, capture: true });
    };
    const assignTouchStart = (element, handler) => {
        assignEvent(element, "touchstart", handler);
    };
    const assignTouchMove = (element, handler) => {
        assignEvent(element, "touchmove", handler);
    };
    const assignTouchEnd = (element, handler) => {
        assignEvent(element, "touchend", handler);
    };

    // An element is treated as a key only if the user code selects it.
    const isGoodElement = element => element && elementSelector(element);

    // Touch.identifier => the element where this touch was found last time
    const elementDictionary = {};

    const addRemoveElement = (touch, element, doAdd, event) => {
        if (isGoodElement(element) && elementHandler)
            elementHandler(element, touch, doAdd, event);
        if (doAdd)
            elementDictionary[touch.identifier] = element;
        else
            delete elementDictionary[touch.identifier];
    };

    assignTouchStart(container, ev => {
        ev.preventDefault();
        // Several fingers can land in the same event, so every new touch is handled.
        for (let touch of ev.changedTouches) {
            const element =
                document.elementFromPoint(touch.clientX, touch.clientY);
            addRemoveElement(touch, element, true, ev);
        }
    });

    assignTouchMove(container, ev => {
        ev.preventDefault();
        for (let touch of ev.touches) {
            const element =
                document.elementFromPoint(touch.clientX, touch.clientY);
            const goodElement = isGoodElement(element);
            const touchElement = elementDictionary[touch.identifier];
            if (goodElement && touchElement) {
                if (element == touchElement) {
                    // The touch moved but stayed within the same key.
                    if (sameElementHandler)
                        sameElementHandler(element, touch, ev);
                    continue;
                }
                // The touch slid from one element onto another key.
                addRemoveElement(touch, touchElement, false, ev);
                addRemoveElement(touch, element, true, ev);
            } else if (goodElement) {
                // The touch entered a key from outside of any key.
                addRemoveElement(touch, element, true, ev);
            } else {
                // The touch is outside of any key: release the previous one, if any.
                addRemoveElement(touch, touchElement, false, ev);
            }
        }
    });

    assignTouchEnd(container, ev => {
        ev.preventDefault();
        for (let touch of ev.changedTouches) {
            const element =
                document.elementFromPoint(touch.clientX, touch.clientY);
            addRemoveElement(touch, element, false, ev);
        }
    });

};
```

The central point of the setMultiTouch implementation is the call to document.elementFromPoint. This way, the elements related to the Touch event data are found. When an element is found, it is checked whether it represents a keyboard key; the function isGoodElement does that using the handler elementSelector. If it does, the handler elementHandler or sameElementHandler is called, depending on the event data. These calls are used to handle the touch events "touchstart", "touchmove", and "touchend". Because the element under each touch is found anew on every "touchmove" event, and elementDictionary remembers, by Touch.identifier, the element where each touch was found previously, a finger sliding from key to key is tracked regardless of the element where the touch started.

Let’s see how setMultiTouch can be used by applications.

Usage Examples

A very typical usage example can be found in the application 29-EDO. It provides several keyboard layouts and two different tonal systems (29-EDO and a common-practice 12-EDO), but the keyboards reuse a lot of common code. For all the keyboard layouts, elementSelector relies on the fact that all the keyboard keys are rectangular SVG elements, SVGRectElement, while the keyboards themselves are not: each keyboard is represented by an SVG element, SVGSVGElement.

Also, the keyboards share a common semantic-level handler, handler(element, on), which controls the highlighting and audio action of the key represented by element, depending on its Boolean activation state on. This common handler is used via both the touch API and the pointer API. The handler can also be activated by code, through a computer keyboard or other control elements. In particular, it can be called by the Microtonal Fabric sequence recorder. This makes the call to setMultiTouch pretty simple:

“29-EDO/ui/keyboard.js”:

```javascript
setMultiTouch(
    element,
    element => element.constructor == SVGRectElement,
    (element, _, on) => handler(element, on));
```

Here, the first element is the keyboard, an SVGSVGElement, and the element parameters of the handlers represent the keyboard keys.

In another application, which has only round keys, the expression element.constructor == SVGCircleElement is used instead.
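Because every input source ends up in the same semantic handler(element, on), features such as recording and playback only need to wrap that handler. The following sketch illustrates this idea; it is hypothetical and much simpler than the Microtonal Fabric sequence recorder. The names createRecorder, recordingHandler, and play are assumptions, and keys are represented by strings instead of SVG elements.

```javascript
// Hypothetical sketch: recording and playback built around one semantic handler.
const createRecorder = (handler, now = () => Date.now()) => {
    const events = []; // recorded calls: { time, element, on }

    // Use this instead of handler for touch, pointer, or computer keyboard input.
    const recordingHandler = (element, on) => {
        events.push({ time: now(), element, on });
        handler(element, on);
    };

    // Replays the recorded calls through the same handler, keeping relative timing.
    const play = (schedule = (callback, delay) => setTimeout(callback, delay)) => {
        if (events.length < 1) return;
        const start = events[0].time;
        for (const { time, element, on } of events)
            schedule(() => handler(element, on), time - start);
    };

    return { recordingHandler, play, events };
};

// Usage with a fake clock and immediate scheduling, so the result is deterministic.
let fakeTime = 0;
const heard = [];
const recorder = createRecorder((element, on) => heard.push([element, on]), () => fakeTime);
recorder.recordingHandler("C4", true);  // live: C4 on at t = 0 ms
fakeTime = 250;
recorder.recordingHandler("C4", false); // live: C4 off at t = 250 ms
const delays = [];
recorder.play((callback, delay) => { delays.push(delay); callback(); });
```

The playback path never touches the touch or pointer code; it only needs the semantic handler, which is the point of keeping handler(element, on) independent of the input method.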

A more elaborate example performs the selection of the key element through a method of an abstract JavaScript class.

“ui.components/abstract-keyboard.js”:

```javascript
class AbstractKeyboard {
    // ... excerpt: parentElement and handler are defined in the enclosing code
    setMultiTouch(
        parentElement,
        keyElement => this.isTouchKey(parentElement, keyElement),
        (keyElement, _, on) => handler(keyElement, on));
    // ...
}
```

This second example is more interesting from a programming standpoint. Let’s discuss it in more detail.

Abstract Keyboard

The last example shows an additional abstraction layer: the universal piece of code setting up the multitouch features is placed once in a class representing an abstract keyboard. Potentially, more than one terminal keyboard class can be derived from AbstractKeyboard and reuse the multitouch setup and other common keyboard features.

In the class AbstractKeyboard, the functions used in the call to setMultiTouch are not fully defined: the function this.isTouchKey is not defined at all, and the function handler is defined, but it depends on functions not yet defined. These functions are supposed to be implemented in all the terminal classes derived from AbstractKeyboard. But how can this be guaranteed?

To guarantee it, I’ve put forward a new technique I called interface (“agnostic/interfaces.js”). The keyboard classes do not extend an interface class; they just implement the functions defined in a particular interface, a descendant class of the class IInterface (“agnostic/interfaces.js”). The only purpose of IInterface is to provide early detection of an incomplete implementation of an interface, with some degree of strictness. To understand the concept of strictness, please see const IInterfaceStrictness in the same file; it is self-explanatory.

In the example of the application Microtonal Playground, the terminal keyboard class implements the multitouch behavior not directly by calling setMultiTouch, but by inheriting from AbstractKeyboard through the following inheritance diagram:

AbstractKeyboard (“ui.components\abstract-keyboard.js”) ◁─ GridKeyboard (“ui.components\grid-keyboard.js”) ◁― PlaygroungKeyboard (“playground\ui\playground-keyboard.js”)

In addition to the inheritance from AbstractKeyboard, the terminal class is supposed to implement IKeyboardGeometry:

IInterface (“agnostic/interfaces.js”) ◁─ IKeyboardGeometry (“ui.components\abstract-keyboard.js”)

“ui.components\abstract-keyboard.js”:

```javascript
class IKeyboardGeometry extends IInterface {
    createKeys(parentElement) {}
    createCustomKeyData(keyElement, index) {}
    highlightKey(keyElement, keyboardMode) {}
    isTouchKey(parentElement, keyElement) {}
    get defaultChord() {}
    customKeyHandler(keyElement, keyData, on) {}
}
```

The implementation of this interface guarantees that the functions of AbstractKeyboard work correctly, and the completeness of the IKeyboardGeometry implementation is validated by the constructor of the class AbstractKeyboard.

This validation throws an exception if the implementation of the interface is incomplete:

```javascript
class AbstractKeyboard {
    #implementation = { mode: 0, chord: new Set(), playingElements: new Set(), chordRoot: -1, useHighlight: true };
    derivedImplementation = {};
    derivedClassConstructorArguments = [undefined];
    constructor(parentElement, ...moreArguments) {
        IKeyboardGeometry.throwIfNotImplemented(this);
        this.derivedClassConstructorArguments = moreArguments;
    }
    // ...
}
```

For details of IInterface.throwIfNotImplemented, please see the source code, “agnostic/interfaces.js”. The validation of the interface implementation is based on reflection over the terminal class, performed at runtime by the constructor. It checks all the interface functions, property getters, and setters and, depending on the required strictness, the number of arguments of each function. In our case, it happens during the construction of the terminal application-level class PlaygroungKeyboard of the application Microtonal Playground. The application itself deserves a separate article.
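The reflection-based check can be sketched in a few lines. This is a minimal illustration in the spirit of IInterface, not its actual code: the names SketchInterface, IKeyboardGeometrySketch, KeyboardBase, and the checkArity flag are hypothetical, and the single flag only approximates the idea of strictness levels. The check relies on the fact that, when a base-class constructor runs, this already has the derived class prototype, so the derived methods and accessors are visible (derived class fields, however, are not yet initialized).

```javascript
// Returns the first property descriptor for `name` on `object` or its prototype chain.
const findDescriptor = (object, name) => {
    for (let proto = object; proto !== null; proto = Object.getPrototypeOf(proto)) {
        const descriptor = Object.getOwnPropertyDescriptor(proto, name);
        if (descriptor) return descriptor;
    }
    return undefined;
};

// Hypothetical base class for "interfaces": subclasses only declare members.
class SketchInterface {
    // Throws a TypeError if `instance` does not provide every member declared
    // by the interface class on which this static method is called.
    static throwIfNotImplemented(instance, checkArity = false) {
        const names = Object.getOwnPropertyNames(this.prototype)
            .filter(name => name !== "constructor");
        for (const name of names) {
            const declared = Object.getOwnPropertyDescriptor(this.prototype, name);
            const actual = findDescriptor(instance, name);
            const isMethod = typeof declared.value === "function";
            let problem = null;
            if (!actual) problem = "is missing";
            else if (declared.get && !actual.get) problem = "needs a getter";
            else if (declared.set && !actual.set) problem = "needs a setter";
            else if (isMethod && typeof actual.value !== "function") problem = "must be a method";
            else if (isMethod && checkArity && actual.value.length !== declared.value.length)
                problem = "has a wrong number of parameters";
            if (problem)
                throw new TypeError(`${instance.constructor.name}: ${this.name}.${name} ${problem}`);
        }
    }
}

// A reduced interface in the spirit of IKeyboardGeometry.
class IKeyboardGeometrySketch extends SketchInterface {
    isTouchKey(parentElement, keyElement) {}
    get defaultChord() {}
}

// The base class validates the derived class in its constructor.
class KeyboardBase {
    constructor() {
        IKeyboardGeometrySketch.throwIfNotImplemented(this, true);
    }
}

class GoodKeyboard extends KeyboardBase {
    isTouchKey(parentElement, keyElement) { return keyElement.isKey === true; }
    get defaultChord() { return [0, 4, 7]; }
}

class WrongArityKeyboard extends KeyboardBase {
    isTouchKey(keyElement) { return true; } // one parameter instead of two
    get defaultChord() { return []; }
}
```

Here, new GoodKeyboard() succeeds, while new WrongArityKeyboard() throws a TypeError naming isTouchKey, so the problem surfaces at the first construction rather than at the first touch.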

Of course, this is only an imitation of the interface mechanism found in some well-designed compiled languages. Unlike a compiler, it validates the implementation of the interfaces later, at runtime, but as early as possible. Naturally, the mechanism cannot change the behavior of the software; it only facilitates debugging and, hence, software development. The mechanism does work, but I consider it experimental and understand that its value is debatable. I would be grateful for any criticism or suggestions.

Using Extra Data

Note that none of the examples uses the second parameter of elementHandler, the handler passed as the third argument of setMultiTouch: touchObject of the type Touch. The last parameter of this handler, event of the type TouchEvent, is not used either. Also, the last argument of setMultiTouch, the handler sameElementHandler, is not used. However, these arguments are fully functional and can be used; they are reserved for advanced use.

The Touch argument passed to elementHandler can be used to get additional information on the original touch event. In particular, I’ve tried to use the values Touch.radiusX and Touch.radiusY. My idea was to evaluate the area of contact between a finger and the touchscreen. This information could be used to derive an amount of pressure and hence adjust the sound volume, adding some dynamics to the performance. However, my experiments demonstrated that the performer controls this value poorly, and it is not the same as actual pressure. A more fundamental problem with those Touch properties is that their change does not trigger any touch events; an event is triggered only when the centroid of the touch changes. Nevertheless, the Touch data can clearly be useful for the implementation of some advanced effects.
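For experimentation, the idea can be sketched as follows: the area of the contact ellipse, π · radiusX · radiusY, is mapped linearly to a gain. Touch.radiusX and Touch.radiusY are standard Touch properties measured in CSS pixels; the function name contactGain, the reference radii, and the gain range are arbitrary assumptions. Since, as noted above, a change of the radii alone does not trigger touch events, such a value is best sampled when a key is activated.

```javascript
// Sketch: derive a gain in [minGain, 1] from the contact area of a Touch.
// radiusX/radiusY are standard Touch properties (CSS pixels); a device that
// reports 0 (or nothing) maps to minGain here. The defaults are arbitrary.
const contactGain = (touch, { minRadius = 5, maxRadius = 25, minGain = 0.2 } = {}) => {
    const area = Math.PI * (touch.radiusX || 0) * (touch.radiusY || 0); // ellipse area
    const minArea = Math.PI * minRadius * minRadius;
    const maxArea = Math.PI * maxRadius * maxRadius;
    const normalized = Math.min(1, Math.max(0, (area - minArea) / (maxArea - minArea)));
    return minGain + (1 - minGain) * normalized;
};

// Possible use inside an elementHandler (startNote is hypothetical):
// (element, touch, on) => { if (on) startNote(element, contactGain(touch)); }
```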

The event argument of the handler is used for the implementation of plucked string instruments. The main purpose of this argument is to find out which finger defines the sound frequency when more than one finger presses the same string against a fretboard. The instrument application mimicking plucked string instruments is presently under development.
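On a real fretted string, the pressing finger closest to the bridge leaves the shortest vibrating part of the string and therefore defines the pitch. In a handler, the event argument gives access to TouchEvent.touches, the list of all touches currently on the surface, so such a choice can be made. The sketch below assumes a hypothetical layout where the string is horizontal and the bridge is on the right; pitchDefiningTouch and isOnString are hypothetical names, not Microtonal Fabric code.

```javascript
// Sketch: find the touch that defines the pitch of one string when several
// fingers press it. Assumed layout: horizontal string, bridge on the right,
// so the pressing touch with the largest clientX is closest to the bridge.
// isOnString(touch) is a hypothetical test for "this touch presses this string".
const pitchDefiningTouch = (touches, isOnString) => {
    let best = null;
    for (const touch of touches)
        if (isOnString(touch) && (best === null || touch.clientX > best.clientX))
            best = touch;
    return best; // null: no finger presses the string
};

// In an elementHandler, with a hypothetical stringUnder(touch) helper:
// const pitchTouch = pitchDefiningTouch(event.touches, t => stringUnder(t) === element);
```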

The argument sameElementHandler of the function setMultiTouch is called when a touch moves but its location remains within the same element as in the previous touch event. Naturally, such events should not modify the activation state of the element. At the same time, they can be used for the implementation of finer techniques. For example, the motion of a finger within the same key can be interpreted as finger-controlled vibrato.
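A possible starting point for such vibrato is sketched below: the horizontal displacement of a finger from the point where it entered the key is converted into a detune value in cents. The function createVibratoTracker, its parameters, and the linear mapping are hypothetical assumptions. The result could be assigned, for example, to the detune AudioParam of an OscillatorNode, which is measured in cents.

```javascript
// Sketch: finger-controlled vibrato from motion within the same key.
// Hypothetical mapping: horizontal displacement from the point where the key
// was entered, in CSS pixels, becomes a detune in cents, limited to ±maxCents.
const createVibratoTracker = ({ centsPerPixel = 2, maxCents = 50 } = {}) => {
    const origins = new Map(); // Touch.identifier -> clientX where the key was entered
    return {
        // Call from elementHandler(element, touch, on).
        onKey(touch, on) {
            if (on) origins.set(touch.identifier, touch.clientX);
            else origins.delete(touch.identifier);
        },
        // Call from sameElementHandler(element, touch, event); returns cents.
        onMoveWithinKey(touch) {
            const origin = origins.get(touch.identifier);
            if (origin === undefined) return 0;
            const cents = (touch.clientX - origin) * centsPerPixel;
            return Math.max(-maxCents, Math.min(maxCents, cents));
        },
    };
};
```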

All the finer techniques mentioned above are a matter of further research.

Compatibility

Microtonal Fabric functionality is based on advanced, modern JavaScript and browser features and may fail with some Web browsers. Basically, it works correctly with all Chromium-based browsers or, more exactly, modern browsers based on the Blink and V8 engines. This means Chromium, Chrome, and some other derived browsers. Even the latest “Anaheim” Microsoft Edge works correctly.

Unfortunately, the present-day Mozilla browsers manifest some problems and cannot correctly run the Microtonal Fabric applications. In particular, Firefox for Linux does not properly handle the touch events described in the present article. Microtonal Fabric users and I keep an eye on compatibility, so I’ll try to update the compatibility information if something changes.

Conclusions

We have a mechanism and an abstraction layer to be used as a semantic wrapper around a more general touch API, which is closer to the touchscreen hardware. This layer presents the events in a form adequate to the view model of an on-screen keyboard or other UI elements similar in functionality. The code using this layer calls a single function setMultiTouch and passes its semantic handlers.

Optionally, on top of this layer, the user can use a more specific and potentially less universal API based on IKeyboardGeometry and JavaScript classes.

These two options present a comprehensive semantic-level mechanism for the implementation of all aspects of the behavior of on-screen keyboards based on a multitouch touchscreen. At the same time, it leaves the UI open to other input methods, such as a physical computer keyboard, mouse, or touchpad.