Out of the box, xUnit provides a way to run unit tests under the debugger. This article shows how to do the same by adding your own Program.Main, which opens the door to broader application and integration of the framework.

Introduction

I had always set up my unit tests by hand, and of course that meant I didn't have as many as I really wanted (or needed). xUnit is the first test framework I have come across that was simple to use and didn't require a huge investment in time to become productive. It has made running unit tests alongside the development project a breeze. However, off the shelf, xUnit does not easily allow integration of tests with external systems.

I envisage using the xUnit framework as the foundation of an integrated, automated, embedded hardware/software test framework in order to bring a fully automated test process flow to our embedded development. Being able to integrate xUnit tests and debug such a behemoth will be paramount!

Fortunately, because xUnit is very 'transparent' and simply builds a DLL from the test cases and then calls them using reflection, we can easily do the same thing with our own Program.Main which can then provide a normal debug process. While doing exactly this, I discovered a couple of traps for young players, nothing serious or complicated, and the result is a neat example of using reflection.

I am an embedded hack by trade and not a C# guru and I welcome any pointers for improving my C# skills. I tend to prefer a more explicit coding style than super compressed code that depends on a lot of neat C# translation tricks that are hard to 'parse by eye' unless you use them every day.

I use Visual Studio for all my C# development and this note relates experience with Visual Studio only. I have no idea how it might apply to other C# IDEs (such as VSCode). I am also assuming that the reader is capable enough to find and install the xUnit Framework into Visual Studio and these tips will help get additional leverage from doing so. Finally, by way of introduction, I'm old fashioned - I overload braces as both code block separators and visual block separators, you can always re-style to suit.

Background

xUnit

xUnit tests deal in Fact and Theory. These are attribute classes applied to the test class methods to distinguish methods that take arguments (Theory) from those that don't (Fact). As examples:

[Theory]

[InlineData(typeof(int), "42")]

public void Test2(Type type, string valueStr)

{

Assert.Equal(42, int.Parse(valueStr));

}

[Fact]

public void Test3()

{

}

As such, the way to pass arguments to a theory is with InlineData attributes. A method can carry multiple InlineData attributes to run the same test code (theory) against multiple input data sets.

Exceptions are used to indicate problems and as such, failures should always throw exceptions and runtime state is tested for conformance as needed using Assert. Exceptions that are not failures should be caught and handled appropriately!

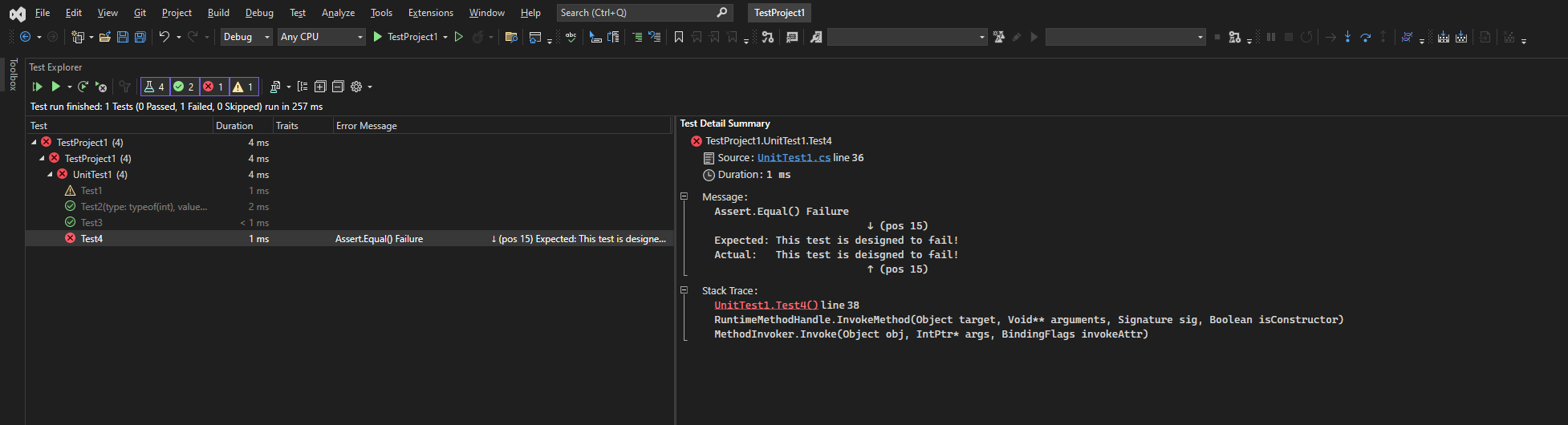

From a code point of view, that is pretty much it. xUnit integrates with Visual Studio's 'Test Explorer' (Test->Test Explorer), which lets you run tests independently of the current startup project. This is handy for debugging, checking and testing.

Reflection

Reflection is the process of viewing the 'reflected' metadata that describes a program. A great deal of this data is available in .NET, generated by the compiler, the linker and the runtime, and pretty much everything has some form of metadata defined for it. Every class in a .NET application carries metadata, as does every method, and both can have attributes that influence how they are treated at runtime. These attributes can include 'custom' attributes defined by the developer to be part of the test runtime.

For our purposes here, we are interested in the metadata relating to classes, their methods and the method attributes. Attributes are metadata class objects defined at compile time using the [] notation in C#, for example:

[Theory]

[InlineData(typeof(int), "42")]

All reflection metadata are themselves classes; here the Theory attribute takes no constructor arguments and the InlineData attribute takes two. These are 'custom' attributes, defined by xUnit and applied by the developer, that tell the compiler to attach two attribute objects to the method Test2.

xUnit uses the metadata provided by reflection to figure out, at runtime, how to load and run the UnitTest1 class we defined as a container for the test methods, Test1, Test2 and Test3. All we need to do, in order to be able to run and debug the same, is provide a Program.Main that can read the metadata and 'figure it out'.
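To make the idea concrete, here is a minimal sketch of reading the attributes attached to a method. It assumes nothing from xUnit: TheoryAttribute and InlineDataAttribute below are hypothetical stand-ins declared in the snippet so that it compiles on its own, and SampleTests and AttributeDump are illustrative names.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-ins for xUnit's attributes, so the sketch runs without xUnit.
[AttributeUsage(AttributeTargets.Method)]
public class TheoryAttribute : Attribute
{
}

[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public class InlineDataAttribute : Attribute
{
    public object[] Data { get; }

    public InlineDataAttribute(params object[] data)
    {
        Data = data;
    }
}

public class SampleTests
{
    [Theory]
    [InlineData(typeof(int), "42")]
    public void Test2(Type type, string valueStr)
    {
    }
}

public static class AttributeDump
{
    // Returns the class names of all custom attributes declared on the named method.
    public static string[] AttributeNames(Type type, string methodName)
    {
        MethodInfo method = type.GetMethod(methodName)
            ?? throw new ArgumentException("No such method: " + methodName);
        object[] attributes = method.GetCustomAttributes(inherit: false);
        return Array.ConvertAll(attributes, attribute => attribute.GetType().Name);
    }

    public static void Main()
    {
        // The order in which attributes are returned is not guaranteed.
        foreach (string name in AttributeNames(typeof(SampleTests), "Test2"))
            Console.WriteLine(name);
    }
}
```

Each [..] annotation shows up at runtime as an ordinary object, which is all a test runner needs in order to decide what to call.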

Using the Code

The example code includes a TestProject1 xUnit test project which you will need to open to follow along. You should already have the xUnit test framework installed for Visual Studio.

Program.Main

Out of the box, xUnit does provide this capability, but it is not simple to integrate into external systems. Here, I describe a Program.Main that can be expanded to allow external control of running tests.

We already know the test class we created (in UnitTest1.cs). Before we can do anything, we need to create an instance of that class:

var test = new UnitTest1(Output);

I'll come back to discuss Output later.

The UnitTest1 class has the set of test methods we're interested in calling. We find those with the GetMethods() method which can be found on the class Type reflection object accessed as:

var methods = typeof(UnitTest1).GetMethods();

This method returns an array of MethodInfo objects, which we can iterate through to choose the methods we want to run. Here, I used a foreach loop (line 133) to see the list of attributes for each method. There is probably an easy way to select only the methods that have the Fact or Theory attribute, but this is a good example of clarity over obscurity (and it wasn't immediately clear to me what the Where expression would be).
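For readers who do want the Where expression, one possible form is sketched below. It is an alternative to the explicit loops, not the code used in the example project. FactAttribute and TheoryAttribute are hypothetical stand-ins declared locally so the snippet runs without xUnit; they mirror xUnit v2, where TheoryAttribute derives from FactAttribute. TestFinder and SampleTests are illustrative names.

```csharp
#nullable enable
using System;
using System.Linq;
using System.Reflection;

// Hypothetical stand-ins for xUnit's attributes so the sketch runs on its own.
[AttributeUsage(AttributeTargets.Method)]
public class FactAttribute : Attribute
{
}

public class TheoryAttribute : FactAttribute
{
}

public class SampleTests
{
    [Fact]
    public void Test3()
    {
    }

    [Theory]
    public void Test2(string valueStr)
    {
    }

    // No attribute, so this must not be selected.
    public void Helper()
    {
    }
}

public static class TestFinder
{
    // Selects public methods carrying FactAttribute or anything derived from it,
    // sorted by name because GetMethods() does not guarantee an order.
    public static MethodInfo[] FindTestMethods(Type type)
    {
        return type.GetMethods()
            .Where(method => method.IsDefined(typeof(FactAttribute), inherit: false))
            .OrderBy(method => method.Name, StringComparer.Ordinal)
            .ToArray();
    }

    // A Theory also "is a" Fact, so test for the more specific type first.
    public static bool IsTheory(MethodInfo method)
    {
        return method.IsDefined(typeof(TheoryAttribute), inherit: false);
    }

    public static void Main()
    {
        // Prints "Test2 (Theory)" then "Test3 (Fact)".
        foreach (MethodInfo method in FindTestMethods(typeof(SampleTests)))
            Console.WriteLine(method.Name + (IsTheory(method) ? " (Theory)" : " (Fact)"));
    }
}
```

The inheritance relationship is one of the small traps: a check for Fact alone also matches every Theory, so the order of the checks matters.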

We then also iterate over the method attributes using another foreach (line 136).

For each method attribute, we check to see if it is a test method - either a Fact or Theory (line 139).

Fact Method Handling

This is the Fact handler (line 142):

Output.WriteLine(method.Name);

Output.Indent++;

Output.WriteLine(attribute.GetType().Name);

Output.Indent++;

if ((skip = ((FactAttribute)attribute).Skip) != null)

{

Output.WriteLine("Skipped - " + (string.IsNullOrEmpty(skip) ?

"no reason given!" : skip));

Output.Indent.Clear();

Output.WriteLine();

continue;

}

Invoke(test, method);

Output.Indent--;

Output.WriteLine();

Most of this is output formatting, which you can see for yourself by running the code and in the 'Final Result' section below. One point of note: if the Fact attribute (or a Theory, which inherits the property) is declared with the Skip parameter set to a non-null string, that test will be skipped (as in Test1):

[Theory(Skip = "Fails, needs fixing!")]

[InlineData("\"Type\":\"Print\",\"Mode\":\"WriteLine\",\"Method\":\"TestFormat0\",\"File\":\"printtTests.cs\",\"Line\":47,\"Indent\":\"\"}")]

public void Test1(string json)

xUnit reports such a test as skipped.

We replicate the Skip behavior in Program.Main as:

if ((skip = ((FactAttribute)attribute).Skip) != null)

{

Output.WriteLine("Skipped - " + (string.IsNullOrEmpty(skip) ?

"no reason given!" : skip));

Output.Indent.Clear();

Output.WriteLine();

continue;

}

Nothing special here - we simply note the Skip and move on to the next test case.
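The Skip check can also be tried in isolation. The sketch below assumes hypothetical stand-in attributes (declared locally, mirroring xUnit v2's TheoryAttribute deriving from FactAttribute) and an illustrative SkipCheck helper; it shows that asking for the Fact attribute also finds a Theory and its Skip text.

```csharp
#nullable enable
using System;
using System.Reflection;

// Hypothetical stand-ins for xUnit's attributes so the sketch runs on its own.
[AttributeUsage(AttributeTargets.Method)]
public class FactAttribute : Attribute
{
    public string? Skip { get; set; }
}

public class TheoryAttribute : FactAttribute
{
}

public class SampleTests
{
    [Theory(Skip = "Fails, needs fixing!")]
    public void Test1(string json)
    {
    }

    [Fact]
    public void Test3()
    {
    }
}

public static class SkipCheck
{
    // Returns the skip reason, or null when the method should run.
    public static string? SkipReason(MethodInfo method)
    {
        // Looking up FactAttribute also matches the derived TheoryAttribute.
        FactAttribute? fact = method.GetCustomAttribute<FactAttribute>();
        return fact?.Skip;
    }

    public static void Main()
    {
        // Prints "Test1: Skipped - Fails, needs fixing!" then "Test3: run".
        foreach (string name in new[] { "Test1", "Test3" })
        {
            MethodInfo method = typeof(SampleTests).GetMethod(name)!;
            string? reason = SkipReason(method);
            Console.WriteLine(name + ": " + (reason == null ? "run" : "Skipped - " + reason));
        }
    }
}
```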

If it is not skipped, the test method is invoked as:

Invoke(test, method);

(See Program.Invoke below.)

Theory Method Handling

The Theory method handler is very similar to the Fact method handler with the additional wrinkle that it needs to handle InlineData attributes which are used as arguments to multiple calls to the test method:

Output.WriteLine(method.Name);

Output.Indent++;

Output.WriteLine(attribute.GetType().Name);

Output.Indent++;

if ((skip = ((TheoryAttribute)attribute).Skip) != null)

{

Output.WriteLine("Skipped - " + (string.IsNullOrEmpty(skip) ?

"no reason given!" : skip));

Output.Indent.Clear();

Output.WriteLine();

continue;

}

var data = attributes.Where(item => item is InlineDataAttribute);

foreach (var item in data)

{

Output.WriteLine(item.GetType().Name);

var args = ((InlineDataAttribute)item).GetData

(method).ToArray()[0];

Output.Indent++;

var argEnum = args.Select((arg, index) => new { index, arg });

foreach (var arg in argEnum)

Output.WriteLine(arg);

Output.Indent--;

Invoke(test, method, args);

Output.Indent--;

Output.WriteLine();

}

We filter the method attributes to pull out only the InlineData attributes using:

var data = attributes.Where(item => item is InlineDataAttribute);

Here, I used a Where clause because it is pretty clear what we're looking for without excessive mental gymnastics. Again, the result is an IEnumerable sequence, and we iterate over it with foreach, which shows the arguments used and invokes the test method.
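The core of the Theory handling, one reflective call per data row, can be sketched on its own. This assumes a hypothetical stand-in InlineDataAttribute that exposes its arguments through a Data property (the real xUnit attribute is queried with GetData(method), as in the handler above); TheoryRunner and SampleTests are illustrative names.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for xUnit's InlineDataAttribute; it simply stores its arguments.
[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public class InlineDataAttribute : Attribute
{
    public object[] Data { get; }

    public InlineDataAttribute(params object[] data)
    {
        Data = data;
    }
}

public class SampleTests
{
    public int Calls;

    [InlineData("42", 42)]
    [InlineData("7", 7)]
    public void ParsesInt(string text, int expected)
    {
        Calls++;
        if (int.Parse(text) != expected)
            throw new InvalidOperationException("Parsed value does not match " + expected);
    }
}

public static class TheoryRunner
{
    // Invokes the method once per InlineData row and returns the number of calls made.
    public static int RunAllRows(object instance, MethodInfo method)
    {
        int runs = 0;
        foreach (InlineDataAttribute row in method.GetCustomAttributes<InlineDataAttribute>())
        {
            // Each row's object[] becomes the argument list of one call.
            method.Invoke(instance, row.Data);
            runs++;
        }
        return runs;
    }

    public static void Main()
    {
        var tests = new SampleTests();
        MethodInfo method = typeof(SampleTests).GetMethod("ParsesInt")!;
        Console.WriteLine("Rows run: " + RunAllRows(tests, method));   // prints "Rows run: 2"
    }
}
```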

Program.Invoke

Method invocation is pulled out as a method of its own since it is called by both handlers. It simply calls the test method's Invoke method with any required arguments:

method.Invoke(test, args);

This is wrapped in an exception handler catching any and all exceptions to output the pass/fail grade.

static void Invoke(UnitTest1 test, MethodInfo method, object[]? args = null)

{

Exception? exception = null;

try

{

method.Invoke(test, args);

}

catch (Exception ex)

{

exception = ex;

}

Output.Indent--;

if (exception != null)

{

for (Exception? ex = exception; ex != null; ex = ex.InnerException)

Output.WriteLine(ex.ToString());

Output.WriteLine("Failed");

}

else

Output.WriteLine("Passed");

}
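One detail worth knowing when invoking through reflection: by default, MethodInfo.Invoke wraps whatever the called method throws in a TargetInvocationException, which is why walking the InnerException chain is useful. The sketch below is an optional variation, not the code in the example project; SafeInvoker and SampleTests are hypothetical names. It unwraps the exception so the caller sees the test's own failure.

```csharp
#nullable enable
using System;
using System.Reflection;

public class SampleTests
{
    public void Passes()
    {
    }

    public void Fails()
    {
        throw new InvalidOperationException("boom");
    }
}

public static class SafeInvoker
{
    // Returns null on success, otherwise the exception raised by the invoked method itself.
    public static Exception? TryInvoke(object target, MethodInfo method, object?[]? args = null)
    {
        try
        {
            method.Invoke(target, args);
            return null;
        }
        catch (TargetInvocationException ex) when (ex.InnerException != null)
        {
            // Reflection wrapped the real failure; hand back the original exception.
            return ex.InnerException;
        }
        catch (Exception ex)
        {
            // Problems with the call itself, such as a wrong argument count.
            return ex;
        }
    }

    public static void Main()
    {
        var tests = new SampleTests();
        foreach (string name in new[] { "Passes", "Fails" })
        {
            Exception? error = TryInvoke(tests, typeof(SampleTests).GetMethod(name)!);
            Console.WriteLine(name + ": " + (error == null ? "Passed" : "Failed - " + error.Message));
        }
        // Prints "Passes: Passed" then "Fails: Failed - boom".
    }
}
```

Note also that Exception.ToString() already includes the text of any inner exceptions, so printing each level of the chain with ToString() will repeat the innermost details.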

That pretty much completes Program.Main.

The Output Class

xUnit redirects and manages the standard output stream when it runs tests. If you want to complement the output, you need to define an output class that implements the ITestOutputHelper interface. We define one as:

public class Output : ITestOutputHelper

{

public Indent Indent = new();

public void Write(object message) => Console.Write(message);

public void Write(string message) => Console.Write(message);

public void WriteLine(object message) => Console.WriteLine(Indent.Text + message);

public void WriteLine(string message = "") =>

Console.WriteLine(Indent.Text + message);

public void WriteLine(string format, params object[] args) =>

Console.WriteLine(Indent.Text + format, args);

}

This should be self-explanatory, but it has the additional facility of adding indentation via an Indent class. Though not particularly smart, that class does have the advantage of being simple.

public class Indent

{

public int Count { get; internal set; } = 0;

public string Text { get; internal set; } = "";

public int Size { get; set; } = 3;

public char Char { get; set; } = ' ';

public static Indent operator ++(Indent indent)

{

indent.Count++;

indent.Text = "";

for (int n = 0; n < indent.Count; n++)

for (int i = 0; i < indent.Size; i++)

indent.Text += indent.Char;

return indent;

}

public static Indent operator --(Indent indent)

{

if (indent.Count != 0)

{

indent.Count--;

indent.Text = "";

for (int n = 0; n < indent.Count; n++)

for (int i = 0; i < indent.Size; i++)

indent.Text += indent.Char;

}

return indent;

}

public void Clear()

{

Count = 0;

Text = "";

}

}

You can use it to increase, decrease or clear the level of output indentation, for example:

Output.WriteLine(method.Name);

Output.Indent++;

Output.WriteLine(attribute.GetType().Name);

Or:

Output.Indent++;

Output.WriteLine("Indented");

Output.Indent.Clear();

Output.WriteLine("Unindented");
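If the nested loops feel heavy, the indentation text can instead be computed on demand with the string(char, int) constructor. This is a minimal sketch of that alternative, keeping the same Count, Size and Char members; SimpleIndent and IndentDemo are hypothetical names, and a '.' fill character is used only to make the indentation visible.

```csharp
using System;

// SimpleIndent is a hypothetical variant of the article's Indent class.
public class SimpleIndent
{
    public int Count { get; private set; }
    public int Size { get; set; } = 3;
    public char Char { get; set; } = ' ';

    // Built on demand: Count * Size copies of Char.
    public string Text => new string(Char, Count * Size);

    // Like the original, the operators mutate and return the same instance.
    public static SimpleIndent operator ++(SimpleIndent indent)
    {
        indent.Count++;
        return indent;
    }

    public static SimpleIndent operator --(SimpleIndent indent)
    {
        if (indent.Count != 0)
            indent.Count--;
        return indent;
    }

    public void Clear()
    {
        Count = 0;
    }
}

public static class IndentDemo
{
    public static void Main()
    {
        var indent = new SimpleIndent { Char = '.' };
        Console.WriteLine(indent.Text + "level 0");   // prints "level 0"
        indent++;
        Console.WriteLine(indent.Text + "level 1");   // prints "...level 1"
        indent++;
        Console.WriteLine(indent.Text + "level 2");   // prints "......level 2"
        indent.Clear();
        Console.WriteLine(indent.Text + "cleared");   // prints "cleared"
    }
}
```

Because Text is derived from Count, changing Size or Char takes effect immediately rather than at the next increment or decrement.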

Making It Work

The example code included here should work as is. However, if you create a new xUnit test project and want to use the Program.Main described here, there are a couple of things you need to do. First, you need to set the namespace to match the project you just created:

namespace TestProject1

Second, you need to change the class name used for the Test methods:

static void Invoke(UnitTest1 test, MethodInfo method, object[]? args = null)

var test = new UnitTest1(Output);

and:

var methods = typeof(UnitTest1).GetMethods();

Thirdly, not so obvious but simple to fix, you also need to set the project's Startup object to <namespace>.Program. Right-click on the project, select Properties and set Startup object.

The Final Result

Points of Interest

Extending the xUnit framework to do this was not hard, perhaps half a day or so. But it included elements I hadn't used before in 15+ years of coding C#, and one or two things I always forget about when setting up a new test after not using it for a while.

One satisfying aspect to this is that I finally see a path to having a sensible embedded test harness by extending this framework to include automation; loading and unloading hardware specific test cases onto dedicated embedded hardware, capturing the output and assigning pass/fail metrics. We develop an 'embedded system' that supports 10 different MCUs and the test matrix is a nightmare and very 'hands-on'. I can finally see a way to wrestle the problem to the ground and automate in the coming months.

Your mileage may vary, but regardless - enjoy!

History

- 3rd July, 2023: Initial version