This article introduces a simple C# console app for backing up files and folders.

Introduction

The code shown in this article is extracted from the main project and illustrates the core ideas of how the whole thing works. For a complete working solution, please download the source code or visit the GitHub page.

If you have experience with similar file and folder backup solutions, you’re more than welcome to share your ideas in the comments section below.

The first idea that comes to mind for backing up files is simply copying them to another folder.

The Basics

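```csharp
using System.IO;

string sourceFolder = @"D:\fileserver";
string destinationFolder = @"E:\backup";

// File.Copy fails if the target folder does not exist yet.
Directory.CreateDirectory(destinationFolder);

string[] folders = Directory.GetDirectories(sourceFolder);
string[] files = Directory.GetFiles(sourceFolder);

// Re-create the first-level sub-folders (still empty at this point).
foreach (string folder in folders)
{
    string foldername = Path.GetFileName(folder);
    string dest = Path.Combine(destinationFolder, foldername);
    Directory.CreateDirectory(dest);
}

// Copy the files of the top-level folder, overwriting existing copies.
foreach (string file in files)
{
    string filename = Path.GetFileName(file);
    string dest = Path.Combine(destinationFolder, filename);
    File.Copy(file, dest, true);
}
```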

Next, to cover all sub-folders, the code above can be refactored into a recursive method:

```csharp
static void Main(string[] args)
{
    string sourceFolder = @"D:\fileserver";
    string destinationFolder = @"E:\backup";
    BackupDirectory(sourceFolder, destinationFolder);
}

// Copies the files in sourceFolder, then calls itself for every sub-folder.
static void BackupDirectory(string sourceFolder, string destinationFolder)
{
    Directory.CreateDirectory(destinationFolder);

    string[] files = Directory.GetFiles(sourceFolder);
    foreach (string file in files)
    {
        string filename = Path.GetFileName(file);
        string dest = Path.Combine(destinationFolder, filename);
        File.Copy(file, dest, true);
    }

    string[] folders = Directory.GetDirectories(sourceFolder);
    foreach (string folder in folders)
    {
        string name = Path.GetFileName(folder);
        string dest = Path.Combine(destinationFolder, name);
        BackupDirectory(folder, dest);
    }
}
```

Here’s a different version that does not require a recursive call.

Version 2

```csharp
static void Main(string[] args)
{
    string sourceFolder = @"D:\fileserver";
    string destinationFolder = @"E:\backup";
    BackupFolder(sourceFolder, destinationFolder);
}

static void BackupFolder(string source, string destination)
{
    Directory.CreateDirectory(destination);

    // Re-create the whole folder tree first...
    foreach (string dirPath in Directory.GetDirectories(source, "*", SearchOption.AllDirectories))
    {
        Directory.CreateDirectory(dirPath.Replace(source, destination));
    }

    // ...then copy every file into its mirrored location.
    foreach (string newPath in Directory.GetFiles(source, "*.*", SearchOption.AllDirectories))
    {
        File.Copy(newPath, newPath.Replace(source, destination), true);
    }
}
```
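One caveat with Version 2: string.Replace rewrites every occurrence of the source text, not just the leading prefix. With a relative source such as data, a file at data\old data\a.txt would be mapped to backup\old backup\a.txt. A minimal sketch that rebases each path with Path.GetRelativePath (available since .NET Core 2.0) instead; MirrorFolder is a hypothetical name, not part of the original project.

```csharp
using System;
using System.IO;

// Mirrors every folder and file under `source` into `destination`.
// Each path is rebased with Path.GetRelativePath, so only the leading source
// part is replaced, however often the source name appears deeper in the path.
static void MirrorFolder(string source, string destination)
{
    string root = Path.GetFullPath(source);
    Directory.CreateDirectory(destination);

    foreach (string dir in Directory.GetDirectories(root, "*", SearchOption.AllDirectories))
    {
        Directory.CreateDirectory(Path.Combine(destination, Path.GetRelativePath(root, dir)));
    }

    foreach (string file in Directory.GetFiles(root, "*", SearchOption.AllDirectories))
    {
        string target = Path.Combine(destination, Path.GetRelativePath(root, file));
        File.Copy(file, target, overwrite: true);
    }
}
```

It is called the same way as Version 2, e.g. MirrorFolder(@"D:\fileserver", @"E:\backup").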

An even simpler version uses FileSystem.CopyDirectory from the Microsoft.VisualBasic.FileIO namespace.

Version 3

```csharp
using System;
using Microsoft.VisualBasic.FileIO;

static void Main(string[] args)
{
    string sourceFolder = @"D:\fileserver";
    string destinationFolder = @"E:\destination";

    // Copies the whole folder tree without showing any progress dialog.
    FileSystem.CopyDirectory(sourceFolder, destinationFolder, UIOption.DoNotDisplayUI);
}
```

Capturing Read And Write Errors

The next concern is read and write errors, for example those caused by user account access rights. For this reason, it is highly recommended to run the program as Administrator or as “Local System”, which have broad access to the file system. Even so, other circumstances (such as files locked by another process or a drive going offline) can still cause read or write errors, so every read and write action should be wrapped in a try-catch block; that way a single error does not stop the backup half way. Errors should also be logged so that they can be traced afterwards.

The typical try-catch block:

```csharp
try
{
    File.Copy(sourceFile, destinationFile, true);
}
catch (Exception ex)
{
    // Record the failure (e.g. ex.Message) and carry on with the next file.
}
```

Now, combining the logging and try-catch, the block will look something like this:

```csharp
using System;
using System.IO;

static int TotalFailed = 0; // number of files that could not be copied

static void Main(string[] args)
{
    string sourceFolder = @"D:\fileserver";
    string destinationFolder = @"E:\2023-11-01";
    string successLogPath = @"E:\2023-11-01\log-success.txt";
    string failLogPath = @"E:\2023-11-01\log-fail.txt";

    try
    {
        // The log files live inside the destination folder, so it must exist first.
        Directory.CreateDirectory(destinationFolder);

        using (StreamWriter successWriter = new StreamWriter(successLogPath, true))
        using (StreamWriter failWriter = new StreamWriter(failLogPath, true))
        {
            BackupDirectory(sourceFolder, destinationFolder, successWriter, failWriter);
        }
    }
    catch (Exception ex)
    {
        // Only fatal problems end up here (e.g. the backup drive is missing).
        Console.Error.WriteLine($"Backup aborted: {ex.Message}");
    }
}

static void BackupDirectory(string sourceFolder, string destinationFolder,
    StreamWriter successWriter, StreamWriter failWriter)
{
    if (!Directory.Exists(destinationFolder))
    {
        try
        {
            Directory.CreateDirectory(destinationFolder);
            successWriter.WriteLine($"Create folder: {destinationFolder}");
        }
        catch (Exception ex)
        {
            failWriter.WriteLine($"Failed to create folder: {destinationFolder}\r\n{ex.Message}\r\n");
            return; // nothing below this folder can be copied
        }
    }

    string[] files = null;
    try
    {
        files = Directory.GetFiles(sourceFolder);
    }
    catch (UnauthorizedAccessException)
    {
        failWriter.WriteLine($"{sourceFolder}\r\nAccess Denied\r\n");
    }
    catch (Exception e)
    {
        failWriter.WriteLine($"{sourceFolder}\r\n{e.Message}\r\n");
    }

    if (files != null)
    {
        foreach (string file in files)
        {
            try
            {
                string name = Path.GetFileName(file);
                string dest = Path.Combine(destinationFolder, name);
                File.Copy(file, dest, true);
                successWriter.WriteLine($"Copy file: {file}");
            }
            catch (UnauthorizedAccessException)
            {
                TotalFailed++;
                failWriter.WriteLine($"{file}\r\nAccess Denied\r\n");
            }
            catch (Exception e)
            {
                TotalFailed++;
                failWriter.WriteLine($"{file}\r\n{e.Message}\r\n");
            }
        }
    }

    string[] folders = null;
    try
    {
        folders = Directory.GetDirectories(sourceFolder);
    }
    catch (UnauthorizedAccessException)
    {
        failWriter.WriteLine($"{sourceFolder}\r\nAccess Denied\r\n");
    }
    catch (Exception e)
    {
        failWriter.WriteLine($"{sourceFolder}\r\n{e.Message}\r\n");
    }

    if (folders != null)
    {
        foreach (string folder in folders)
        {
            try
            {
                string name = Path.GetFileName(folder);
                string dest = Path.Combine(destinationFolder, name);
                BackupDirectory(folder, dest, successWriter, failWriter);
            }
            catch (UnauthorizedAccessException)
            {
                failWriter.WriteLine($"{folder}\r\nAccess Denied\r\n");
            }
            catch (Exception e)
            {
                failWriter.WriteLine($"{folder}\r\n{e.Message}\r\n");
            }
        }
    }
}
```

The backup can run daily or weekly, depending on your preference. Each backup goes into a destination folder named after its timestamp, for example:

```
// daily
E:\2023-11-01 030000\   << day 1
E:\2023-11-02 030000\   << day 2
E:\2023-11-03 030000\   << day 3

// weekly
E:\2023-11-01 030000\   << week 1
E:\2023-11-08 030000\   << week 2
E:\2023-11-15 030000\   << week 3
```
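Since the drive-selection code later in this article parses folder names with the format "yyyy-MM-dd HHmmss", it is safest to create and parse names with that same format string and the invariant culture (a culture with a non-Gregorian calendar would otherwise produce a different year). A small sketch; BackupFolderName and TryParseBackupFolderName are hypothetical helper names.

```csharp
using System;
using System.Globalization;

const string BackupNameFormat = "yyyy-MM-dd HHmmss";

// Builds a folder name such as "2023-11-01 030000".
string BackupFolderName(DateTime time) =>
    time.ToString(BackupNameFormat, CultureInfo.InvariantCulture);

// Parses a folder name back into its timestamp. Returns false for folders that
// do not follow the scheme (e.g. "System Volume Information"), so they are
// never mistaken for backups.
bool TryParseBackupFolderName(string name, out DateTime time) =>
    DateTime.TryParseExact(name, BackupNameFormat, CultureInfo.InvariantCulture,
                           DateTimeStyles.None, out time);

Console.WriteLine(BackupFolderName(new DateTime(2023, 11, 1, 3, 0, 0))); // 2023-11-01 030000
```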

Full Backup

The backup code explained above executes a “Full Backup,” copying everything.

It is very likely that most of the content being backed up (often more than 90% of it) is the same as in the previous backup. If the backup runs daily, that adds up to a lot of identical, redundant copies.

How about this: instead of copying the same large set of files every time, the program copies only the files that are new or have been modified. This is the “Incremental Backup.”

The backup strategy then looks something like this: an incremental backup every day, and a full backup weekly (for example, every Sunday), every 15 days, or once a month.

Incremental backup is resource-efficient: it saves disk space (obviously) and CPU time, and takes far less time to run.

Incremental Backup

Identifying new files is easy: a new file does not exist in the destination folder yet, so just copy it.

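```csharp
foreach (string file in files)
{
    string name = Path.GetFileName(file);
    string dest = Path.Combine(destinationFolder, name);

    if (File.Exists(dest))
    {
        // Already backed up: compare the two files to decide whether to copy again.
    }
    else
    {
        // New file: it is not in the destination yet, so copy it.
        File.Copy(file, dest, true);
    }
}
```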

If the file exists, the source file and the destination file need to be compared to determine whether they are exactly the same.

The most reliable way to tell whether they are identical copies is to calculate a hash signature (here SHA-256) of both files.

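```csharp
using System;
using System.IO;
using System.Security.Cryptography;

// Returns the SHA-256 hash of a file's content as an upper-case hex string.
public static string ComputeFileHash(string filePath)
{
    using (FileStream fs = new FileStream(filePath, FileMode.Open, FileAccess.Read))
    using (SHA256 sha256 = SHA256.Create())
    {
        byte[] hashBytes = sha256.ComputeHash(fs);
        return BitConverter.ToString(hashBytes).Replace("-", "");
    }
}
```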

Example of usage (the result is a 64-character upper-case hexadecimal string):

```csharp
string hash = ComputeFileHash(@"C:\path\to\your\file.txt");
```

During the first backup, compute the SHA-256 hash of every file and cache (save) the results in a text file (or a portable database such as SQLite). The cache file looks something like this:

```
A3A67E8FBEC365CDBB63C09A5790810A247D692E96360183C67E3E72FFDF6FE9|\somefile.pdf
7F83B1657FF1FC53B92DC18148A1D65DFC2D4B1FA3D677284ADDD200126D9069|\somefolder\letter.docx
C7BE1ED902FB8DD4D48997C6452F5D7E509FB598F541B12661FEDF5A0A522670|\account.xlsx
A54D88E06612D820BC3BE72877C74F257B561B19D15BB12D0061F16D454082F4|\note.txt
```

Each line holds the hash and the file path (relative to the backup folder), separated by a vertical bar “|”.
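A minimal sketch of writing and reading that cache format, assuming one HASH|relative-path entry per line. Splitting on the first “|” is safe because Windows file names cannot contain that character. SaveHashCache and LoadHashCache are hypothetical names, and keys are compared case-insensitively to match Windows file-name rules.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Writes one "HASH|relative path" line per entry (key = path, value = hash).
void SaveHashCache(string cachePath, IDictionary<string, string> hashesByPath) =>
    File.WriteAllLines(cachePath, hashesByPath.Select(kv => $"{kv.Value}|{kv.Key}"));

// Reads the cache back into a path -> hash dictionary. A missing cache file
// (the very first backup) yields an empty dictionary; malformed lines are skipped.
Dictionary<string, string> LoadHashCache(string cachePath)
{
    var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    if (!File.Exists(cachePath)) return result;

    foreach (string line in File.ReadLines(cachePath))
    {
        int sep = line.IndexOf('|');
        if (sep <= 0) continue;
        result[line.Substring(sep + 1)] = line.Substring(0, sep);
    }
    return result;
}
```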

Then, at the next backup, recompute the SHA-256 hash of every source file and compare it with the cached (saved) hash stored in the destination folder.

Because practically any change to a file's content changes its hash, comparing the new and old hashes reveals even the tiniest difference between the two files.
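The comparison step can be sketched as follows: hash every file under the source folder and report the files whose hash is missing from, or different from, the cached one. It uses the same SHA-256 technique as ComputeFileHash above; FindChangedFiles and HashFile are hypothetical names, and cache keys are assumed to be paths relative to the source folder with a leading separator, as in the sample cache file.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Security.Cryptography;

// Same technique as ComputeFileHash: SHA-256 of the content as upper-case hex.
string HashFile(string path)
{
    using FileStream fs = File.OpenRead(path);
    using SHA256 sha256 = SHA256.Create();
    return BitConverter.ToString(sha256.ComputeHash(fs)).Replace("-", "");
}

// Returns the relative paths of files that are new or whose content differs from
// the cached hash, plus the fresh path -> hash table to save as the next cache.
(List<string> Changed, Dictionary<string, string> Current) FindChangedFiles(
    string sourceRoot, IReadOnlyDictionary<string, string> cachedHashes)
{
    var changed = new List<string>();
    var current = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    foreach (string file in Directory.EnumerateFiles(sourceRoot, "*", SearchOption.AllDirectories))
    {
        // e.g. "\somefolder\letter.docx" on Windows
        string key = Path.DirectorySeparatorChar + Path.GetRelativePath(sourceRoot, file);
        string hash = HashFile(file);
        current[key] = hash;

        if (!cachedHashes.TryGetValue(key, out var old) || old != hash)
        {
            changed.Add(key);
        }
    }
    return (changed, current);
}
```

Only the files in Changed need to be copied; Current then replaces the old cache.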

BUT, computing a SHA-256 hash means reading every byte of every file on every run, plus the CPU work of hashing it, which is even more hardware resource-intensive. Yes, it still saves space, but the time and CPU power required can be comparable to, or even greater than, simply doing a full backup.

A much faster alternative for spotting a newer version of a file is to compare the “last modified time” of both files, which only reads file metadata instead of file contents. Although it is not as accurate as comparing hashes, it is good enough in this context.

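```csharp
DateTime srcWriteTime = File.GetLastWriteTimeUtc(sourceFile);
DateTime destWriteTime = File.GetLastWriteTimeUtc(destinationFile);

if (srcWriteTime > destWriteTime)
{
    // The source has been modified since the last backup: copy it again.
    File.Copy(sourceFile, destinationFile, true);
}
// Otherwise the backup copy is up to date and the file is skipped.
```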
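The new-file check and the timestamp check combine naturally into one decision. A minimal sketch with the hypothetical helper names NeedsCopy and CopyIfNewer; it compares UTC timestamps (File.GetLastWriteTimeUtc) so that the hour which repeats when daylight saving time ends cannot reverse the order of two timestamps.

```csharp
using System;
using System.IO;

// True when the backup copy is missing or older than the source file.
// UTC avoids ordering errors around the end of daylight saving time.
bool NeedsCopy(string sourceFile, string destinationFile) =>
    !File.Exists(destinationFile)
    || File.GetLastWriteTimeUtc(sourceFile) > File.GetLastWriteTimeUtc(destinationFile);

// Copies only when needed; returns whether a copy was made.
bool CopyIfNewer(string sourceFile, string destinationFile)
{
    if (!NeedsCopy(sourceFile, destinationFile)) return false;
    File.Copy(sourceFile, destinationFile, overwrite: true);
    return true;
}
```

On Windows, File.Copy keeps the source's last-write time on the copy, so an unchanged file compares as "not newer" on the next run and is skipped.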

And this concludes the basic idea of how an incremental backup can be done.

Next Problem: The Backup Drive Will Eventually Fill Up

When the backup drive becomes full, simply delete the oldest backup folder. If deleting one folder does not free enough space, delete the next oldest as well.
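Finding the oldest backup folder can reuse the "yyyy-MM-dd HHmmss" naming scheme, so unrelated folders on the drive are never touched. A sketch with the hypothetical helper name FindOldestBackup; the caller would then remove the folder with Directory.Delete(path, recursive: true).

```csharp
using System;
using System.Globalization;
using System.IO;

// Returns the full path of the oldest folder under `driveRoot` whose name parses
// as "yyyy-MM-dd HHmmss", or null if there is none. Other folders are ignored.
string? FindOldestBackup(string driveRoot)
{
    string? oldest = null;
    DateTime oldestDate = DateTime.MaxValue;

    foreach (string dir in Directory.GetDirectories(driveRoot))
    {
        if (DateTime.TryParseExact(Path.GetFileName(dir), "yyyy-MM-dd HHmmss",
                CultureInfo.InvariantCulture, DateTimeStyles.None, out DateTime date)
            && date < oldestDate)
        {
            oldestDate = date;
            oldest = dir;
        }
    }
    return oldest;
}
```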

The problem is… deleting a large number of files and sub-folders can be very slow.

What is a fast way to clear off files in a short amount of time?

One solution that comes to mind is to “Format Drive.”

Here’s a code snippet that formats a drive using “format.com”:

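```csharp
using System.Diagnostics;

// Quick-formats drive E: as NTFS with the label "backup" and 4096-byte clusters.
// Verb = "runas" has no effect when UseShellExecute is false, so this
// program must already be running as Administrator.
var process = new Process()
{
    StartInfo = new ProcessStartInfo
    {
        FileName = "format.com",
        Arguments = "E: /FS:NTFS /V:backup /Q /Y /A:4096",
        RedirectStandardOutput = true,
        RedirectStandardInput = true,
        UseShellExecute = false,
        CreateNoWindow = true,
    }
};

process.Start();
process.StandardInput.WriteLine("Y"); // answer the confirmation prompt
string output = process.StandardOutput.ReadToEnd();
process.WaitForExit();
```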

This approach is quite likely to be flagged as malware by antivirus software.

A better way is to use ManagementObjectSearcher from System.Management (a NuGet package on .NET Core and .NET 5+) to call the Format method of the Win32_Volume WMI class:

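```csharp
using System.Management;

using (ManagementObjectSearcher searcher = new ManagementObjectSearcher("\\\\.\\ROOT\\CIMV2",
    "SELECT * FROM Win32_Volume WHERE DriveLetter = 'E:'"))
{
    foreach (ManagementObject volume in searcher.Get())
    {
        // Arguments: file system, quick format, cluster size, label, enable compression.
        // The return value is 0 on success, otherwise a WMI error code.
        object result = volume.InvokeMethod("Format", new object[] { "NTFS", true, 4096, "Backup", false });
    }
}
```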

However, formatting the backup drive that is currently in use would wipe out all the backups on it, so this is not an option on its own.

How about using two backup drives?

When the first drive is full, the backup continues on the second drive. When the second drive is full too, it holds the latest backups, so the first drive can be formatted safely and reused. Sounds good? Yep, that will do.

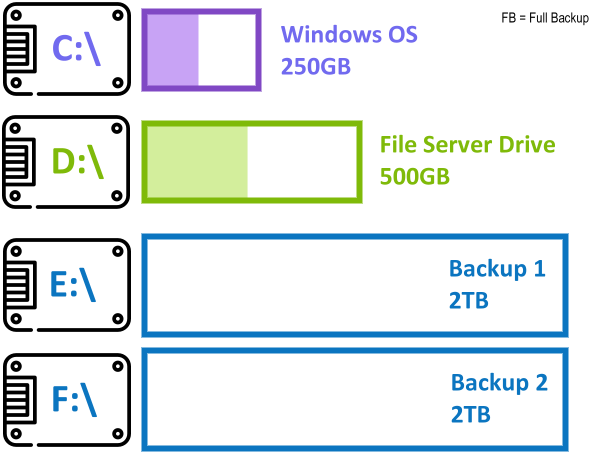

One more thing: the file server's main storage has to be easy to move to another computer, which means it needs its own dedicated physical hard disk, separate from the hard disk running Windows. The same goes for the backups: they use another dedicated hard disk, or they can share a hard disk with the Windows OS.

So, three hard disks: one for Windows, one for the file server, and one (or two) for backups.

The following images illustrate the scenario:

- First hard disk: runs Windows (drive C).

- Second hard disk: main file server storage (drive D).

- Third and fourth hard disks (or a third hard disk partitioned into two drives): dedicated to backups (drives E and F).

The image below shows the software starting the backup. In this example, the program performs a full backup every 7 days and an incremental backup on the other days.

Next, the image shows that the first backup drive is full, so the program switches to the next backup drive.

Lastly, when the second drive is full as well, the program goes back to the first backup drive. Since that drive is already full, the program formats it and starts using it for backups again.

Doing the Final Code Logic

The last part is the logic that decides which drive to use and which backup type to run (incremental or full).

Before starting the logic, we need the total size of the source folder, so the program can check whether a backup drive has enough free space.

```csharp
using System.IO;

// Recursively adds up the size (in bytes) of all files under dirInfo.
static long GetDirectorySize(DirectoryInfo dirInfo)
{
    long size = 0;

    FileInfo[] files = dirInfo.GetFiles();
    foreach (FileInfo file in files)
    {
        size += file.Length;
    }

    DirectoryInfo[] subDirs = dirInfo.GetDirectories();
    foreach (DirectoryInfo subDir in subDirs)
    {
        size += GetDirectorySize(subDir);
    }

    return size;
}

DirectoryInfo dirInfo = new DirectoryInfo(sourceFolder);
long totalSize = GetDirectorySize(dirInfo);
```
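On .NET Core 2.1 or later, the same total can be computed without explicit recursion by enumerating files with EnumerationOptions. A sketch with the hypothetical name GetDirectorySizeFlat: IgnoreInaccessible skips folders the process cannot read instead of throwing UnauthorizedAccessException (their files are simply left out of the total), and AttributesToSkip is set to 0 because the default skips hidden and system files, which the recursive version above does count.

```csharp
using System;
using System.IO;
using System.Linq;

// Sums the sizes (in bytes) of all files under `path` without explicit recursion.
long GetDirectorySizeFlat(string path)
{
    var options = new EnumerationOptions
    {
        RecurseSubdirectories = true,
        // Skip unreadable folders instead of throwing UnauthorizedAccessException.
        IgnoreInaccessible = true,
        // The default skips Hidden and System files; 0 keeps everything.
        AttributesToSkip = 0,
    };
    return new DirectoryInfo(path).EnumerateFiles("*", options).Sum(f => f.Length);
}
```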

The main logic to get the destination folder:

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Linq;

static string GetDestinationFolder(long totalSize)
{
    string[] lstMyDrive = new string[] { "E", "F" };
    double TotalDaysForFullBackup = 7;
    // Invariant culture so the name always parses back with the same format.
    string timeNowStr = DateTime.Now.ToString("yyyy-MM-dd HHmmss", CultureInfo.InvariantCulture);

    // Only backup drives that are actually available (not-ready drives would throw).
    DriveInfo[] allDrives = DriveInfo.GetDrives();
    List<DriveInfo> matchingDrives = allDrives
        .Where(d => d.IsReady && lstMyDrive.Contains(d.Name[0].ToString()))
        .ToList();

    // Collect every backup folder (named "yyyy-MM-dd HHmmss") on the backup drives.
    Dictionary<string, DateTime> dicFolderDate = new Dictionary<string, DateTime>();
    foreach (var drive in matchingDrives)
    {
        string[] backupFolders = Directory.GetDirectories(drive.Name);
        foreach (var dir in backupFolders)
        {
            string folderName = new DirectoryInfo(dir).Name;
            if (DateTime.TryParseExact(folderName, "yyyy-MM-dd HHmmss",
                    CultureInfo.InvariantCulture, DateTimeStyles.None,
                    out DateTime folderDate))
            {
                dicFolderDate[dir] = folderDate;
            }
        }
    }

    // No backup yet: start with a full backup on the first drive.
    if (dicFolderDate.Count == 0)
    {
        return $"{lstMyDrive[0]}:\\{timeNowStr}";
    }

    // Find the newest backup folder.
    string latestBackupFolder = "";
    DateTime latestBackupDate = DateTime.MinValue;
    foreach (var keyValuePair in dicFolderDate)
    {
        if (keyValuePair.Value > latestBackupDate)
        {
            latestBackupDate = keyValuePair.Value;
            latestBackupFolder = keyValuePair.Key;
        }
    }

    // Newer than TotalDaysForFullBackup days: incremental backup into the same folder.
    var timespanTotalDaysOld = DateTime.Now - latestBackupDate;
    if (timespanTotalDaysOld.TotalDays < TotalDaysForFullBackup)
    {
        return latestBackupFolder;
    }

    // Otherwise start a new full backup on a drive with enough space.
    string newTargetedDriveLetter =
        GetSuitableDrive(totalSize, lstMyDrive, out bool requireFormat);
    if (requireFormat)
    {
        // FormatDrive comes from the main project (e.g. the WMI format code above).
        FormatDrive(newTargetedDriveLetter);
    }
    return $"{newTargetedDriveLetter}:\\{timeNowStr}";
}
```
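The rule inside GetDestinationFolder (keep adding incremental backups to the latest folder until it is TotalDaysForFullBackup days old, then start a new full backup) can be pulled out as a pure function, which makes it easy to test without touching any drive. A sketch; IsFullBackupDue is a hypothetical name, and null stands for "no backup folder found".

```csharp
using System;

// True when a new full backup should start: there is no previous backup folder,
// or the latest one is at least `fullBackupIntervalDays` old.
// Otherwise the run is an incremental backup into the latest folder.
bool IsFullBackupDue(DateTime? latestBackup, DateTime now, double fullBackupIntervalDays = 7)
{
    if (latestBackup is null) return true;
    return (now - latestBackup.Value).TotalDays >= fullBackupIntervalDays;
}
```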

The GetSuitableDrive method:

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Linq;

// Picks the drive for a new full backup of totalSize bytes.
// requireFormat is set to true when no drive has enough free space.
static string GetSuitableDrive(long totalSize, string[] lstMyDrive, out bool requireFormat)
{
    requireFormat = false;

    DriveInfo[] allDrives = DriveInfo.GetDrives();
    List<DriveInfo> matchingDrives = allDrives
        .Where(d => lstMyDrive.Contains(d.Name[0].ToString()))
        .ToList();

    // 1. Use the first backup drive that still has enough free space.
    foreach (DriveInfo drive in matchingDrives)
    {
        if (drive.IsReady && drive.AvailableFreeSpace > totalSize)
        {
            return drive.Name[0].ToString();
        }
    }

    // 2. Every drive is full: find the drive that holds the newest backup...
    requireFormat = true;
    DateTime latestDate = DateTime.MinValue;
    string latestDrive = "";

    foreach (DriveInfo drive in matchingDrives)
    {
        if (!drive.IsReady) continue;

        foreach (string dir in Directory.GetDirectories(drive.Name))
        {
            string folderName = new DirectoryInfo(dir).Name;
            if (DateTime.TryParseExact(folderName, "yyyy-MM-dd HHmmss",
                    CultureInfo.InvariantCulture, DateTimeStyles.None, out DateTime folderDate)
                && folderDate > latestDate)
            {
                latestDate = folderDate;
                latestDrive = drive.Name[0].ToString();
            }
        }
    }

    // ...and pick the next drive in the list (wrapping around); it will be formatted.
    int latestDriveIndex = Array.IndexOf(lstMyDrive, latestDrive);
    latestDriveIndex++;
    if (latestDriveIndex >= lstMyDrive.Length)
    {
        latestDriveIndex = 0;
    }

    return lstMyDrive[latestDriveIndex];
}
```
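The wrap-around step at the end of GetSuitableDrive is a plain round-robin over the drive list. Isolated as a small sketch (NextDriveAfter is a hypothetical name), a modulo replaces the if-reset and also covers the case where no backup was found: Array.IndexOf returns -1, so the first drive is chosen.

```csharp
using System;

// Returns the drive letter that follows `latestDrive` in `drives`, wrapping to the first.
// An unknown or empty `latestDrive` gives index -1, which maps to the first drive.
string NextDriveAfter(string[] drives, string latestDrive)
{
    int index = Array.IndexOf(drives, latestDrive);
    return drives[(index + 1) % drives.Length];
}
```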

At this point, the drive letter has been chosen and the new backup folder can be created.

Piecing all this together, a simple file and folder backup program can be built.

Let the Program Run Automatically

The program can be set to run automatically by using Windows Task Scheduler.

- Open Windows Task Scheduler and create a task.

- Set the task's action to run this program.

- Run the task as an administrative user or as SYSTEM.

- Select “Run whether user is logged on or not”.

- Select “Run with highest privileges”.

- Set a trigger with your preferred execution time (e.g., 3 AM).

The task can also be installed programmatically from C#.

First, install the TaskScheduler NuGet package (by David Hall).

Install Task

```csharp
using Microsoft.Win32.TaskScheduler;
using System;
using System.Text.RegularExpressions;

using (TaskService ts = new TaskService())
{
    // Do nothing if the task is already installed.
    var existingTask = ts.GetTask("Auto Folder Backup");
    if (existingTask != null)
    {
        return;
    }

    TaskDefinition td = ts.NewTask();
    td.RegistrationInfo.Description = "Automated folder backup task";
    td.Principal.RunLevel = TaskRunLevel.Highest; // "Run with highest privileges"

    // nmTaskHour and nmTaskMinute come from the main project's settings form.
    DateTime triggertime = DateTime.Today.AddHours((int)nmTaskHour.Value)
                                         .AddMinutes((int)nmTaskMinute.Value);
    td.Triggers.Add(new DailyTrigger { StartBoundary = triggertime });

    td.Actions.Add(new ExecAction(@"D:\auto_folder_backup\auto_folder_backup.exe", null, null));

    // Register under the SYSTEM account so it runs whether or not a user is logged on.
    ts.RootFolder.RegisterTaskDefinition(@"Auto Folder Backup", td,
        TaskCreation.CreateOrUpdate,
        "SYSTEM",
        null,
        TaskLogonType.ServiceAccount,
        null);
}
```

Remove Task

```csharp
using (TaskService ts = new TaskService())
{
    // Anchored pattern, so only the task with exactly this name is matched.
    var tasks = ts.RootFolder.GetTasks(new Regex("^Auto Folder Backup$"));
    foreach (var task in tasks)
    {
        ts.RootFolder.DeleteTask(task.Name);
    }
}
```
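If you would rather avoid the NuGet dependency, roughly the same task can be created with schtasks.exe, which ships with Windows. The switches used (/Create, /TN, /TR, /SC DAILY, /ST, /RU SYSTEM, /RL HIGHEST, /F) are standard schtasks options. BuildSchtasksArgs and InstallTask are hypothetical helper names, and the executable path is assumed to contain no spaces.

```csharp
using System;
using System.Diagnostics;

// Builds the schtasks.exe arguments for a daily task at hour:minute that runs as
// SYSTEM with highest privileges. /F replaces an existing task with the same name.
// Assumes exePath contains no spaces (otherwise /TR needs extra inner quoting).
string BuildSchtasksArgs(string taskName, string exePath, int hour, int minute) =>
    $"/Create /TN \"{taskName}\" /TR \"{exePath}\" /SC DAILY /ST {hour:D2}:{minute:D2} /RU SYSTEM /RL HIGHEST /F";

// Runs schtasks.exe (Windows only; registering a SYSTEM task needs an elevated process).
// Returns the tool's exit code (0 on success).
int InstallTask(string taskName, string exePath, int hour, int minute)
{
    var psi = new ProcessStartInfo("schtasks.exe", BuildSchtasksArgs(taskName, exePath, hour, minute))
    {
        UseShellExecute = false,
        CreateNoWindow = true,
    };
    using Process process = Process.Start(psi)!;
    process.WaitForExit();
    return process.ExitCode;
}
```

Removing the task is the counterpart: schtasks /Delete /TN "Auto Folder Backup" /F.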

That is all for now. Cheers! ;)

History

- 1st November, 2023: Initial version