This library, HigLabo.OpenAI, facilitates easy integration with OpenAI's GPT4-turbo, GPT Store, and Assistant API through comprehensive classes like OpenAIClient and XXXParameter, providing streamlined methods for various API endpoints and versatile response handling, empowering developers to efficiently utilize and interact with OpenAI services in C#.

Introduction

This week, OpenAI introduced GPT4-turbo, GPTs, the GPT Store, and more. They also released a new API called the Assistant API. Because it is brand new, there was no C# library for it yet, so I developed one.

NEW!!! (2024-5-07)

I added support for vector files and for usage information on ChatCompletion. Please check it out!

How to Use?

Download via NuGet:

HigLabo.OpenAI

HigLabo.OpenAI is the package to install.

You can see the sample code on:

The main class is OpenAIClient. Create an instance of it to call the OpenAI API:

```csharp
var apiKey = "your api key of OpenAI";
var cl = new OpenAIClient(apiKey);
```

For an Azure OpenAI endpoint:

```csharp
var apiKey = "your Azure OpenAI api key";
var cl = new OpenAIClient(new AzureSettings(
    apiKey, "https://tinybetter-work-for-our-future.openai.azure.com/", "MyDeploymentName"));
```
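To see what the two client setups change at the HTTP level, here is a minimal sketch that uses only HttpClient types. It assumes the standard REST conventions (OpenAI sends the key as a Bearer token to a fixed base URL; Azure OpenAI sends it in an api-key header to a deployment-specific URL). The helper names and the api-version value are my assumptions for illustration, not HigLabo internals.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: not HigLabo's code. Builds the request line and auth header only.
public static class EndpointSketch
{
    // OpenAI: fixed base URL, key sent as "Authorization: Bearer <key>".
    public static HttpRequestMessage ForOpenAI(string apiKey)
    {
        var req = new HttpRequestMessage(HttpMethod.Post, "https://api.openai.com/v1/chat/completions");
        req.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        return req;
    }

    // Azure OpenAI: resource endpoint + deployment name, key sent in the "api-key" header.
    // The default api-version is an assumption; use a version your resource supports.
    public static HttpRequestMessage ForAzure(string apiKey, string endpoint, string deploymentName,
        string apiVersion = "2024-02-01")
    {
        var url = $"{endpoint.TrimEnd('/')}/openai/deployments/{deploymentName}/chat/completions?api-version={apiVersion}";
        var req = new HttpRequestMessage(HttpMethod.Post, url);
        req.Headers.Add("api-key", apiKey);
        return req;
    }
}
```

The deployment name replaces the model name in the Azure URL, which is why AzureSettings takes it as a separate argument.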

Call the ChatCompletion endpoint:

```csharp
var cl = new OpenAIClient("API KEY");
var p = new ChatCompletionsParameter();
p.Messages.Add(new ChatMessage(ChatMessageRole.User, "How to enjoy coffee?"));
p.Model = "gpt-4";

var res = await cl.ChatCompletionsAsync(p);
foreach (var choice in res.Choices)
{
    Console.Write(choice.Message.Content);
}
Console.WriteLine();
Console.WriteLine();
Console.WriteLine("----------------------------------------");
Console.WriteLine("Total tokens: " + res.Usage.Total_Tokens);
```
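For reference, this is roughly the JSON the ChatCompletion endpoint (POST /v1/chat/completions) exchanges for the sample above. It is built by hand with System.Text.Json purely for illustration; the class name is hypothetical, and I assume res.Usage.Total_Tokens maps to the usage.total_tokens field.

```csharp
using System.Text.Json;

// Sketch (not HigLabo code) of the request and response bodies of the chat endpoint.
public static class ChatBodySketch
{
    // Request body: a model name plus the list of chat messages.
    public static string BuildRequest(string model, string userText)
    {
        var body = new
        {
            model,
            messages = new[] { new { role = "user", content = userText } },
        };
        return JsonSerializer.Serialize(body);
    }

    // Response body: token counts are reported under usage.total_tokens.
    public static int ReadTotalTokens(string responseJson)
    {
        using var doc = JsonDocument.Parse(responseJson);
        return doc.RootElement.GetProperty("usage").GetProperty("total_tokens").GetInt32();
    }
}
```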

Consume the ChatCompletion endpoint as a stream of server-sent events:

```csharp
var cl = new OpenAIClient("API KEY");
// Receives stream metadata; used below to read the finish reason.
var result = new ChatCompletionStreamResult();
await foreach (string text in cl.ChatCompletionsStreamAsync("How to enjoy coffee?", "gpt-4", result, CancellationToken.None))
{
    Console.Write(text);
}
Console.WriteLine();
Console.WriteLine("Finish reason: " + result.GetFinishReason());
```
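Under the hood, a streamed ChatCompletion arrives as "data: {json}" lines, each carrying a small piece of text in choices[0].delta.content, and the stream ends with "data: [DONE]". The sketch below reads such a stream with System.Text.Json. It is my own illustration, not HigLabo's parser; the sample stream in the test is hand-written.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

public static class ChatSseSketch
{
    // Yields the text fragments of a ChatCompletion SSE stream in order.
    public static IEnumerable<string> ReadDeltas(TextReader reader)
    {
        string? line;
        while ((line = reader.ReadLine()) != null)
        {
            // Events are "data: <json>" lines separated by blank lines.
            if (!line.StartsWith("data: ", StringComparison.Ordinal)) continue;
            var data = line.Substring("data: ".Length);
            if (data == "[DONE]") yield break; // end-of-stream marker

            using var doc = JsonDocument.Parse(data);
            var choices = doc.RootElement.GetProperty("choices");
            if (choices.GetArrayLength() == 0) continue; // e.g. a final usage-only chunk
            if (choices[0].GetProperty("delta").TryGetProperty("content", out var content)
                && content.ValueKind == JsonValueKind.String)
            {
                yield return content.GetString()!;
            }
        }
    }
}
```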

ChatCompletion with function calling.

```csharp
var cl = new OpenAIClient("API KEY");
var p = new ChatCompletionsParameter();
p.Messages.Add(new ChatMessage(ChatMessageRole.User,
    "I want to know the weather of these locations: New York, San Francisco, Paris, Tokyo."));
p.Model = "gpt-4";
p.Tools = new List<ToolObject>();
{
    // Tool 1: weather lookup. Parameters is a JSON Schema written as an anonymous object.
    var tool = new ToolObject("function");
    tool.Function = new FunctionObject();
    tool.Function.Name = "getWeather";
    tool.Function.Description = "This service can get the weather of a specified location.";
    tool.Function.Parameters = new
    {
        type = "object",
        properties = new
        {
            locationList = new
            {
                type = "array",
                description = "Location list that you want to know.",
                items = new
                {
                    type = "string",
                }
            }
        }
    };
    p.Tools.Add(tool);
}
{
    // Tool 2: latitude/longitude lookup. Add it to the same list; don't recreate the list.
    var tool = new ToolObject("function");
    tool.Function = new FunctionObject();
    tool.Function.Name = "getLatLong";
    tool.Function.Description =
        "This service can get the latitude and longitude of a specified location.";
    tool.Function.Parameters = new
    {
        type = "object",
        properties = new
        {
            locationList = new
            {
                type = "array",
                description = "Location list that you want to know.",
                items = new
                {
                    type = "string",
                }
            }
        }
    };
    p.Tools.Add(tool);
}

var result = new ChatCompletionStreamResult();
await foreach (var text in cl.ChatCompletionsStreamAsync(p, result, CancellationToken.None))
{
    Console.Write(text);
}
Console.WriteLine();

// The function calls the model requested, with their JSON arguments.
foreach (var f in result.GetFunctionCallList())
{
    Console.WriteLine("Function name is " + f.Name);
    Console.WriteLine("Arguments are " + f.Arguments);
}
```
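The anonymous object assigned to tool.Function.Parameters is a JSON Schema, and the model answers with f.Arguments as a JSON string that matches it. This sketch uses System.Text.Json (not necessarily the serializer HigLabo uses) to show both directions; the class name is hypothetical and the arguments string in the test is an illustrative example, not captured model output.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public static class ToolSchemaSketch
{
    // Serializes the same anonymous-object schema used for getWeather above.
    public static string SerializeSchema()
    {
        var parameters = new
        {
            type = "object",
            properties = new
            {
                locationList = new
                {
                    type = "array",
                    description = "Location list that you want to know.",
                    items = new { type = "string" },
                },
            },
        };
        return JsonSerializer.Serialize(parameters);
    }

    // Turns an arguments string such as {"locationList":["Paris","Tokyo"]} into a list.
    public static List<string> ParseLocations(string argumentsJson)
    {
        using var doc = JsonDocument.Parse(argumentsJson);
        return doc.RootElement.GetProperty("locationList")
            .EnumerateArray()
            .Select(e => e.GetString()!)
            .ToList();
    }
}
```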

Use the vision API via the ChatCompletion endpoint:

```csharp
var cl = new OpenAIClient("API KEY");
var p = new ChatCompletionsParameter();
var message = new ChatImageMessage(ChatMessageRole.User);
message.AddTextContent("Please describe this image.");
message.AddImageFile(Path.Combine(Environment.CurrentDirectory, "Image", "Pond.jpg"));
p.Messages.Add(message);
p.Model = "gpt-4-vision-preview";
p.Max_Tokens = 300;

var result = new ChatCompletionStreamResult();
await foreach (var text in cl.ChatCompletionsStreamAsync(p, result, CancellationToken.None))
{
    Console.Write(text);
}
```
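One common way to send a local image in a vision request is as a base64 data URL inside an "image_url" content part, next to a "text" part. Whether AddImageFile builds exactly this is my assumption; the content-part shape follows OpenAI's chat API reference, and the class name is hypothetical.

```csharp
using System;
using System.Text.Json;

public static class VisionSketch
{
    // Encodes raw image bytes as "data:<mime>;base64,<payload>".
    public static string ToDataUrl(byte[] imageBytes, string mimeType = "image/jpeg")
        => $"data:{mimeType};base64,{Convert.ToBase64String(imageBytes)}";

    // A user message with one text part and one image part.
    public static string BuildMessage(string prompt, byte[] imageBytes)
    {
        var message = new
        {
            role = "user",
            content = new object[]
            {
                new { type = "text", text = prompt },
                new { type = "image_url", image_url = new { url = ToDataUrl(imageBytes) } },
            },
        };
        return JsonSerializer.Serialize(message);
    }
}
```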

Upload a file for fine-tuning or to pass to assistants:

```csharp
var cl = new OpenAIClient("API KEY");
var p = new FileUploadParameter();
p.File.SetFile("my_file.pdf", File.ReadAllBytes("D:\\Data\\my_file.pdf"));
p.Purpose = "assistants";
var res = await cl.FileUploadAsync(p);
Console.WriteLine(res);
```
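The file upload endpoint (POST /v1/files) is a form-data endpoint: the body is multipart/form-data with a "purpose" field and a "file" part. This sketch builds such a body with the standard MultipartFormDataContent class; it illustrates the wire format only and is not how HigLabo is implemented internally.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;

public static class UploadSketch
{
    // Builds a multipart body with a "purpose" field and a "file" part.
    public static MultipartFormDataContent Build(string fileName, byte[] bytes, string purpose)
    {
        var form = new MultipartFormDataContent();
        form.Add(new StringContent(purpose), "purpose");
        var file = new ByteArrayContent(bytes);
        file.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        form.Add(file, "file", fileName);
        return form;
    }
}
```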

Image generation.

```csharp
var cl = new OpenAIClient("API KEY");
var res = await cl.ImagesGenerationsAsync("Blue sky and green field.");
foreach (var item in res.Data)
{
    Console.WriteLine(item.Url);
}
```

Create an assistant via the API:

```csharp
var cl = new OpenAIClient("API KEY");
var p = new AssistantCreateParameter();
p.Name = "Legal tutor";
p.Instructions = "You are a personal legal tutor. " +
    "Write and run code to answer legal questions based on passed files.";
p.Model = "gpt-4-1106-preview";
p.Tools = new List<ToolObject>();
p.Tools.Add(new ToolObject("code_interpreter"));
p.Tools.Add(new ToolObject("retrieval"));
var res = await cl.AssistantCreateAsync(p);
Console.WriteLine(res);
```

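Add files to an assistant:

```csharp
var cl = new OpenAIClient("API KEY");
var assistantId = "your assistant Id";
var res = await cl.FilesAsync();
foreach (var item in res.Data)
{
    // Attach only the files uploaded with purpose "assistants".
    if (item.Purpose == "assistants")
    {
        var res1 = await cl.AssistantFileCreateAsync(assistantId, item.Id);
    }
}
```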

Call the assistant streaming API:

```csharp
using Newtonsoft.Json;

var cl = new OpenAIClient("API KEY");
var assistantId = "your assistant Id";
var threadId = "your thread Id";

// Create a new thread if you don't have one yet.
if (threadId.Length == 0)
{
    var res = await cl.ThreadCreateAsync();
    threadId = res.Id;
}
{
    // Add the user's message to the thread.
    var p = new MessageCreateParameter();
    p.Thread_Id = threadId;
    p.Role = "user";
    p.Content = "Hello! I want to know how to use OpenAI assistant API to get stream response.";
    var res = await cl.MessageCreateAsync(p);
}
{
    // Run the assistant on the thread and stream the reply.
    var p = new RunCreateParameter();
    p.Assistant_Id = assistantId;
    p.Thread_Id = threadId;
    p.Stream = true;
    var result = new AssistantMessageStreamResult();
    await foreach (string text in cl.RunCreateStreamAsync(p, result, CancellationToken.None))
    {
        Console.Write(text);
    }
    Console.WriteLine();

    // Objects captured from the stream events.
    Console.WriteLine(JsonConvert.SerializeObject(result.Thread));
    Console.WriteLine(JsonConvert.SerializeObject(result.Run));
    Console.WriteLine(JsonConvert.SerializeObject(result.RunStep));
    Console.WriteLine(JsonConvert.SerializeObject(result.Message));
}
```
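Unlike ChatCompletion, the Assistants stream uses named events: each "event: ..." line (thread.run.created, thread.message.delta, and so on) is followed by a "data:" line, and only thread.message.delta events carry text, in delta.content[i].text.value. The stream finishes with "event: done" / "data: [DONE]". This sketch, based on OpenAI's streaming documentation rather than HigLabo's source, collects the text; the sample stream in the test is hand-written.

```csharp
using System;
using System.IO;
using System.Text;
using System.Text.Json;

public static class AssistantSseSketch
{
    public static string CollectText(TextReader reader)
    {
        var sb = new StringBuilder();
        string? eventName = null;
        string? line;
        while ((line = reader.ReadLine()) != null)
        {
            if (line.StartsWith("event: ", StringComparison.Ordinal))
            {
                eventName = line.Substring("event: ".Length);
            }
            else if (eventName == "done")
            {
                break; // "event: done" is followed by "data: [DONE]"
            }
            else if (line.StartsWith("data: ", StringComparison.Ordinal) && eventName == "thread.message.delta")
            {
                using var doc = JsonDocument.Parse(line.Substring("data: ".Length));
                // Text arrives in delta.content[i].text.value.
                foreach (var part in doc.RootElement.GetProperty("delta").GetProperty("content").EnumerateArray())
                {
                    if (part.GetProperty("type").GetString() == "text")
                        sb.Append(part.GetProperty("text").GetProperty("value").GetString());
                }
            }
        }
        return sb.ToString();
    }
}
```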

Function calling and submitting tool outputs with the Assistant API:

```csharp
using Newtonsoft.Json;

var cl = new OpenAIClient("API KEY");
var assistantId = "your assistant Id";
var threadId = "your thread Id";

var p0 = new MessageCreateParameter();
p0.Thread_Id = threadId;
p0.Role = "user";
p0.Content = "I want to know the weather of Tokyo.";
var res = await cl.MessageCreateAsync(p0);

var p = new RunCreateParameter();
p.Assistant_Id = assistantId;
p.Thread_Id = threadId;
p.Tools = new List<ToolObject>();
// CreateGetWeatherTool() is your own helper that returns a function ToolObject,
// e.g. the getWeather tool from the ChatCompletion function-calling sample.
p.Tools.Add(CreateGetWeatherTool());

var result = new AssistantMessageStreamResult();
await foreach (string text in cl.RunCreateStreamAsync(p, result, CancellationToken.None))
{
    Console.Write(text);
}
Console.WriteLine();
Console.WriteLine(JsonConvert.SerializeObject(result.Run));

// If the run stopped to wait for function results, send them back.
if (result.Run != null &&
    result.Run.Status == "requires_action" &&
    result.Run.Required_Action != null)
{
    var p1 = new SubmitToolOutputsParameter();
    p1.Thread_Id = threadId;
    p1.Run_Id = result.Run.Id;
    p1.Tool_Outputs = new();
    foreach (var toolCall in result.Run.Required_Action.GetToolCallList())
    {
        Console.WriteLine(toolCall.ToString());
        var output = new ToolOutput();
        output.Tool_Call_Id = toolCall.Id;
        // Dummy weather data; call your real weather service here.
        var o = new
        {
            Weather = "Cloudy",
            Temperature = "20℃",
            Forecast = "Rain after 3 hours",
        };
        output.Output = $"{toolCall.Function.Arguments} is {JsonConvert.SerializeObject(o)}";
        p1.Tool_Outputs.Add(output);
    }
    await foreach (var text in cl.SubmitToolOutputsStreamAsync(p1))
    {
        Console.Write(text);
    }
}
```
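SubmitToolOutputsParameter corresponds to POST /threads/{thread_id}/runs/{run_id}/submit_tool_outputs, whose body holds one output per tool_call_id. The sketch below shows that path and body shape with System.Text.Json; the class name and the IDs in the test are placeholders, and the path omits the /v1 prefix to mirror the GetApiPath style used later in this article.

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class SubmitToolOutputsSketch
{
    public static string Path(string threadId, string runId)
        => $"/threads/{threadId}/runs/{runId}/submit_tool_outputs";

    // One { tool_call_id, output } entry per tool call the run asked for.
    public static string Body(IEnumerable<(string ToolCallId, string Output)> outputs, bool stream)
    {
        var items = new List<object>();
        foreach (var (toolCallId, output) in outputs)
            items.Add(new { tool_call_id = toolCallId, output });
        return JsonSerializer.Serialize(new { tool_outputs = items, stream });
    }
}
```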

Class Architecture

The main classes are:

- OpenAIClient
- XXXParameter
- XXXAsync
- XXXResponse
- RestApiResponse
- QueryParameter

OpenAIClient

This class manages the API key and calls the endpoints.

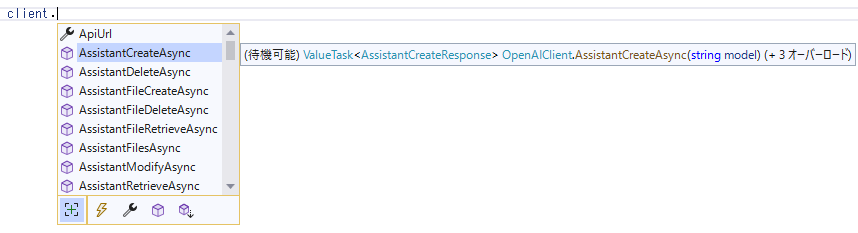

IntelliSense shows every endpoint as a method.

I provide a method for every endpoint that takes its required parameters. These methods are the easiest way to call an endpoint.

```csharp
var res = await cl.AudioTranscriptionsAsync("GoodMorningItFineDayToday.mp3",
    new MemoryStream(File.ReadAllBytes("D:\\Data\\Dev\\GoodMorningItFineDayToday.mp3")),
    "whisper-1");
```

OpenAI provides three types of endpoints, distinguished by content type: JSON, form-data, and server-sent events. Here are examples of each:

Json endpoint:

Form-data endpoint:

Stream endpoint:

So I provided the SendJsonAsync, SendFormDataAsync, and GetStreamAsync methods to call these endpoints. These methods manage the HTTP headers and content type and handle the response correctly.
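To make the idea behind a JSON sender concrete, here is a hypothetical, simplified generic method: serialize the parameter, set the Bearer header and JSON content type, send, and deserialize the response. It is not HigLabo's code. The Echo* records and StubHandler exist only so the sketch runs without a network, and the URL in the test is a placeholder.

```csharp
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;
using System.Threading;
using System.Threading.Tasks;

public static class JsonSenderSketch
{
    public static async Task<TResponse> SendJsonAsync<TParameter, TResponse>(
        HttpClient http, string apiKey, string url, TParameter parameter, CancellationToken ct)
    {
        using var req = new HttpRequestMessage(HttpMethod.Post, url);
        req.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        req.Content = new StringContent(JsonSerializer.Serialize(parameter), Encoding.UTF8, "application/json");
        using var res = await http.SendAsync(req, ct);
        var text = await res.Content.ReadAsStringAsync(ct);
        if (!res.IsSuccessStatusCode)
            throw new HttpRequestException($"{(int)res.StatusCode}: {text}");
        return JsonSerializer.Deserialize<TResponse>(text)!;
    }
}

// Demo types; property names match the JSON keys.
public record EchoParameter(string model);
public record EchoResponse(string model, string contentType);

// Test double: echoes the model name and the content type it received.
public sealed class StubHandler : HttpMessageHandler
{
    protected override async Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken ct)
    {
        var body = await request.Content!.ReadAsStringAsync(ct);
        using var doc = JsonDocument.Parse(body);
        var model = doc.RootElement.GetProperty("model").GetString()!;
        var json = JsonSerializer.Serialize(new EchoResponse(model, request.Content.Headers.ContentType!.MediaType!));
        return new HttpResponseMessage(HttpStatusCode.OK) { Content = new StringContent(json) };
    }
}
```

Keeping the parameter and response as type arguments is what lets one method serve every JSON endpoint, and it is also why the explicit call needs both types spelled out.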

You can call an endpoint by passing a parameter object:

```csharp
var p = new AudioTranscriptionsParameter();
p.File.SetFile("GoodMorningItFineDayToday.mp3",
    new MemoryStream(File.ReadAllBytes("D:\\Data\\Dev\\GoodMorningItFineDayToday.mp3")));
p.Model = "whisper-1";
var res = await cl.SendFormDataAsync<AudioTranscriptionsParameter, AudioTranscriptionsResponse>(
    p, CancellationToken.None);
```

But this method requires you to specify the parameter and response types, so I also provide an easier method:

```csharp
var p = new AudioTranscriptionsParameter();
p.File.SetFile("GoodMorningItFineDayToday.mp3",
    new MemoryStream(File.ReadAllBytes("D:\\Data\\Dev\\GoodMorningItFineDayToday.mp3")));
p.Model = "whisper-1";
var res = await cl.AudioTranscriptionsAsync(p);
```

XXXParameter

I provide parameter classes that represent all the values an endpoint accepts.

For example, here is the create assistant endpoint.

And here is the AssistantCreateParameter class:

```csharp
public partial class AssistantCreateParameter : RestApiParameter, IRestApiParameter
{
    string IRestApiParameter.HttpMethod { get; } = "POST";
    public string Model { get; set; } = "";
    public string? Name { get; set; }
    public string? Description { get; set; }
    public string? Instructions { get; set; }
    public List<ToolObject>? Tools { get; set; }
    public List<string>? File_Ids { get; set; }
    public object? Metadata { get; set; }

    string IRestApiParameter.GetApiPath()
    {
        return $"/assistants";
    }

    public override object GetRequestBody()
    {
        return new {
            model = this.Model,
            name = this.Name,
            description = this.Description,
            instructions = this.Instructions,
            tools = this.Tools,
            file_ids = this.File_Ids,
            metadata = this.Metadata,
        };
    }
}
```

These parameter classes are generated from the API documentation. You can see the actual generator code on GitHub.
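The pattern above gives each parameter three responsibilities: its HTTP method, its API path, and its JSON body. Here is a hypothetical, trimmed-down version of that pattern. Leaving unset (null) optional values out of the body via JsonIgnoreCondition.WhenWritingNull is my own choice for the sketch, not a statement about what HigLabo sends.

```csharp
using System.Text.Json;
using System.Text.Json.Serialization;

public interface IApiParameterSketch
{
    string HttpMethod { get; }
    string GetApiPath();
    object GetRequestBody();
}

public sealed class AssistantCreateSketch : IApiParameterSketch
{
    public string Model { get; set; } = "";
    public string? Name { get; set; }
    public string? Instructions { get; set; }

    public string HttpMethod => "POST";
    public string GetApiPath() => "/assistants";
    public object GetRequestBody() => new { model = Model, name = Name, instructions = Instructions };
}

public static class ParameterSketch
{
    private static readonly JsonSerializerOptions Options = new()
    {
        // Omit optional values that were never set instead of sending "name": null.
        DefaultIgnoreCondition = JsonIgnoreCondition.WhenWritingNull,
    };

    // Returns "METHOD path body" for a parameter, e.g. for logging.
    public static string Describe(IApiParameterSketch p)
        => $"{p.HttpMethod} {p.GetApiPath()} {JsonSerializer.Serialize(p.GetRequestBody(), Options)}";
}
```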

XXXAsync

These methods are also generated. For each endpoint, I generate four methods so that you can call it easily.

Here is an example for the AssistantCreate endpoint:

```csharp
public async ValueTask<AssistantCreateResponse> AssistantCreateAsync(string model)
public async ValueTask<AssistantCreateResponse> AssistantCreateAsync(string model, CancellationToken cancellationToken)
public async ValueTask<AssistantCreateResponse> AssistantCreateAsync(AssistantCreateParameter parameter)
public async ValueTask<AssistantCreateResponse> AssistantCreateAsync(AssistantCreateParameter parameter, CancellationToken cancellationToken)
```

Essentially, there are two types of methods.

The first takes only the required parameters.

The AssistantCreate endpoint requires model, so I generate:

```csharp
public async ValueTask<AssistantCreateResponse> AssistantCreateAsync(string model)
```

This method is the easiest way to call an endpoint with only its required parameters.

The second lets you call the endpoint with all parameter values.

You can create the parameter like this:

```csharp
var p = new AssistantCreateParameter();
p.Name = "Legal tutor";
p.Instructions = "You are a personal legal tutor. " +
    "Write and run code to answer legal questions based on passed files.";
p.Model = "gpt-4-1106-preview";
p.Tools = new List<ToolObject>();
p.Tools.Add(new ToolObject("code_interpreter"));
p.Tools.Add(new ToolObject("retrieval"));
```

Then pass it to the method:

```csharp
var res = await cl.AssistantCreateAsync(p);
```
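One natural way for four such overloads to relate is for the convenience overloads to build a parameter object and forward to the single overload that does the work. This is a hypothetical sketch of that forwarding pattern with stand-in types; I have not confirmed that the generated HigLabo code is structured exactly this way.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Stand-ins for AssistantCreateParameter / AssistantCreateResponse.
public sealed class CreateParamSketch { public string Model { get; set; } = ""; public string? Name { get; set; } }
public sealed class CreateResponseSketch { public string Model { get; set; } = ""; }

public sealed class ClientSketch
{
    public ValueTask<CreateResponseSketch> AssistantCreateAsync(string model)
        => AssistantCreateAsync(model, CancellationToken.None);

    // Required values only: wrap them in a parameter object and forward.
    public ValueTask<CreateResponseSketch> AssistantCreateAsync(string model, CancellationToken cancellationToken)
        => AssistantCreateAsync(new CreateParamSketch { Model = model }, cancellationToken);

    public ValueTask<CreateResponseSketch> AssistantCreateAsync(CreateParamSketch parameter)
        => AssistantCreateAsync(parameter, CancellationToken.None);

    // The one overload doing real work; a real client would send the HTTP request here.
    public ValueTask<CreateResponseSketch> AssistantCreateAsync(CreateParamSketch parameter, CancellationToken cancellationToken)
    {
        cancellationToken.ThrowIfCancellationRequested();
        return ValueTask.FromResult(new CreateResponseSketch { Model = parameter.Model });
    }
}
```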

XXXResponse

Response classes represent the actual data an endpoint returns.

For example, the Retrieve assistant endpoint returns an assistant object.

For it, I provide AssistantObjectResponse. (I wrote this class by hand rather than generating it.)

```csharp
public class AssistantObjectResponse : RestApiResponse
{
    public string Id { get; set; } = "";
    public int Created_At { get; set; }
    public DateTimeOffset CreateTime
    {
        get
        {
            return new DateTimeOffset(DateTime.UnixEpoch.AddSeconds(this.Created_At), TimeSpan.Zero);
        }
    }
    public string Name { get; set; } = "";
    public string Description { get; set; } = "";
    public string Model { get; set; } = "";
    public string Instructions { get; set; } = "";
    public List<ToolObject> Tools { get; set; } = new();
    public List<string>? File_Ids { get; set; }
    public object? MetaData { get; set; }

    public override string ToString()
    {
        return $"{this.Id}\r\n{this.Name}\r\n{this.Instructions}";
    }
}
```

You can read the values of the endpoint's response through these properties.
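Created_At is a Unix timestamp in seconds, and the CreateTime property above converts it via DateTime.UnixEpoch. This small sketch shows that the built-in DateTimeOffset.FromUnixTimeSeconds gives the same instant; the helper names are hypothetical. Taking a long here is deliberate, since an int holding Unix seconds overflows after January 2038.

```csharp
using System;

public static class UnixTimeSketch
{
    // Same computation as CreateTime in AssistantObjectResponse.
    public static DateTimeOffset ViaEpoch(long createdAt)
        => new DateTimeOffset(DateTime.UnixEpoch.AddSeconds(createdAt), TimeSpan.Zero);

    // Built-in equivalent.
    public static DateTimeOffset ViaBuiltIn(long createdAt)
        => DateTimeOffset.FromUnixTimeSeconds(createdAt);
}
```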

RestApiResponse

Sometimes you want metadata about a response: the raw response text, the parameter object that created the request, and so on.

This is the RestApiResponse class:

```csharp
public abstract class RestApiResponse : IRestApiResponse
{
    object? IRestApiResponse.Parameter
    {
        get { return _Parameter; }
    }
    HttpRequestMessage IRestApiResponse.Request
    {
        get { return _Request!; }
    }
    string IRestApiResponse.RequestBodyText
    {
        get { return _RequestBodyText; }
    }
    HttpStatusCode IRestApiResponse.StatusCode
    {
        get { return _StatusCode; }
    }
    Dictionary<String, String> IRestApiResponse.Headers
    {
        get { return _Headers; }
    }
    string IRestApiResponse.ResponseBodyText
    {
        get { return _ResponseBodyText; }
    }
}
```

These properties are explicit implementations of the IRestApiResponse interface, so they are hidden on the class itself. You can access them by casting the response to IRestApiResponse:

```csharp
var p = new AssistantCreateParameter();
p.Name = "Legal tutor";
p.Instructions = "You are a personal legal tutor. " +
    "Write and run code to answer legal questions based on passed files.";
p.Model = "gpt-4-1106-preview";
var res = await cl.AssistantCreateAsync(p);

// The members are explicit interface implementations, so cast to the interface.
var iRes = (IRestApiResponse)res;
var responseText = iRes.ResponseBodyText;
Dictionary<string, string> responseHeaders = iRes.Headers;
var parameter = iRes.Parameter as AssistantCreateParameter;
```

With these properties, you can log the raw response text to your own logging database.
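Here is a minimal, self-contained illustration of the C# rule behind the cast: members implemented explicitly, as RestApiResponse does for IRestApiResponse, are reachable only through the interface type. The type names below are stand-ins, not HigLabo's types.

```csharp
public interface IRawResponseSketch
{
    string ResponseBodyText { get; }
}

public class ResponseSketch : IRawResponseSketch
{
    private readonly string _body;
    public ResponseSketch(string body) { _body = body; }

    // Public members are visible directly on the class.
    public string Id { get; set; } = "asst_123";

    // Explicit implementation: there is no ResponseSketch.ResponseBodyText member.
    string IRawResponseSketch.ResponseBodyText => _body;
}

public static class LoggingSketch
{
    // Cast to the interface, then read the raw JSON for logging.
    public static string RawText(ResponseSketch res) => ((IRawResponseSketch)res).ResponseBodyText;
}
```

Writing res.ResponseBodyText directly would not compile; only the interface-typed reference exposes the member, which keeps the metadata out of the way in IntelliSense.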

QueryParameter

Some API endpoints support filtering and paging. You can specify these conditions with the QueryParameter class.

For example, you can set the sort order like this:

```csharp
var p = new MessagesParameter();
p.Thread_Id = "thread_xxxxxxxxxxxx";
p.QueryParameter.Order = "asc";
```
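On the wire, such conditions become a query string on the list endpoint, e.g. GET /threads/{thread_id}/messages?order=asc. The list messages endpoint accepts options such as order, limit, after, and before. This hypothetical helper (not HigLabo's QueryParameter) shows the resulting path, leaving out any value that is not set.

```csharp
using System;
using System.Collections.Generic;

public static class QuerySketch
{
    public static string MessagesPath(string threadId, string? order = null, int? limit = null, string? after = null)
    {
        var pairs = new List<string>();
        if (order != null) pairs.Add("order=" + Uri.EscapeDataString(order));
        if (limit != null) pairs.Add("limit=" + limit.Value);
        if (after != null) pairs.Add("after=" + Uri.EscapeDataString(after));
        var path = $"/threads/{Uri.EscapeDataString(threadId)}/messages";
        // No query string at all when no condition is set.
        return pairs.Count == 0 ? path : path + "?" + string.Join("&", pairs);
    }
}
```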

Conclusion

I am really excited about OpenAI GPTs, the GPT Store, and more. If you are interested in OpenAI too, I hope my library helps your work. Enjoy!

History

- 6th November, 2023: OpenAI released the Assistants API
- 11th November, 2023: First release of HigLabo.OpenAI
- 2nd November, 2023: Add send message samples
- 8th January, 2024: Add vision API feature
- 12th February, 2024: Update for file upload endpoint
- 17th March, 2024: Add feature for Assistant streaming API