Commencing today, we embark on a concise series delving into lexical analysis, with a specific emphasis on SQL. The aim is to unveil the intricacies of how a query compiler within a RDBMS operates, unraveling its ability to comprehend the underlying meaning of a query and discern the user's intended objectives.

Introduction

In this article, we will revisit the fundamentals of computer science, placing a strong emphasis on theoretical concepts such as finite automata and compilation. Our dual objective is twofold: firstly, to elucidate the inner workings of a lexical analyzer, and secondly, to apply these foundational principles to a tangible scenario — crafting a streamlined scanner tailored for SQL.

-

Lexical analysis, often referred to as scanning or tokenization, is the initial phase of the compilation process in computer science. It involves the examination of a sequence of characters (source code) to produce meaningful units called tokens. These tokens are the smallest units of a programming language with well-defined meanings.

-

SQL is a domain-specific language used for managing and manipulating relational databases. It is a standard programming language specifically designed for interacting with and managing data stored in a relational database management system (RDBMS).

In this discussion, we aim to unravel the intricacies of how a query compiler processes a SQL string and successfully generates a meaningful outcome. The focus will be on addressing the challenges inherent in this process.

This article was originally posted here.

What is a Lexical Analyzer?

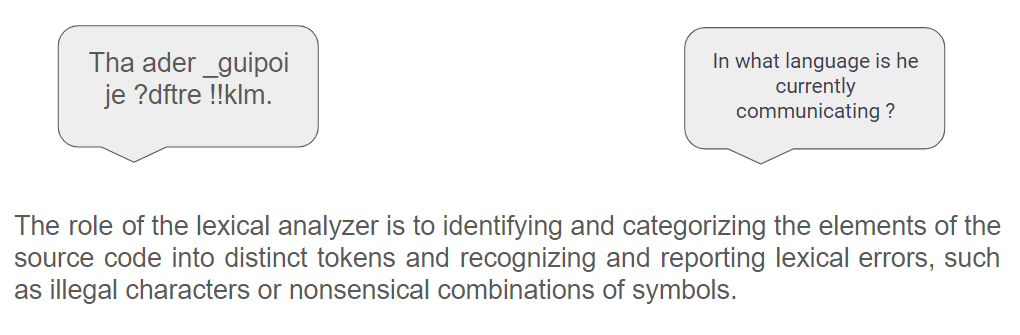

To start, an example is more illustrative than a lengthy explanation. Therefore, let's consider the following three sentences:

- Tha ader _guipoi je ?dftre !!klm.

- Question be not address book this.

- The cow is writing balloons.

The first sentence is incomprehensible, at least in English and likely in all languages worldwide. Additionally, certain words commence with illegal characters, such as exclamation marks, for instance.

The second sentence uses valid English words, but the arrangement in which they are employed renders it completely nonsensical.

The third sentence employs valid English words, and their arrangement is grammatically correct. However, the combination of words results in gibberish, making it nonsensical for anyone.

In a more formal lexicon, the first sentence is deemed lexically incorrect, the second one is lexically correct but syntactically incorrect, and the last one is lexically correct, syntactically correct but semantically incorrect.

The same principles that apply to a human language can be extended to programming languages, and the process is identical: a program needs to utilize permitted words (lexical analysis), organize them in a proper sequence according to a predefined grammar (syntax analysis), and avoid illogical assignments (semantic analysis).

In computer science, the three passes outlined above constitute the core components of a compiler and comprise a significant portion of a compilation course. Throughout this series, our focus will be solely on examining the lexical analyzer.

To Be Remembered

The lexical analyzer is a process examining the input source code of a programming language and breaking it down into a sequence of tokens. These tokens represent the fundamental building blocks of the language, such as keywords, identifiers, literals, and operators.

In essence, the lexical analyzer plays a crucial role by transforming raw source code into a structured stream of tokens that can be further processed by subsequent stages of a compiler.

A lexical analyzer initiates its process with a string, representing the raw source code written by a programmer (or, in our analogy above, the sentence spoken by a human). The objective is to determine the sequence of corresponding tokens.

What Is a Token?

-

Each individual component of the input string, typically separated by whitespaces, is commonly referred to as a lexeme. In the given sentence, for instance, 'SELECT', '*', and 'Customers' constitute distinct lexemes.

-

Each lexeme corresponds to a lexical category in the language in question, such as being a keyword, identifier, operator, or literal. To illustrate, 'SELECT' is a keyword, 'Customers' is an identifier, '=' represents an operator, and so forth.

-

A token is the amalgamation of these two pieces of information: the current lexeme and its associated category. Consequently, examples of tokens would be (KEYWORD|SELECT) or (IDENTIFIER|Customers).

It would seem relatively straightforward at first glance to detect keywords, given that their numbers are finite in each language. The code snippet below, for instance, could accomplish this task.

var keywords = [ "SELECT", "FROM", "WHERE" ];

var input = "SELECT * FROM Customers";

var lexemes = input.Split(new string[] { " " }, StringSplitOptions.None);

var tokens = new List<Token>();

foreach (var lexeme in lexemes)

{

if (keywords.Contains(lexeme))

{

var token = new Token() { Lexeme = lexeme, Category = "KEYWORD" };

tokens.Add(token);

}

}

However, challenges arise when it comes to detecting identifiers. As identifiers can essentially be any sequence of characters, it is impractical to predefine all possible user-created identifiers, unlike the approach used in the code above. This is where a specialized data structure comes into play – the finite automaton.

What are Finite Automata?

A finite automaton is a computational model with a finite set of states, transitions between these states, and an input alphabet that drives state transitions. It operates in discrete steps, processing input symbols to move between states based on predefined rules.

-

States are a finite set of distinct conditions or situations that the automaton can be in at any given time.

-

Transitions are rules or functions that define how the automaton transitions from one state to another in response to input symbols.

-

The input alphabet is the set of symbols that the automaton reads as input during its operation.

-

The initial state is the starting point of the automaton's operation, indicating its initial condition.

-

The accepting states are the states that, when reached, signify the successful recognition or acceptance of an input sequence.

Information

The theory underpinning finite automata is highly intricate and fascinating. While we won't explore profound theoretical concepts here, such as deterministic or nondeterministic automata or the remarkable Kleene's theorem, readers intrigued by these subjects can refer to Introduction to Automata Theory, Languages, and Computation (Hopcroft, Motwani, Ullman) for more comprehensive insights.

The above definition is quite formal, and readers who are not well-versed in these data structures may not find it more accessible. Consequently, we will take a graphical approach to illustrate and make tangible this incredibly useful structure.

A Simple Worked Example

Imagine a situation where we aim to design a data structure for identifying the word SELECT. The subsequent image illustrates the steps to take in this process.

- Commencing from state

1 (depicted in red in the figure above) and utilizing the string "SELECT", we initiate the process. Since the string begins with an 'S', we attempt to transition to a state with an 'S'-labeled transition. As such a transition exists, we progress to state 2. - Subsequently, encountering the character '

E', we iterate through the process again—transitioning from state 2 to another state with an 'E'-labeled transition. - This pattern continues until we reach state

7. In our example, state 7 is highlighted in green, indicating an accepting state. This signifies that we can conclude the process here and determine the recognized string, which, in this case, is precisely "SELECT".

Can This Structure Recognize the Word FROM ?

Once more, we initiate the process from state 1 (our initial state). However, this time, we employ the string "FROM". Given that this string commences with an 'F', we endeavor to transition to a state with an 'F'-labeled transition. As no such transition exists, we reach an impasse, and it becomes evident that this structure does not recognize the word FROM.

To rectify this, it is necessary to enhance our structure by introducing the requisite states.

Can This Structure Recognize the Word SEL?

- Once again, we commence the process from state

1, utilizing the string "SEL". As the string begins with an 'S', we attempt to transition to a state with an 'S'-labeled transition. Since such a transition exists, we proceed further. - Iterating through the process, we encounter a blockage in state

4 as the string is exhausted. Given that state 4 is not an accepting state, we deduce that this structure does not recognize the word SEL.

And So?

The structures described above are finite automata:

- States are represented by the set of all circles (1 through 7 in our case).

- Six transitions are labeled with individual characters; transition from one state to another is only possible if there exists a transition labeled with the current character.

- The input alphabet consists of sequence of alphanumeric characters.

- An initial state is designated in red.

- An accepting state is identified in green.

Important

An automaton recognizes a string if and only if, starting from the initial state, it can traverse to one of the accepting states by transitioning through the constituent characters of the string.

On its own, the current data structure serves little practical purpose, as identifying the word SELECT can be accomplished without such intricate machinery. However, envision how this structure can be employed to recognize identifiers.

Recognizing Identifiers

For our illustration, let's suppose that identifiers consist solely of sequences of alphabetic characters, with numeric characters not permitted. For instance, "Customers" is considered an identifier, while "state03" is not, as it contains non-alphabetic characters (0 and 3). Additionally, we impose the requirement that an identifier must consist of at least one character.

With this stipulation, the following automaton has the capability to identify an identifier.

We initiate the process from state 1, employing the string "Customers". As the string commences with a 'C', we endeavor to transition to a state with a 'C'-labeled transition. Successfully finding such a transition, we progress to state 2. Continuing through the process, we can consume all the characters and conclude in an accepting state. We conclude that Customers is recognized as an identifier.

Important 1

We can observe that there is a transition from a state to itself in this case. Such transitions are permitted by the definition of an automaton.

Important 2

It is quite noteworthy how the final structure is straightforward in comparison to the task it is designed to perform. With just two states and a handful of transitions, we can now accomplish a process that might appear quite complex without the aid of such a data structure.

It's time to put these concepts into practice, and we will exemplify the aforementioned ideas with a basic lexical analyzer for SQL.

What is SQL?

SQL stands as a time-honored language employed across all relational databases. In the context of this discussion, we presume that readers possess a proficient understanding of this technology without requiring refreshers. Our aim is to examine strings similar to the examples below.

SELECT *

FROM Customers c

WHERE c.Id = 10100

SELECT c.Name, c.Age

FROM Customers c

WHERE c.Id = 10100 AND c.Age <= 35

Customers SELECT WHERE Id FROM

SELECT *

FROM Customers04

Defining the Categories

For illustrative purposes, we will categorize our lexemes into seven distinct categories as outlined in the table below.

| Name | Example |

KEYWORD | SELECT, FROM, ... |

OPERATOR | =, <>, LIKE, ... |

IDENTIFIER | Customers, Id, ... |

PUNCTUATION | ., !, (, ... |

NUMBER | 1, 7653, ... |

LITERAL | 'john', '%michel%', ... |

ALL | * |

Building the Automata

We will need to construct an automaton for each of the categories mentioned above. To illustrate, we will outline the automaton for literals. The others are relatively straightforward and can be found in the source code written in C#.

Starting from state 1, a transition to state 2 is only possible with a ' character. Once in state 2, any number of alphabetic characters is allowed, and eventually, we can transition to state 3 only with another '. If the input string is exhausted, we can conclude that it is a literal. Otherwise, we need to explore another category.

It's sufficient for theory, and now we will proceed to practically implement a SQL lexical analyzer in C#. To be concise however, please refer to this link to see the code.

History

- 11th January, 2024: Initial version