Starting today, we embark on a brief series dedicated to vector databases. Our objective is to provide a comprehensive overview, exploring their definition, the reasons behind their emergence, and shedding light on some of their internal workings.

Introduction

Vector databases have gained prominence with the widespread adoption of large language models such as ChatGPT. However, their emergence predates this phenomenon, as they address a challenging issue that traditional databases struggle with: enabling similarity and semantic search functionality across diverse data types such as images, videos, and texts.

Throughout this concise series, we will delve into the reasons why vector databases transcend mere hype and are indispensable in specific scenarios. We will explore the algorithms they employ, the challenges they confront, and apply theoretical principles to a practical application: constructing a recommendation system using this technology.

Given the relatively nascent nature of the topic, authoritative textbooks on vector databases are limited. However, one notable resource, specialized in vector databases for NLP applications, serves as a commendable introduction to the subject.

Vector Databases for Natural Language Processing: Building Intelligent NLP Applications with High-Dimensional Text Representations (Allen)

This article was originally posted here: Understanding vector databases

In the Beginning Was Data

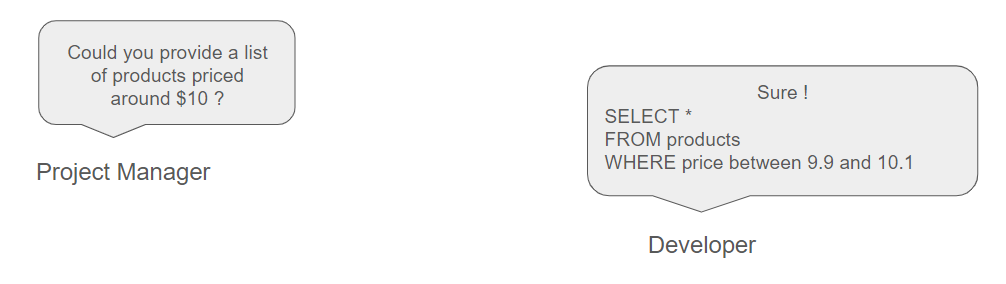

Until recently, data was predominantly stored in traditional relational databases, and queries of varying complexity were executed to retrieve information.

In the late 2000s, the rise of cloud computing coupled with significant reductions in storage expenses led to an influx of unstructured and diverse data. This included images, videos, audio files, documents, and text of varying natures. Concurrently, the demand to query these diverse data objects persisted, albeit with increased complexity.

How can we address this challenge?

In Everyday Life, What Does the Concept of Similarity Entail?

When we remark, for instance, that our sister bears a resemblance to our friend's daughter, we engage in human-based reasoning, employing similarity criteria such as eye color or other physical attributes. Similarly, we might assert that two documents resemble each other due to their utilization of identical words or synonymous expressions. In this scenario, we're analyzing documents and employing words as features to conduct a comparison.

The entities under consideration here, whether human beings or documents, aren't easily quantifiable in the traditional sense, as we're not employing mathematical reasoning to determine a precise degree of similarity. Rather, our brains are naturally adept at intuitively discerning commonalities between these entities, often without the need for explicit calculation.

So, how can we introduce a degree of formality?

Mathematics to the Rescue

Often, mathematics serves as the key to solving a portion of our problems. Here, we're grappling with a few formal concepts.

What are Vector Spaces?

A vector space is a set equipped with two operations: vector addition and scalar multiplication. These operations must satisfy certain properties, including closure under addition and scalar multiplication, associativity, commutativity, the existence of an additive identity (zero vector), and the existence of additive inverses for every vector. Vector spaces provide a framework for studying vectors, which can represent a variety of mathematical objects such as geometric quantities, physical quantities, and abstract mathematical entities.

Important

Mathematicians have crafted the concept of a vector space to possess a structural stability under linear combination, allowing for the addition of two vectors or their scaling by a constant factor. This foundational concept is pivotal in linear algebra, granting us the ability to manipulate abstract quantities—adding them, subtracting them, and comparing them—in a fundamental manner.

The set of real numbers serves as an example of a vector space: when we add two real numbers, the result remains a real number.

Does Mathematics Define the Notion of Similarity?

In practice, it commonly defines the opposite notion, which is distance, but it's equally possible to define similarity within mathematical frameworks.

Consider a vector space E. A metric or distance function on E is a function dd which takes pairs of points of EE to real numbers and satisfies the following rules:

- The distance between an object and itself is always zero: d(x,x)=0 for all x∈E

- The distance between distinct objects is always positive.

- Distance is symmetric: d(x,y)=d(y,x) for all x,y∈E

- Distance satisfies the triangle inequality: d(x,y)≤d(x,z)+d(z,y) for all x,y,z∈E

Information

Distances can theoretically be defined in more general spaces, known as metric spaces, where elements aren't required to adhere to the additive properties of vector spaces. However, the properties of metric spaces are generally less rich compared to those of vector spaces.

For instance, in the set of real numbers R, the absolute value functions as a metric, allowing us to assess the proximity between two values.

d(x,y)=∣x−y∣

Information

While these examples may seem straightforward, they serve to illustrate the essence of the topic: mathematics has long formalized the concept of proximity, and we should leverage these principles to facilitate comparisons between documents or images.

In practice, we will operate within the vector space Rn, which represents the set of n-tuples of real numbers.

Therefore, we are presented with tangible entities such as images, audio files, or documents that we aim to compare, alongside a robust mathematical framework that models similarities.

Yet, How Can We Bridge the Divide Between These Two Realms?

At this juncture, we encounter a challenge: we possess a set A of unstructured data comprising images, videos, and documents that aren't easily comparable, alongside a robust mathematical framework. The solution to bridging this gap once again arises from mathematics, specifically the concept of a function. A function establishes a mapping between two sets of elements, providing a means to relate the disparate data sets and enable meaningful comparisons.

f:A→Rn

In artificial intelligence, this function is referred to as an embedding. Essentially, we translate, or "embed", each element of set A into an n-tuple. However, it's important to note that the reverse operation cannot be universally applied: not every n-tuple necessarily represents an element of set A (since the set of real numbers R is uncountable).

In theory, the concept seems straightforward: we have a function that facilitates a proper mapping between documents or images and a vector space. However, the practical implementation of this function is where the challenge lies. This process involves modeling the function f. As a result, in the literature, f is often referred to as the "audio model" (if we aim to embed audio data), or the "video model" (if we aim to embed video data), and so forth, depending on the type of data being embedded. These models are designed to capture meaningful representations of the respective data types in a vector space, enabling comparisons, clustering, classification, and various downstream tasks in machine learning and artificial intelligence.

Numerous models have been conceptualized and deployed based on specific requirements and the nature of the data. To illustrate this concept concretely, we will delve into one such model: tf-idf (as detailed in the subsequent post).

And So, What Are Vector Databases?

Once data such as images or documents have been transformed into embeddings within a vector space, they can be stored in a datastore. While traditional relational database management systems (RDBMS) could serve this purpose, they are not specifically designed for storing vectors and are therefore not optimized for such data. This necessity has led to the development of vector databases.

Information

Vector databases are specialized databases designed to efficiently store and query high-dimensional vector data. Unlike traditional relational databases that are optimized for tabular data, vector databases are tailored to handle vectors as primary data types.

They provide mechanisms for storing, indexing, and querying vectors in a manner that preserves their high-dimensional structure and enables efficient similarity search and other vector-based operations. Examples of vector databases include Milvus, Faiss, and Pinecone.

What are the Practical Uses of Vector Databases?

Once a document or an image has been stored, it becomes possible to execute queries against these vectors. Conducting similarity searches with vectors is indeed a straightforward process, as demonstrated earlier.

-

Vector databases facilitate the indexing and searching of image or video features, enabling users to search for images or videos with similar visual characteristics. For instance, a user could seek images depicting a specific object or scene, and the system would retrieve visually similar images.

-

Vector databases can drive product recommendation engines, offering personalized suggestions derived from a user's historical purchases or browsing habits. Leveraging the indexing of product vectors and employing similarity search functionalities, the system can swiftly pinpoint products that closely match a user's preferences.

-

Vector databases can be used for fraud detection.

-

...

We will delve into a specific model utilized for documents. As there are mathematical notations involved, we recommend interested readers refer to the original article for a comprehensive understanding of its contents. See here for a concrete example.

How Do Vector Databases Efficiently Compute Similarities?

Vector databases are structured to enable rapid similarity computations. However, when seeking the k-nearest neighbors of a given vector, computing the distance between every vector (brute force approach) becomes impractical, especially when dealing with billions of vectors. To address this challenge, various techniques have been developed to perform efficient calculations. These techniques are commonly known as approximate nearest neighbors (ANN).

Here, we will simply outline the primary existing algorithms and refer to more specialized resources for further details.

Discovering ANNOY

ANNOY (Approximate Nearest Neighbors Oh Yeah) is a C++ library with Python bindings that provides an efficient implementation of approximate nearest neighbor search algorithms. ANNOY is designed to quickly find approximate nearest neighbors for high-dimensional data sets. It achieves this by constructing binary trees that partition the data space and then efficiently traversing these trees to identify the nearest neighbors.

ANNOY

Discovering LSH

LSH (Locality Sensitive Hashing) is a technique used for approximate nearest neighbor search in high-dimensional spaces. LSH works by hashing similar data points into the same buckets with high probability, thus enabling efficient approximate similarity search. The key idea behind LSH is to design hash functions that map similar data points to the same or nearby hash buckets, while mapping dissimilar points to different buckets with high probability.

LSH

Discovering HNSW

HNSW (Hierarchical Navigable Small World) is a method for constructing data structures to facilitate efficient approximate nearest neighbor search in high-dimensional spaces. HNSW builds a hierarchical graph structure where each node represents a data point and edges connect nodes to their nearest neighbors. The graph is constructed in such a way that it exhibits small-world properties, meaning that nodes are connected to both their nearest neighbors and other nodes that are further away but still relevant.

HNSW

Final Thoughts

If you wish to delve deeper into this topic, acquire the following book, which encompasses all the concepts emphasized in this series and delve into more advanced ones.

Vector Databases for Natural Language Processing: Building Intelligent NLP Applications with High-Dimensional Text Representations (Allen)

Do not hesitate to contact me if you require further information.

History

- 9th February, 2024: Initial version