As businesses plan for the end of BizTalk and look for capable data transformation solutions, Azure Logic Apps has moved to the forefront, offering an agile, serverless platform that simplifies workflow automation. My enthusiasm for Azure Logic Apps comes from its ease of use and its role in modernizing business processes. With Azure OpenAI now integrated into Logic Apps, AI-enhanced automation is within reach of ordinary workflows. This post explores what Azure Logic Apps, enhanced by AI, can do for businesses eager to use the latest cloud and AI technologies.

Understanding Azure Logic Apps

Azure Logic Apps is a cloud-based service that helps you automate and orchestrate tasks, workflows, and business processes. Its visual designer lets you build workflows that integrate apps, data, services, and systems. Logic Apps is part of Azure Integration Services and offers scalability, availability, and security, making it a strong choice for integrating cloud resources and external services.

Key Features of Azure Logic Apps

- Visual Designer: Offers a drag-and-drop interface for building workflows, making it accessible to users with varying technical expertise.

- Connectors: Comes with a large library of pre-built connectors for services and applications such as Office 365, Salesforce, Dropbox, and now Azure OpenAI and Azure AI Search. These connectors make it easy to connect to multiple systems and SaaS providers and to transform data between them.

- Scalability: Being serverless, it scales automatically to meet demand, ensuring high performance without the need to manage infrastructure.

- Condition-based Logic: Supports conditional statements, loops, and branches to create complex business logic.

Integrating Azure OpenAI with Logic Apps

The recent public preview of Azure OpenAI and Azure AI Search connectors marks a significant advancement in the capabilities of Logic Apps. These connectors bridge the gap between Logic Apps workflows and AI, enabling enterprises to harness the power of generative AI models like GPT-4 and AI-driven search functionalities within their automated workflows.

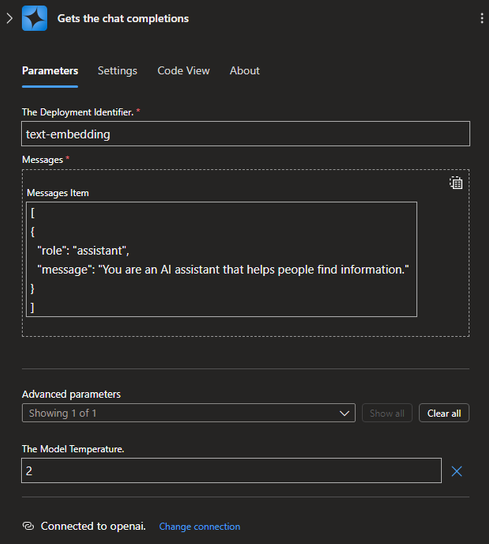

Azure OpenAI Connector

This connector allows Logic Apps to interact directly with Azure OpenAI services, enabling functionalities such as:

- Generating text completions or responses to queries based on your data.

- Extracting embeddings for data analysis and processing.

Azure AI Search Connector

With the AI Search connector, Logic Apps can:

- Index documents and data, making them searchable.

- Perform vector searches across indexed data, utilizing AI to understand the context and content of documents.
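To make the idea of a vector search more concrete, here is a minimal sketch in plain Python. It is not how Azure AI Search is implemented and it does not call the connector; it only illustrates the general principle of ranking documents by how close their embedding vectors are to a query vector. The document names and the three-dimensional vectors are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(query_vector, indexed_docs, top_k=2):
    """Return the names of the top_k documents most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in indexed_docs.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical toy "embeddings"; real embedding vectors have far more dimensions.
docs = {
    "invoice-policy": [0.9, 0.1, 0.0],
    "travel-policy": [0.1, 0.9, 0.0],
    "holiday-calendar": [0.0, 0.2, 0.9],
}

results = vector_search([0.8, 0.2, 0.0], docs)
print(results)
```

In a real workflow, the embedding vectors would come from the Azure OpenAI connector and the ranking would be done by the search service itself.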

How to Use Azure Logic Apps with Azure OpenAI

Required AI Services

Access to an Azure OpenAI Service

If you already have an OpenAI service and deployed models, you can skip these steps.

- Go to the Azure portal

- Click Create a resource

- In the search box, type OpenAI.

- In the search results list, click Create on Azure OpenAI.

- Follow the prompts to create the service in your chosen subscription and resource group.

- Once your OpenAI service is created, you will need to create deployments for generating embeddings and chat completions.

- Go to your OpenAI service and, under the Resource Management menu pane, click Model deployments

- Click Manage Deployments

- On the Deployments page click Create new deployment

- Select an available embedding model (e.g. text-embedding-ada-002) and a model version, and enter a deployment name. Keep track of the deployment name; it will be used in later steps.

- Ensure your model is successfully deployed by viewing it on the Deployments page

- On the Deployments page click Create new deployment

- Select an available chat model (e.g. gpt-35-turbo) and a model version, and enter a deployment name. Keep track of the deployment name; it will be used in later steps.

- Ensure your model is successfully deployed by viewing it on the Deployments page

Access to an Azure AI Search Service

If you already have an AI Search service, you can skip ahead to the step that creates the index.

- Go to the Azure portal.

- Click Create a resource.

- In the search box, type Azure AI Search.

- In the search results list, click Create on Azure AI Search.

- Follow the prompts to create the service in your chosen subscription and resource group.

- Once your AI Search service is created you will need to create an index to store your document content and embeddings.

- Go to your search service. On the Overview page, click Add index (JSON) at the top.

- Go up one level to the root folder ai-sample and open the Deployment folder. Copy the entire contents of the file aisearch_index.json and paste them into the index window. You can change the name of the index in the name field if you choose. This name will be used in later steps.

- Ensure your index is created by viewing it on the Indexes page
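If you would rather change the index name in a script than by hand, a small sketch like the following could do it before you paste the JSON into the portal. The only thing it assumes about aisearch_index.json is what the step above states: that it is a JSON document with a top-level name field. The sample definition here is a stand-in, not the real contents of the file, and rename_index is a hypothetical helper.

```python
import json


def rename_index(index_definition_json, new_name):
    """Return the index definition with its top-level "name" field replaced."""
    definition = json.loads(index_definition_json)
    if "name" not in definition:
        raise KeyError("index definition has no top-level 'name' field")
    definition["name"] = new_name
    return json.dumps(definition, indent=2)


# Stand-in definition; the real aisearch_index.json contains the sample's own fields.
sample_definition = '{"name": "placeholder-index", "fields": []}'

updated = rename_index(sample_definition, "my-docs-index")
print(updated)
```

Whatever name you choose, note it down, since later steps refer to it.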

Follow these steps to create the Azure Standard Logic Apps project and deploy it to Azure:

- Open Visual Studio Code.

- Go to the Azure Logic Apps extension.

- Click Create New Project then navigate to and select the SampleAIWorkflows folder.

- Follow the setup prompts:

- Choose Stateful Workflow

- Press Enter to use the default Stateful name. This can be deleted later

- Select Yes if asked to overwrite any existing files

- Update your parameters.json file:

- Open the parameters.json file

- Go to your Azure OpenAI service in the portal

- Under the Resource Management menu click Keys and Endpoint

- Copy the KEY 1 value and paste it into the value field of the openai_api_key property

- Copy the Endpoint value and paste it into the value field of the openai_endpoint property

- Under the Resource Management menu click Model deployments

- Click Manage Deployments

- Copy the Deployment name of the embeddings model you want to use and paste it into the value field of the openai_embeddings_deployment_id property

- Copy the Deployment name of the chat model you want to use and paste it into the value field of the openai_chat_deployment_id property

- Go to your Azure AI Search service in the portal

- On the Overview page, copy the Url value and paste it into the value field of the aisearch_endpoint property

- Under the Settings menu click Keys. Copy either the Primary or Secondary admin key and paste it into the value field of the aisearch_admin_key property

- Go to your Tokenize Function App

- On the Overview page, copy the URL value and paste it into the value field of the tokenize_function_url property. Then append /api/tokenize_trigger to the end of the URL.

- Deploy your Logic App:

- Go to the Azure Logic Apps extension

- Click Deploy to Azure

- Select a Subscription and Resource Group to deploy your Logic App

- Go to the Azure portal to verify your app is up and running.

- Verify your Logic App contains two workflows, named chat-workflow and ingest-workflow.
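Because the parameters.json step involves copying seven values by hand, a quick sanity check before deploying can save a failed run. The sketch below assumes parameters.json is a JSON object keyed by the property names listed above, each with a value field, which is what the steps describe; the helper names find_parameter_problems and load_and_check are my own and are not part of the sample.

```python
import json

# Property names taken from the parameters.json steps above.
REQUIRED_PROPERTIES = [
    "openai_api_key",
    "openai_endpoint",
    "openai_embeddings_deployment_id",
    "openai_chat_deployment_id",
    "aisearch_endpoint",
    "aisearch_admin_key",
    "tokenize_function_url",
]


def find_parameter_problems(parameters):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    for name in REQUIRED_PROPERTIES:
        entry = parameters.get(name)
        value = entry.get("value") if isinstance(entry, dict) else None
        if not isinstance(value, str) or not value.strip():
            problems.append(f"{name}: value is missing or empty")

    # The tokenize URL must have /api/tokenize_trigger appended to it.
    entry = parameters.get("tokenize_function_url")
    url = entry.get("value") if isinstance(entry, dict) else None
    if isinstance(url, str) and url.strip():
        if not url.rstrip("/").endswith("/api/tokenize_trigger"):
            problems.append("tokenize_function_url: should end with /api/tokenize_trigger")
    return problems


def load_and_check(path):
    """Load a parameters.json file from disk and check it."""
    with open(path, encoding="utf-8") as handle:
        return find_parameter_problems(json.load(handle))


# Placeholder values only; never commit real keys.
sample = {name: {"value": "placeholder"} for name in REQUIRED_PROPERTIES}
sample["tokenize_function_url"] = {"value": "https://example.invalid/api/tokenize_trigger"}
sample["openai_api_key"] = {"value": ""}

problems = find_parameter_problems(sample)
print(problems)
```

Running load_and_check("parameters.json") from the project folder before clicking Deploy to Azure would apply the same checks to your real file.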

Run your workflows

Now that the Azure Function and the Azure Logic App workflows are live in Azure, you are ready to ingest your data and chat with it.

Ingest Workflow

- Go to your Logic App in the Azure portal.

- Go to your ingest workflow.

- On the Overview tab, click the Run drop-down, then select Run with payload.

- Fill in the JSON Body section with your fileUrl and documentName. For example: { "fileUrl": "https://mydata.enterprise.net/file1.pdf", "documentName": "file1" } NOTE: The expected file type is PDF.

- Click Run to trigger the ingest workflow. This pulls in the data from the file above and stores it in your Azure AI Search service.

- View the Run History to ensure a successful run.
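If you ingest many files, you may want to generate the Body payload rather than type it. The following is a minimal sketch, assuming only the two fields shown above (fileUrl and documentName). The helper name build_ingest_payload is hypothetical, and the check that the URL path ends in .pdf is a simple heuristic of mine, not something the workflow itself is known to enforce.

```python
import json
from urllib.parse import urlparse


def build_ingest_payload(file_url, document_name):
    """Build the JSON body for the ingest workflow's "Run with payload" dialog."""
    parsed = urlparse(file_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("fileUrl must be an absolute http(s) URL")
    # Heuristic: the post states that the expected file type is PDF.
    if not parsed.path.lower().endswith(".pdf"):
        raise ValueError("the ingest workflow expects a PDF file")
    if not document_name.strip():
        raise ValueError("documentName must not be empty")
    return json.dumps({"fileUrl": file_url, "documentName": document_name})


body = build_ingest_payload("https://mydata.enterprise.net/file1.pdf", "file1")
print(body)
```

The printed string can be pasted directly into the Body section.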

Chat Workflow

- Go to your Logic App in the Azure portal.

- Go to your chat workflow.

- On the Overview tab, click the Run drop-down, then select Run with payload.

- Fill in the JSON Body section with your prompt. For example: { "prompt": "Ask a question about your data?" }

- Click Run to trigger the chat workflow. This queries the data stored in your Azure AI Search service and responds with an answer.

- View the Run History to see the Response from your query.
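The portal is convenient for a first test, but you may eventually want to call the chat workflow from code. The sketch below assumes the chat workflow starts with an HTTP request trigger and that you have copied its callback URL from the portal; the post does not confirm this, so treat it as an assumption. The URL shown is a placeholder, and build_chat_request and ask are hypothetical helper names. Only the Python standard library is used.

```python
import json
import urllib.request


def build_chat_request(callback_url, prompt):
    """Prepare (but do not send) a POST request carrying the prompt as JSON."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        callback_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(callback_url, prompt, timeout=60):
    """Send the prompt to the workflow and return the raw response text."""
    request = build_chat_request(callback_url, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Placeholder URL: replace it with your workflow's real callback URL before calling ask().
request = build_chat_request(
    "https://example.invalid/chat-workflow-callback",
    "Ask a question about your data?",
)
print(request.get_method(), request.data.decode("utf-8"))
```

A callback URL typically embeds a signature that grants access to the workflow, so store it like any other secret.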

Benefits of Using Azure Logic Apps with OpenAI

- Enhanced Efficiency: Automates repetitive tasks, freeing up valuable time for strategic work.

- Innovation: Enables businesses to leverage AI capabilities, fostering innovation and providing insights that were previously unattainable.

- Scalability and Flexibility: Easily scales with your business needs, and workflows can be modified as requirements change.

- Cost-Effective: You pay only for what you use, making it a cost-effective solution for businesses of all sizes.

Conclusion

The integration of Azure OpenAI and Azure AI Search with Azure Logic Apps represents a leap forward in the automation of business processes, allowing enterprises to seamlessly incorporate AI capabilities into their workflows. This not only enhances operational efficiency but also paves the way for innovative solutions to complex business challenges. By leveraging these advanced tools, businesses can stay ahead in the competitive landscape, making informed decisions, and driving growth through intelligent automation.

As Azure continues to expand its offerings, the potential for Logic Apps to revolutionize business processes grows exponentially. Embracing these technologies today can position your business as a leader in the digital transformation journey tomorrow.

See the full Logic Apps ai-sample on GitHub.