It is a routine task to import clients' data from other systems as a part of the boarding process. However, sometimes it can be expensive and require developers to be involved when Data Import is not flexible and expects a particular input file format. We can use JSONPath to map any file to the internal model for processing.

Introduction

To demonstrate how JSONPath can be used in data importing I created a Visual Studio solution that has standard for ONION architecture projects:

- App (Console)

- Domain

- Application

- Infrastructure (MS SQL database context)

- Unit tests

You can download it from GitHub https://github.com/euklad/BlogCode folder Story-11-Generic-Data-Import

This article explains the code and the approach I used.

Background

JsonPathToModel is a C# .NET open-source library that allows navigating through in-memory models extracting and setting property values using JSONPath dot notation.

Github: https://github.com/Binoculars-X/JsonPathToModel

NuGet: https://www.nuget.org/packages/JsonPathToModel

Solution Overview

Let's start by cloning the code locally and opening the solution file .\Story-11-Generic-Data-Import\Story-11-Generic-Data-Import.sln in Visual Studio 2022.

DataImport.Domain project contains DBContext entities and model classes, they don't have any logic inside and are supposed only to store data and represent database tables.

DataImport.Application project is responsible for the business logic, the mapping algorithms should be implemented there as well as functional helpers.

DataImport.Infrastructure project has DbContext and data persistence logic, it also manages database migrations.

DataImport.Console project is a console application that assembles all the pieces and executes the data import process in a few simple steps:

- Accept cmd line arguments

- Execute data import application algorithm

- Execute the persistence layer to save imported data

Domain

Let's have a look at the Entities folder, it has three classes Customer, Product and Purchase. These classes are mapped directly to the SQL database tables and used in Model classes to store data. As you can see the entities have some extra properties that are only needed to implement relationships between entities. These relationships are important for Entity Framework for a correct work.

For example Purchase.cs:

namespace DataImport.Domain.Entities;

public class Purchase

{

public int Id { get; set; }

public string? ExternalId { get; set; }

public string? CustomerExternalId { get; set; }

public string? ProductExternalId { get; set; }

public decimal? TotalAmount { get; set; }

public DateTime? PeriodStartDate { get; set; }

public DateTime? PeriodEndDate { get; set; }

public DateTime? OrderDate { get; set; }

public DateTime? PaymentDate { get; set; }

public string? Source { get; set; }

public DateTime Created { get; set; } = DateTime.UtcNow;

public int CustomerId { get; set; }

public Customer? Customer { get; set; }

public int ProductId { get; set; }

public Product? Product { get; set; }

}

The Model folder has DataSnapshotModel - a class representing a full snapshot of importing records and has three collections for each entity respectively.

Another model class DataLineModel is used for the record by record processing logic to store one CSV file record at a time.

using DataImport.Domain.Entities;

namespace DataImport.Domain.Model;

public class DataSnapshotModel

{

public List<Customer> Customers { get; set; } = [];

public List<Product> Products { get; set; } = [];

public List<Purchase> Purchases { get; set; } = [];

}

public class DataLineModel

{

public Customer Customer { get; set; } = new();

public Product Product { get; set; } = new();

public Purchase Purchase { get; set; } = new();

}

The last model class MappingConfig is used to deserialize JSON configuration and provide this as an in-memory configuration object accessible by C# code.

namespace DataImport.Domain.Model;

public class MappingConfig

{

public Dictionary<string, List<FileMapping>> FileMappings { get; set; } = [];

}

public class FileMapping

{

public string JsonPath { get; set; } = null!;

public int Position { get; set; }

}

As you can see there is no logic in the Domain project, only classes with properties to store data.

Application

The Application project encapsulates all business logic and algorithms, that do all the work, except persistence.

The FileHelper is a static utility class that encapsulates file-handling logic. It has methods to load a CSV file to memory List collection and deserialize MappingConfig from a JSON file.

using DataImport.Domain.Model;

using System.Text.Json;

namespace DataImport.Application.Helpers;

public static class FileHelper

{

public static List<string> GetCsvFileLines(string fileName)

{

var result = new List<string>();

using Stream stream = File.OpenRead(fileName)!;

using StreamReader reader = new StreamReader(stream);

while (!reader.EndOfStream)

{

result.Add(reader.ReadLine()!);

}

return result;

}

public static MappingConfig LoadConfig(string fileName)

{

using Stream stream = File.OpenRead(fileName)!;

using StreamReader reader = new StreamReader(stream);

var json = reader.ReadToEnd();

var config = JsonSerializer.Deserialize<MappingConfig>(json)!;

return config;

}

}

The most interesting class is DataImporter that implements all mapping logic and converts a CSV file of any format and structure to a corresponding Model class. To achieve this flexibility the MappingConfig should provide a mapping from a CSV file position to the corresponding property in the Model using JSONPath dot notation.

The JSON config looks like this:

{

"FileMappings": {

"PersonExport.csv": [

{

"JsonPath": "$.Customer.ExternalId",

"Position": 0

},

{

"JsonPath": "$.Customer.FirstName",

"Position": 1

},

...

You can find all configuration data in .\DataImport.Tests\TestData\TestMappingConfig.json

The configuration provides a list of mappings for each file. Each mapping has JsonPath and Position.

Let's go through DataImporter and see how it works:

using DataImport.Domain.Model;

using JsonPathToModel;

using DataImport.Application.Helpers;

namespace DataImport.Application.Mappings;

public class DataImporter

{

private readonly IJsonPathModelNavigator _navigator;

public DataImporter()

{

_navigator = new JsonPathModelNavigator(

new NavigatorConfigOptions

{

OptimizeWithCodeEmitter = false

});

}

public DataSnapshotModel ReadModelFromFiles(List<string> fileList, MappingConfig config)

{

var model = new DataSnapshotModel();

foreach (var filePath in fileList)

{

var csv = FileHelper.GetCsvFileLines(filePath);

foreach (var line in csv.Skip(1))

{

var cells = line.Split(',');

ImportFileLine(model, config, filePath, cells);

}

}

return model;

}

private void ImportFileLine(DataSnapshotModel model, MappingConfig config, string filePath, string[] cells)

{

var fileName = Path.GetFileName(filePath);

var mappings = config.FileMappings[fileName];

var target = new DataLineModel();

foreach (var mapping in mappings)

{

_navigator.SetValue(target, mapping.JsonPath, cells[mapping.Position]);

}

if (target.Customer.ExternalId != null)

{

target.Customer.Source = fileName;

model.Customers.Add(target.Customer);

}

if (target.Product.ExternalId != null)

{

target.Product.Source = fileName;

model.Products.Add(target.Product);

}

if (target.Purchase.ExternalId != null)

{

target.Purchase.Source = fileName;

model.Purchases.Add(target.Purchase);

}

}

}

In the constructor, we create an instance of JsonPathModelNavigator that will be used to set Model property values using JSONPath bindings like "$.Customer.ExternalId".

ReadModelFromFiles accepts a list of CSV files for processing and the MappingConfig. It iterates through the files and for each file reads a file content using the helper GetCsvFileLines method. Then for each line of the file, it splits the line into cells and executes the ImportFileLine method.

ImportFileLine method finds the corresponding mapping configuration for the file. Then it creates a model to process the file line. Then for each configuration mapping it calls JsonPathModelNavigator SetValue method, updating the model from a CSV line cell using JsonPath.

For example, for the first mapping for PersonExport.csv file the model update will look like:

_navigator.SetValue(target, "$.Customer.ExternalId", cells[0]);

and it is an equivalent of a direct assignment operation in .NET:

target.Customer.ExternalId = cells[0];

The JsonPathModelNavigator will find an appropriate property in the target model, convert the value to a target data type, and assign it.

Then once the model record is populated, we need to check which model object is affected, comparing ExternalId to null. The affected objects are added to the final snapshot, which eventually will contain all CSV records mapped to the Model objects.

Infrastructure

The demo stores data in the SQL Server database using Entity Framework. MyDbContext class consists of all required database definitions and is pretty much standard. It applies database migrations automatically. It will create and update the database schema only once in the first run. It uses a named connection string, which is added to the Console appsetings.json file.

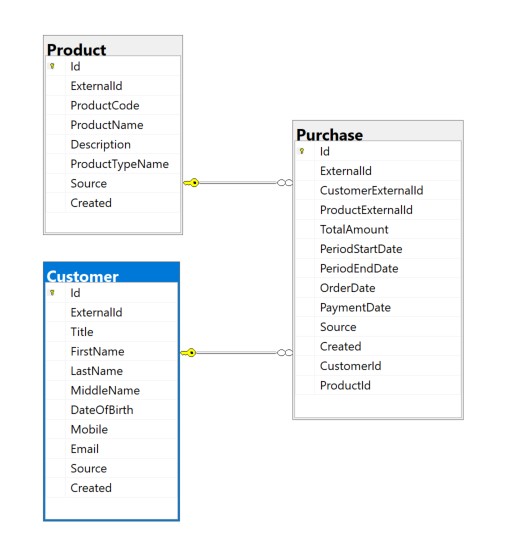

The database schema is represented in this diagram:

The project already has Migrations to generate the database, but if you want to make some changes and add more migrations there is an instruction in the solution readme.md file.

DataAccessService implements persistence logic and converts a model snapshot to database insert operations. The ImportDataSnapshot method accepts the snapshot and inserts Customers, Products and Purchases in one transaction:

public void ImportDataSnapshot(DataSnapshotModel snapshot)

{

using (var transaction = _db.Database.BeginTransaction())

{

InsertCustomers(snapshot);

InsertProducts(snapshot);

InsertPurchases(snapshot);

transaction.Commit();

}

}

Before insertion, the InsertPurchases method resolves Foreign Key properties to Customer and Product by ExternalId:

private void InsertPurchases(DataSnapshotModel snapshot)

{

var customerDict = snapshot.Customers.ToDictionary(c => c.ExternalId!, c => c.Id);

var productDict = snapshot.Products.ToDictionary(c => c.ExternalId!, c => c.Id);

foreach (var item in snapshot.Purchases)

{

item.CustomerId = customerDict[item.CustomerExternalId!];

item.ProductId = productDict[item.ProductExternalId!];

_db.Add(item);

}

_db.SaveChanges();

}

Console

The Console application is a runnable project that you can test locally. It has .\Properties\launchSettings.json file that specifies the necessary details for the run:

{

"profiles": {

"DataImport.Console": {

"commandName": "Project",

"commandLineArgs": "TestMappingConfig.json PersonExport.csv SubscriptionExport.csv OrderExport.csv"

}

}

}

The property "commandLineArgs" contains test JSON and CSV data file names that are used for debugging.

You can see the mentioned files as links. The actual files kept in .\Tests\TestData\ folder.

The Program class starts by defining the Host builder - a standard boilerplate to set up IServiceProvider for Dependency Injection (DI). It also adds a reference to the appsettings.json file that has a connection string:

{

"ConnectionStrings": {

"Default": "Data Source=.;Initial Catalog=mydatabase;Integrated Security=True;TrustServerCertificate=True;"

}

}

The DI is used to construct the DataAccessService. Then we read the MappingConfig configuration and assemble CSV file names to one list, to supply all that to the DataImporter::ReadModelFromFiles. It returns the snapshot that we save to the database using ImportDataSnapshot method:

using DataImport.Infrastructure;

using Microsoft.EntityFrameworkCore;

using Microsoft.Extensions.Configuration;

using Microsoft.Extensions.DependencyInjection;

using Microsoft.Extensions.Hosting;

using DataImport.Application.Mappings;

using DataImport.Application.Helpers;

namespace DataImport.Console;

class Program

{

static void Main(string[] args)

{

var app = Host.CreateDefaultBuilder()

.ConfigureAppConfiguration((hostingContext, config) =>

{

config.Sources.Clear();

config.AddConfiguration(hostingContext.Configuration);

config.AddJsonFile("appsettings.json");

config.AddJsonFile($"appsettings.Development.json", true, true);

})

.ConfigureServices(services =>

{

services.AddDbContext<MyDbContext>(options => options.UseSqlServer(), contextLifetime: ServiceLifetime.Singleton);

services.AddSingleton<IDataAccessService, DataAccessService>();

})

.Build();

var dataService = app.Services.GetService<IDataAccessService>()!;

var configFile = args[0];

var config = FileHelper.LoadConfig(configFile);

var csvFiles = new List<string>();

for (int i = 1; i < args.Length; i++)

{

csvFiles.Add(args[i]);

}

var dataImporter = new DataImporter();

var shapshot = dataImporter.ReadModelFromFiles(csvFiles, config);

dataService.ImportDataSnapshot(shapshot);

System.Console.ReadKey();

}

}

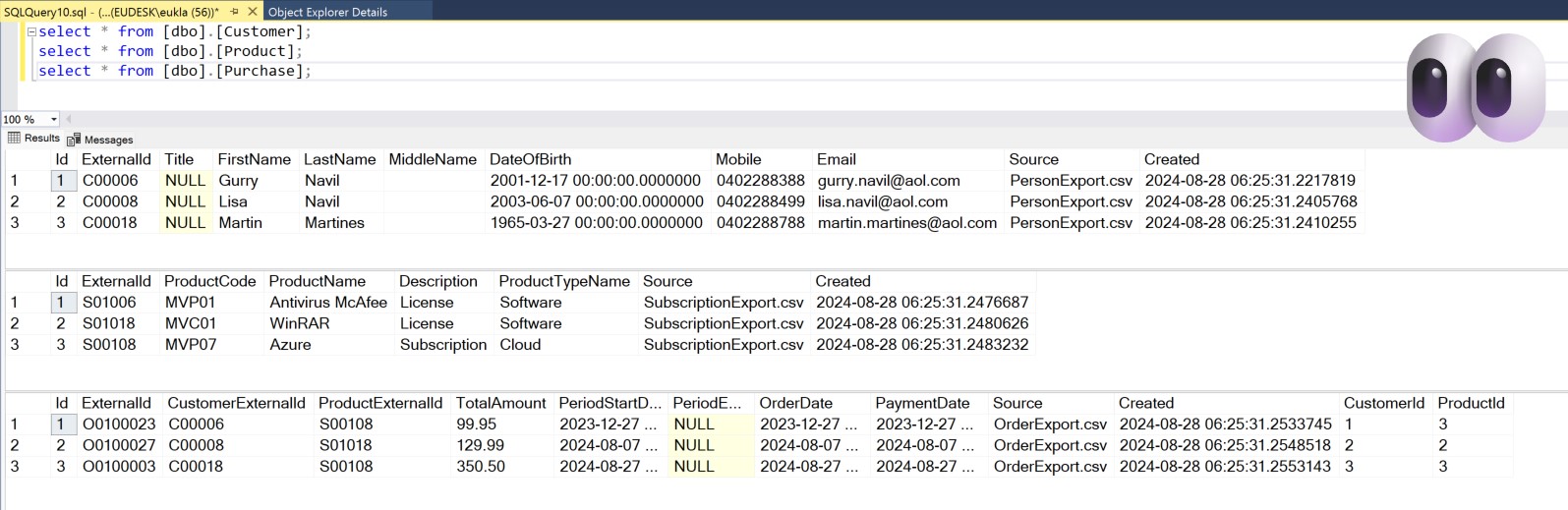

If you run the application it should create a database for you and populate all three tables by data from the test files.

If you select data from the tables you should see something like this:

Unit Tests

DataImport.Tests project has a TestData folder with test data files and test mapping configuration. The Console application re-uses these files for running.

However, instead of running the Console app, you can try to run unit tests from the UnitTest1 class.

For example, this test calls ReadModelFromFiles and checks that all three lines from the CSV file are imported to the snapshot correctly:

[Fact]

public void DataImporter_Should_ReadCustomer()

{

var config = LoadConfig("DataImport.Tests.TestData.TestMappingConfig.json");

List<string> fileList = ["./TestData/PersonExport.csv"];

var importer = new DataImporter();

var model = importer.ReadModelFromFiles(fileList, config);

Assert.NotNull(model);

Assert.Equal(3, model.Customers.Count);

Assert.Equal("C00006", model.Customers[0].ExternalId);

Assert.Empty(model.Products);

Assert.Empty(model.Purchases);

}

I believe that if your code is easily covered by unit tests, it indicates good design.

Summary

This article is intended to demonstrate the flexibility of using JSONPath to access in-memory models when you cannot predict all possible future use cases.

We discussed a generic model mapping approach, where you can configure a set of mappings between a position in a CSV file and a target model property, and it will be enough to import data to your system.

Thanks for reading.

History

Keep a running update of any changes or improvements you've made here.