Starting today, we begin our journey into understanding the inner workings of neural networks for regression and how to implement them in C#.

Introduction

In recent years, neural networks have gained significant popularity for their ability to solve complex problems and have become a core component of deep learning. While they can be applied to various tasks such as classification and regression, this series will focus specifically on their use in regression. We will explore how to train these models, examining both their strengths and limitations.

Neural networks have already been discussed in previous articles on this website, and we encourage readers to refer to those for a foundational understanding.

The following textbooks proved useful in writing this series.

Deep Learning (Goodfellow, Bengio, Courville)

Deep Learning: Foundations and Concepts (Bishop, Bishop)

Machine Learning: An Algorithmic Perspective (Marsland)

This article was originally published here: Neural networks for regression - a comprehensive overview

What is a neural network ?

Neural networks were introduced to effectively capture non-linear relationships in data, where conventional algorithms like logistic regression struggle to model patterns accurately. While the concept of neural networks is not inherently difficult to grasp, practitioners initially faced challenges in training them (specifically, in finding the optimal parameters that minimize the loss function).

We won't delve deeply into how neural networks work, as we've already covered that in a previous article. For more details, we refer readers to the following post.

Implementing neural networks in C#

It provides an in-depth explanation of why these structures were developed, the problems they address, and how they can outperform some well-known algorithms.

What is regression ?

Regression is a statistical and machine learning technique used to model and predict the relationship between a dependent variable (also known as the target or output) and one or more independent variables (known as features or inputs). The primary goal of regression is to understand how the dependent variable changes when one or more of the independent variables are modified, and to use this relationship to make predictions.

Important

Regression is widely used for prediction tasks where the output is a continuous variable, making it an essential tool in both statistics and machine learning.

We've all encountered regression in school, often without realizing it. Anytime we used a formula to predict a value based on another variable (such as plotting a line through data points in a math or science class), we were practicing basic regression.

These examples from school are fairly simple because they typically involved just one input variable, and we applied linear regression, which was easy to visualize on a graph.

Applying linear regression in this context is quite straightforward (it's a common exercise often assigned to students as homework).
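As a reminder of what that homework exercise computes, here is a minimal sketch of one-variable linear regression using the closed-form least-squares solution. The class and method names are ours, chosen for illustration; they are not part of the code developed later in this article.

```csharp
using System;
using System.Linq;

public static class SimpleLinearRegression
{
    // Fits y ≈ slope * x + intercept by ordinary least squares.
    // Hypothetical helper, not part of the article's code base.
    public static (double Slope, double Intercept) Fit(double[] x, double[] y)
    {
        if (x.Length != y.Length || x.Length < 2)
            throw new ArgumentException("x and y must have the same length (at least 2).");

        var meanX = x.Average();
        var meanY = y.Average();

        var covariance = 0.0; // sum of (x - meanX) * (y - meanY)
        var variance = 0.0;   // sum of (x - meanX)^2
        for (var i = 0; i < x.Length; i++)
        {
            covariance += (x[i] - meanX) * (y[i] - meanY);
            variance += (x[i] - meanX) * (x[i] - meanX);
        }

        if (variance == 0.0)
            throw new ArgumentException("All x values are identical; the slope is undefined.");

        var slope = covariance / variance;
        return (slope, meanY - slope * meanX);
    }
}
```

For the points (0, 1), (1, 3), (2, 5), (3, 7), `Fit` returns a slope of 2 and an intercept of 1.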

However, real-world scenarios are much more complex and often involve hundreds or even thousands of variables. In such cases, visual representation becomes impossible, making it much harder to assess whether our approximation is accurate.

Furthermore, deciding which type of regression to use becomes a critical question. Should we apply linear, quadratic, or polynomial regression ?

What type of regression should we use in this example ? Should we apply a sinusoidal regression or a polynomial one ? Even in this simple case, with only one input, it can be quite challenging to determine exactly which approach to choose.

Neural networks can come to the rescue by attempting to model the underlying function without the need to predefine the type of regression. This flexibility, one of their greatest strengths, allows them to capture complex patterns in the data automatically. We will explore numerous examples throughout this series.

Integrating neural networks with regression

In the case of regression with neural networks, the output activation function is typically the identity function, and we will have only one output. This results in the following final formula, which we will use throughout this article.
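The formula itself is not reproduced in this version of the article. Reconstructed from the C# implementation further down (one hidden layer with activation function h, an identity output activation, a single output), it presumably reads as follows. The notation is our own: D is the number of features, M the number of hidden units, and the superscripts (1) and (2) mark the hidden and output layers.

```latex
y(x, w) = \sum_{j=1}^{M} w^{(2)}_{j} \, h\!\left( \sum_{i=1}^{D} w^{(1)}_{ji} \, x_i + b^{(1)}_{j} \right) + b^{(2)}
```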

The formula may appear slightly simpler; however, the question of how to train the networks remains. Specifically, we need to determine how to find the optimal weights that minimize the loss function. Since this process is highly mathematical, we refer readers interested in the finer details to the following link (presenting mathematical formulas on this site can be quite challenging).

Neural networks for regression - a comprehensive overview

Enough theory, code please !

After covering the intricate details of the backpropagation algorithm, we now move on to practical application by implementing a neural network for regression in C#. We will build on what we dealt with in the previous section and demonstrate how to code a neural network. We'll aim to keep the explanation as concise as possible.

Defining interfaces

In this section, we define a few interfaces that we will need to implement. These interfaces can also be implemented by custom classes in the future, which keeps the design flexible and extensible.

Defining activation functions

Activation functions are a key component of neural networks that introduce non-linearity into the model, allowing it to learn and model complex patterns in the data. Activation functions determine the output of a neuron based on its input.

They play a role both in their natural form and through their derivatives, as derivatives are essential for calculating gradients during the backpropagation process. Therefore, we will define the following contract (interface) for them.

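    public interface IActivationFunction
    {
        // Value of the activation function at the given input.
        double Evaluate(double input);

        // Value of its derivative at the same input (needed for backpropagation).
        double EvaluateDerivative(double input);
    }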

An example of an activation function is the tanh (hyperbolic tangent) function.

    public class TanhActivationFunction : IActivationFunction
    {
        public double Evaluate(double input)
        {
            return Math.Tanh(input);
        }

        // d/dx tanh(x) = 1 - tanh(x)^2
        public double EvaluateDerivative(double input)
        {
            var t = Math.Tanh(input);
            return 1.0 - t * t;
        }
    }

Since we are also working with regression, we will need the identity activation function.

    public class IdentityActivationFunction : IActivationFunction
    {
        public double Evaluate(double input)
        {
            return input;
        }

        public double EvaluateDerivative(double input)
        {
            return 1.0;
        }
    }
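Because backpropagation relies on the derivative being correct, it can be worth checking an activation function's EvaluateDerivative against a numerical approximation. The sketch below does so with a central finite difference. The class `DerivativeCheck` and its methods are hypothetical helpers of ours; they take plain delegates so that the example stays self-contained.

```csharp
using System;

public static class DerivativeCheck
{
    // Central finite-difference approximation of f'(x).
    public static double NumericalDerivative(Func<double, double> f, double x, double h = 1e-6)
    {
        return (f(x + h) - f(x - h)) / (2 * h);
    }

    // Largest gap between the analytic and the numerical derivative over the given points.
    public static double MaxError(Func<double, double> f, Func<double, double> derivative, double[] points)
    {
        var worst = 0.0;
        foreach (var x in points)
            worst = Math.Max(worst, Math.Abs(derivative(x) - NumericalDerivative(f, x)));
        return worst;
    }
}
```

With the classes above, one would pass `activation.Evaluate` and `activation.EvaluateDerivative` as the two delegates; a result well below the finite-difference accuracy (say, under 1e-6) suggests the two agree.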

Defining algorithms for training

For a neural network to be effective, we need to determine the weights that minimize the cost function. To accomplish this, various techniques can be used, and we can define the following contract to guide the process.

    public interface IANNTrainer
    {
        void Train(ANNForRegression ann, DataSet set);
    }

We will now implement a gradient descent algorithm, using the derivatives computed through the backpropagation algorithm (as discussed in the previous post).

    public class GradientDescentANNTrainer : IANNTrainer
    {
        private ANNForRegression _ann;

        public void Train(ANNForRegression ann, DataSet set)
        {
            _ann = ann;
            Fit(set);
        }

        #region Private Methods

        private void Fit(DataSet set)
        {
            var numberOfHiddenUnits = _ann.NumberOfHiddenUnits;
            var numberOfFeatures = _ann.NumberOfFeatures;

            var a = new double[numberOfHiddenUnits];     // hidden pre-activations
            var z = new double[numberOfHiddenUnits];     // hidden activations
            var delta = new double[numberOfHiddenUnits]; // errors backpropagated to the hidden units

            var nu = 0.1; // learning rate

            // Initialize all weights and biases uniformly at random in [0, 1).
            var rnd = new Random();
            for (var j = 0; j < numberOfHiddenUnits; j++)
            {
                for (var i = 0; i < numberOfFeatures; i++)
                    _ann.HiddenWeights[j, i] = rnd.NextDouble();

                _ann.HiddenBiasesWeights[j] = rnd.NextDouble();
                _ann.OutputWeights[j] = rnd.NextDouble();
            }
            _ann.OutputBiasesWeights = rnd.NextDouble();

            // 10,000 passes over the dataset; the weights are updated after every record.
            for (var n = 0; n < 10000; n++)
            {
                foreach (var record in set.Records)
                {
                    // Forward pass through the hidden layer.
                    for (var j = 0; j < numberOfHiddenUnits; j++)
                    {
                        a[j] = 0.0;
                        for (var i = 0; i < numberOfFeatures; i++)
                        {
                            var feature = set.Features[i];
                            a[j] += _ann.HiddenWeights[j, i] * record.Data[feature];
                        }
                        a[j] += _ann.HiddenBiasesWeights[j];
                        z[j] = _ann.HiddenActivationFunction.Evaluate(a[j]);
                    }

                    // Output unit: identity activation, so the prediction is the weighted sum itself.
                    var y = 0.0;
                    for (var j = 0; j < numberOfHiddenUnits; j++)
                        y += _ann.OutputWeights[j] * z[j];
                    y += _ann.OutputBiasesWeights;

                    // Error at the output, then backpropagated to each hidden unit
                    // (computed before the output weights are updated).
                    var d = y - record.Target;
                    for (var j = 0; j < numberOfHiddenUnits; j++)
                        delta[j] = d * _ann.OutputWeights[j] * _ann.HiddenActivationFunction.EvaluateDerivative(a[j]);

                    // Gradient step on the output layer.
                    for (var j = 0; j < numberOfHiddenUnits; j++)
                        _ann.OutputWeights[j] -= nu * d * z[j];
                    _ann.OutputBiasesWeights -= nu * d;

                    // Gradient step on the hidden layer.
                    for (var j = 0; j < numberOfHiddenUnits; j++)
                    {
                        for (var i = 0; i < numberOfFeatures; i++)
                        {
                            var feature = set.Features[i];
                            _ann.HiddenWeights[j, i] -= nu * delta[j] * record.Data[feature];
                        }
                        _ann.HiddenBiasesWeights[j] -= nu * delta[j];
                    }
                }
            }
        }

        #endregion
    }
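Read as formulas, each pass of the inner loop of Fit performs one stochastic gradient descent step on the error of a single record. The notation below is our own: x is the record's feature vector, t its target, h the hidden activation function, ν the learning rate `nu`, and the superscripts (1) and (2) mark the hidden and output layers. The factor 1/2 in the error is an assumption on our part, chosen so that the derivative with respect to y equals the quantity `d` used in the code.

```latex
\begin{aligned}
a_j &= \sum_{i=1}^{D} w^{(1)}_{ji} x_i + b^{(1)}_j, \qquad
z_j = h(a_j), \qquad
y = \sum_{j=1}^{M} w^{(2)}_j z_j + b^{(2)} \\
E &= \tfrac{1}{2}\,(y - t)^2, \qquad
d = \frac{\partial E}{\partial y} = y - t \\
\delta_j &= \frac{\partial E}{\partial a_j} = d \, w^{(2)}_j \, h'(a_j) \\
w^{(2)}_j &\leftarrow w^{(2)}_j - \nu \, d \, z_j, \qquad
b^{(2)} \leftarrow b^{(2)} - \nu \, d \\
w^{(1)}_{ji} &\leftarrow w^{(1)}_{ji} - \nu \, \delta_j \, x_i, \qquad
b^{(1)}_j \leftarrow b^{(1)}_j - \nu \, \delta_j
\end{aligned}
```

As in the code, every δ_j is computed with the output weights as they were before the update.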

Defining the neural network

With these interfaces defined, implementing a neural network becomes a fairly straightforward task.

    // Predict uses ElementAt, which requires: using System.Linq;
    public class ANNForRegression
    {
        public double[,] HiddenWeights { get; set; }
        public double[] HiddenBiasesWeights { get; set; }
        public double[] OutputWeights { get; set; }
        public double OutputBiasesWeights { get; set; }
        public int NumberOfFeatures { get; set; }
        public int NumberOfHiddenUnits { get; set; }
        public IActivationFunction HiddenActivationFunction { get; set; }
        public IANNTrainer Trainer { get; set; }

        public ANNForRegression(int numberOfFeatures, int numberOfHiddenUnits, IActivationFunction hiddenActivationFunction, IANNTrainer trainer)
        {
            NumberOfFeatures = numberOfFeatures;
            NumberOfHiddenUnits = numberOfHiddenUnits;
            HiddenActivationFunction = hiddenActivationFunction;
            Trainer = trainer;

            HiddenWeights = new double[NumberOfHiddenUnits, NumberOfFeatures];
            HiddenBiasesWeights = new double[NumberOfHiddenUnits];

            // Only the first NumberOfHiddenUnits entries are used here;
            // the output bias is stored separately in OutputBiasesWeights.
            OutputWeights = new double[NumberOfHiddenUnits + 1];
        }

        public void Train(DataSet set)
        {
            Trainer.Train(this, set);
        }

        public double Predict(DataToPredict record)
        {
            var a = new double[NumberOfHiddenUnits]; // hidden pre-activations
            var z = new double[NumberOfHiddenUnits]; // hidden activations

            // Hidden layer. Relies on record.Data enumerating the features
            // in the same order as the one used during training.
            for (var j = 0; j < NumberOfHiddenUnits; j++)
            {
                a[j] = 0.0;
                for (var i = 0; i < NumberOfFeatures; i++)
                {
                    var data = record.Data.ElementAt(i);
                    a[j] += HiddenWeights[j, i] * data.Value;
                }
                a[j] += HiddenBiasesWeights[j];
                z[j] = HiddenActivationFunction.Evaluate(a[j]);
            }

            // Output unit with identity activation.
            var b = 0.0;
            for (var j = 0; j < NumberOfHiddenUnits; j++)
                b += OutputWeights[j] * z[j];
            b += OutputBiasesWeights;

            return b;
        }
    }

This code includes two notable methods: Train and Predict. The Train method allows us to train the neural network using a training algorithm, while the Predict method enables us to make predictions on previously unseen values.

That's enough about the code for now. It's time to see it in action: we will now explore how well a neural network can approximate a few different functions.

x↦x²

Our goal is to verify that a neural network can approximate the function x↦x².

Defining the dataset

Our dataset consists of one input and one output, specifically modeling the function x↦x². Data points are uniformly sampled for x over the interval [−1,1], and noise has been added to the corresponding values.
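A dataset of this kind could be generated along the following lines. This is only a sketch under assumptions: the article does not state how many points were sampled or what kind of noise was applied, so the uniform noise, the `noiseAmplitude` parameter, the seed and the "X,Y" CSV header are all hypothetical, as is the file format expected by DataSet.Load.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class ToyDataset
{
    // Samples x uniformly on [-1, 1) and returns (x, f(x) + noise) pairs.
    // Assumption: the noise is uniform on [-noiseAmplitude, noiseAmplitude).
    public static List<(double X, double Y)> Generate(Func<double, double> f, int count, double noiseAmplitude, int seed)
    {
        var rnd = new Random(seed);
        var points = new List<(double X, double Y)>(count);
        for (var n = 0; n < count; n++)
        {
            var x = 2.0 * rnd.NextDouble() - 1.0;
            var noise = noiseAmplitude * (2.0 * rnd.NextDouble() - 1.0);
            points.Add((x, f(x) + noise));
        }
        return points;
    }

    // Formats the points as CSV lines under a hypothetical "X,Y" header.
    public static IEnumerable<string> ToCsvLines(IEnumerable<(double X, double Y)> points)
    {
        yield return "X,Y";
        foreach (var p in points)
            yield return string.Format(CultureInfo.InvariantCulture, "{0},{1}", p.X, p.Y);
    }
}
```

For example, `ToyDataset.Generate(x => x * x, 100, 0.05, 42)` produces 100 noisy samples of x↦x².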

Our objective is to predict values for inputs that have not been seen before.

Training the neural network

We will start by using a neural network with ten hidden units.

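    using System;
    using System.Collections.Generic;
    using System.IO;

    internal class Program
    {
        static void Main(string[] args)
        {
            // Load the training data located next to the executable.
            var path = Path.Combine(AppContext.BaseDirectory, "dataset01.csv");
            var dataset = DataSet.Load(path);
            var numberOfFeatures = dataset.Features.Count;

            // One hidden layer of 10 tanh units, trained by gradient descent.
            var hiddenActivation = new TanhActivationFunction();
            var trainer = new GradientDescentANNTrainer();
            var ann = new ANNForRegression(numberOfFeatures, 10, hiddenActivation, trainer);
            ann.Train(dataset);

            // Predict the output for an input that is not in the training set.
            var p = new DataToPredict()
            {
                Data = new Dictionary<string, double>
                {
                    { "X", 0.753 }
                }
            };
            var res = ann.Predict(p);
            Console.WriteLine(res);
        }
    }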

Here is what the neural network determines for the labeled values.

We can see that the neural network successfully identifies the underlying function. Now, let's predict an unseen value, such as 0.753.

The neural network predicts 0.5781, while the expected value is 0.5670.

x↦cos(6x)

Our goal is to verify that a neural network can approximate the function x↦cos(6x).

Defining the dataset

Our dataset consists of one input and one output, specifically modeling the function x↦cos(6x). Data points are uniformly sampled for x over the interval [−1,1], and noise has been added to the corresponding values.

Our objective is to predict values for inputs that have not been seen before.

Training the neural network

We will start by using a neural network with ten hidden units.

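    using System;
    using System.Collections.Generic;
    using System.IO;

    internal class Program
    {
        static void Main(string[] args)
        {
            // Load the training data located next to the executable.
            var path = Path.Combine(AppContext.BaseDirectory, "dataset02.csv");
            var dataset = DataSet.Load(path);
            var numberOfFeatures = dataset.Features.Count;

            // One hidden layer of 10 tanh units, trained by gradient descent.
            var hiddenActivation = new TanhActivationFunction();
            var trainer = new GradientDescentANNTrainer();
            var ann = new ANNForRegression(numberOfFeatures, 10, hiddenActivation, trainer);
            ann.Train(dataset);

            // Predict the output for an input that is not in the training set.
            var p = new DataToPredict()
            {
                Data = new Dictionary<string, double>
                {
                    { "X", 0.753 }
                }
            };
            var res = ann.Predict(p);
            Console.WriteLine(res);
        }
    }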

Here is what the neural network determines for the labeled values.

We can see that the neural network successfully identifies the underlying function. Now, let's predict an unseen value, such as 0.753.

The neural network predicts −0.1512, while the expected value is −0.1932.

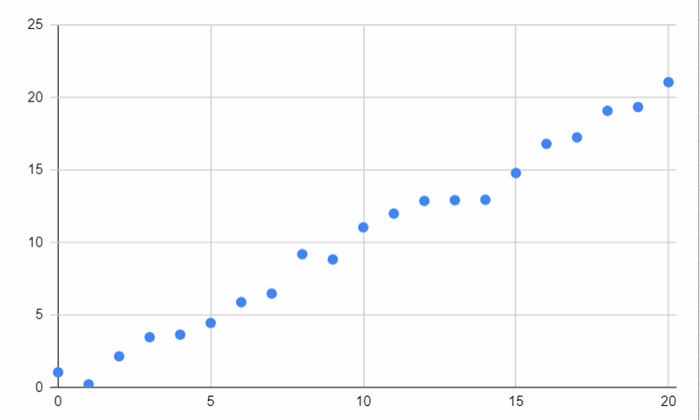

x↦H(x)

Our goal is to verify that a neural network can approximate the Heaviside function.

Defining the dataset

Our dataset consists of one input and one output, specifically modeling the Heaviside function. Data points are uniformly sampled for x over the interval [−1,1], and noise has been added to the corresponding values.
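As a reminder, the Heaviside step function jumps from 0 to 1 at the origin. Its value at exactly x = 0 depends on the convention; the one written below (H(0) = 1) is an assumption on our part, since the article does not say which one was used to build the dataset.

```latex
H(x) =
\begin{cases}
0 & \text{if } x < 0 \\
1 & \text{if } x \geq 0
\end{cases}
```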

Our objective is to predict values for inputs that have not been seen before.

Training the neural network

We will start by using a neural network with ten hidden units.

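    using System;
    using System.Collections.Generic;
    using System.IO;

    internal class Program
    {
        static void Main(string[] args)
        {
            // Load the training data located next to the executable.
            var path = Path.Combine(AppContext.BaseDirectory, "dataset04.csv");
            var dataset = DataSet.Load(path);
            var numberOfFeatures = dataset.Features.Count;

            // One hidden layer of 10 tanh units, trained by gradient descent.
            var hiddenActivation = new TanhActivationFunction();
            var trainer = new GradientDescentANNTrainer();
            var ann = new ANNForRegression(numberOfFeatures, 10, hiddenActivation, trainer);
            ann.Train(dataset);

            // Predict the output for an input that is not in the training set.
            var p = new DataToPredict()
            {
                Data = new Dictionary<string, double>
                {
                    { "X", 0.753 }
                }
            };
            var res = ann.Predict(p);
            Console.WriteLine(res);
        }
    }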

Here is what the neural network determines for the labeled values.

We can see that the neural network successfully identifies the underlying function. Now, let's predict an unseen value, such as 0.753.

The neural network predicts 0.9934, while the expected value is 1.

From these various examples, we can see that neural networks are well-suited for approximating a range of functions, including some that are not even continuous. However, we will now explore the weaknesses of these models.

How many hidden units should we use ?

In the previous examples, we arbitrarily used ten hidden units for our neural network. But is this a suitable value ? To determine that, we will experiment with different values and see whether the function is still accurately approximated.

Information

We will use the function x↦cos(6x) for our experiments.

With 2 hidden units

We will begin our experiment by using only two hidden units to see how the neural network behaves.

It is clear that the approximation is quite poor, indicating that the network lacks sufficient flexibility to accurately model the function. This phenomenon is referred to as underfitting.

With 4 hidden units

We will conduct the same experiment, but this time with four hidden units.

It is clear that the approximation is still quite poor.

With 6 hidden units

We will conduct the same experiment, but this time with six hidden units.

The approximation is now very accurate; however, this example illustrates that it can be quite challenging to determine the optimal number of hidden units. In our case, it was relatively easy to determine if the approximation was good; however, in real-world scenarios with hundreds or thousands of dimensions, this becomes much more complicated. Identifying the optimal number of hidden units is more of an art than a science.
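When the fit can no longer be judged by eye, one common approach (not the one used in this article, where the curves were inspected visually) is to hold out part of the data and keep the network size with the lowest error on it. The sketch below illustrates the idea for a single input. `HiddenUnitSelection` and the `trainModel` hook are hypothetical: `trainModel` stands for whatever code trains a network with the given number of hidden units and returns its prediction function.

```csharp
using System;
using System.Collections.Generic;

public static class HiddenUnitSelection
{
    // Mean squared error of a predictor on a list of (x, target) pairs.
    public static double MeanSquaredError(Func<double, double> predict, IReadOnlyList<(double X, double Target)> data)
    {
        if (data.Count == 0)
            throw new ArgumentException("data must not be empty.");

        var sum = 0.0;
        foreach (var (x, target) in data)
        {
            var error = predict(x) - target;
            sum += error * error;
        }
        return sum / data.Count;
    }

    // Trains one model per candidate size and returns the size with the lowest validation error.
    public static int SelectBest(
        IEnumerable<int> candidates,
        Func<int, Func<double, double>> trainModel, // hypothetical training hook
        IReadOnlyList<(double X, double Target)> validation)
    {
        var best = -1;
        var bestError = double.PositiveInfinity;
        foreach (var units in candidates)
        {
            var error = MeanSquaredError(trainModel(units), validation);
            if (error < bestError)
            {
                bestError = error;
                best = units;
            }
        }

        if (best < 0)
            throw new ArgumentException("No candidate produced a finite validation error.");
        return best;
    }
}
```

The validation records must not be used for training; otherwise a larger network will tend to look better simply because it has memorized them.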

Conclusion

If there are not enough hidden units, there is a risk of encountering the underfitting phenomenon. Conversely, if there are too many hidden units, we may face overfitting or consume excessive resources.

In the previous post, we examined how a neural network can interpolate unseen values. We specifically looked at the value 0.753 and found that the prediction was relatively accurate. This raises the question of whether a neural network can also extrapolate, that is, predict the output for an input outside the initial interval. In our case, what would the predicted value be for x = 2, for example ?

Information

Once again, we will use the function x↦cos(6x) for our experiments and set the number of hidden units to 6.

The neural network predicts 1.2397, while the expected value is 0.8438.

This example shows that the neural network cannot be relied on to extrapolate: outside the interval it was trained on, its prediction is far from the expected value. This has significant implications: to obtain accurate predictions for all our inputs, the dataset must be complete, that is, it must contain representative values across the full range of every feature. Otherwise, the network may produce unreliable predictions.
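One practical consequence is that it can be useful to flag inputs that fall outside the range seen during training before trusting a prediction. The sketch below records the minimum and maximum of each feature and reports the features of a new input that lie outside those bounds. `FeatureRange` is a hypothetical helper of ours, working on plain dictionaries rather than on the DataSet and DataToPredict classes, whose definitions are not shown in this article.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class FeatureRange
{
    private readonly Dictionary<string, (double Min, double Max)> _bounds =
        new Dictionary<string, (double Min, double Max)>();

    // Records the smallest and largest value seen for each feature in the training records.
    public FeatureRange(IEnumerable<IReadOnlyDictionary<string, double>> trainingRecords)
    {
        foreach (var record in trainingRecords)
        {
            foreach (var pair in record)
            {
                if (_bounds.TryGetValue(pair.Key, out var b))
                    _bounds[pair.Key] = (Math.Min(b.Min, pair.Value), Math.Max(b.Max, pair.Value));
                else
                    _bounds[pair.Key] = (pair.Value, pair.Value);
            }
        }
    }

    // Names of the features that are unknown or whose value lies outside the training range.
    public List<string> OutOfRange(IReadOnlyDictionary<string, double> input)
    {
        return input
            .Where(p => !_bounds.TryGetValue(p.Key, out var b) || p.Value < b.Min || p.Value > b.Max)
            .Select(p => p.Key)
            .ToList();
    }
}
```

With training values of X spanning [−1,1], the input X = 0.753 is reported as in range, while X = 2 is flagged. Note that this per-feature check is only a coarse guard: with several features, an input can lie inside every individual range and still be far from all training points.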

Now that we have explored neural networks for regression in depth on toy examples, we will examine how they can be applied in a real-world scenario. To avoid overloading this article, readers interested in this implementation can find the continuation here.