A complete explanation for the totally lost, part 1 of 2.

Contents

- Overview

- Neural Networks

- AForge Framework

- Network training as a function optimization problem

- Levenberg-Marquardt

- Computing the Jacobian

- Approximating the Hessian

- Solving the Levenberg-Marquardt equation

- General Algorithm

- Limitations

- Bayesian Regularization

- Expanded Levenberg-Marquardt Algorithm

- Source Code

- Using the Code

- Sample Applications

- Additional Notes

- References

The problem of neural network learning can be seen as a function optimization problem, where we are trying to determine the best network parameters (weights and biases) in order to minimize network error. This said, several function optimization techniques from numerical linear algebra can be directly applied to network learning, one of these techniques being the Levenberg-Marquardt algorithm.

The Levenberg–Marquardt algorithm provides a numerical solution to the problem of minimizing a (generally nonlinear) function, over a space of parameters for the function. It is a popular alternative to the Gauss-Newton method of finding the minimum of a function.

Neural networks are a relatively new artificial intelligence technique. In most cases an artificial neural network (ANN) is an adaptive system that changes its structure based on external or internal information that flows through the network during the learning phase. The learning procedure tries to find a set of connection weights w that gives a mapping that fits the training set well.

Furthermore, neural networks can be viewed as highly nonlinear functions with the basic form:

$$ y = F(x, w) \tag{1} $$

Where x is the input vector presented to the network, w are the weights of the network, and y is the corresponding output vector approximated or predicted by the network. The weight vector w is commonly ordered first by layer, then by neurons, and finally by the weights of each neuron plus its bias.

This view of the network as a parameterized function will be the basis for applying standard function optimization methods to solve the problem of neural network training.
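To make the "network as a function of x and w" view concrete, here is a minimal sketch of a fully connected network evaluated from a flat weight vector ordered by layer, then by neuron, then by that neuron's weights plus its bias. The function names, the `layers` parameter, and the tanh activation are assumptions chosen for illustration; they are not taken from the AForge.NET API.

```python
import math


def forward(x, w, layers):
    """Evaluate y = F(x, w) for a fully connected network.

    `layers` lists the neuron count of each layer (hypothetical parameter).
    `w` is a flat list ordered by layer, then by neuron, then by that
    neuron's input weights followed by its bias.
    """
    activations = list(x)
    pos = 0  # read position inside the flat weight vector
    for n_neurons in layers:
        n_inputs = len(activations)
        outputs = []
        for _ in range(n_neurons):
            weights = w[pos:pos + n_inputs]
            bias = w[pos + n_inputs]
            pos += n_inputs + 1
            s = sum(wi * ai for wi, ai in zip(weights, activations)) + bias
            outputs.append(math.tanh(s))  # tanh activation is an assumption
        activations = outputs
    return activations


def count_parameters(n_inputs, layers):
    """Total number of weights and biases (W) for the given topology."""
    total = 0
    for n_neurons in layers:
        total += n_neurons * (n_inputs + 1)
        n_inputs = n_neurons
    return total
```

For example, a network with 2 inputs, 3 hidden neurons and 1 output neuron has 3·(2+1) + 1·(3+1) = 13 adjustable parameters, so `w` would be a list of 13 numbers.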

AForge.NET Framework is a C# framework designed for developers and researchers in the fields of Computer Vision and Artificial Intelligence. Here, the Levenberg-Marquardt learning algorithm is implemented as a class implementing the ISupervisedLearning interface from the AForge framework.

As mentioned previously, neural networks can be viewed as highly nonlinear functions. From this perspective, the training problem can be considered as a general function optimization problem, with the adjustable parameters being the weights and biases of the network, and the Levenberg-Marquardt algorithm can be applied to it in a straightforward way.

The Levenberg-Marquardt algorithm is a very simple, but robust, method for approximating a function. Basically, it consists of solving the equation:

$$ (J^T J + \lambda I)\,\delta = J^T E \tag{2} $$

Where J is the Jacobian matrix for the system, λ is Levenberg's damping factor, δ is the weight update vector that we want to find, and E is the error vector containing the output errors for each input vector used in training the network. The vector δ tells us by how much we should change our network weights to achieve a (possibly) better solution. The matrix JᵀJ is also known as the approximated Hessian.

The λ damping factor is adjusted at each iteration, and guides the optimization process. If reduction of E is rapid, a smaller value can be used, bringing the algorithm closer to the Gauss–Newton algorithm, whereas if an iteration gives insufficient reduction in the residual, λ can be increased, giving a step closer to the gradient descent direction.

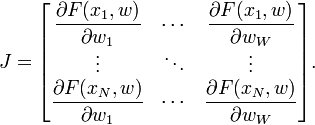
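The two limiting cases of the damping factor can be written out explicitly. The following is a short sketch, assuming the update equation (JᵀJ + λI)δ = JᵀE, an invertible JᵀJ, and the sign convention E = target - output (so that JᵀE points along the negative gradient of the squared error). These conventions are assumptions for the sake of the example:

$$
\begin{aligned}
\lambda \to 0: &\quad \delta \to (J^T J)^{-1} J^T E && \text{(Gauss-Newton step)} \\
\lambda \gg \lVert J^T J \rVert: &\quad \delta \approx \tfrac{1}{\lambda}\, J^T E && \text{(short gradient descent step)}
\end{aligned}
$$

A large λ therefore does two things at once: it turns the step towards the gradient descent direction and it shrinks the step length by roughly a factor of 1/λ.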

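The Jacobian is a matrix of all first-order partial derivatives of a vector-valued function. In the neural network case, it is an N-by-W matrix, where N is the number of entries in our training set and W is the total number of parameters (weights + biases) of our network. It can be created by taking the partial derivatives of each output with respect to each weight, and has the form:

$$
J = \begin{bmatrix}
\dfrac{\partial F(x_1, w)}{\partial w_1} & \cdots & \dfrac{\partial F(x_1, w)}{\partial w_W} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial F(x_N, w)}{\partial w_1} & \cdots & \dfrac{\partial F(x_N, w)}{\partial w_W}
\end{bmatrix}
$$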

Where F(xi, w) is the network function evaluated for the i-th input vector of the training set using the weight vector w and wj is the j-th element of the weight vector w of the network.

In traditional Levenberg-Marquardt implementations, the Jacobian is approximated by using finite differences. However, for neural networks, it can be computed very efficiently by using the chain rule of calculus and the first derivatives of the activation functions.
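The two ways of obtaining the Jacobian can be compared on a toy model. The sketch below assumes a hypothetical two-parameter model F(x, w) = w0·tanh(w1·x) with a single scalar output; the function names are illustrative only. The analytic version applies the chain rule by hand, and the numerical version uses central finite differences.

```python
import math


def model(x, w):
    """Hypothetical toy model with two parameters: w[0] * tanh(w[1] * x)."""
    return w[0] * math.tanh(w[1] * x)


def analytic_jacobian(inputs, w):
    """Rows of dF(x_i, w)/dw_j obtained with the chain rule."""
    jac = []
    for x in inputs:
        t = math.tanh(w[1] * x)
        # dF/dw0 = tanh(w1*x);  dF/dw1 = w0 * (1 - tanh^2(w1*x)) * x
        jac.append([t, w[0] * (1.0 - t * t) * x])
    return jac


def numerical_jacobian(f, inputs, w, h=1e-6):
    """Approximate the same N-by-W matrix with central differences."""
    jac = []
    for x in inputs:
        row = []
        for j in range(len(w)):
            w_plus = list(w)
            w_minus = list(w)
            w_plus[j] += h
            w_minus[j] -= h
            row.append((f(x, w_plus) - f(x, w_minus)) / (2.0 * h))
        jac.append(row)
    return jac
```

The finite-difference version needs two extra model evaluations per parameter and per training sample, which is one reason the chain-rule approach is usually preferred for networks with many weights.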

For the least-squares problem, the Hessian generally doesn't need to be calculated. As stated earlier, it can be approximated by using the Jacobian matrix with the formula:

$$ H \approx J^T J \tag{3} $$

This is a very good approximation of the Hessian if the residual errors at the solution are "small". If the residuals are not sufficiently small at the solution, this approach may result in slow convergence. The Hessian can also be used to apply regularization to the learning process, which will be discussed later.
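As a small illustration, the approximate Hessian JᵀJ and the error gradient JᵀE can both be accumulated directly from the rows of the Jacobian. This is a plain-Python sketch with illustrative function names; a real implementation would normally rely on a matrix library.

```python
def approximate_hessian(jac):
    """Return H = J^T J for an N-by-W Jacobian given as a list of rows."""
    n_params = len(jac[0])
    return [[sum(row[a] * row[b] for row in jac) for b in range(n_params)]
            for a in range(n_params)]


def error_gradient(jac, errors):
    """Return g = J^T E, where `errors` holds one residual per sample."""
    n_params = len(jac[0])
    return [sum(row[a] * e for row, e in zip(jac, errors))
            for a in range(n_params)]
```

The result of `approximate_hessian` is a symmetric W-by-W matrix, regardless of how many training samples N were used to build the Jacobian.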

Levenberg's main contribution to the method was the introduction of the damping factor λ. This value is added to every element of the approximate Hessian's diagonal before the system is solved for the weight update δ. Typically, λ would start as a small value such as 0.1.

Then, the Levenberg-Marquardt equation is solved, commonly by using an LU decomposition. However, the system can only be solved this way if the approximated Hessian has not become singular (that is, if it still has an inverse). If it has become singular, the equation can still be solved by using a singular value decomposition (SVD).
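The sketch below solves the damped system (H + λI)δ = g using Gaussian elimination with partial pivoting, which carries out the same row operations as an LU factorization. It is not an SVD-based solver: when a pivot vanishes it simply raises an error, and the function name and the pivot tolerance are assumptions made for this example.

```python
def solve_damped(hessian, gradient, lam, tol=1e-12):
    """Solve (H + lam * I) delta = g by Gaussian elimination with pivoting."""
    n = len(gradient)
    # Build the augmented matrix [H + lam * I | g].
    a = [[hessian[r][c] + (lam if r == c else 0.0) for c in range(n)]
         + [gradient[r]] for r in range(n)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < tol:
            raise ValueError("damped Hessian is (numerically) singular")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    # Back substitution on the upper triangular system.
    delta = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(a[r][c] * delta[c] for c in range(r + 1, n))
        delta[r] = (a[r][n] - s) / a[r][r]
    return delta
```

Note that a singular H such as [[1, 1], [1, 1]] fails with λ = 0 but becomes solvable as soon as a positive λ is added to its diagonal, which is part of what makes the damping factor useful in practice.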

After the equation is solved, the weights w are updated using δ and network errors for each entry in the training set are recalculated. If the new sum of squared errors has decreased, λ is decreased and the iteration ends. If it has not, then the new weights are discarded and the method is repeated with a higher value for λ.

This adjustment for λ is done by using an adjustment factor v, usually defined as 10. If λ needs to increase, it is multiplied by v. If it needs to decrease, then it is divided by v. The process is repeated until the error decreases. When this happens, the current iteration ends.

As stated earlier, the Levenberg-Marquardt algorithm basically consists of solving (2) with different λ values until the sum of squared errors decreases. So, each learning iteration (epoch) will consist of the following basic steps:

1. Compute the Jacobian (by using finite differences or the chain rule)

2. Compute the error gradient
   - g = JᵀE

3. Approximate the Hessian using the cross product Jacobian (eq. 3)
   - H = JᵀJ

4. Solve (H + λI)δ = g to find δ

5. Update the network weights w using δ

6. Recalculate the sum of squared errors using the updated weights

7. If the sum of squared errors has not decreased,
   - Discard the new weights, increase λ using v and go to step 4.

8. Else decrease λ using v and stop.
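The steps above can be sketched end to end on a deliberately tiny problem. The example assumes a hypothetical one-neuron network F(x, w) = tanh(w0·x + w1), the sign convention E = target - output with the update w ← w + δ, a hand-written 2×2 solve, and an upper limit `lam_max` on λ. None of these names or values come from the accompanying source code.

```python
import math


def model(x, w):
    """One-neuron network: a single weight w[0] and a bias w[1]."""
    return math.tanh(w[0] * x + w[1])


def jacobian_row(x, w):
    """Chain rule: d tanh(s)/dw = (1 - tanh(s)^2) * ds/dw."""
    d = 1.0 - model(x, w) ** 2
    return [d * x, d]


def sum_squared_error(xs, ys, w):
    return sum((y - model(x, w)) ** 2 for x, y in zip(xs, ys))


def lm_epoch(xs, ys, w, lam, v=10.0, lam_max=1e10):
    """Run one epoch and return (weights, lam, sse)."""
    errors = [y - model(x, w) for x, y in zip(xs, ys)]
    jac = [jacobian_row(x, w) for x in xs]                        # step 1
    g = [sum(r[a] * e for r, e in zip(jac, errors))               # step 2
         for a in range(2)]
    h = [[sum(r[a] * r[b] for r in jac) for b in range(2)]        # step 3
         for a in range(2)]
    old_sse = sum(e * e for e in errors)
    while lam <= lam_max:
        # Step 4: solve the 2x2 system (H + lam * I) delta = g directly.
        a, b, c, d = h[0][0] + lam, h[0][1], h[1][0], h[1][1] + lam
        det = a * d - b * c
        delta = [(d * g[0] - b * g[1]) / det, (a * g[1] - c * g[0]) / det]
        candidate = [w[0] + delta[0], w[1] + delta[1]]            # step 5
        new_sse = sum_squared_error(xs, ys, candidate)            # step 6
        if new_sse < old_sse:
            return candidate, lam / v, new_sse   # accept, decrease lam
        lam *= v                                 # reject, increase lam
    return w, lam, old_sse  # lam grew too large: give up on this epoch


# Noise-free training data generated from the weights (1.5, -0.5).
xs = [-2.0 + 0.5 * i for i in range(9)]
ys = [math.tanh(1.5 * x - 0.5) for x in xs]

w, lam = [0.1, 0.1], 0.1
initial_sse = sum_squared_error(xs, ys, w)
final_sse = initial_sse
for epoch in range(50):
    w, lam, final_sse = lm_epoch(xs, ys, w, lam)
    if final_sse < 1e-12 or lam > 1e10:
        break
```

Because a candidate step is only accepted when it lowers the sum of squared errors, the error never increases from one epoch to the next; the price is that a single epoch may need several solves with different λ values.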

Variations of the algorithm may use two different values for v, one for decreasing λ and another for increasing it. Others may solve (H + λdiag(H))δ = g instead of (H + λI)δ = g (2), while others may select the initial λ according to the size of the elements of H, by setting λ0 = t·max(diag(H)), where t is a value chosen by the user. I've chosen the identity matrix equation because, apparently, it is the same method implemented internally by the Neural Network Toolbox in MATLAB.
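The difference between these variations is small in code. The following sketch shows the two damping forms and the scaled choice of the initial λ; the function names and the default value of `t` are assumptions for illustration only.

```python
def damp_identity(h, lam):
    """Return H + lam * I: the same amount is added to every diagonal entry."""
    n = len(h)
    return [[h[r][c] + (lam if r == c else 0.0) for c in range(n)]
            for r in range(n)]


def damp_diagonal(h, lam):
    """Return H + lam * diag(H): each diagonal entry is scaled by (1 + lam)."""
    n = len(h)
    return [[h[r][c] * (1.0 + lam) if r == c else h[r][c] for c in range(n)]
            for r in range(n)]


def initial_lambda(h, t=1e-3):
    """Return lam0 = t * max(diag(H)); t is chosen by the user."""
    return t * max(h[i][i] for i in range(len(h)))
```

With the diagonal form, parameters whose diagonal Hessian entries are large receive proportionally more damping, whereas the identity form damps every parameter by the same absolute amount.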

We can see we will have a problem if the error does not decrease after some iterations. To handle this case, the algorithm also stops if λ becomes too large.

The Levenberg-Marquardt algorithm is very sensitive to the initial network weights. Also, it does not account for outliers in the data, which may lead to overfitting noise. To avoid those situations, we can use a technique known as regularization.

In the next part of this article (part 2), we'll discuss more about Bayesian regularization. We will also present and demonstrate the usage of the article's accompanying source code.

Click to go to Neural Network Learning by the Levenberg-Marquardt Algorithm (part 2).
