What is libconhash

libconhash is a consistent hashing library which can be compiled both on Windows and Linux platforms, with the following features:

- High performance and easy to use: libconhash manages all nodes in a red-black tree, which keeps lookups fast.

- By default, it uses the MD5 algorithm, but it also supports user-defined hash functions.

- Easy to scale according to the node's processing capacity.

Consistent hashing

Why you need consistent hashing

First, consider the common way to do load balancing. The number of the machine chosen to cache object o will be:

hash(o) mod n

Here, n is the total number of cache machines. This works well until you add or remove cache machines:

- When you add a cache machine, then object o will be cached into the machine:

hash(o) mod (n+1)

- When you remove a cache machine, then object o will be cached into the machine:

hash(o) mod (n-1)

So you can see that almost all objects will be hashed to a new location. This is a disaster, because the originating content servers are swamped with requests from the cache machines. This is why you need consistent hashing.
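The effect is easy to measure. The following is a minimal sketch, not part of libconhash: `toy_hash` is an illustrative integer mixer standing in for hash(o), and `count_moved` is a hypothetical helper that counts how many objects change machine when the pool size changes.

```c
#include <stdint.h>
#include <stddef.h>

/* Illustrative stand-in for hash(o): a 32-bit integer mixer.
   This is an assumption for the demo, not the hash libconhash uses. */
static uint32_t toy_hash(uint32_t x)
{
    x ^= x >> 16;
    x *= 0x45d9f3bu;
    x ^= x >> 16;
    x *= 0x45d9f3bu;
    x ^= x >> 16;
    return x;
}

/* Count how many of the objects 0..count-1 land on a different machine
   when the pool changes from old_n to new_n machines (both must be > 0). */
static size_t count_moved(uint32_t count, uint32_t old_n, uint32_t new_n)
{
    size_t moved = 0;
    for (uint32_t o = 0; o < count; o++) {
        uint32_t h = toy_hash(o);
        if (h % old_n != h % new_n)
            moved++;
    }
    return moved;
}
```

With a uniform hash, going from 4 to 5 machines keeps an object in place only when hash(o) mod 4 equals hash(o) mod 5, which holds for 4 of every 20 consecutive hash values, so roughly 80% of the objects move.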

Consistent hashing guarantees that when a cache machine is removed, only the objects cached on it are rehashed; when a new cache machine is added, only a small fraction of the objects are rehashed.

Now we will go into consistent hashing step by step.

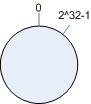

Hash space

Commonly, a hash function maps a value to a 32-bit key in the range 0 to 2^32-1. Now imagine bending this range into a circle so that the keys wrap around: 2^32-1 is followed by 0, as illustrated in figure 1.

Figure 1

Map object into hash space

Now consider four objects: object1~object4. We use a hash function to get their key values and map them into the circle, as illustrated in figure 2.

Figure 2

hash(object1) = key1;

.....

hash(object4) = key4;

Map the cache into hash space

The basic idea of consistent hashing is to map the cache and objects into the same hash space using the same hash function.

Now suppose we have three caches, A, B and C. The mapping result will look like figure 3.

hash(cache A) = key A;

....

hash(cache C) = key C;

Figure 3

Map objects into cache

Now all the caches and objects are hashed into the same space, so we can determine how to map objects into caches. Take an object obj, for example: start from where obj is and head clockwise around the ring until you find a server. If that server is down, go on to the next one, and so forth. See figure 3 above.

According to the method, object1 will be cached into cache A; object2 and object3 will be cached into cache C, and object4 will be cached into cache B.
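The clockwise walk can be sketched as a search for the first cache key that is greater than or equal to the object's key, wrapping to the smallest key when none is found. The sketch below uses a sorted array and binary search purely for brevity; libconhash itself keeps its nodes in a red-black tree. `struct ring_point` and `ring_lookup` are hypothetical names for this illustration.

```c
#include <stdint.h>
#include <stddef.h>

/* One cache point on the ring: its hash key and a label.
   Hypothetical type for this sketch, not a libconhash structure. */
struct ring_point {
    uint32_t key;
    const char *cache;
};

/* Return the first point whose key is >= obj_key (the next point
   clockwise), wrapping around to points[0] when obj_key lies past the
   last point. points must be sorted by ascending key, and n must be > 0. */
static const struct ring_point *
ring_lookup(const struct ring_point *points, size_t n, uint32_t obj_key)
{
    size_t lo = 0, hi = n;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (points[mid].key < obj_key)
            lo = mid + 1;
        else
            hi = mid;
    }
    return &points[lo == n ? 0 : lo];
}
```

An ordered structure matters here because the lookup is a successor query rather than an exact match, which a plain hash table cannot answer efficiently.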

Add or remove cache

Now consider two scenarios: a cache goes down and is removed, and a new cache is added.

If cache B is removed, then only the objects that were cached in B will be rehashed and moved to C; in the example, this is object4, as illustrated in figure 4.

Figure 4

If a new cache D is added, and D is hashed between object2 and object3 on the ring, then only the objects that lie between B and D will be rehashed; in the example, this is object2, as illustrated in figure 5.

Figure 5
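Both scenarios can be checked with a small sketch. It assumes made-up ring positions that follow the order described in the text (object1, A, object4, B, object2, D, object3, C); `ring_owner` and `count_reassigned` are hypothetical helpers, and a linear scan replaces the tree lookup to keep the example short.

```c
#include <stdint.h>
#include <stddef.h>

/* Return the index of the cache that owns obj_key: the cache with the
   smallest clockwise distance from obj_key. Returns -1 if n == 0. */
static int ring_owner(const uint32_t *cache_keys, size_t n, uint32_t obj_key)
{
    int best = -1;
    uint32_t best_dist = 0;
    for (size_t i = 0; i < n; i++) {
        /* Stored back into a uint32_t, the difference wraps modulo 2^32,
           which is exactly the clockwise distance on the ring. */
        uint32_t dist = cache_keys[i] - obj_key;
        if (best < 0 || dist < best_dist) {
            best = (int)i;
            best_dist = dist;
        }
    }
    return best;
}

/* Count the objects whose owning cache differs between two cache sets.
   Caches are identified by their ring key; nb and na must be > 0. */
static size_t count_reassigned(const uint32_t *before, size_t nb,
                               const uint32_t *after, size_t na,
                               const uint32_t *objs, size_t no)
{
    size_t moved = 0;
    for (size_t i = 0; i < no; i++) {
        uint32_t kb = before[ring_owner(before, nb, objs[i])];
        uint32_t ka = after[ring_owner(after, na, objs[i])];
        if (kb != ka)
            moved++;
    }
    return moved;
}
```

With caches at keys {200, 400, 800} for A, B and C and objects at {100, 300, 500, 700} for object1, object4, object2 and object3, removing B (400) or adding D at 600 reassigns exactly one object in each case.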

Virtual nodes

It is possible to have a very non-uniform distribution of objects between caches if you don't deploy enough caches. The solution is to introduce the idea of "virtual nodes".

Virtual nodes are replicas of cache points on the circle: each real cache corresponds to several virtual nodes. Whenever we add a cache, we actually create a number of virtual nodes on the circle for it, and when a cache is removed, we remove all of its virtual nodes from the circle.

Consider the above example. There are two caches, A and C, in the system. If we introduce virtual nodes with a replica count of 2, there will be 4 virtual nodes. Cache A1 and cache A2 represent cache A; cache C1 and cache C2 represent cache C, as illustrated in figure 6.

Figure 6

Then, the map from object to the virtual node will be:

object1->cache A2; object2->cache A1; object3->cache C1; object4->cache C2

When you get the virtual node, you get the cache, as in the above figure.

So object1 and object2 are cached into cache A, and object3 and object4 are cached into cache C. The result is more balanced now.
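One way to place virtual nodes is to hash a distinct label for each replica of a cache. The sketch below assumes labels of the form "iden-0", "iden-1", and so on, and uses FNV-1a as a compact stand-in hash; both choices are illustrative assumptions and are not taken from the libconhash sources. `fnv1a` and `make_vnodes` are hypothetical names.

```c
#include <stdint.h>
#include <stddef.h>
#include <stdio.h>

/* 32-bit FNV-1a, used here only as a short stand-in hash function. */
static uint32_t fnv1a(const char *s)
{
    uint32_t h = 2166136261u;
    while (*s) {
        h ^= (unsigned char)*s++;
        h *= 16777619u;
    }
    return h;
}

/* Write `replicas` ring keys for cache `iden` into out[] by hashing the
   assumed labels "iden-0", "iden-1", ... out must have room for
   `replicas` entries. Returns the number of keys written. */
static size_t make_vnodes(const char *iden, unsigned replicas, uint32_t *out)
{
    char label[128];
    for (unsigned i = 0; i < replicas; i++) {
        snprintf(label, sizeof label, "%s-%u", iden, i);
        out[i] = fnv1a(label);
    }
    return replicas;
}
```

Every key produced this way maps back to the same real cache, so a lookup that lands on any of the virtual nodes resolves to that cache. Removing the cache means removing all of its keys from the ring.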

So now you know what consistent hashing is.

Using the code

Interfaces of libconhash

    CONHASH_API struct conhash_s* conhash_init(conhash_cb_hashfunc pfhash);
    CONHASH_API void conhash_fini(struct conhash_s *conhash);
    CONHASH_API void conhash_set_node(struct node_s *node,
                                      const char *iden, u_int replica);
    CONHASH_API int conhash_add_node(struct conhash_s *conhash,
                                     struct node_s *node);
    CONHASH_API int conhash_del_node(struct conhash_s *conhash,
                                     struct node_s *node);
    ...
    CONHASH_API const struct node_s*
        conhash_lookup(const struct conhash_s *conhash,
                       const char *object);

Libconhash is very easy to use. There is a sample in the project that shows how to use the library.

First, create a conhash instance. Then you can add nodes to or remove nodes from the instance, and look up objects.

The function for updating a node's replica count is not implemented yet.

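    /* g_nodes is the node array declared in the project's sample. */
    const char *str = "James.km";   /* the object to look up */
    const struct node_s *node;

    /* NULL: no user-defined hash function is supplied. */
    struct conhash_s *conhash = conhash_init(NULL);
    if(conhash)
    {
        /* Name the node "titanic", give it 32 replicas, and add it. */
        conhash_set_node(&g_nodes[0], "titanic", 32);
        conhash_add_node(conhash, &g_nodes[0]);

        printf("virtual nodes number %u\n", (unsigned)conhash_get_vnodes_num(conhash));
        printf("the hashing results--------------------------------------:\n");

        node = conhash_lookup(conhash, str);
        if(node) printf("[%16s] is in node: [%16s]\n", str, node->iden);

        /* Release the instance when done. */
        conhash_fini(conhash);
    }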

Reference