
1 Introduction. Why abstract nonsense?

An engineer once said to me, “I do not need abstract classes since I am solving specific problems only.” He uses FORTRAN for calculations. Advanced engineers understand the usefulness of abstraction. Abstract nonsense in mathematics is the next generation of abstraction.

In mathematics, abstract nonsense, general abstract nonsense, and general nonsense are terms used facetiously by some mathematicians to describe certain kinds of arguments and methods related to category theory. (Very) roughly speaking, category theory is the study of the general form of mathematical theories, without regard to their content. As a result, a proof that relies on category-theoretic ideas often seems slightly out of context to those who are not used to such abstraction, sometimes to the extent that it resembles a comical non sequitur. Such proofs are sometimes dubbed “abstract nonsense” as a light-hearted way of alerting people to their abstract nature.

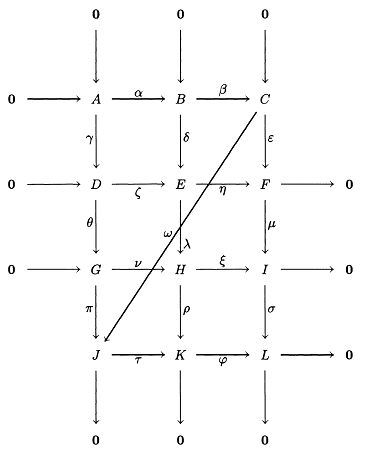

Category theory deals in an abstract way with mathematical structures and the relationships between them: it abstracts from sets and functions to objects linked in diagrams by morphisms, or arrows. The high level of abstraction of category theory is explained in Goldblatt, Topoi: The Categorial Analysis of Logic. This book contains the following figure:

“We did not actually say what a and f are. The point is that they can be anything you like. a might be a set with f its identity function. But f might be a number, or a pair of numbers, or a banana, or the Eiffel tower, or even Richard Nixon. Likewise for a.”

The author of this article was very impressed by mathematical abstract nonsense and even developed category theory software. The author then introduced an “abstract nonsense” paradigm in his other software. This paradigm means that every domain contains objects and arrows only. However, objects (resp. arrows) can have different types. The following picture represents a diagram with objects (resp. arrows) of different types.

Moreover, an object can be multi-typed, i.e. belong to several types. This phenomenon is known as multiple inheritance, which has also been well known in mathematics for a long time. For example, the real line, or real number line, is the line whose points are the real numbers. That is, the real line is the set R of all real numbers, viewed as a geometric space, namely the Euclidean space of dimension one. It can be thought of as a vector space (or affine space), a metric space, a topological space, a measure space, or a linear continuum.

The author finds that abstract nonsense is very effective. This article is the first in a series devoted to abstract nonsense.

2 Background

Applications of objects and arrows are well known. For example, the following software uses objects and arrows:

However, these software products rather reflect the status quo of the pre-computer era. In 1974 the author studied control theory and used diagrams like the following:

Simulink uses similar diagrams. In meaning, these diagrams are very similar to data flow diagrams. In 1974 the author also studied analog computers and found that the block diagrams above correspond to an analog computer rather than a digital one. The abstract nonsense language is an extension of the data flow diagram language: objects of very different types are used in a single diagram.

The author thinks that abstract nonsense will not be accepted at once, since a lot of engineers are familiar with the data flow diagrams above. No innovation is accepted at once, and category theory in mathematics was no exception. Adoption of an innovation takes a long time. In 1977 the author used FORTRAN for calculations, and a lot of researchers still use FORTRAN today. Nevertheless, newer object-oriented languages have become more popular than FORTRAN. This article shows the advantages of abstract nonsense in software development.

3 First example. Plane with rotodome. Outlook

Here we consider the motion of a plane with a rotodome. The absolute motion of the rotodome is a superposition of the motion of the plane and the relative motion of the rotodome with respect to the plane. The following picture represents a simulation of this phenomenon.

This picture contains two types of arrows. An arrow of the first type is an information link. It links a provider of information with its consumer. An arrow of the second type is a reference frame binding.

Objects Linear and Rotation are providers of information. They calculate motion parameters. Both Plane and Rotodome are simultaneously consumers of information and reference frames. The M link means that the motion of Rotodome is a relative motion with respect to Airplane. Arrow D1 (resp. D2) means that Airplane (resp. Rotodome) is a data consumer of Linear (resp. Rotation). So this example contains different types of arrows and multiple inheritance.

4 Basic interfaces

This section contains the basic interfaces which are used in any application of abstract nonsense. Any abstract nonsense object implements the ICategoryObject interface:

public interface ICategoryObject : IAssociatedObject

{

ICategory Category

{

get;

}

ICategoryArrow Id

{

get;

}

}

Any abstract nonsense arrow implements the ICategoryArrow interface:

public interface ICategoryArrow : IAssociatedObject

{

ICategoryObject Source

{

get;

set;

}

ICategoryObject Target

{

get;

set;

}

bool IsMonomorphism

{

get;

}

bool IsEpimorphism

{

get;

}

bool IsIsomorphism

{

get;

}

ICategoryArrow Compose(ICategory category, ICategoryArrow next);

}
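As a rough illustration of how objects, arrows, identity, and composition fit together, here is a minimal self-contained sketch. The `SketchObject` and `SketchArrow` types are hypothetical simplifications introduced only for this example. They are not the framework's `ICategoryObject` and `ICategoryArrow`, which also depend on `ICategory` and `IAssociatedObject` (not shown in this article). The sketch assumes that composition is defined only when the target of the first arrow is the source of the next one.

```csharp
using System;

// Hypothetical, simplified stand-in for ICategoryObject.
public sealed class SketchObject
{
    public string Name { get; }

    public SketchObject(string name)
    {
        Name = name;
    }
}

// Hypothetical, simplified stand-in for ICategoryArrow.
public sealed class SketchArrow
{
    public SketchObject Source { get; }
    public SketchObject Target { get; }
    public string Label { get; }

    public SketchArrow(SketchObject source, SketchObject target, string label)
    {
        Source = source;
        Target = target;
        Label = label;
    }

    // Identity arrow of an object (the analogue of ICategoryObject.Id).
    public static SketchArrow Identity(SketchObject o)
    {
        return new SketchArrow(o, o, "id_" + o.Name);
    }

    // Composition "this, then next"; allowed only when the ends match.
    public SketchArrow Compose(SketchArrow next)
    {
        if (!ReferenceEquals(Target, next.Source))
        {
            throw new InvalidOperationException("Arrows are not composable");
        }
        return new SketchArrow(Source, next.Target, Label + ";" + next.Label);
    }
}
```

For arrows `f` from `a` to `b` and `g` from `b` to `c`, `f.Compose(g)` yields an arrow from `a` to `c`, while `g.Compose(f)` throws because the ends do not match.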

5 Information flow

An information flow domain contains the following basic objects:

- Provider of data (IMeasurements interface)
- Consumer of data (IDataConsumer interface)
- Elementary unit of data exchange (IMeasure interface)
- Link of data (DataLink class, which implements the ICategoryArrow interface)

The information link icon corresponds to a DataLink arrow. The Source (resp. Target) of a DataLink is always an IDataConsumer (resp. IMeasurements) object: the link points from the consumer to the provider. The following code represents these objects:

public interface IMeasurements

{

int Count

{

get;

}

IMeasure this[int number]

{

get;

}

void UpdateMeasurements();

bool IsUpdated

{

get;

set;

}

}

public interface IDataConsumer

{

void Add(IMeasurements measurements);

void Remove(IMeasurements measurements);

void UpdateChildrenData();

int Count

{

get;

}

IMeasurements this[int number]

{

get;

}

void Reset();

event Action OnChangeInput;

}

public interface IMeasure

{

Func<object> Parameter

{

get;

}

string Name

{

get;

}

object Type

{

get;

}

}

[Serializable()]

public class DataLink : ICategoryArrow, ISerializable,

IRemovableObject, IDataLinkFactory

{

#region Fields

public static readonly string SetProviderBefore =

"You should create measurements source before consumer";

private static Action<DataLink> checker;

private IDataConsumer source;

private IMeasurements target;

private int a = 0;

protected object obj;

private static IDataLinkFactory dataLinkFactory = new DataLink();

#endregion

#region Ctor

public DataLink()

{

}

public DataLink(SerializationInfo info, StreamingContext context)

{

a = (int)info.GetValue("A", typeof(int));

}

#endregion

#region ISerializable Members

public void GetObjectData(SerializationInfo info, StreamingContext context)

{

info.AddValue("A", a);

}

#endregion

#region ICategoryArrow Members

public ICategoryObject Source

{

set

{

if (source != null)

{

throw new Exception();

}

IDataLinkFactory f = this;

source = f.GetConsumer(value);

}

get

{

return source as ICategoryObject;

}

}

public ICategoryObject Target

{

get

{

return target as ICategoryObject;

}

set

{

if (target != null)

{

throw new Exception();

}

IDataLinkFactory f = this;

IMeasurements t = f.GetMeasurements(value);

bool check = true;

IAssociatedObject s = source as IAssociatedObject;

if (s.Object != null && value.Object != null)

{

if (check)

{

INamedComponent ns = s.Object as INamedComponent;

INamedComponent nt = value.Object as INamedComponent;

if (nt != null && ns != null)

{

if (PureDesktopPeer.GetDifference(nt, ns) >= 0)

{

throw new Exception(SetProviderBefore);

}

}

}

target = t;

source.Add(target);

}

if (!check)

{

return;

}

try

{

if (checker != null)

{

checker(this);

}

}

catch (Exception e)

{

e.ShowError(10);

source.Remove(target);

throw; // rethrow without resetting the stack trace

}

}

}

public bool IsMonomorphism

{

get

{

return false;

}

}

public bool IsEpimorphism

{

get

{

return false;

}

}

public bool IsIsomorphism

{

get

{

return false;

}

}

public ICategoryArrow Compose(ICategory category, ICategoryArrow next)

{

return null;

}

#endregion

#region IAssociatedObject Members

public object Object

{

get

{

return obj;

}

set

{

obj = value;

}

}

#endregion

#region IRemovableObject Members

public void RemoveObject()

{

if (source == null || target == null)

{

return;

}

source.Remove(target);

}

#endregion

#region IDataLinkFactory Members

IDataConsumer IDataLinkFactory.GetConsumer(ICategoryObject source)

{

IAssociatedObject ao = source;

object o = ao.Object;

if (o is INamedComponent)

{

IDataConsumer dcl = null;

INamedComponent comp = o as INamedComponent;

IDesktop desktop = comp.Root.Desktop;

desktop.ForEach<DataLink>((DataLink dl) =>

{

if (dcl != null)

{

return;

}

object dt = dl.Source;

if (dt is IAssociatedObject)

{

IAssociatedObject aot = dt as IAssociatedObject;

if (aot.Object == o)

{

dcl = dl.source as IDataConsumer;

}

}

});

if (dcl != null)

{

return dcl;

}

}

IDataConsumer dc = DataConsumerWrapper.Create(source);

if (dc == null)

{

CategoryException.ThrowIllegalTargetException();

}

return dc;

}

IMeasurements IDataLinkFactory.GetMeasurements(ICategoryObject target)

{

IAssociatedObject ao = target;

object o = ao.Object;

if (o is INamedComponent)

{

IMeasurements ml = null;

INamedComponent comp = o as INamedComponent;

IDesktop d = null;

INamedComponent r = comp.Root;

if (r != null)

{

d = r.Desktop;

}

else

{

d = comp.Desktop;

}

if (d != null)

{

d.ForEach<DataLink>((DataLink dl) =>

{

if (ml != null)

{

return;

}

object dt = dl.Target;

if (dt is IAssociatedObject)

{

IAssociatedObject aot = dt as IAssociatedObject;

if (aot.Object == o)

{

ml = dl.Target as IMeasurements;

}

}

});

if (ml != null)

{

return ml;

}

}

}

IMeasurements m = MeasurementsWrapper.Create(target);

if (m == null)

{

CategoryException.ThrowIllegalTargetException();

}

return m;

}

#endregion

#region Public Members

public static Action<DataLink> Checker

{

set

{

checker = value;

}

}

public static IDataLinkFactory DataLinkFactory

{

get

{

return dataLinkFactory;

}

set

{

dataLinkFactory = value;

}

}

public IMeasurements Measurements

{

get

{

return target;

}

}

#endregion

}
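The division of roles in this section can be sketched in a few lines. The types below (`SketchMeasure`, `SketchProvider`, `SketchConsumer`) are hypothetical, heavily simplified stand-ins for `IMeasure`, `IMeasurements`, and `IDataConsumer`. They only assume that a provider exposes named values as `Func<object>` delegates and that a consumer pulls those values after the provider has been updated.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for IMeasure: a named value read through a delegate.
public sealed class SketchMeasure
{
    public string Name { get; }
    public Func<object> Parameter { get; }

    public SketchMeasure(string name, Func<object> parameter)
    {
        Name = name;
        Parameter = parameter;
    }
}

// Hypothetical stand-in for IMeasurements: a provider with indexed measures.
public sealed class SketchProvider
{
    private readonly SketchMeasure[] measures;
    private double time;

    public SketchProvider()
    {
        measures = new[]
        {
            new SketchMeasure("Time", () => (object)time),
            new SketchMeasure("SquareOfTime", () => (object)(time * time))
        };
    }

    public int Count { get { return measures.Length; } }

    public SketchMeasure this[int number] { get { return measures[number]; } }

    // Plays the role of UpdateMeasurements(): refreshes the internal state.
    public void Update(double newTime) { time = newTime; }
}

// Hypothetical stand-in for IDataConsumer: holds providers and reads them.
public sealed class SketchConsumer
{
    private readonly List<SketchProvider> providers = new List<SketchProvider>();

    public void Add(SketchProvider provider) { providers.Add(provider); }

    public void Remove(SketchProvider provider) { providers.Remove(provider); }

    // Collects the current value of every measure of every linked provider.
    public Dictionary<string, object> ReadAll()
    {
        var values = new Dictionary<string, object>();
        foreach (SketchProvider provider in providers)
        {
            for (int i = 0; i < provider.Count; i++)
            {
                values[provider[i].Name] = provider[i].Parameter();
            }
        }
        return values;
    }
}
```

Because each measure is a delegate rather than a stored value, the consumer always reads the provider's current state. This is presumably why `IMeasure.Parameter` is a `Func<object>` in the real interfaces.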

6 6D Kinematics

A kinematics domain contains the following basic types:

- 3D position (IPosition interface);
- 3D orientation (IOrientation interface);
- Standard 3D position (Position class, which implements the IPosition interface);
- 3D reference frame (ReferenceFrame class, which implements both IPosition and IOrientation);
- Holder of a 3D reference frame (IReferenceFrame interface);
- Reference frame binding (ReferenceFrameArrow class, which implements the ICategoryArrow interface).

The source (resp. target) of a ReferenceFrameArrow is always an IPosition (resp. IReferenceFrame). This arrow means that the coordinates of the IPosition are relative with respect to the IReferenceFrame. The following code represents these types:

public interface IPosition

{

double[] Position

{

get;

}

IReferenceFrame Parent

{

get;

set;

}

object Parameters

{

get;

set;

}

void Update();

}

public interface IOrientation

{

double[] Quaternion

{

get;

}

double[,] Matrix

{

get;

}

}

public class Position : IPosition, IChildrenObject

{

#region Fields

protected IReferenceFrame parent;

protected double[] own = new double[] { 0, 0, 0 };

protected double[] position = new double[3];

protected object parameters;

protected IAssociatedObject[] ch = new IAssociatedObject[1];

#endregion

#region Ctor

protected Position()

{

}

public Position(double[] position)

{

for (int i = 0; i < own.Length; i++)

{

own[i] = position[i];

}

}

#endregion

#region IPosition Members

double[] IPosition.Position

{

get { return position; }

}

public virtual IReferenceFrame Parent

{

get

{

return parent;

}

set

{

parent = value;

}

}

public virtual object Parameters

{

get

{

return parameters;

}

set

{

parameters = value;

if (value is IAssociatedObject)

{

IAssociatedObject ao = value as IAssociatedObject;

ch[0] = ao;

}

}

}

public virtual void Update()

{

Update(BaseFrame);

}

#endregion

#region Specific Members

protected virtual void Update(ReferenceFrame frame)

{

double[,] m = frame.Matrix;

double[] p = frame.Position;

for (int i = 0; i < p.Length; i++)

{

position[i] = p[i];

for (int j = 0; j < own.Length; j++)

{

position[i] += m[i, j] * own[j];

}

}

}

protected virtual ReferenceFrame BaseFrame

{

get

{

if (parent == null)

{

return Motion6D.Motion6DFrame.Base;

}

return parent.Own;

}

}

#endregion

#region IChildrenObject Members

IAssociatedObject[] IChildrenObject.Children

{

get { return ch; }

}

#endregion

}

public class ReferenceFrame : IPosition, IOrientation

{

#region Fields

protected double[] quaternion = new double[] { 1, 0, 0, 0 };

protected double[] position = new double[] { 0, 0, 0 };

protected double[,] matrix = new double[,] { { 1, 0, 0 }, { 0, 1, 0 }, { 0, 0, 1 } };

protected double[,] qq = new double[4, 4];

protected double[] p = new double[3];

protected IReferenceFrame parent;

protected object parameters;

private double[] auxPos = new double[3];

#endregion

#region Ctor

public ReferenceFrame()

{

}

private ReferenceFrame(bool b)

{

}

#endregion

#region IPosition Members

public double[] Position

{

get { return position; }

}

public virtual IReferenceFrame Parent

{

get

{

return parent;

}

set

{

parent = value;

}

}

public virtual object Parameters

{

get

{

return parameters;

}

set

{

parameters = value;

}

}

static public ReferenceFrame GetFrame(IPosition position)

{

if (position is IReferenceFrame)

{

IReferenceFrame f = position as IReferenceFrame;

return f.Own;

}

return GetParentFrame(position);

}

static public ReferenceFrame GetParentFrame(IPosition position)

{

if (position.Parent == null)

{

return Motion6DFrame.Base;

}

return position.Parent.Own;

}

static public void GetRelativeFrame(ReferenceFrame baseFrame,

ReferenceFrame targetFrame, ReferenceFrame relative)

{

double[] bp = baseFrame.Position;

double[] tp = targetFrame.Position;

double[,] bm = baseFrame.Matrix;

double[] rp = relative.Position;

for (int i = 0; i < 3; i++)

{

rp[i] = 0;

for (int j = 0; j < 3; j++)

{

rp[i] += bm[j, i] * (tp[j] - bp[j]); // sum over j, as in GetRelativePosition

}

}

double[,] tm = targetFrame.Matrix;

double[,] rm = relative.Matrix;

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

rm[i, j] = 0;

for (int k = 0; k < 3; k++)

{

rm[i, j] += bm[k, i] * tm[k, j];

}

}

}

}

static public ReferenceFrame GetOwnFrame(IPosition position)

{

if (position is IReferenceFrame)

{

IReferenceFrame f = position as IReferenceFrame;

return f.Own;

}

return GetParentFrame(position);

}

public virtual void Update()

{

ReferenceFrame p = ParentFrame;

position = p.Position;

quaternion = p.quaternion;

matrix = p.matrix;

}

#endregion

#region IOrientation Members

public double[] Quaternion

{

get { return quaternion; }

}

public double[,] Matrix

{

get { return matrix; }

}

#endregion

#region Specific Members

public void GetRelativePosition(double[] inPosition, double[] outPosition)

{

for (int i = 0; i < 3; i++)

{

auxPos[i] = inPosition[i] - position[i];

}

for (int i = 0; i < 3; i++)

{

outPosition[i] = 0;

for (int j = 0; j < 3; j++)

{

outPosition[i] += matrix[j, i] * auxPos[j];

}

}

}

static public void GetRelative(ReferenceFrame baseFrame, ReferenceFrame relativeFrame,

ReferenceFrame result, double[] diff)

{

V3DOperations.QuaternionInvertMultiply(relativeFrame.quaternion,

baseFrame.quaternion, result.quaternion);

result.SetMatrix();

for (int i = 0; i < 3; i++)

{

diff[i] = relativeFrame.position[i] - baseFrame.position[i];

}

double[,] m = baseFrame.Matrix;

double[] p = result.position;

for (int i = 0; i < 3; i++)

{

p[i] = 0;

for (int j = 0; j < 3; j++)

{

p[i] += m[j, i] * diff[j];

}

}

}

static public void GetRelative(ReferenceFrame baseFrame, ReferenceFrame relativeFrame,

ReferenceFrame result, double[] diff, double[,] matrix4)

{

GetRelative(baseFrame, relativeFrame, result, diff);

double[,] m = result.Matrix;

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

matrix4[i, j] = m[i, j];

}

}

double[] p = result.position;

for (int i = 0; i < 3; i++)

{

matrix4[3, i] = p[i];

matrix4[i, 3] = 0;

}

matrix4[3, 3] = 1;

}

static public void GetRelative(ReferenceFrame baseFrame, ReferenceFrame relativeFrame,

ReferenceFrame result, double[] diff, double[,] matrix4, double[] array16)

{

GetRelative(baseFrame, relativeFrame, result, diff, matrix4);

for (int i = 0; i < 4; i++)

{

int k = 4 * i;

for (int j = 0; j < 4; j++)

{

array16[k + j] = matrix4[i, j];

}

}

}

static public double[,] CalucateViewMatrix(double[] position, double rotation)

{

double[] r = position;

double ap = 0;

ap = r[0] * r[0] + r[2] * r[2];

double a = ap + r[1] * r[1];

ap = Math.Sqrt(ap);

a = Math.Sqrt(a);

double[] ez = { r[0] / a, r[1] / a, r[2] / a };

double[] ex1 = null;

if (ap < 0.00000000001)

{

ex1 = new double[] { 1.0, 0.0, 0.0 };

}

else

{

ex1 = new double[] { -r[2] / ap, 0, r[0] / ap };

}

double[] ey1 = {ez[1] * ex1[2] - ez[2] * ex1[1],

ez[2] * ex1[0] - ez[0] * ex1[2],

ez[0] * ex1[1] - ez[1] * ex1[0]};

double[] ey = new double[3];

double[] ex = new double[3];

double alpha = rotation;

alpha *= Math.PI / 180.0;

alpha += Math.PI;

double s = 0;

double c = 1;

double sD2 = Math.Sin(alpha / 2);

double cD2 = Math.Cos(alpha / 2);

double[] rc = { r[0], r[1] };

r[0] = rc[0] * c - rc[1] * s;

r[1] = rc[0] * s + rc[1] * c;

ex = ex1;

ey = ey1;

double[][] m = { ex, ey, ez };

double[] rr = { r[1], r[2], r[0] };

double[,] mat = new double[4, 4];

for (int i = 0; i < 3; i++)

{

mat[3, i] = r[i];

for (int j = 0; j < 3; j++)

{

mat[j, i] = m[j][i];

}

}

double[][] temp = new double[3][];

temp[0] = new double[] { c, s, 0 };

temp[1] = new double[] { -s, c, 0 };

temp[2] = new double[] { 0, 0, 1 };

double[,] mh = new double[3, 3];

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

mh[i, j] = 0;

for (int k = 0; k < 3; k++)

{

for (int l = 0; l < 3; l++)

{

mh[i, j] += mat[i, k] * temp[k][l] * mat[j, l];

}

}

}

}

double[,] mh1 = new double[3, 3];

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

mh1[i, j] = mat[i, j];

}

}

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

mat[i, j] = 0;

for (int k = 0; k < 3; k++)

{

mat[i, j] += mh[i, k] * mh1[k, j];

}

}

}

for (int i = 0; i < 3; i++)

{

rr[i] = 0;

for (int j = 0; j < 3; j++)

{

rr[i] += mat[i, j] * mat[3, j];

}

}

double[,] matr = new double[3, 3];

double[] rp = new double[3];

for (int i = 0; i < 3; i++)

{

rp[i] = mat[3, i];

for (int j = 0; j < 3; j++)

{

matr[i, j] = mat[j, i];

}

}

double[,] qq = new double[4, 4];

double[] e = Vector3D.V3DOperations.VectorNorm(rp);

double[] q = new double[] { cD2, e[0] * sD2, e[1] * sD2, e[2] * sD2 };

double[,] mq = new double[3, 3];

Vector3D.V3DOperations.QuaternionToMatrix(q, mq, qq);

double[,] mr = new double[3, 3];

for (int i = 0; i < 3; i++)

{

for (int j = 0; j < 3; j++)

{

mr[i, j] = 0;

for (int k = 0; k < 3; k++)

{

mr[i, j] += mq[i, k] * matr[k, j];

}

}

}

return mr;

}

public void CalculateRotatedPosition(double[] abs, double[] rot)

{

for (int i = 0; i < 3; i++)

{

rot[i] = 0;

for (int j = 0; j < 3; j++)

{

rot[i] += matrix[j, i] * abs[j];

}

}

}

public virtual void Set(ReferenceFrame baseFrame, ReferenceFrame relative)

{

for (int i = 0; i < 3; i++)

{

position[i] = baseFrame.position[i];

for (int j = 0; j < 3; j++)

{

position[i] += baseFrame.matrix[i, j] * relative.position[j];

}

}

V3DOperations.QuaternionMultiply(baseFrame.quaternion, relative.quaternion, quaternion);

Norm();

SetMatrix();

}

public void SetMatrix()

{

V3DOperations.QuaternionToMatrix(quaternion, matrix, qq);

}

public void Norm()

{

double a = 0;

foreach (double x in quaternion)

{

a += x * x;

}

a = 1 / Math.Sqrt(a);

for (int i = 0; i < quaternion.Length; i++) // all four components

{

quaternion[i] *= a;

}

}

public void GetPositon(IPosition position, double[] coordinates)

{

double[] p1 = this.position;

double[] p2 = position.Position;

for (int i = 0; i < 3; i++)

{

p[i] = p2[i] - p1[i];

}

for (int i = 0; i < 3; i++)

{

coordinates[i] = 0;

for (int j = 0; j < 3; j++)

{

coordinates[i] += matrix[i, j] * p[j];

}

}

}

protected virtual ReferenceFrame ParentFrame

{

get

{

if (parent == null)

{

return Motion6D.Motion6DFrame.Base;

}

return parent.Own;

}

}

#endregion

}

public interface IReferenceFrame : IPosition

{

ReferenceFrame Own

{

get;

}

List<IPosition> Children

{

get;

}

}

[Serializable()]

public class ReferenceFrameArrow : CategoryArrow, ISerializable, IRemovableObject

{

#region Fields

IPosition source;

IReferenceFrame target;

#endregion

#region Constructors

public ReferenceFrameArrow()

{

}

protected ReferenceFrameArrow(SerializationInfo info, StreamingContext context)

{

}

#endregion

#region ISerializable Members

void ISerializable.GetObjectData(SerializationInfo info, StreamingContext context)

{

}

#endregion

#region ICategoryArrow Members

public override ICategoryObject Source

{

get

{

return source as ICategoryObject;

}

set

{

IPosition position = value.GetSource<IPosition>();

if (position.Parent != null)

{

throw new CategoryException("Root", this);

}

source = position;

}

}

public override ICategoryObject Target

{

get

{

return target as ICategoryObject;

}

set

{

IReferenceFrame rf = value.GetTarget<IReferenceFrame>();

IAssociatedObject sa = source as IAssociatedObject;

IAssociatedObject ta = value as IAssociatedObject;

INamedComponent ns = sa.Object as INamedComponent;

INamedComponent nt = ta.Object as INamedComponent;

target = rf;

source.Parent = target;

target.Children.Add(source);

}

}

#endregion

#region IRemovableObject Members

void IRemovableObject.RemoveObject()

{

source.Parent = null;

if (target != null)

{

target.Children.Remove(source);

}

}

#endregion

#region Specific Members

static public List<IPosition> Prepare(IComponentCollection collection)

{

List<IPosition> frames = new List<IPosition>();

if (collection == null)

{

return frames;

}

IEnumerable<object> c = collection.AllComponents;

foreach (object o in c)

{

if (!(o is IObjectLabel))

{

continue;

}

IObjectLabel lab = o as IObjectLabel;

ICategoryObject co = lab.Object;

if (!(co is IReferenceFrame))

{

if (co is IPosition)

{

IPosition p = co as IPosition;

if (p.Parent == null)

{

frames.Add(p);

}

}

continue;

}

IReferenceFrame f = co as IReferenceFrame;

if (f.Parent != null)

{

continue;

}

prepare(f, frames);

}

return frames;

}

private static void prepare(IReferenceFrame frame, List<IPosition> frames)

{

List<IPosition> children = frame.Children;

frames.Add(frame);

foreach (IPosition p in children)

{

if (frames.Contains(p))

{

continue;

}

if (p is IReferenceFrame)

{

IReferenceFrame f = p as IReferenceFrame;

prepare(f, frames);

}

else

{

frames.Add(p);

}

}

}

#endregion

}

Let us consider the following picture:

The type of Point is Position, and Frame implements the IReferenceFrame interface. The Link arrow means that the motion of Point is relative with respect to Frame. The absolute coordinates of Point are calculated in the following way:

$$
\begin{aligned}
X_a &= X_F + A_{11} X_r + A_{12} Y_r + A_{13} Z_r,\\
Y_a &= Y_F + A_{21} X_r + A_{22} Y_r + A_{23} Z_r,\\
Z_a &= Z_F + A_{31} X_r + A_{32} Y_r + A_{33} Z_r,
\end{aligned}
$$

where

- $X_a, Y_a, Z_a$ are the absolute coordinates of Point;
- $X_F, Y_F, Z_F$ are the absolute coordinates of Frame;
- $X_r, Y_r, Z_r$ are the relative coordinates of Point;
- $A_{11}, \ldots, A_{33}$ are the elements of the 3D rotation matrix.
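The formula for the absolute coordinates can be checked with a small standalone sketch. The `FrameMath` class and its `ToAbsolute` method are hypothetical names introduced for this example. The loop mirrors the computation in `Position.Update(ReferenceFrame)` above, but works on plain arrays.

```csharp
using System;

public static class FrameMath
{
    // absolute = frameOrigin + A * relative, where A is the 3x3 rotation
    // matrix of the frame and all vectors have three components.
    public static double[] ToAbsolute(double[] frameOrigin, double[,] a, double[] relative)
    {
        var result = new double[3];
        for (int i = 0; i < 3; i++)
        {
            result[i] = frameOrigin[i];
            for (int j = 0; j < 3; j++)
            {
                result[i] += a[i, j] * relative[j];
            }
        }
        return result;
    }
}
```

For example, take a frame located at (10, 0, 0) and rotated by 90 degrees about the Z axis, so that A = {{0, -1, 0}, {1, 0, 0}, {0, 0, 1}}. A point with relative coordinates (1, 0, 0) then has absolute coordinates (10, 1, 0).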

7 Plane with rotodome in detail

Let us consider plane and rotodome once again.

Both Plane and Rotodome are objects of ReferenceFrameData type.

[Serializable()]

public class ReferenceFrameData : IReferenceFrame, IDataConsumer

Thus the ReferenceFrameData class is simultaneously a consumer of information and a reference frame, since it implements both IDataConsumer and IReferenceFrame. The Linear object calculates the linear motion parameters of Airplane:

X = at + b;

Y = 0;

Z = 0;

Q0 = 1;

Q1 = 0;

Q2 = 0;

Q3 = 0.

where a and b are constants. The properties of Linear are presented below.

The Linear object supplies the necessary formulae of linear motion. The properties of Airplane are presented below:

These properties reflect the relation between the motion parameters of Airplane and the output parameters of Linear:

| N | Motion parameter of Airplane | Parameter of Linear | Formula |
|---|---|---|---|
| 1 | X - coordinate | Formula_1 | at + b |
| 2 | Y - coordinate | Formula_2 | 0 |
| 3 | Z - coordinate | Formula_2 | 0 |
| 4 | Q0 - 0th component of orientation quaternion | Formula_3 | 1 |
| 5 | Q1 - 1st component of orientation quaternion | Formula_2 | 0 |
| 6 | Q2 - 2nd component of orientation quaternion | Formula_2 | 0 |
| 7 | Q3 - 3rd component of orientation quaternion | Formula_2 | 0 |

Rotodome rotates relative to Airplane. The formulae of the relative motion are presented below.

X = 0;

Y = 0;

Z = 0;

Q0 = cos(ct + d);

Q1=0;

Q2 = sin(ct + d);

Q3=0.

where c and d are constants. The above formulae describe uniform rotation around the Y axis.

The relation between Rotodome and Rotation is similar to the relation between Airplane and Linear.
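To see why the relative-motion quaternion above describes a rotation around the Y axis, it can be converted to a rotation matrix. The sketch below is an illustration under stated assumptions: scalar-first component order (Q0 is the scalar part, as the identity quaternion { 1, 0, 0, 0 } in `ReferenceFrame` suggests) and the standard Hamilton convention. The `QuaternionSketch` class is hypothetical, and the framework's own `QuaternionToMatrix` may use a different convention.

```csharp
using System;

public static class QuaternionSketch
{
    // Rotation matrix of a unit quaternion (w, x, y, z), Hamilton convention.
    public static double[,] ToMatrix(double[] q)
    {
        double w = q[0], x = q[1], y = q[2], z = q[3];
        return new double[,]
        {
            { 1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y) },
            { 2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x) },
            { 2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y) }
        };
    }

    // Relative quaternion of the rotodome at time t for constants c and d.
    public static double[] Rotodome(double t, double c, double d)
    {
        double phase = c * t + d;
        return new[] { Math.Cos(phase), 0.0, Math.Sin(phase), 0.0 };
    }
}
```

With phase = ct + d, the resulting matrix has cos(2·phase) at positions [0, 0] and [2, 2], sin(2·phase) at [0, 2], and 1 at [1, 1]. Under the assumed convention, the rotodome therefore turns about the Y axis through the angle 2(ct + d), which means its angular rate is 2c.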

8 Parameters of relative motion

Let us consider the following picture:

Both Point 1 and Point 2 are objects of the Position type. Relative is an object of the RelativeMeasurements type:

[Serializable()]

public class RelativeMeasurements : CategoryObject, ISerializable, IMeasurements, IPostSetArrow

{

private IPosition source;

private IPosition target;

private IOrientation oSource;

private IOrientation oTarget;

private Action UpdateAll;

...

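This class implements the IMeasurements interface, so this object is a provider of data. In the above case this class provides the Distance between Point 1 and Point 2, which is calculated in the following way:

$$
\text{Distance} = \sqrt{(X_1 - X_2)^2 + (Y_1 - Y_2)^2 + (Z_1 - Z_2)^2},
$$

where $X_i, Y_i, Z_i$ are the absolute coordinates of Point i.

The Chart object contains a chart of the distance.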

The RelativeMeasurements class contains following field:

private Action UpdateAll;

In the above case UpdateAll = UpdateCoinDistance, where UpdateCoinDistance calculates the relative distance:

void UpdateCoinDistance()

{

double[] y = source.Position;

double[] x = target.Position;

double a = 0;

for (int i = 0; i < 3; i++)

{

double z = y[i] - x[i];

relativePos[i] = z;

a += z * z;

}

distance = Math.Sqrt(a);

}

object GetDistance()

{

return distance;

}

...

IMeasure measure = new Measure(GetDistance, "Distance");

In this code, source (resp. target) is the source (resp. target) object, which implements the IPosition interface.

The following picture contains Point (Position type) and Frame (ReferenceFrame type). So Point implements the IPosition interface, and Frame implements both IPosition and IOrientation.

In this case the Relative object provides the following parameters.

| N | Name | Meaning |
|---|---|---|
| 1 | Xr | X - relative coordinate |
| 2 | Yr | Y - relative coordinate |
| 3 | Zr | Z - relative coordinate |
| 4 | Distance | Relative distance |

These parameters are defined in the following way:

$$
\begin{aligned}
X_r &= A_{11}(X_P - X_F) + A_{12}(Y_P - Y_F) + A_{13}(Z_P - Z_F),\\
Y_r &= A_{21}(X_P - X_F) + A_{22}(Y_P - Y_F) + A_{23}(Z_P - Z_F),\\
Z_r &= A_{31}(X_P - X_F) + A_{32}(Y_P - Y_F) + A_{33}(Z_P - Z_F),\\
\text{Distance} &= \sqrt{(X_P - X_F)^2 + (Y_P - Y_F)^2 + (Z_P - Z_F)^2},
\end{aligned}
$$

where

- $X_P, Y_P, Z_P$ are the absolute coordinates of Point;
- $X_F, Y_F, Z_F$ are the absolute coordinates of Frame;
- $A_{11}, \ldots, A_{33}$ are the elements of the 3D rotation matrix of Frame.

The following picture explains the meaning of the relative motion parameters:

In this case we have relative coordinates besides the distance. This requires an additional calculation: UpdateAll = UpdateCoinDistance + UpdateRelativeCoordinates. The following code contains an implementation of the additional calculation:

...

UpdateAll = UpdateCoinDistance;

if (source is IOrientation)

{

oSource = source as IOrientation;

UpdateAll += UpdateRelativeCoordinates;

}

...

void UpdateRelativeCoordinates()

{

double[] sourcePosition = source.Position;

double[] targetPosition = target.Position;

double[] aux = new double[3];

for (int i = 0; i < 3; i++)

{

aux[i] = targetPosition[i] - sourcePosition[i];

}

double[,] sourceOrientation = oSource.Matrix;

for (int i = 0; i < 3; i++)

{

relativePosition[i] = 0;

for (int j = 0; j < 3; j++)

{

relativePosition[i] += sourceOrientation[i, j] * aux[j];

}

}

}

object GetX()

{

return relativePosition[0];

}

object GetY()

{

return relativePosition[1];

}

object GetZ()

{

return relativePosition[2];

}

...

IMeasure[] relative = new IMeasure[3];

Func<object>[] coord = new Func<object>[] { GetX, GetY, GetZ };

string[] names = new string[] { "x", "y", "z" };

for (int i = 0; i < 3; i++)

{

relative[i] = new Measure(coord[i], names[i]);

}
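The `UpdateAll += ...` lines rely on C# multicast delegates: `+=` on an `Action` appends a handler, and invoking the combined delegate calls the handlers in the order in which they were added. A minimal sketch follows. The `UpdateChainSketch` class and the logged strings are hypothetical and only illustrate the ordering.

```csharp
using System;
using System.Collections.Generic;

public static class UpdateChainSketch
{
    // Builds an update chain the same way RelativeMeasurements does
    // and returns the names of the steps in the order they were executed.
    public static List<string> Run(bool sourceHasOrientation)
    {
        var log = new List<string>();

        // The distance is always calculated.
        Action updateAll = () => log.Add("distance");

        // Relative coordinates are added only when an orientation is known.
        if (sourceHasOrientation)
        {
            updateAll += () => log.Add("relative coordinates");
        }

        updateAll();
        return log;
    }
}
```

The benefit of this design is that the decision about which calculations are needed is taken once, when the arrows are set, rather than on every update step.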

The following picture contains two reference frames:

Both frames are objects of the ReferenceFrame type. Now the Relative object provides the following parameters.

| N | Name | Meaning |
|---|---|---|
| 1 | Xr | X - relative coordinate |
| 2 | Yr | Y - relative coordinate |
| 3 | Zr | Z - relative coordinate |
| 4 | Distance | Relative distance |
| 5 | Q0 | 0th component of relative orientation quaternion |
| 6 | Q1 | 1st component of relative orientation quaternion |
| 7 | Q2 | 2nd component of relative orientation quaternion |
| 8 | Q3 | 3rd component of relative orientation quaternion |

The following picture explains this phenomenon.

This article explains the application of quaternions to 6D motion kinematics. The relative orientation quaternion can be defined in the following way:

$$
Q_r = Q_2^{-1} Q_1,
$$

where

- $Q_1$ is the quaternion of Frame 1;
- $Q_2$ is the quaternion of Frame 2.

Both Frame 1 and Frame 2 implement both IPosition and IOrientation.
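The relative quaternion formula can be tried out with the following sketch. It assumes unit quaternions in scalar-first order and the Hamilton product, so that the inverse of a quaternion is simply its conjugate. The `RelativeQuaternionSketch` class is hypothetical. The argument order of the framework's `QuaternionInvertMultiply` is not documented here, so the sketch follows the formula rather than that method.

```csharp
using System;

public static class RelativeQuaternionSketch
{
    // Conjugate of (w, x, y, z); for a unit quaternion this is its inverse.
    public static double[] Conjugate(double[] q)
    {
        return new[] { q[0], -q[1], -q[2], -q[3] };
    }

    // Hamilton product p * q in scalar-first order.
    public static double[] Multiply(double[] p, double[] q)
    {
        return new[]
        {
            p[0] * q[0] - p[1] * q[1] - p[2] * q[2] - p[3] * q[3],
            p[0] * q[1] + p[1] * q[0] + p[2] * q[3] - p[3] * q[2],
            p[0] * q[2] - p[1] * q[3] + p[2] * q[0] + p[3] * q[1],
            p[0] * q[3] + p[1] * q[2] - p[2] * q[1] + p[3] * q[0]
        };
    }

    // Qr = Q2^-1 * Q1 for unit quaternions q1 and q2.
    public static double[] Relative(double[] q1, double[] q2)
    {
        return Multiply(Conjugate(q2), q1);
    }
}
```

Two sanity checks follow directly from the formula: if both frames have the same orientation, the relative quaternion is the identity (1, 0, 0, 0), and if Frame 2 has the identity orientation, the relative quaternion equals the quaternion of Frame 1.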

In this case we have new parameters. This requires an additional calculation: UpdateAll = UpdateCoinDistance + UpdateRelativeCoordinates + UpdateRelativeQuaternion.

The following code contains an implementation of the additional calculation:

...

UpdateAll = UpdateCoinDistance;

if (source is IOrientation)

{

oSource = source as IOrientation;

UpdateAll += UpdateRelativeCoordinates;

}

if (target is IOrientation)

{

oTarget = target as IOrientation;

}

if ((oSource != null) && (oTarget != null))

{

UpdateAll += UpdateRelativeQuaternion;

}

...

void UpdateRelativeQuaternion()

{

Vector3D.V3DOperations.QuaternionInvertMultiply(oSource.Quaternion, oTarget.Quaternion, quaternion);

}

object GetQ0()

{

return quaternion[0];

}

object GetQ1()

{

return quaternion[1];

}

object GetQ2()

{

return quaternion[2];

}

object GetQ3()

{

return quaternion[3];

}

...

IMeasure[] relativeQuaternion = new IMeasure[4];

Func<object>[] quat = new Func<object>[] { GetQ0, GetQ1, GetQ2, GetQ3 };

string[] names = new string[] { "Q0", "Q1", "Q2", "Q3" };

for (int i = 0; i < 4; i++)

{

relativeQuaternion[i] = new Measure(quat[i], names[i]);

}

...

9 Differentiation

A lot of engineering problems require differentiation of parameters. Differentiation is supported by the following interface:

public interface IDerivation

{

IMeasure Derivation

{

get;

}

}

Let us consider an application of this interface. Suppose that we have the following function and its derivative:

$f(t) = t^2$;

$\frac{d}{dt} f(t) = 2t$.

Following code implements this sample:

public class SquareTime : IMeasure, IDerivation

{

#region Fields

double t;

const double type = (double)0;

SquareTimeDerivation derivation;

#endregion

#region Ctor

public SquareTime()

{

derivation = new SquareTimeDerivation(this);

}

#endregion

#region Public Members

public double Time

{

get

{

return t;

}

set

{

t = value;

}

}

#endregion

#region Private Members

object GetSquareTime()

{

return t * t;

}

#endregion

#region IMeasure Members

Func<object> IMeasure.Parameter

{

get { return GetSquareTime; }

}

string IMeasure.Name

{

get { return "SquareOfTime"; }

}

object IMeasure.Type

{

get { return type; }

}

#endregion

#region IDerivation Members

IMeasure IDerivation.Derivation

{

get { return derivation; }

}

#endregion

#region Derivation

class SquareTimeDerivation : IMeasure

{

#region Fields

SquareTime squareTime;

#endregion

#region Ctor

internal SquareTimeDerivation(SquareTime squareTime)

{

this.squareTime = squareTime;

}

#endregion

#region Private Members

object GetSquareTimeDerivation()

{

return 2 * squareTime.t;

}

#endregion

#region IMeasure Members

Func<object> IMeasure.Parameter

{

get { return GetSquareTimeDerivation; }

}

string IMeasure.Name

{

get { return "SquareOfTimeDerivation"; }

}

object IMeasure.Type

{

get { return SquareTime.type; }

}

#endregion

}

#endregion

}

This sample is too specific: hand-coding the derivative of every particular function is not a good idea. The next sections contain alternative methods of differentiation. The following code snippet shows the calculation of a derivative order and of higher derivatives:

public static int GetDerivativeOrder(this IMeasure measure)

{

if (measure is IDerivation)

{

IDerivation d = measure as IDerivation;

IMeasure m = d.Derivation;

return GetDerivativeOrder(m) + 1;

}

return 0;

}

public static IMeasure GetHigherDerivative(this IMeasure measure, int order)

{

if (order == 0)

{

return measure;

}

if (measure is IDerivation)

{

IDerivation d = measure as IDerivation;

IMeasure m = d.Derivation;

return GetHigherDerivative(m, order - 1);

}

return null;

}
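A short usage sketch may help here. The interfaces below are simplified stand-ins for the article's `IMeasure` and `IDerivation` (only the members needed for the example), and `PlainMeasure`, `DifferentiableMeasure` and `DerivativeOrderSketch` are hypothetical names. The chain t² → 2t → 2 has derivative order 2 because two of its links expose a derivative.

```csharp
using System;

// Simplified stand-ins for the article's interfaces.
public interface IMeasure { Func<object> Parameter { get; } string Name { get; } }
public interface IDerivation { IMeasure Derivation { get; } }

// A measure without a derivative.
public class PlainMeasure : IMeasure
{
    private readonly Func<object> parameter;
    private readonly string name;
    public PlainMeasure(Func<object> parameter, string name)
    {
        this.parameter = parameter;
        this.name = name;
    }
    public Func<object> Parameter { get { return parameter; } }
    public string Name { get { return name; } }
}

// A measure which also exposes its derivative.
public class DifferentiableMeasure : PlainMeasure, IDerivation
{
    private readonly IMeasure derivation;
    public DifferentiableMeasure(Func<object> parameter, string name, IMeasure derivation)
        : base(parameter, name)
    {
        this.derivation = derivation;
    }
    public IMeasure Derivation { get { return derivation; } }
}

public static class DerivativeOrderSketch
{
    // Same recursion as GetDerivativeOrder above, written with an 'as' cast.
    public static int GetDerivativeOrder(IMeasure measure)
    {
        IDerivation d = measure as IDerivation;
        return d == null ? 0 : GetDerivativeOrder(d.Derivation) + 1;
    }

    // Builds the chain t^2 -> 2t -> 2 for a fixed value of t.
    public static IMeasure SquareOfTime(double t)
    {
        IMeasure second = new PlainMeasure(() => 2.0, "SecondDerivative");
        IMeasure first = new DifferentiableMeasure(() => 2 * t, "FirstDerivative", second);
        return new DifferentiableMeasure(() => t * t, "SquareOfTime", first);
    }
}
```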

Following three subsections explain calculation of derivations.

9.1 Symbolic Differentiation

This link contains the theory of symbolic differentiation. If a calculation is represented as an expression tree, then its derivative can be defined recursively by the chain rule. The tree above contains the binary operations "+", "-", "*".

Following interface supplies derivation calculation:

public interface IDerivationOperation

{

ObjectFormulaTree Derivation(ObjectFormulaTree tree, string variableName);

}

Following code implements recursive (chain rule) calculation of derivation:

static public ObjectFormulaTree Derivation(this ObjectFormulaTree tree, string variableName)

{

if (tree.Operation is IDerivationOperation)

{

IDerivationOperation op = tree.Operation as IDerivationOperation;

return op.Derivation(tree, variableName);

}

return null;

}

Besides the binary operations "+", "-", "*", "/" there are nullary, unary, ternary and other operations.

The two most important types of nullary operations are constants and variables.

The derivative of any constant is equal to zero. Every constant corresponds to an element of the following type:

public class ElementaryRealConstant : IObjectOperation, IDerivationOperation

{

#region Fields

private const Double a = 0;

private double val;

public static readonly ObjectFormulaTree RealZero = NullTree;

private bool isZero = false;

#endregion

#region Ctor

public ElementaryRealConstant(double val)

{

this.val = val;

}

#endregion

#region IDerivationOperation Members

ObjectFormulaTree IDerivationOperation.Derivation(ObjectFormulaTree tree, string variableName)

{

return RealZero;

}

#endregion

public object this[object[] x]

{

get

{

return val;

}

}

public object ReturnType

{

get

{

return a;

}

}

static private ObjectFormulaTree NullTree

{

get

{

ElementaryRealConstant op = new ElementaryRealConstant(0);

op.isZero = true;

return new ObjectFormulaTree(op, new List<ObjectFormulaTree>());

}

}

}

The partial derivative of a variable with respect to itself is 1, and with respect to any other variable it is 0.
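Written as a formula, this is the standard calculus identity (the index notation $x_i$, $x_j$ is chosen here for illustration):

```latex
\frac{\partial x_i}{\partial x_j} =
\begin{cases}
1, & i = j \\
0, & i \neq j
\end{cases}
```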

Following class reflects this circumstance:

public class VariableDouble : Variable, IDerivationOperation

{

#region Ctor

public VariableDouble(string variableName)

: base((double)0, variableName)

{

}

#endregion

#region IDerivationOperation Members

ObjectFormulaTree IDerivationOperation.Derivation(ObjectFormulaTree tree, string variableName)

{

if (variableName.Equals("d/d" + this.variableName))

{

return new ObjectFormulaTree(new Unity(), new List<ObjectFormulaTree>());

}

return new ObjectFormulaTree(new Zero(), new List<ObjectFormulaTree>());

}

#endregion

#region Helper classes

class Unity : IObjectOperation, IDerivationOperation

{

const Double a = 0;

const Double b = 1;

object[] inputs = new object[0];

static ObjectFormulaTree tree;

internal Unity()

{

}

object[] IObjectOperation.InputTypes

{

get { return inputs; }

}

public virtual object this[object[] x]

{

get { return b; }

}

object IObjectOperation.ReturnType

{

get { return a; }

}

static Unity()

{

tree = new ObjectFormulaTree(new Zero(), new List<ObjectFormulaTree>());

}

ObjectFormulaTree IDerivationOperation.Derivation(ObjectFormulaTree tree, string s)

{

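// The parameter named tree hides the static field, so the field is qualified

// explicitly: the derivative of a constant is the zero tree.

return Unity.tree;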

}

}

class Zero : Unity

{

const Double c = 0;

public override object this[object[] x]

{

get

{

return c;

}

}

}

#endregion

}

Unary operations are elementary functions such as sine and cosine. Their derivatives are taken from the table of derivatives of elementary functions. Derivatives of addition and multiplication are calculated as follows:
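For reference, the sum and product rules used for binary operations are the standard ones (here $f$ and $g$ stand for the two operand subtrees and $x$ for the differentiation variable):

```latex
\frac{\partial (f \pm g)}{\partial x}
  = \frac{\partial f}{\partial x} \pm \frac{\partial g}{\partial x},
\qquad
\frac{\partial (f g)}{\partial x}
  = f \, \frac{\partial g}{\partial x} + g \, \frac{\partial f}{\partial x}
```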

The following code calculates the partial derivative of addition, subtraction and multiplication:

ObjectFormulaTree IDerivationOperation.Derivation(ObjectFormulaTree tree, string variableName)

{

bool[] b = new bool[] { false, false };

if ((symbol == '+') | (symbol == '-'))

{

IObjectOperation op = new ElementaryBinaryOperation(symbol,

new object[] { tree[0].ReturnType, tree[1].ReturnType });

List<ObjectFormulaTree> l = new List<ObjectFormulaTree>();

for (int i = 0; i < tree.Count; i++)

{

ObjectFormulaTree t = tree[i].Derivation(variableName);

b[i] = ZeroPerformer.IsZero(t);

l.Add(t);

}

if (b[0])

{

if (b[1])

{

return ElementaryRealConstant.RealZero;

}

if (symbol == '+')

{

return l[1];

}

List<ObjectFormulaTree> ll = new List<ObjectFormulaTree>();

ll.Add(l[1]);

return new ObjectFormulaTree(new ElementaryFunctionOperation('-'), ll);

}

if (b[1])

{

return l[0];

}

return new ObjectFormulaTree(op, l);

}

ObjectFormulaTree[] der = new ObjectFormulaTree[2];

for (int i = 0; i < 2; i++)

{

der[i] = tree[i].Derivation(variableName);

b[i] = ZeroPerformer.IsZero(der[i]);

}

if (symbol == '*')

{

List<ObjectFormulaTree> list = new List<ObjectFormulaTree>();

for (int i = 0; i < 2; i++)

{

List<ObjectFormulaTree> l = new List<ObjectFormulaTree>();

l.Add(tree[i]);

l.Add(der[1 - i]);

ElementaryBinaryOperation o = new ElementaryBinaryOperation('*',

new object[] { l[0].ReturnType, l[1].ReturnType });

list.Add(new ObjectFormulaTree(o, l));

}

if (b[0] & b[1])

{

return ElementaryRealConstant.RealZero;

}

for (int i = 0; i < b.Length; i++)

{

if (b[i])

{

return list[i];

}

}

ElementaryBinaryOperation op = new ElementaryBinaryOperation('+',

new object[] { list[0].ReturnType, list[1].ReturnType });

return new ObjectFormulaTree(op, list);

}

return null;

}

Let us consider following example:

The Expression object contains the function $f(t) = t^3$, and the Transformation object just passes it on without any change:

Now we would like to differentiate the Expression formula. We set the value of Derivation order to 1.

As a result, symbolic differentiation of $f(t) = t^3$ is performed, and the derivative is used by the Transformation object. The Transformation object now has both $x(t)$ and $\frac{d}{dt} x(t)$, where $x(t) = t^3$. The following picture contains charts of both $x(t)$ and $\frac{d}{dt} x(t)$.
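The recursive idea behind this example can be shown with a miniature expression tree. This is only a sketch: the classes `Expr`, `Const`, `Var`, `Add`, `Mul` and `SymbolicSketch` are hypothetical and far simpler than the article's `ObjectFormulaTree`, there is a single variable t, and zero subtrees are not pruned as they are in the article's code.

```csharp
using System;

// Hypothetical miniature expression tree with a single variable t.
public abstract class Expr
{
    public abstract double Eval(double t);
    public abstract Expr Diff();   // derivative with respect to t
}

public sealed class Const : Expr
{
    private readonly double value;
    public Const(double value) { this.value = value; }
    public override double Eval(double t) { return value; }
    public override Expr Diff() { return new Const(0); }   // constant -> 0
}

public sealed class Var : Expr
{
    public override double Eval(double t) { return t; }
    public override Expr Diff() { return new Const(1); }   // dt/dt = 1
}

public sealed class Add : Expr
{
    private readonly Expr a, b;
    public Add(Expr a, Expr b) { this.a = a; this.b = b; }
    public override double Eval(double t) { return a.Eval(t) + b.Eval(t); }
    // Sum rule: (a + b)' = a' + b'
    public override Expr Diff() { return new Add(a.Diff(), b.Diff()); }
}

public sealed class Mul : Expr
{
    private readonly Expr a, b;
    public Mul(Expr a, Expr b) { this.a = a; this.b = b; }
    public override double Eval(double t) { return a.Eval(t) * b.Eval(t); }
    // Product rule: (a * b)' = a * b' + b * a'
    public override Expr Diff()
    {
        return new Add(new Mul(a, b.Diff()), new Mul(b, a.Diff()));
    }
}

public static class SymbolicSketch
{
    // Builds f(t) = t * (t * t) = t^3.
    public static Expr Cube()
    {
        Expr t = new Var();
        return new Mul(t, new Mul(t, t));
    }
}
```

Evaluating `SymbolicSketch.Cube().Diff()` at a point gives the same value as 3t², although the resulting tree is not simplified.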

9.2 Ordinary differential equations

Motion of mechanical objects usually requires ordinary differential equations (abbreviated ODE). The following component provides the solution of an ODE. The following sample contains the solution of an ODE:

This example solves following ODE:

Properties of the ODE component are presented below:

In the case of an ODE the derivative d/dt is simply the right-hand side of the equations, i.e.

d/dt x = -ax + by;

d/dt y = -ay - bx.
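To make the system above concrete, here is a minimal numerical sketch. It assumes a fixed-step classical Runge-Kutta scheme, which is a choice made for this illustration and not necessarily the method used by the ODE component; the class `OdeSketch` and its parameters are hypothetical.

```csharp
using System;

public static class OdeSketch
{
    // Right-hand side of dx/dt = -a x + b y, dy/dt = -a y - b x.
    private static double[] Rhs(double[] s, double a, double b)
    {
        return new double[] { -a * s[0] + b * s[1], -a * s[1] - b * s[0] };
    }

    // Returns s + h * k for two-component vectors.
    private static double[] AddScaled(double[] s, double[] k, double h)
    {
        return new double[] { s[0] + h * k[0], s[1] + h * k[1] };
    }

    // Integrates from t = 0 to t = tEnd with the classical 4th-order Runge-Kutta method.
    public static double[] Solve(double x0, double y0, double a, double b, double tEnd, int steps)
    {
        double h = tEnd / steps;
        double[] s = { x0, y0 };
        for (int i = 0; i < steps; i++)
        {
            double[] k1 = Rhs(s, a, b);
            double[] k2 = Rhs(AddScaled(s, k1, h / 2), a, b);
            double[] k3 = Rhs(AddScaled(s, k2, h / 2), a, b);
            double[] k4 = Rhs(AddScaled(s, k3, h), a, b);
            s = new double[]
            {
                s[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
                s[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
            };
        }
        return s;
    }
}
```

For the initial state x(0) = 1, y(0) = 0 the exact solution is x = e^(-at) cos(bt), y = -e^(-at) sin(bt), a decaying rotation, which can be used to check the numerical result.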

9.3 Transfer functions

A transfer function is a form of ODE. The following picture presents the Transfer function component:

A red (resp. blue) curve represents the output of Transfer function. Properties of Transfer function are presented below:

Since transfer functions are ODEs, they provide derivatives in the evident way.

10 Differentiation and 6D motion

10.1 Basic classes and interfaces

Derivatives of 6D motion parameters play a very important role in engineering. For example, a lot of problems concern velocity and angular velocity. If coordinates are differentiable functions of time, then we can define velocity. Similarly, if components of the quaternion are differentiable functions of time, then we can define angular velocity. The following interfaces are implemented by objects for which velocity (resp. angular velocity) is defined:

public interface IVelocity

{

double[] Velocity

{

get;

}

}

public interface IAngularVelocity

{

double[] Omega

{

get;

}

}

The following two interfaces are implemented by objects with second derivatives of 6D motion parameters:

public interface IAcceleration

{

double[] LinearAcceleration

{

get;

}

double[] RelativeAcceleration

{

get;

}

}

public interface IAngularAcceleration

{

double[] AngularAcceleration

{

get;

}

}

These objects support acceleration and angular acceleration respectively. The following diagram represents classes which implement these interfaces:

10.2 How it works

Let us consider following situation with reference frame. The Frame is object of ReferenceFrameData.

Following table contains mapping between motion parameters of Frame and outputs of Linear and Angular:

| N | Motion parameter | Information provider | Name of output parameter |

| 1 | X | Linear | Formula_1 |

| 2 | Y | Linear | Formula_2 |

| 3 | Z | Linear | Formula_3 |

| 4 | Q0 | Angular | Formula_1 |

| 5 | Q1 | Angular | Formula_2 |

| 6 | Q2 | Angular | Formula_3 |

| 7 | Q3 | Angular | Formula_4 |

Properties of Linear and Angular are presented below:

The Frame object implements following interface:

public interface IReferenceFrame : IPosition

{

ReferenceFrame Own

{

get;

}

List<IPosition> Children

{

get;

}

}

Own is an object of type ReferenceFrame or one of its subtypes. If all coordinates of Frame are differentiable then Own should implement the IVelocity interface. If the orientation parameters are not differentiable then Own should not implement IAngularVelocity. In that case, according to the class diagram of 6D motion objects, Own is an object of MovedFrame type.

According to Section 9.1, motion parameters become differentiable if we set the Derivation order of Linear (resp. Angular) to 1.

The following table represents the choice of the Own property.

| N | Derivation order of Linear | Derivation order of Angular | Type of Own | Implements IVelocity | Implements IAngularVelocity |

| 1 | 0 | 0 | ReferenceFrame | No | No |

| 2 | 1 | 0 | MovedFrame | Yes | No |

| 3 | 0 | 1 | RotatedFrame | No | Yes |

| 4 | 1 | 1 | Motion6DFrame | Yes | Yes |

Following code contains detection of velocity and angular velocity support:

protected override bool IsVelocity

{

get

{

if (!base.IsVelocity)

{

return false;

}

for (int i = 0; i < 3; i++)

{

if (measurements[i].GetDerivativeOrder() < 1)

{

return false;

}

}

return true;

}

}

protected override bool IsAngularVelocity

{

get

{

if (!base.IsAngularVelocity)

{

return false;

}

for (int i = 3; i < 7; i++)

{

if (measurements[i].GetDerivativeOrder() < 1)

{

return false;

}

}

return true;

}

}

Type of Own property is defined by following way:

bool velocity = IsVelocity;

bool angularVelocity = IsAngularVelocity;

if (velocity && angularVelocity)

{

relative = new Motion6DFrame();

owp = new Motion6DFrame();

}

else if (angularVelocity)

{

relative = new RotatedFrame();

owp = new RotatedFrame();

}

else if (velocity)

{

relative = new MovedFrame();

owp = new MovedFrame();

}

else

{

relative = new ReferenceFrame();

owp = new ReferenceFrame();

}

11 Superposition of 6D motions

The "Plane and rotodome" sample shows superposition of 6D motions. Superposition can also be recursive, as presented below:

Frame 2 moves with respect to Frame 1, and in turn Frame 3 moves with respect to Frame 2.

11.1 Motion of Helicopter

A good example of relative motion is the motion of a helicopter. The rotor moves with respect to the fuselage, and in turn the main rotor blades move cyclically throughout the rotation. The following picture represents the rotor assembly:

Following movie shows motion of helicopter blades:

Following picture represents a helicopter motion model:

Objects with one icon contain the necessary kinematics formulae, and objects with the other icon are moving frames. The Fuselage position is a fixed reference frame of the fuselage; this frame can be installed on a moving frame. The Tail rotor motion is a rotating frame which is installed on the Fuselage position; it is the reference frame of the tail rotor. The Base of MR is also installed on the Fuselage position; it is the frame of the main rotor of the helicopter. BL 1, ..., BL 5 are positions of the blades:

All these frames are installed on the Base of MR and do not take blade pitch into account. The frames PL 1, ..., PL 5 are installed on BL 1, ..., BL 5 and are responsible for the blades' pitch.

11.2 Variable-Sweep Wing

Another sample is a variable-sweep wing:

A plane moves with respect to the fuselage, and a high-lift device moves with respect to the plane. Suppose that Frame 2 moves with respect to Frame 1 and the parameters of relative motion are differentiable. Should Frame 2 support velocity calculation? The answer depends on Frame 1: if Frame 1 does not support velocity calculation then Frame 2 does not support it either. The following table shows when velocity calculation is supported.

| N | Frame 1 supports velocity calculation | Frame 1 supports angular velocity calculation | Relative linear parameters are differentiable | Relative angular parameters are differentiable | Frame 2 supports velocity calculation | Frame 2 supports angular velocity calculation |

| 1 | No | No | No | No | No | No |

| 2 | Yes | No | No | No | No | No |

| 3 | No | Yes | No | No | No | No |

| 4 | Yes | Yes | No | No | No | No |

| 5 | No | No | Yes | No | No | No |

| 6 | Yes | No | Yes | No | No | No |

| 7 | No | Yes | Yes | No | No | No |

| 8 | Yes | Yes | Yes | No | Yes | No |

| 9 | No | No | No | Yes | No | No |

| 10 | Yes | No | No | Yes | No | No |

| 11 | No | Yes | No | Yes | No | Yes |

| 12 | Yes | Yes | No | Yes | No | Yes |

| 13 | No | No | Yes | Yes | No | No |

| 14 | Yes | No | Yes | Yes | No | No |

| 15 | No | Yes | Yes | Yes | No | Yes |

| 16 | Yes | Yes | Yes | Yes | Yes | Yes |

Following code contains detection of velocity calculation support:

...

protected virtual bool IsVelocity

{

get

{

if (parent == null)

{

return true;

}

return parent.Own is IVelocity;

}

}

...

protected override bool IsVelocity

{

get

{

if (!base.IsVelocity)

{

return false;

}

for (int i = 0; i < 3; i++)

{

if (measurements[i].GetDerivativeOrder() < 1)

{

return false;

}

}

return true;

}

}

Velocity and angular velocity of Frame 2 are calculated from the following quantities:

- the angular velocities of Frame 1 and Frame 2 and the relative angular velocity;

- A1, A2 - orientation matrices of Frame 1 and Frame 2;

- V1, V2 - velocities of Frame 1 and Frame 2;

- rr - relative position vector of Frame 2 with respect to Frame 1;

- Vr - relative velocity vector of Frame 2 with respect to Frame 1.
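For orientation, the standard rigid-body composition of velocities can be written in terms of these quantities. This is a sketch under stated assumptions, and the article's own conventions may differ: $A_1$ is assumed to map Frame 1 coordinates to base coordinates, $r_r$, $V_r$ and the relative angular velocity $\omega_r$ are assumed to be expressed in Frame 1 coordinates, and $\omega_1$, $\omega_2$ (notation chosen here for the angular velocities of Frame 1 and Frame 2) are assumed to be expressed in base coordinates.

```latex
V_2 = V_1 + \omega_1 \times (A_1 r_r) + A_1 V_r,
\qquad
\omega_2 = \omega_1 + A_1 \omega_r
```

The first formula needs both the velocity and the angular velocity of Frame 1, which agrees with the table above: Frame 2 supports velocity calculation only in rows where Frame 1 supports both.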

12 Relative measurements

The following picture has two frames:

We would like to define parameters of relative motion, which depend on properties of the frames as follows:

| N | Frame 1 implements IVelocity | Frame 1 implements IAngularVelocity | Frame 2 implements IVelocity | Frame 2 implements IAngularVelocity | Range velocity | Relative velocity components Vx, Vy, Vz | Relative angular velocity components |

| 1 | No | No | No | No | No | No | No |

| 2 | Yes | No | No | No | No | No | No |

| 3 | No | Yes | No | No | No | No | No |

| 4 | Yes | Yes | No | No | No | No | No |

| 5 | No | No | Yes | No | No | No | No |

| 6 | Yes | No | Yes | No | Yes | No | No |

| 7 | No | Yes | Yes | No | No | No | No |

| 8 | Yes | Yes | Yes | No | Yes | No | No |

| 9 | No | No | No | Yes | No | No | No |

| 10 | Yes | No | No | Yes | No | No | No |

| 11 | No | Yes | No | Yes | No | No | No |

| 12 | Yes | Yes | No | Yes | No | No | No |

| 13 | No | No | Yes | Yes | No | No | No |

| 14 | Yes | No | Yes | Yes | Yes | No | No |

| 15 | No | Yes | Yes | Yes | No | No | No |

| 16 | Yes | Yes | Yes | Yes | Yes | No | No |

13 Physical fields

13.1 Outlook

Standard natural science education includes following disciplines:

- Mechanics;

- Field theory;

- Other disciplines.

Now we would like to study field theory. Field theory cannot be separated from geometry and mechanics. Let us consider Coulomb's law, which states that the magnitude of the electrostatic force of interaction between two point charges is directly proportional to the product of the magnitudes of the charges and inversely proportional to the square of the distance between them.

So an electrostatic field depends on geometrical position.
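In formula form, with $q_1$, $q_2$ the charges, $r$ the distance between them and $k_e$ the Coulomb constant (symbols chosen here for illustration):

```latex
F = k_e \, \frac{|q_1 q_2|}{r^2}
```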

Basic types of the physical fields domain are presented below:

- IPhysicalField - basic interface of all physical fields;

- IFieldConsumer - consumer of a physical field;

- FieldLink - link between IPhysicalField and IFieldConsumer. This class is an arrow because it implements the ICategoryArrow interface.

What is a field consumer? It is an object which depends on a field. For example, an airplane depends on the radar irradiation field. In turn, the airplane reflects irradiation, so the airplane is simultaneously both an IFieldConsumer and an IPhysicalField. The following code represents the implementation of these types:

public interface IPhysicalField

{

int SpaceDimension

{

get;

}

int Count

{

get;

}

object GetType(int n);

object GetTransformationType(int n);

object[] this[double[] position]

{

get;

}

}

public interface IFieldConsumer

{

int SpaceDimension

{

get;

}

int Count

{

get;

}

IPhysicalField this[int n]

{

get;

}

void Add(IPhysicalField field);

void Remove(IPhysicalField field);

void Consume();

}

[Serializable()]

public class FieldLink : ICategoryArrow, IRemovableObject, ISerializable, IFieldFactory

{

#region Fields

static private IFieldFactory factory = new FieldLink();

protected object obj;

private IFieldConsumer source;

private IPhysicalField target;

#endregion

#region Ctor

public FieldLink()

{

}

protected FieldLink(SerializationInfo info, StreamingContext context)

{

}

#endregion

#region ICategoryArrow Members

ICategoryObject ICategoryArrow.Source

{

get

{

return source as ICategoryObject;

}

set

{

source = value.GetSource<IFieldConsumer>();

}

}

ICategoryObject ICategoryArrow.Target

{

get

{

return target as ICategoryObject;

}

set

{

IFieldFactory f = factory;

if (f != null)

{

IPhysicalField ph = value.GetTarget<IPhysicalField>();

if (ph != null)

{

target = ph;

if (source.SpaceDimension != target.SpaceDimension)

{

throw new CategoryException("Illegal space dimension");

}

source.Add(target);

return;

}

}

CategoryException.ThrowIllegalTargetException();

}

}

bool ICategoryArrow.IsMonomorphism

{

get { throw new Exception("The method or operation is not implemented."); }

}

bool ICategoryArrow.IsEpimorphism

{

get { throw new Exception("The method or operation is not implemented."); }

}

bool ICategoryArrow.IsIsomorphism

{

get { throw new Exception("The method or operation is not implemented."); }

}

ICategoryArrow ICategoryArrow.Compose(ICategory category, ICategoryArrow next)

{

throw new Exception("The method or operation is not implemented.");

}

#endregion

#region IAssociatedObject Members

object IAssociatedObject.Object

{

get

{

return obj;

}

set

{

obj = value;

}

}

#endregion

#region IRemovableObject Members

void IRemovableObject.RemoveObject()

{

source.Remove(target);

}

#endregion

#region ISerializable Members

void ISerializable.GetObjectData(SerializationInfo info, StreamingContext context)

{

}

#endregion

#region IFieldFactory Members

IFieldConsumer IFieldFactory.GetConsumer(object obj)

{

if (obj is IAssociatedObject)

{

IAssociatedObject ao = obj as IAssociatedObject;

return ao.GetObject<IFieldConsumer>();

}

return null;

}

IPhysicalField IFieldFactory.GetField(IFieldConsumer consumer, object obj)

{

if (obj is IAssociatedObject)

{

IAssociatedObject ao = obj as IAssociatedObject;

object o = ao.GetObject<PhysicalField.Interfaces.IPhysicalField>();

}

return null;

}

#endregion

#region Specific Members

static public IFieldFactory Factory

{

get

{

return factory;

}

set

{

factory = value;

}

}

#endregion

}

13.2 Covariant Physical Fields

A vector field can be simply an ordered set of 3 real parameters, or it can be covariant. The following picture explains the meaning of the word "covariant".

If a 3D vector is not covariant then its components depend on the sensor position only. Components of a covariant vector depend on both orientation and position: their values are projections of the geometric vector onto the sensor's axes of reference. The picture above presents two orientations of the sensor, blue and green. Projections of the field vector A are different for these different orientations. Besides covariant vectors the Framework supports covariant tensors. Such tensors can be used in gravimetry.
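The projection described above can be sketched in a few lines. The class `CovariantSketch` and its methods are hypothetical and not part of the Framework; the sensor orientation is assumed to be given by three orthonormal axes expressed in the base frame.

```csharp
using System;

public static class CovariantSketch
{
    // axes[i] is the i-th unit axis of the sensor expressed in the base frame.
    // The result holds the components of the field vector as seen by the sensor.
    public static double[] Project(double[][] axes, double[] field)
    {
        double[] result = new double[3];
        for (int i = 0; i < 3; i++)
        {
            result[i] = axes[i][0] * field[0] + axes[i][1] * field[1] + axes[i][2] * field[2];
        }
        return result;
    }

    // Sensor axes after rotating the sensor by 'angle' radians about the base Z axis.
    public static double[][] RotatedAboutZ(double angle)
    {
        double c = Math.Cos(angle), s = Math.Sin(angle);
        return new double[][]
        {
            new double[] { c, s, 0.0 },    // sensor X axis
            new double[] { -s, c, 0.0 },   // sensor Y axis
            new double[] { 0.0, 0.0, 1.0 } // sensor Z axis
        };
    }
}
```

The same geometric vector A = (1, 0, 0) has sensor components (1, 0, 0) for an unrotated sensor and approximately (0, -1, 0) for a sensor rotated by 90 degrees about Z, which is exactly the orientation dependence that a non-covariant triple of numbers would not show.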

Let us consider the following task. We have a spatially fixed electrical charge which interacts with another charge. The following picture represents this phenomenon:

The Electrostatics field object represents the field of the fixed charge. It is linked with a motionless spatial frame. The Field link is an arrow of FieldLink type and has its own icon. The source of the Field link is the Sensor object of PhysicalFieldMeasurements3D type. The PhysicalFieldMeasurements3D type supplies virtual measurements of field parameters. In turn, the Sensor object is linked to the Motion frame object, which means that the virtual position of Sensor coincides with the position of Motion frame. Results of virtual field measurements are exported to the Motion equations object, which represents Newton's Second Law. Properties of Motion equations are presented below:

In turn, parameters of Motion equations are exported as coordinates of the Motion frame:

This sample is a good demonstration of abstract nonsense because it includes the following three domains:

- Information flow;

- 6D Motion;

- Physical fields.

14 Bridge pattern instead of multiple inheritance

The Bridge Pattern decouples an abstraction from its implementation so that the two can vary independently. This decomposition is economical in the above case of physical fields. We do not need a class which simultaneously implements both IPosition and IPhysicalField. Instead we have a Position class which contains a child object which implements the IPhysicalField interface. This situation is generalized to objects with children. Any object with children implements the following interface:

public interface IChildrenObject

{

IAssociatedObject[] Children

{

get;

}

}

Following functions find a child such that it implements necessary interface:

public static T GetObject<T>(this IAssociatedObject obj) where T : class

{

if (obj is T)

{

return obj as T;

}

if (obj is IChildrenObject)

{

IChildrenObject co = obj as IChildrenObject;

IAssociatedObject[] ch = co.Children;

if (ch != null)

{

foreach (IAssociatedObject ob in ch)

{

T a = GetObject<T>(ob);

if (a != null)

{

return a;

}

}

}

}

return null;

}

public static T GetObject<T>(this IAssociatedObject obj, string message) where T : class

{

T a = GetObject<T>(obj);

if (a != null)

{

return a;

}

throw new Exception(message);

}

public static T GetSource<T>(this IAssociatedObject obj) where T : class

{

return GetObject<T>(obj, CategoryException.IllegalSource);

}

public static T GetTarget<T>(this IAssociatedObject obj) where T : class

{

return GetObject<T>(obj, CategoryException.IllegalTarget);

}

Application of these functions is shown below:

ICategoryObject ICategoryArrow.Source

{

get

{

return source as ICategoryObject;

}

set

{

source = value.GetSource<IFieldConsumer>();

}

}

ICategoryObject ICategoryArrow.Target

{

get

{

return target as ICategoryObject;

}

set

{

target = value.GetTarget<IPhysicalField>();

source.Add(target);

}

}
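A self-contained sketch of the idea follows. The interfaces are simplified stand-ins for the article's types, and `ChargeField`, `Position` and `BridgeSketch` are hypothetical classes: the position object does not implement `IPhysicalField` itself, yet the field is still found through its children.

```csharp
using System;

// Simplified stand-ins for the article's interfaces.
public interface IAssociatedObject { object Object { get; set; } }
public interface IChildrenObject { IAssociatedObject[] Children { get; } }
public interface IPhysicalField { int SpaceDimension { get; } }

// Hypothetical field object; it knows nothing about positions.
public class ChargeField : IAssociatedObject, IPhysicalField
{
    public object Object { get; set; }
    public int SpaceDimension { get { return 3; } }
}

// Hypothetical position object; it holds the field as a child
// instead of inheriting from a field class.
public class Position : IAssociatedObject, IChildrenObject
{
    private readonly IAssociatedObject[] children;
    public Position(params IAssociatedObject[] children) { this.children = children; }
    public object Object { get; set; }
    public IAssociatedObject[] Children { get { return children; } }
}

public static class BridgeSketch
{
    // Same depth-first search as GetObject<T> above, written with 'as' casts.
    public static T GetObject<T>(IAssociatedObject obj) where T : class
    {
        T self = obj as T;
        if (self != null)
        {
            return self;
        }
        IChildrenObject co = obj as IChildrenObject;
        if (co != null && co.Children != null)
        {
            foreach (IAssociatedObject child in co.Children)
            {
                T found = GetObject<T>(child);
                if (found != null)
                {
                    return found;
                }
            }
        }
        return null;
    }
}
```

With this arrangement an arrow such as FieldLink can accept a `Position` as its target: the lookup returns the child field, and new combinations of capabilities need no new classes.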

15 Statistics

The statistics domain contains the following basic objects:

- Structured selection (IStructuredSelection interface);

- Collection of structured selections (IStructuredSelectionCollection interface);

- Consumer of a collection of structured selections (IStructuredSelectionConsumer interface);

- Link between a consumer of selections and a collection of structured selections (SelectionLink class which implements the ICategoryArrow interface).

The Source (resp. Target) of SelectionLink is an object of IStructuredSelectionConsumer (resp. IStructuredSelectionCollection) type.

The following code represents these types:

public interface IStructuredSelection

{

int DataDimension

{

get;

}

double? this[int n]

{

get;

}

double GetWeight(int n);

double GetApriorWeight(int n);

int GetTolerance(int n);

void SetTolerance(int n, int tolerance);

bool HasFixedAmount

{

get;

}

string Name

{

get;

}

}

public interface IStructuredSelectionCollection

{

int Count

{

get;

}

IStructuredSelection this[int i]

{

get;

}

}

public interface IStructuredSelectionConsumer

{

void Add(IStructuredSelectionCollection selection);

void Remove(IStructuredSelectionCollection selection);

}

[Serializable()]

public class SelectionLink : CategoryArrow, IRemovableObject, ISerializable

{

#region Fields

private int a = 0;

private IStructuredSelectionConsumer source;

private IStructuredSelectionCollection target;

#endregion

#region Constructors

public SelectionLink()

{

}

protected SelectionLink(SerializationInfo info, StreamingContext context)

{

a = (int)info.GetValue("A", typeof(int));

}

#endregion

#region ICategoryArrow Members

public override ICategoryObject Source

{

get

{

return source as ICategoryObject;

}

set

{

source = value.GetSource<IStructuredSelectionConsumer>();

}

}

public override ICategoryObject Target

{

get

{

return target as ICategoryObject;

}

set

{

IStructuredSelectionCollection c =

value.GetTarget<IStructuredSelectionCollection>();

target = c;

source.Add(target);

}

}

#endregion

#region IRemovableObject Members

public void RemoveObject()

{

source.Remove(target);

}

#endregion

#region ISerializable Members

public void GetObjectData(SerializationInfo info, StreamingContext context)

{

info.AddValue("A", a);

}

#endregion

}

Above interfaces are very abstract. Let us consider an example of these interfaces. Suppose that we have following table.

We would like to approximate this table by the following equation:

$Y = aX^2 + bX + c$;

where a, b, c are unknown parameters. The following picture contains a solution of this task.

Selection is an object of Series type which implements the IStructuredSelectionCollection interface. It contains two selections:

- X - values of above table (X - coordinates of chart)

- Y - values of above table (Y - coordinates of chart)

GLM is an object of AliasRegression type which implements the IStructuredSelectionConsumer interface. The SL link is a link of the SelectionLink type. SL links GLM with Selection, which means that GLM performs statistical analysis of Selection, i.e. GLM defines the unknown parameters (a, b and c) by a generalized linear model. This example is very simple. However, there are also very complicated samples.
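For the simple quadratic example above, the estimation step can be sketched as an ordinary least-squares fit. This is an illustration only: it assumes unweighted data and solves the 3x3 normal equations by Cramer's rule, whereas the AliasRegression component is more general. The class `QuadraticFitSketch` is hypothetical.

```csharp
using System;

public static class QuadraticFitSketch
{
    // Determinant of a 3x3 matrix.
    private static double Det3(double[,] m)
    {
        return m[0, 0] * (m[1, 1] * m[2, 2] - m[1, 2] * m[2, 1])
             - m[0, 1] * (m[1, 0] * m[2, 2] - m[1, 2] * m[2, 0])
             + m[0, 2] * (m[1, 0] * m[2, 1] - m[1, 1] * m[2, 0]);
    }

    // Returns { a, b, c } minimising the sum of (y_i - a x_i^2 - b x_i - c)^2.
    public static double[] Fit(double[] x, double[] y)
    {
        double s0 = x.Length, s1 = 0, s2 = 0, s3 = 0, s4 = 0;
        double t0 = 0, t1 = 0, t2 = 0;
        for (int i = 0; i < x.Length; i++)
        {
            double xi = x[i], x2 = xi * xi;
            s1 += xi; s2 += x2; s3 += x2 * xi; s4 += x2 * x2;
            t0 += y[i]; t1 += xi * y[i]; t2 += x2 * y[i];
        }
        // Normal equations N * (a, b, c)^T = r.
        double[,] n = { { s4, s3, s2 }, { s3, s2, s1 }, { s2, s1, s0 } };
        double[] r = { t2, t1, t0 };
        double d = Det3(n);
        double[] result = new double[3];
        for (int k = 0; k < 3; k++)
        {
            // Cramer's rule: replace column k by the right-hand side.
            double[,] m = (double[,])n.Clone();
            for (int i = 0; i < 3; i++)
            {
                m[i, k] = r[i];
            }
            result[k] = Det3(m) / d;
        }
        return result;
    }
}
```

At least three distinct X values are needed, otherwise the determinant of the normal equations is zero.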

This article contains a complicated sample of orbit determination.

Many samples are contained in my article devoted to regression.

16 Statistics + 6D Motion. Pulse-Doppler radar application

A pulse-Doppler radar is a system capable of detecting a target's distance and its radial velocity (range rate). Some radar systems are also capable of detecting altitude and azimuth.

Let us consider determination of a target's motion parameters by two pulse radars.

The following picture represents this situation:

The Motion parameters component defines motion parameters given by

Formula_1 = at + b;

Formula_2 = ct + d;

Formula_3 = ft + g

where t is the time and a, b, c, d, f, g are constants which we would like to determine. The Motion parameters component performs symbolic calculation of the time derivative. The following table contains the mapping between outputs of Motion parameters and Target Frame motion parameters:

| N | Motion parameters output parameter | Target Frame motion parameter |

| 1 | Formula_1 | X- coordinate |

| 2 | Formula_2 | Y- coordinate |

| 3 | Formula_3 | Z- coordinate |

Since Motion parameters supplies symbolic calculation of derivatives, the Target Frame implicitly supports calculation of velocity. Relative 1 (resp. Relative 2) supplies parameters of Target Frame motion with respect to Frame 1 (resp. Frame 2). Measurements calculates the parameters supplied by the radars.

The target's distance and its radial velocity are given directly by Relative 1 and Relative 2 (both Relative 1 and Relative 2 implicitly calculate radial velocity). The altitude and azimuth angles are given by:
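The four radar observables can be sketched as functions of the relative position and velocity of the target. The angle conventions below are assumptions made for this illustration (altitude as the elevation angle above the local XY plane, azimuth measured in the XY plane from the X axis towards the Y axis) and may differ from those of the Measurements component; the class `RadarObservablesSketch` is hypothetical.

```csharp
using System;

public static class RadarObservablesSketch
{
    // r: position of the target relative to the radar, in the radar's axes.
    public static double Range(double[] r)
    {
        return Math.Sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    }

    // Radial velocity (range rate): projection of the relative velocity v
    // onto the line of sight.
    public static double RangeRate(double[] r, double[] v)
    {
        return (r[0] * v[0] + r[1] * v[1] + r[2] * v[2]) / Range(r);
    }

    // Altitude (elevation angle) above the local XY plane; assumed convention.
    public static double Altitude(double[] r)
    {
        return Math.Asin(r[2] / Range(r));
    }

    // Azimuth in the XY plane, from the X axis towards the Y axis; assumed convention.
    public static double Azimuth(double[] r)
    {
        return Math.Atan2(r[1], r[0]);
    }
}
```

All four functions require a non-zero relative position, since the line of sight is undefined when the target coincides with the radar.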

Full picture of motion determination is presented below:

This picture contains following additional parameters:

- Selections of the target's distance, radial velocity, altitude and azimuth

- Accumulator of measurements

- Standard deviations of selections

- Selections with unequal variances

- General linear model component

GLM is a general linear model which has the following properties:

The left part contains the defined parameters a, b, c, d, f, g of Motion parameters. The middle part contains calculated parameters. The right part contains selections with unequal variances.

The general linear model defines values of the parameters a, b, c, d, f, g such that the squared difference between the values of selections and the calculated values becomes minimal.

17 Image processing

The image processing domain contains the following basic types:

- Provider of bitmap (IBitmapProvider interface);

- Consumer of bitmap (IBitmapConsumer interface);

- Link between a consumer of bitmap and a provider of bitmap (BitmapConsumerLink class which implements the ICategoryArrow interface).

A dedicated icon corresponds to the BitmapConsumerLink arrow. The following code represents these types:

    using System;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Runtime.Serialization;
    // CategoryArrow, ICategoryObject, IRemovableObject, IAssociatedObject and the
    // GetSource/GetTarget helpers come from the framework's own namespaces.

    // Provider of a bitmap.
    public interface IBitmapProvider
    {
        Bitmap Bitmap { get; }
    }

    // Consumer of bitmaps supplied by one or more providers.
    public interface IBitmapConsumer
    {
        void Process();

        IEnumerable<IBitmapProvider> Providers { get; }

        void Add(IBitmapProvider provider);

        void Remove(IBitmapProvider provider);

        // Raised when a provider is added or removed.
        event Action<IBitmapProvider, bool> AddRemove;
    }

    // Arrow that links a bitmap consumer (source) to a bitmap provider (target).
    [Serializable]
    public class BitmapConsumerLink : CategoryArrow, IRemovableObject, ISerializable
    {
        #region Fields

        public static readonly string ProviderExists = "Bitmap provider already exists";

        public static readonly string SetProviderBefore =
            "You should create bitmap provider before consumer";

        private int a = 0;

        private IBitmapConsumer source;

        private IBitmapProvider target;

        #endregion

        #region Constructors

        public BitmapConsumerLink()
        {
        }

        // Deserialization constructor: restores the value written by GetObjectData.
        protected BitmapConsumerLink(SerializationInfo info, StreamingContext context)
        {
            a = (int)info.GetValue("A", typeof(int));
        }

        #endregion

        #region ICategoryArrow Members

        public override ICategoryObject Source
        {
            get { return source as ICategoryObject; }
            set { source = value.GetSource<IBitmapConsumer>(); }
        }

        public override ICategoryObject Target
        {
            get { return target as ICategoryObject; }
            set
            {
                target = value.GetTarget<IBitmapProvider>();
                // The source must already be set: the provider is registered with it.
                source.Add(target);
            }
        }

        #endregion

        #region IRemovableObject Members

        // Detaches the provider from the consumer when the arrow is removed.
        public void RemoveObject()
        {
            if (source != null && target != null)
            {
                source.Remove(target);
            }
        }

        #endregion

        #region ISerializable Members

        public void GetObjectData(SerializationInfo info, StreamingContext context)
        {
            info.AddValue("A", a);
        }

        #endregion

        #region Specific Members

        // Updates every provider that is itself a consumer, then the consumer.
        public static void Update(IBitmapConsumer consumer)
        {
            foreach (IBitmapProvider provider in consumer.Providers)
            {
                if (provider is IBitmapConsumer)
                {
                    Update(provider as IBitmapConsumer);
                }
            }
            consumer.Process();
        }

        #endregion

        #region Private

        // Consumer wrapped by the source, if the source is an associated object.
        private IBitmapConsumer AssociatedSource
        {
            get
            {
                IAssociatedObject ao = source as IAssociatedObject;
                return (ao == null) ? null : ao.Object as IBitmapConsumer;
            }
        }

        #endregion
    }
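The order in which BitmapConsumerLink.Update calls Process can be illustrated with a minimal sketch. The types below (IProvider, IConsumer, Node, Source, Pipeline) are hypothetical stand-ins invented for this example, not the framework's classes. The bitmap payload is left out so that the sketch needs no System.Drawing reference, and only the traversal is reproduced.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for IBitmapProvider (bitmap omitted).
public interface IProvider
{
    string Name { get; }
}

// Hypothetical stand-in for IBitmapConsumer (only what the traversal needs).
public interface IConsumer
{
    IEnumerable<IProvider> Providers { get; }
    void Process();
}

// A pure provider, for example an image loaded from a file.
public class Source : IProvider
{
    public Source(string name) { Name = name; }
    public string Name { get; private set; }
}

// A node that both consumes and provides, like a filter inside a chain.
public class Node : IProvider, IConsumer
{
    private readonly List<IProvider> providers = new List<IProvider>();
    private readonly List<string> log;

    public Node(string name, List<string> log)
    {
        Name = name;
        this.log = log;
    }

    public string Name { get; private set; }
    public IEnumerable<IProvider> Providers { get { return providers; } }
    public void Add(IProvider provider) { providers.Add(provider); }

    // Records the call so that the processing order can be inspected.
    public void Process() { log.Add(Name); }
}

public static class Pipeline
{
    // Same traversal as BitmapConsumerLink.Update: upstream consumers first.
    public static void Update(IConsumer consumer)
    {
        foreach (IProvider provider in consumer.Providers)
        {
            IConsumer upstream = provider as IConsumer;
            if (upstream != null)
            {
                Update(upstream);
            }
        }
        consumer.Process();
    }

    // Builds the chain source -> blur -> edges and updates the last node.
    public static List<string> Demo()
    {
        var log = new List<string>();
        var source = new Source("source");
        var blur = new Node("blur", log);
        var edges = new Node("edges", log);
        blur.Add(source);
        edges.Add(blur);
        Update(edges);
        return log;
    }
}
```

In this chain, Demo returns the names in the order blur, edges: each consumer is processed only after the consumers it depends on. The traversal keeps no record of visited nodes, so a provider shared by several consumers is processed once per path, and a cyclic graph would recurse without end.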

The following picture shows an example of image processing: