Contents

- Based on the Azure Lease Blob

- Custom Metadata properties TTL and DBC

- Loosely decoupled pattern

- Multiple Worker Roles

- Built-in tiny scheduler

- No database needed

Windows Azure Blob Storage is a service for storing unstructured data that can be accessed over HTTP or HTTPS. In a modern distributed architecture, blob storage plays a very significant role in storing images, documents, files, video, backups, troubleshooting data, etc. Most of these resources "live forever" once they have been stored; they can live independently of the application that created them.

New features of Azure Blob Storage, such as Blob and Container Leases (supported by version 2012-02-12 of the REST API and packaged in the Windows Azure SDK 2.0 for .NET), create a new opportunity for Azure Blobs in an event-driven distributed architecture. Business processors can establish and manage a lock on an Azure Storage blob or container for write and delete operations. More details can be found in my previous article, Using Azure Lease Blob.

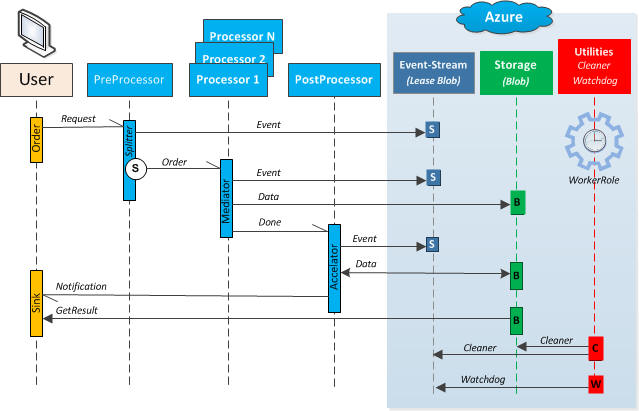

In this event-driven distributed architecture, the lease blob holds an Event-Stream of the business processing, the data is stored in Blob Storage, and the business processes are driven by short messages in a fire-and-forget manner (or Pub/Sub using Azure Service Bus). The following picture shows an example of this scenario:

The above picture shows the position of Azure Blob Storage within the event-driven architecture. The order message is split and distributed to the processors (workers) in parallel and, based on the Event-Stream (lease blob), the Post-Process can trigger an acceleration of the result and deliver the final notification message to the User. During this processing, the Event-Stream and data are temporarily stored in Azure Blob Storage. When the process is completed, these resources can be deleted after a certain time or moved to an archive container with a different access policy.

Other entities of the Azure infrastructure, such as Azure Service Bus, let the consumer determine the lifetime of a resource with TimeToLive (TTL) and move an expired entity to a DeadLetterQueue (DLQ). It would be great to have these features built into the Azure Blob Storage service, but today we have to build them on the consumer side.

This article shows how we can add features such as TimeToLive (TTL) and DeadBlobContainer (DBC) to Azure Blob Storage in a loosely coupled manner.

The following picture shows the requirements for blobs marked for TTL and DBC:

The above process is very straightforward. We need a service that looks at the TTL (and DBC) properties of the blobs and takes an action such as Move or Delete. Azure Blob doesn't have these properties, so we have to create and store them in the blob's metadata. That's the only requirement for Azure Blobs in our application. Note that without these custom TTL and DBC properties, the service cannot move and/or delete blobs in the container.

Of course, there is a way to mark all blobs in a container for TTL and DBC, but that requires an additional service.

Once we have marked a blob for TTL/DBC, we need a service (the best solution is a worker role) that scans the blobs in the container and deletes them or moves them to a specific container known as the DeadBlobContainer. This service is time driven; it runs, for instance, every 10 minutes. In this article I will describe the design and implementation of a worker role for the TTL and DBC features of Azure Blob Storage.

Lastly, when a blob is moved to the DeadBlobContainer, the TTL property stored in its metadata is removed. Based on the application requirements, the blob can hold additional properties in its metadata, such as SourceUri, to show where the blob has been moved from.

Note that the DBC feature is optional. The TimeToLive (TTL) property is mandatory for the "cleaner service" to perform a Move and/or Delete. A blob marked with the TTL property only is permanently deleted when it expires.

OK, let's continue with the concept and design. I am assuming you have a working knowledge of the Windows Azure Platform.

The concept of Azure Blob TimeToLive (TTL/DBC) is based on polling blobs for expiration of the UTC DateTime value stored in the custom metadata property TimeToLive. The following screen snippet shows an example of the custom blob metadata properties TTL and DBC:

Once the blob is marked for TTL (and the optional DBC property), it is ready for the polling process. That's the easy part of the concept and design: the application can easily set any custom metadata on a specific blob. When a blob is going to be moved to the DeadBlobContainer, it is recommended to add more details about the blob to its metadata, such as SourceUri.
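As a minimal sketch of the marking step, the helper below writes and evaluates the two metadata values on a plain string dictionary (the storage client exposes blob metadata as `IDictionary<string, string>`). The `TtlMetadata` class and its method names are hypothetical and not part of the article's solution; the round-trip ("o") UTC format is an assumption chosen so the value parses the same way on every machine.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper, not part of the article's solution.
public static class TtlMetadata
{
    public const string TimeToLiveKey = "TimeToLive";
    public const string DeadBlobContainerKey = "DeadBlobContainer";

    // Stores the expiry as a round-trippable UTC string (ends with 'Z').
    // The DeadBlobContainer value is optional.
    public static void Mark(IDictionary<string, string> metadata, DateTime expiresUtc, string deadBlobContainer = null)
    {
        metadata[TimeToLiveKey] = expiresUtc.ToUniversalTime().ToString("o", CultureInfo.InvariantCulture);
        if (!string.IsNullOrEmpty(deadBlobContainer))
            metadata[DeadBlobContainerKey] = deadBlobContainer;
    }

    // True when a TTL value is present and is at or before 'nowUtc'.
    // Missing or unparsable values are treated as "not expired".
    public static bool IsExpired(IDictionary<string, string> metadata, DateTime nowUtc)
    {
        string raw;
        DateTime ttl;
        return metadata.TryGetValue(TimeToLiveKey, out raw)
            && DateTime.TryParse(raw, CultureInfo.InvariantCulture,
                   DateTimeStyles.AssumeUniversal | DateTimeStyles.AdjustToUniversal, out ttl)
            && ttl <= nowUtc;
    }
}
```

An application would call `TtlMetadata.Mark(blob.Metadata, DateTime.UtcNow.AddHours(1), "dbc/deleteme/")` and then save the metadata with the storage client; the save call itself is left out of this sketch.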

As you know, it is not difficult to clean up a few blobs in a couple of containers. But making a generic cleaner service for purging and moving blobs from many containers with thousands of blobs requires additional considerations such as scalability and performance. That's the second part of the concept and design for Azure Blob TTL/DBC.

The magic that meets this requirement for scalability and performance relies on two features of Azure Blob: Lease Blob and the ContinuationToken for paging blobs from a container. These two features enable a design that scales as needed just by adding more worker role instances.

The following screen snippet shows this part:

The worker role needs one Lease Blob for holding the state of the purging process across multiple instances. The purging job state is stored in this lease blob, where a user (or another application) can specify which containers (from any account) are going to be purged. Note that private containers must be addressed with a shared access signature (SAS) and a valid policy for List/Delete/Read.

When a blob is moved to the DeadBlobContainer (DBC), the worker role needs to know the storage account of the DBC container. Note that there is only one common DBC account for purged blobs from all scanned containers.

During the polling time, a worker role instance (only one at a time) needs to exclusively obtain the job state from the Lease Blob in order to start pulling blobs (page by page) from the containers, based on the status and ContinuationToken. Once the instance has its page of blobs, it releases the Lease Blob to allow another instance to do the same, based on the process state stored in this Lease Blob.

The deleting and moving of blobs happens outside the Lease Blob sequence, in a highly parallel and asynchronous manner, using the C# 5.0 async/await keywords on multiple cores. The blobs in specific containers can be filtered by prefix. The status of pulling the blobs (including their metadata/properties) page by page is indicated by the following enum values:

Note that the Off status excludes a specific container from the purging process. When the pulling of blobs is completed, the status is set back to Init and the timestamp is updated to the DateTime.UtcNow value to indicate when this occurred, along with the counter of total purged blobs. The next polling cycle starts from this point.
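The status values can be sketched as follows. The names are the ones used by the code snippets in this article (`Init`, `Current`, `Last`, `FirstAndlast`, `Off`); the declaration order and the `PagingState.Next` helper are assumptions added only to illustrate how the status moves after one page has been listed.

```csharp
using System;

// Names taken from the article's snippets; the declaration order is an assumption.
public enum TokenStatus { Off, Init, Current, Last, FirstAndlast }

// Hypothetical helper, not part of the article's solution.
public static class PagingState
{
    // Status of a container after one page has been listed:
    // a non-empty continuation marker means that more pages follow.
    public static TokenStatus Next(TokenStatus current, string nextMarker)
    {
        if (current != TokenStatus.Init && current != TokenStatus.Current)
            throw new InvalidOperationException("Only Init or Current containers are paged.");

        if (!string.IsNullOrEmpty(nextMarker))
            return TokenStatus.Current;

        // No marker: this was the only page (Init) or the final page (Current).
        return current == TokenStatus.Init ? TokenStatus.FirstAndlast : TokenStatus.Last;
    }
}
```

A container therefore moves from Init to Current while pages remain, and ends in Last, or in FirstAndlast when a single page held everything.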

Implementing the Azure TTL blob service as a worker role is very straightforward. The purging job loop with the polling scheduler is shown in the following code snippet:

```csharp
public override void Run()
{
    Trace.TraceInformation("WorkerRoleTimeToLiveBlob entry point called, Id={0}", RoleEnvironment.CurrentRoleInstance.Id);

    // Polling interval in minutes, read from the role configuration.
    int poolingTimeInMin = Int32.Parse(RoleEnvironment.GetConfigurationSettingValue("RKiss.TTLBlob.PoolingTimeInMin"));
    TimeSpan sleepingTime = TimeSpan.FromMinutes(poolingTimeInMin);

    while (true)
    {
        // Run one purging job and block until it has completed.
        var ttl = new TimeToLiveJob();
        Task.Run(() => ttl.StartAsync(sleepingTime)).Wait();

        // Sleep until the next multiple of the polling interval.
        DateTime dtNow = DateTime.UtcNow;
        sleepingTime = RoundUp(dtNow, TimeSpan.FromMinutes(poolingTimeInMin)) - dtNow;
        Trace.TraceInformation("[{0}] Working", sleepingTime);
        Thread.Sleep(sleepingTime);
    }
}
```

The async task StartAsync represents the actual work of moving and deleting TTL blobs. The job must be completed before the next scheduled loop. The current time is rounded up to the next polling interval, and the difference is used as the sleeping time.

As you can see, the implementation is encapsulated in the StartAsync method, so let's look inside this workhorse logic.
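The RoundUp helper called in the loop is not listed in this article. A minimal sketch of one way to write it, assuming it rounds a time up to the next multiple of the interval, could look like this (the `SchedulerMath` class name is hypothetical):

```csharp
using System;

// Hypothetical helper; the article's own RoundUp implementation is not shown.
public static class SchedulerMath
{
    // Rounds 'dt' up to the next multiple of 'interval', measured in ticks.
    // A value that is already on a boundary is returned unchanged.
    public static DateTime RoundUp(DateTime dt, TimeSpan interval)
    {
        if (interval <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException("interval");

        long remainder = dt.Ticks % interval.Ticks;
        return remainder == 0
            ? dt
            : new DateTime(dt.Ticks + interval.Ticks - remainder, dt.Kind);
    }
}
```

With a 10-minute interval, a job that finishes at 12:03:20 sleeps for 6 minutes and 40 seconds and wakes up at 12:10:00. Because every instance rounds to the same wall-clock boundaries, all instances wake up at about the same time and then compete for the Lease Blob.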

First of all, the following picture shows a class diagram for objects used in this implementation:

Basically, we have only one abstraction, the Containers class. This class represents the job state for the containers, stored in the Lease Blob as XML-formatted text. Based on this object, each worker role instance can pull its page of blobs for moving and/or deleting. The number of blobs per page is configurable; the default value is 100.

OK, let's assume we have this page of blobs. The following code snippet shows how the page can be processed for moving and deleting TTL blobs in the parallel async/await manner:

```csharp
// Parallel.ForEach does not wait for async lambdas (they compile to async void),
// so each blob gets its own task and a semaphore keeps at most 15 in flight.
var throttle = new SemaphoreSlim(15);
var tasks = listBlobs.Select(async blob =>
{
    await throttle.WaitAsync();
    try
    {
        if (blob.Metadata.ContainsKey("TimeToLive") && DateTime.Parse(blob.Metadata["TimeToLive"]) <= DateTime.UtcNow)
        {
            string ttl = blob.Metadata["TimeToLive"];
            if (blob.Metadata.ContainsKey("DeadBlobContainer") && !string.IsNullOrEmpty(connectionstringForDBC))
            {
                var client = CloudStorageAccount.Parse(connectionstringForDBC).CreateCloudBlobClient();

                // Resolve the destination name from the DeadBlobContainer value.
                string destRef = blob.Metadata["DeadBlobContainer"];
                if (destRef.EndsWith("/"))
                    destRef += blob.Name;
                else if (destRef.IndexOf('/') < 0)
                    destRef += "/" + blob.Name;

                CloudBlockBlob destBlob = client.GetBlockBlobReference(destRef);
                destBlob.Container.CreateIfNotExists();

                // Remove the TTL marker before copying, so the moved blob is not purged again.
                blob.Metadata.Remove("TimeToLive");
                await Task.Factory.FromAsync(blob.BeginSetMetadata(null, null), blob.EndSetMetadata);

                // Await the copy request instead of blocking the thread with Wait().
                await Task.Factory.FromAsync<string>(
                    destBlob.BeginStartCopyFromBlob(blob, null, null), destBlob.EndStartCopyFromBlob);
                if (destBlob.CopyState.Status != CopyStatus.Success)
                {
                    throw new Exception("Copy blob failed, url=" + blob.Name);
                }
                Trace.TraceInformation("[{0}] COPY [{1}] {2}",
                    (DateTime.Now - dtStart).TotalMilliseconds.ToString("######"),
                    blob.Metadata["DeadBlobContainer"], blob.Name);
            }

            Interlocked.Increment(ref counter);
            await Task.Factory.FromAsync<bool>(blob.BeginDeleteIfExists(null, null), blob.EndDeleteIfExists);
            Trace.TraceInformation("[{0}] DELETE [{1}] {2}",
                (DateTime.Now - dtStart).TotalMilliseconds.ToString("######"), ttl, blob.Name);
        }
    }
    catch (Exception ex)
    {
        Trace.TraceInformation(ex.InnerException == null ? ex.Message : ex.InnerException.Message);
    }
    finally
    {
        throttle.Release();
    }
}).ToList();

// Wait until every blob in the page has been processed.
await Task.WhenAll(tasks);
```

As you can see, the destination blob name is configurable through the DeadBlobContainer value. An optional trailing '/' character keeps the original blob name under the specified path. The DBC can also be created on the fly if it doesn't exist. All Azure Storage Blob client Begin/End methods run as async/await Tasks.
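The naming rules from the snippet above can be isolated into a small pure function, which makes them easy to check without touching storage. This is only a sketch; the `DeadBlobPath` class and the sample values are hypothetical.

```csharp
using System;

// Hypothetical helper that repeats the naming rules used by the snippet above.
public static class DeadBlobPath
{
    // "dbc"           -> "dbc/<blobName>"           (container only)
    // "dbc/deleteme/" -> "dbc/deleteme/<blobName>"  (trailing '/' keeps the original name)
    // "dbc/deleteme"  -> "dbc/deleteme"             (explicit new blob name)
    public static string Resolve(string deadBlobContainer, string blobName)
    {
        if (string.IsNullOrEmpty(deadBlobContainer))
            throw new ArgumentException("A DeadBlobContainer value is required.", "deadBlobContainer");

        if (deadBlobContainer.EndsWith("/", StringComparison.Ordinal))
            return deadBlobContainer + blobName;

        if (deadBlobContainer.IndexOf('/') < 0)
            return deadBlobContainer + "/" + blobName;

        return deadBlobContainer;
    }
}
```

Note the third case: every blob marked with the same explicit name ends up at the same destination, so a later move overwrites an earlier one.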

The following code snippet shows how we can obtain a list of the blobs within the Lease Blob sequence:

```csharp
// Pick a container that still has pages to list.
var container = job.Container.AsParallel()
    .FirstOrDefault(c => c.Status == TokenStatus.Init || c.Status == TokenStatus.Current);

if (container != null)
{
    Trace.TraceInformation("[{0}] Get {1}",
        (DateTime.Now - dtStart).TotalMilliseconds.ToString("######"), container.ToString());

    // List one page of blobs (with metadata), continuing from the stored token.
    var cbc = new CloudBlobContainer(new Uri(container.Uri));
    var brs = await Task.Factory.FromAsync<BlobResultSegment>(
        cbc.BeginListBlobsSegmented(container.Prefix, true, BlobListingDetails.Metadata, pageSize,
            new BlobContinuationToken() { NextMarker = container.Token },
            new BlobRequestOptions(), null, null, null),
        cbc.EndListBlobsSegmented);

    if (brs.ContinuationToken != null)
    {
        // More pages follow: remember where the next instance has to continue.
        container.Token = brs.ContinuationToken.NextMarker;
        container.Status = TokenStatus.Current;
    }
    else
    {
        // This was the last (or the only) page of the container.
        container.Token = string.Empty;
        container.Status = container.Status == TokenStatus.Init ?
            TokenStatus.FirstAndlast : TokenStatus.Last;
    }

    listOfBlobs = brs.Results.Cast<CloudBlockBlob>().ToList();
    Trace.TraceInformation("[{0}] Put {1}",
        (DateTime.Now - dtStart).TotalMilliseconds.ToString("######"), container.ToString());
}
else
{
    // All containers are done: reset their status for the next polling cycle.
    Trace.TraceInformation("DONE status=Init");
    job.Container.AsParallel().Where(c => c.Status != TokenStatus.Off)
        .ForAll(c => { c.Status = TokenStatus.Init; });
    job.Timestamp = DateTime.UtcNow.ToString();
}
```

First, we get the first container for paging from the Lease Blob state. Then we call the segmented ListBlobs asynchronously to obtain one page of blobs filtered by prefix. Based on the result, the job state is updated and written back to the Lease Blob.

Once all containers are done, the job state is reset to Init and job.Timestamp is updated. As you can see, the process is very fast. In fact, it has to be, because the goal within the Lease Blob sequence is to get a page of blobs and release the Lease Blob as soon as possible for the other worker role instances.

First of all, the following are prerequisites:

Using Azure TTL Blobs is very straightforward: mark a blob for TTL and have the service running to move and/or delete the blob from its container. In this section I will describe how we can test this scenario.

First of all, let's describe the worker role service solution. The following screen snippet shows the AzureTimeToLiveBlob solution package included in this article:

The solution has only one worker role, where the scheduler job for TTL Blobs is implemented. Before running the worker role in the Azure Emulator, you need to customize the settings for your needs and environment. Please follow the steps below. Note that we will need a tool for exploring Azure Storage Blobs. You can use your favorite one; in this article I will use the free Azure Storage Explorer by Neudesic, built by my friend David Pallmann.

Step 1. Creating a Lease Blob

- Using Azure Storage Explorer, create a private blob named, for example, TimeToLiveBlobs, with the text/xml ContentType and a SAS policy for RWDL.

- Generate a SAS signature for this blob and copy it to the clipboard.

- Open Role Settings and paste the clipboard into the Value of RKiss.TTLBlob.LeaseBlob.

- Update the Value of RKiss.TTLBlob.AccountForDBC for your Azure storage account. This is your preferred account for TTL blobs moved to the DeadBlobContainer.

- For test purposes only, change the RKiss.TTLBlob.PoolingTimeInMin setting to 1 minute.

Step 2. Creating content for Lease Blob

In this step we are going to create the content of the Lease Blob: the description of the containers where the worker role service will process a TTL job.

- Open the file TimeToLive_template.xml from the solution and customize it by adding a specific Container for the TTL job.

The Container element has the following attributes:

```xml
<Container name="myName"
           status="Init"
           prefix=""
           uri="https://myaccount.blob.core.windows.net/events?sr=c&amp;si=mypolicy&amp;sig=mySignature"
           token="" />
```

- Update the uri attribute with your SAS-signed container Uri. Note that the character '&' must be escaped as '&amp;' in the uri query.

- To filter blobs, use a standard blob prefix in the prefix attribute.

- Note that the token attribute is used by the service to store the ContinuationToken for the specific container.

- Add more Container elements to the collection based on your needs.

- Now, copy the content of the TimeToLive_template.xml file to the clipboard, paste it into the Lease Blob content, and save it.
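Before saving the Lease Blob content, it can be useful to check that the XML is well formed and that the escaped uri values come back as plain SAS URIs. The sketch below uses LINQ to XML for that. The `JobStateXml` class is hypothetical, and the root element wrapping the Container elements is not shown in this article, so any root name (for example `Containers`) is an assumption.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical validation helper, not part of the article's solution.
public static class JobStateXml
{
    // Returns the uri attribute of every Container element that is not switched Off.
    // XDocument.Parse throws an XmlException when a '&' in a uri is left unescaped.
    public static string[] ActiveContainerUris(string xml)
    {
        return XDocument.Parse(xml)
            .Descendants("Container")
            .Where(c => (string)c.Attribute("status") != "Off")
            .Select(c => (string)c.Attribute("uri"))
            .ToArray();
    }
}
```

The parser turns each `&amp;` back into `&`, so the returned strings can be passed directly to `new Uri(...)`.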

That's all; we can now run our worker role service.

Step 3. Run Worker Role Service.

Compile the solution and press Ctrl+F5 to run the service under the Azure Emulator. The service is instrumented with TraceInformation checkpoints, so we can see what's going on in the worker role.

The following picture shows two instances of the worker role waiting for the next polling time. In this example, they are waiting (sleeping) for almost 6 minutes. You can also see that the first instance was faster than the second one, because the retry counter shows one polling cycle to acquire the Lease Blob:

Another way to see how the service is working is to open the content of the Lease Blob in a browser. Each time the polling job is completed, the timestamp is updated.

Now we have one more step. It is the final step where we can prove the TTL blobs are working.

Step 4. Mark blobs for TTL/DBC

In this step we need to mark blobs for TTL. Basically, this is the responsibility of the application, but in our test case we can do it with Azure Storage Explorer.

Double click on the specific blob in the container for purging and click on the Metadata tab:

In the above example, the blob has been marked for TTL and DBC. At the TTL time, the blob will be moved to the container dbc with the prefix deleteme/.

You can mark more blobs in the container(s) specified in the Lease Blob (see Step 2) with a different DBC value, for instance: dbc/deleteme. In this case, the blob is moved to the container dbc under the new name deleteme. Note that the moved blob will overwrite an existing one.

The following screen snippet shows an example of the checkpoints from the worker role instances. You can see that one blob was deleted (DELETE) by the second instance:

To see how the worker role instances are working, open the content of the Lease Blob in a browser. The following screen snippet shows my example:

Step 5. Deploying on Azure

Now we can deploy the service to Azure. The following picture shows an example of purging hundreds of TTL blobs every 10 minutes. As you can see, the two ExtraSmall instances are not busy:

This article described how we can easily extend Azure Blob Storage with TimeToLive and DeadBlobContainer features similar to those of other Azure entities such as Azure Service Bus. Azure TTL Blob is a good fit for an event-driven distributed architecture, where Lease Blobs play a fundamental role and blobs are created dynamically and must live for a certain period of time. The described concept is based on a Lease Blob for sharing a TTL job across multiple worker role instances. I hope you enjoyed it.
