Tables are the major data element of ANKHOR FlowSheet. Their structure and usage is a mix of the tables in classic spreadsheets, SQL databases and arrays/structures/dictionaries in programming languages. They overcome several of the limitations of tables found in those environments.

Table Size

ANKHOR Tables can be large both in number of rows as well as number of columns. Most SQL databases have a strong limit on the number of possible columns. Spreadsheets are also quite limited in number of columns as well as rows.

It is not uncommon to have tables with millions of columns in ANKHOR.

Large arrays start to get problematic in many programming languages due to memory fragmentation problems. This has become less of an issue with 64bit operating systems, where there is enough virtual address space to allocate huge tables. ANKHOR uses a two level approach for storing large tables to avoid the complexity of allocating large contiguous memory areas.

Number of Tables

Many SQL implementations start to slow down once the number of parallel tables in use goes beyond a modest number of e.g. 100. The number in an SQL schema may be significantly larger, but most tables are not combined into single queries. There is no limit on the number of tables combined in a single ANKHOR graph.

Juggling with more than a dozen tables in a spreadsheet is complex, tedious and error prone – and clearly not recommended in any scenario that is supposed to be maintained for longer than one session.

Column or Row

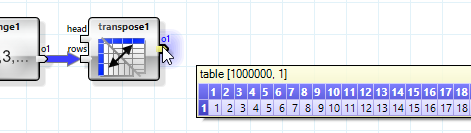

Most functions in spreadsheets work regardless of column or row orientation of the source data area. A table in an SQL database has a strong separation between column and row orientation. It is not possible to simply flip between column or row structure (aka transpose a table).

Loops and loop derived functions in ANKHOR are row oriented, but transposing a table is a frequent and cheap operation allowing a free transition between row or column oriented processing. Most aggregation or sorting macros are already provided in a row as well as a column form.

Dynamics and Growths

Growing or shrinking SQL tables by rows is a bread-and-butter operation and - besides querying - the most common usage. This is different in Spreadsheets, where tables have a fixed size in the grid, and growing a complex spreadsheet beyond some initially planned size becomes painful. Dynamic growth or shrinking a table based on source data is even more complex or rather impossible for most spreadsheet users.

Growing or shrinking tables in ANKHOR is painless and a common operation. Most ANKHOR tables are created dynamically, similar to SQL views or temporary tables and are not size limited by any other element beside system memory.

Changing the column layout of an SQL table is a complex and in many cases rather expensive endeavour, that is not undertaken light-hearted. While this is a normal limitation of a database, it becomes rather problematic, when trying to build complex processing operations using SQL. The column structure in ANKHOR is not fixed and it is quite normal to join or remove columns during processing, similar to joins in SQL. The resulting columns structure is in most cases a simple result of the operation.

Temporaries and Intermediate Results

Processing complex SQL queries usually results in many temporary tables or views, and a good deal of the performance work on SQL databases focuses on the question, whether to materialize these temporary tables or not. Results in spreadsheets are usually simple cells, so if one needs a complete table as an intermediate result she has to copy the formula to all target cells. This is quite problematic for tables with various sizes and becomes more and more complex with the number of intermediate tables – there simply is no good place in a spreadsheet to put those intermediate tables.

Intermediate results are ANKHOR's bread and butter - most operators can generate intermediate tables as a result. Working with the intermediate results is simple for the ANKHOR user and is a big part of its data centric approach to programming.

Inter Row Processing

Creating expressions that span multiple rows (beside simple aggregation) is a common operation in spreadsheets, but hard to maintain, if the source table in question is of dynamic size. SQL databases treat all rows as individual elements of a set, which have no inherent order and thus cannot address one another in a relative form (such as the previous or next row). One can work around this problem with the help of “self joins” and computed indices, but this requires some rather arcane knowledge, frequently non-standard extensions and is usually opening a can of worms on the performance side.

ANKHOR tables have a natural order, similar to spreadsheets, so it is quite simple to combine several rows into one computation. A common usage scenario is linear (or higher order) interpolation, where one has to find the two rows in an ordered table that form the upper and lower neighbours of the definition value.

Recursion – Table in a Table

Spreadsheets support only simple data elements in tables, such as numbers or strings, so there is clearly no easy way to stuff a table into the cell of another table – so if you have a variable size element e.g. email addresses of a client, you have to provision multiple columns or repeat the entry. In SQL one would normalize this scenario by adding a second table with e-mail addresses that uses the primary key of the customer table as a foreign key – this technique starts to fail (or lead again to self joins) when trees or other higher order recursive structures are needed.

ANKHOR supports two types of recursive tables in tables structure. The simple option is boxing, which packs a complete table into a data element. The more versatile option is the use of TAG – lists which are similar to dictionaries in script languages and allow easy combination of recursive and non-recursive data structures.

Polymorphism and Dynamic Types

SQL is very strict in its typing of columns. Each column may only have one type, e.g. integer of a given size, float or string. This is great for data bases that require strict data integrity, but it can become quite burdensome when using SQL for calculations, e.g. combining several tables into temporary tables. Spreadsheets on the other hand allow complete freedom of data types in each cell. There is no fixed type required for a column.

ANKHOR tables are similar to spreadsheet tables - a cell may contain any kind of simple (or boxed) value. Values are dynamically converted into matching types when needed by applied operators.

Joining Tables

SQL is very powerful when it comes to join tables using one or more key columns. This cannot be said for spreadsheets where usually only a single column and perfect match is supported. There are extensions for most spreadsheet applications to support some kind of join, but it does usually not reach the power of SQL joins.

ANKHOR does not provide SQL-style joins as primitive operators, but offers various simple operators to create the same result, as well as some library operators that perform common SQL join types (e.g. inner, outer or left join).

Performance of ANKHOR Tables

The huge number of intermediate tables and the availability of these data sets for inspection in the application put quite a burden on ANKHOR's solver engine. All intermediate results must be available to the user when she hovers over a connector or inspects it in one of the data view – but should not eat precious memory for storage.

There is also a performance penalty for generating all those intermediate results. An SQL database creates an execution plan that tries to minimize the amount of disk accesses while executing a request. Fortunately ANKHOR is a strict in-memory tool, so there is no need for a global execution plan.

ANKHOR employs various means to improve memory usage and performance when working with large tables.

Parallel Processing

Operations that work on independent cells of large tables are spread over all available processors. This significantly improves processing of large data sets in modern multi-core processors. This greatly benefits from ANKHOR's in-memory nature, because there is no performance lost due to unordered disk accesses.

The structured nature of ANKHOR tables makes it easy to spot those parallel execution opportunities.

Data Compression

ANKHOR provides automatic and on request column-compressed tables. Each column that benefits from compression is split into a data dictionary and a bit-packed index into this dictionary. This can result in a data reduction of more than 90% for tables with mostly categorical data. Boolean columns (and rows) in tables are naturally packed into single bits per data value.

Another style of data compression is used for sparse tables with only a comparatively low number of non zero or neutral cells. These tables use a dictionary for the row, column and value all non zero cells, and can thus reach even higher compression ratios.

Compressed tables are not only beneficial for memory conservation, they can also speed up many operations by reducing the number of operations to the size of the dictionary instead of the complete table. An operation on a column-compressed table that is not significantly reducing the size of the dictionary (e.g. adding a value) will reuse the index table, thus conserving memory and processing time.

Dynamic Joins or Segmentations

A significant quantity of table operations in ANKHOR are splitting tables by columns, selecting rows or joining tables by columns or rows. These operations are in most cases not actually executed but handled by creating a proxy table (similar to a non-realized view in a data base). These proxy tables know how to access the actual data element (or series of elements in a bulk operation) and can thus act as if they would be the real processing result.

This is quite easy for selecting individual columns or row ranges. The proxy can easily return the value in question by simply adding a base to the row number of returning a value from the table instead of a complete row.

A non-consecutive selection of rows is handled with a bit-packed index table and a reference to the original table and can thus respond fast to a request for rows.

Joining two or more tables results in a balanced binary tree that guarantees an O(log n) access to any row of the resulting table.

ANKHOR tables with multiple columns are row oriented (single columns and lists are not). Thus it becomes complex to generate table proxies that represent tables that are formed by joining several columns or selecting multiple columns from a table. The solution implemented in the proxies for these cases is an on-demand creation of row proxies when needed. These row proxies are cached in blocks and can thus be cheaply created or destroyed during processing.

Optimized Layout of Small Table Rows

Many non-trivial data types in ANKHOR such as 2D or 3D vectors, colours or TAG lists are implemented using small table rows. The usual memory layout of an ANKHOR table row in a 64 bit system is as follows:

- 64 Bit C++ v-table pointer

- 32 Bit reference count

- 32 Bit flags and hash

- 64 Bit pointer to table head / type

- 64 Bit pointer to array of cells of table

This is an overhead of four 64Bit words per row.

A small table with less than twenty cells reduces this further by embedding the cells into the row structure. This does not only save the pointer to the array, it also reduces the number of memory allocations required to allocate the row. The overhead is reduced to three 64 Bit words per row.

Small tables for built-in types are compressed one step further by removing the pointer to the table head. The head is embedded as a constant virtual function of the table class. Thus we only have two 64 Bit words overhead per row.

Central Meta Data Dictionary

ANKHOR uses optimized algorithms for tables with certain properties such as:

- Composed of only simple values

- Composed of only numeric/boolean/date values

- Ordered / sorted

- Only unique values

- Only small integers

- Small range of values

It would be quite expensive to calculate these properties for all temporary tables, even storing this data with the table would be a waste in most cases. ANKHOR uses a centralized meta data dictionary for all tables which is queried or extended whenever one of these properties is checked for a table. A common operation is to look for the next smaller or equal value in a table, which can be implemented quite efficiently if the table is sorted. This sorted property is only checked once for a table and then maintained in this dictionary.

A second meta data dictionary with complete hashes is maintained for tables that are used as index (searching for matching rows).

Deferred Execution

Several ANKHOR libraries work with the concept of deferred execution. The operators of these libraries are not actually performing work, instead they simply record their task in a data structure and return an object with a display method. Combining several of these “proxy” type operators can create a complex task that is finally executed by an “execute” or “query” operator.

This is possible because ANKHOR treats its operators and graphs as data elements that can be modified, created and executed by other operators. A common structure of these deferred execution libraries is a compile operator that generates an actual graph from the data structure created by the proxy operator. This graph is then optimized by the built-in optimizing operator and either directly executed or sent to the remote execution server for execution.

The display method will be invoked, when the user inspects the intermediate objects created by the proxy operators. These methods will render the state of the execution on the fly using the same compiling technique but only the part of the data constructed so far.

Conclusion

The flexibility, power and ease of use of ANKHOR tables are neither found in classic SQL nor in spreadsheets. The built-in optimizations allow ANKHOR to present a simple-to-use paradigm of data centric modelling instead of either complex scripts and expressions or un-maintainable wallpapers of cells with hidden formulas and built-in pitfalls.