Introduction

This is a short article about a single trick: how to solve a particular thread-synchronisation problem.

All you need are Delegate.BeginInvoke() and Delegate.EndInvoke(); no callback, WaitHandle or similar machinery is required.

Assume a main thread starts multiple side-threads, and before it can proceed it must wait until all side-threads have finished their jobs. Ideally, the overall wait time should be almost exactly the time the slowest side-thread needs (by then, all the other threads have already finished). How can this be achieved?

First the complete code, in VB and C#:

VB:

    Imports System
    Imports System.Collections.Generic
    Imports System.Linq
    Imports System.Threading

    Public Module Module1

        ' Writes a message prefixed with the ID of the calling thread.
        Private Sub ThreadOut(ByVal ParamArray args As Object())
            Console.WriteLine(Thread.CurrentThread.ManagedThreadId.ToString() & ": " & String.Concat(args))
        End Sub

        ' The job executed on a side-thread: sleep for the given time, then return a result.
        Private Function ThreadFunc(ByVal halfAmount As Integer) As Integer
            ThreadOut("start sleep for ", halfAmount)
            Thread.Sleep(halfAmount)
            ThreadOut("slept for ", halfAmount)
            Return halfAmount * 2
        End Function

        Public Sub Main(ByVal args As String())
            Do
                Console.WriteLine()
                Console.WriteLine("start? y / n")
                If Console.ReadKey().KeyChar <> "y"c Then Return
                Console.WriteLine()
                Dim sleepTimes = New Integer() {1500, 500, 2500}
                ' 1. Create a delegate from the method to be executed asynchronously.
                Dim dlg = New Func(Of Integer, Integer)(AddressOf ThreadFunc)
                ' 2. Deferred query: BeginInvoke is called for each item only when the
                '    query is enumerated (by ToArray() below). Requires the .NET Framework;
                '    on .NET Core / .NET 5+ BeginInvoke throws PlatformNotSupportedException.
                Dim asyncResults = From n In sleepTimes _
                                   Select dlg.BeginInvoke(n, Nothing, Nothing)
                Dim results = New List(Of Integer)()
                Dim sw = System.Diagnostics.Stopwatch.StartNew()
                ' 3. Start all jobs, then call EndInvoke on each one; EndInvoke blocks
                '    until that job has finished and returns its result.
                For Each asyncRes In asyncResults.ToArray()
                    results.Add(dlg.EndInvoke(asyncRes))
                Next
                Console.WriteLine()
                Console.WriteLine(String.Format("Done in {0} ms", sw.ElapsedMilliseconds))
                Console.WriteLine()
                Console.WriteLine("results:")
                For Each itm In results
                    Console.WriteLine(itm)
                Next
            Loop
        End Sub

    End Module

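C#:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading;

    class Program {

        // Writes a message prefixed with the ID of the calling thread.
        private static void ThreadOut(params object[] args) {
            Console.WriteLine(Thread.CurrentThread.ManagedThreadId.ToString() + ": " + string.Concat(args));
        }

        // The job executed on a side-thread: sleep for the given time, then return a result.
        private static int ThreadFunc(int halfAmount) {
            ThreadOut("start sleep for ", halfAmount);
            Thread.Sleep(halfAmount);
            ThreadOut("slept for ", halfAmount);
            return halfAmount * 2;
        }

        static void Main(string[] args) {
            while (true) {
                Console.WriteLine();
                Console.WriteLine("start? y / n");
                if (Console.ReadKey().KeyChar != 'y') return;
                Console.WriteLine();
                var sleepTimes = new int[] { 1500, 500, 2500 };
                // 1. Create a delegate from the method to be executed asynchronously.
                var dlg = new Func<int, int>(ThreadFunc);
                // 2. Deferred query: BeginInvoke is called for each item only when the
                //    query is enumerated (by ToArray() below). Requires the .NET Framework;
                //    on .NET Core / .NET 5+ BeginInvoke throws PlatformNotSupportedException.
                var asyncResults = from n in sleepTimes select dlg.BeginInvoke(n, null, null);
                var results = new List<int>();
                var sw = System.Diagnostics.Stopwatch.StartNew();
                // 3. Start all jobs, then call EndInvoke on each one; EndInvoke blocks
                //    until that job has finished and returns its result.
                foreach (var asyncRes in asyncResults.ToArray())
                    results.Add(dlg.EndInvoke(asyncRes));
                Console.WriteLine();
                Console.WriteLine(string.Format("Done in {0} ms", sw.ElapsedMilliseconds));
                Console.WriteLine();
                Console.WriteLine("results:");
                foreach (var val in results) Console.WriteLine(val);
            }
        }
    }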

Let me summarize the main points:

- Create a delegate from the method to be executed asynchronously.

- Loop over the data and start processing by calling Delegate.BeginInvoke(dataItem, null, null) for each item; collect each returned IAsyncResult. This returns almost immediately, because BeginInvoke() does not do the work itself; it only queues the call for execution on a threadpool thread.

- Loop over the IAsyncResults and call Delegate.EndInvoke() on each one; collect the returned data into the final result collection.
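The three steps above map directly onto Task-based code. Delegate.BeginInvoke() and EndInvoke() only work on the .NET Framework; on .NET Core and .NET 5 or later, BeginInvoke throws PlatformNotSupportedException. A minimal sketch of the same pattern, assuming Task.Run (available since .NET Framework 4.5); the class TaskWaitAll and its method RunAll are hypothetical names, and ThreadFunc mirrors the article's method without the console output:

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class TaskWaitAll
{
    // Same job as ThreadFunc in the article: sleep, then return a result.
    public static int ThreadFunc(int halfAmount)
    {
        Thread.Sleep(halfAmount);
        return halfAmount * 2;
    }

    // Runs one job per input on the thread pool and returns the results
    // in input order. The caller blocks roughly as long as the slowest job
    // (given enough pool threads to run all jobs at once).
    public static List<int> RunAll(int[] sleepTimes)
    {
        // Steps 1 + 2: start every job. Task.Run only queues the work and
        // returns at once; ToArray() forces all tasks to start before we wait.
        Task<int>[] tasks = sleepTimes
            .Select(n => Task.Run(() => ThreadFunc(n)))
            .ToArray();

        // Step 3: collect the results in order. Like EndInvoke, .Result
        // blocks until that particular task has finished.
        var results = new List<int>();
        foreach (Task<int> task in tasks)
            results.Add(task.Result);
        return results;
    }
}
```

Task.WaitAll(tasks) would also wait for all tasks in one call; the ordered loop is used here because it mirrors the EndInvoke loop of the article.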

The last point is the trick: EndInvoke blocks until its asynchronous call has finished and can deliver its result. So the first EndInvoke call blocks until the first side-thread has finished. After that, the loop goes straight on to the next EndInvoke call.

If the second process was faster than the first, the second EndInvoke call does not block, because its result is already available. Otherwise it blocks too, but only for the time by which the second process is slower than the first.

And so on.

As a result, the overall wait time is almost exactly the time the slowest process needs, provided the threadpool runs all jobs at the same time (see the threadpool behavior section below).
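The argument above can be checked with a little arithmetic. This is a minimal sketch of an idealized model, assuming every job starts at time 0 on its own thread and the collecting loop itself costs no time; the names WaitTimeModel and BlockTimes are hypothetical. For the article's sleep times {1500, 500, 2500}, the three EndInvoke calls block for 1500, 0 and 1000 ms: 2500 ms in total, which equals the slowest job, whereas running the jobs one after another would take 4500 ms.

```csharp
using System;

public static class WaitTimeModel
{
    // Idealized model: job i starts at t = 0 and finishes at durations[i].
    // Returns how long each EndInvoke call in the collecting loop blocks.
    public static int[] BlockTimes(int[] durations)
    {
        var block = new int[durations.Length];
        int now = 0; // time at which the main thread reaches the next EndInvoke call
        for (int i = 0; i < durations.Length; i++)
        {
            // The call blocks only if job i is still running at 'now'.
            block[i] = Math.Max(0, durations[i] - now);
            now += block[i];
        }
        // 'now' has advanced exactly to the largest duration,
        // so the blocking times always add up to the slowest job.
        return block;
    }
}
```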

Points of Interest

Better Approaches

In most cases, there are better approaches than keeping the main thread waiting:

- You can send a notification for each result directly from the side-threads to the main thread and process each result as soon as it is available. For example, you can use the approach shown in my article AsyncWorker to implement this in a type-safe and easy way.

- You can keep a queue of jobs that a single side-thread executes one after another. Notification can be sent either for each finished job or once all jobs are completed.
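A minimal sketch of the second approach, assuming BlockingCollection<T> (available since .NET Framework 4.0) as the job queue; the names JobQueueDemo, StartWorker, onJobDone and onAllDone are hypothetical. Note that both callbacks run on the side-thread; in a UI application they would still have to be marshalled to the main thread.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public static class JobQueueDemo
{
    // Processes all queued jobs one after another on a single side-thread.
    // onJobDone is called (on the side-thread) after each job;
    // onAllDone is called once, after the last job.
    public static Thread StartWorker(
        BlockingCollection<int> jobs,
        Func<int, int> work,
        Action<int> onJobDone,
        Action onAllDone)
    {
        var worker = new Thread(() =>
        {
            // GetConsumingEnumerable blocks while the queue is empty and ends
            // once CompleteAdding() has been called and the queue is drained.
            foreach (int job in jobs.GetConsumingEnumerable())
                onJobDone(work(job));
            onAllDone();
        });
        worker.IsBackground = true;
        worker.Start();
        return worker;
    }
}
```

The producer adds jobs with jobs.Add(...) and signals the end of the queue with jobs.CompleteAdding(); a per-job notification and an "all done" notification come for free from the two callbacks.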

Threadpool Behavior

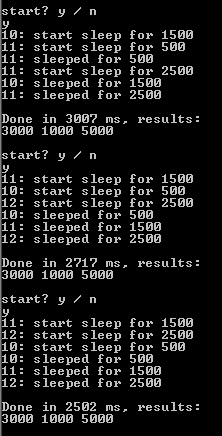

Run the given app, execute the parallel processes three times, and see the output:

The number lines are output by the side-thread (the numbers are Thread-IDs).

As you can see, the threadpool needs to "warm up" before it performs well. In the first execution, the pool dispatches the three jobs to only two threads, so the overall wait time is the sum of job #2 and job #3 (500 + 2500 = 3000 ms) instead of the 2500 ms of the slowest job.

The second execution is also much slower than expected; it seems the threadpool management takes a lot of time for itself. A likely cause is that the pool creates new threads on demand without delay only up to a minimum count (by default, the number of processors) and adds further threads only gradually.

From the third execution on, there are three side-threads that start immediately, and the overall wait time is close to the time the slowest process needs.

Even the order in which the jobs start is mixed up (compare it with the order of the input data in the code); that is how parallel processes are expected to behave.

Setting the threadpool to "warmed up"

In some cases, it is an effective optimization to raise the minimum number of threads the threadpool creates on demand, before requesting them:

ThreadPool.SetMinThreads(3, 0);

Now even the first run performs nearly as well as the third run above. You may want to reset the minimum to its previous value afterwards, since threads are "expensive" resources.
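A minimal sketch of that reset, using the real ThreadPool.GetMinThreads and ThreadPool.SetMinThreads methods; the class ThreadPoolWarmUp and its helper WithMinWorkerThreads are hypothetical names. Unlike the one-liner above, it keeps the current I/O completion-port minimum instead of setting it to 0. Keep in mind that the minimum is process-wide, so any other code running at the same time is affected as well.

```csharp
using System;
using System.Threading;

public static class ThreadPoolWarmUp
{
    // Temporarily raises the minimum number of worker threads, runs 'action',
    // then restores the previous minimum. The I/O completion-port minimum
    // is left at its current value.
    public static void WithMinWorkerThreads(int minWorkers, Action action)
    {
        ThreadPool.GetMinThreads(out int oldWorkers, out int oldIo);

        // Only raise the minimum; SetMinThreads returns false if it rejects the values.
        if (minWorkers > oldWorkers && !ThreadPool.SetMinThreads(minWorkers, oldIo))
            throw new InvalidOperationException("SetMinThreads rejected the values.");

        try
        {
            action();
        }
        finally
        {
            // Restore the previous minimum even if 'action' throws.
            ThreadPool.SetMinThreads(oldWorkers, oldIo);
        }
    }
}
```

For example, ThreadPoolWarmUp.WithMinWorkerThreads(3, () => { /* start the jobs and collect the results */ }); would cover the three jobs of the demo.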