Introduction

Health Warning - This code features the infamous GOTO in the SQL statements

We had a problem. A big problem. Our centralised alarm event database system was growing to extremes. Bigger than the box could handle and throwing money at it wasn't the answer, it would only delay the problem to another day.

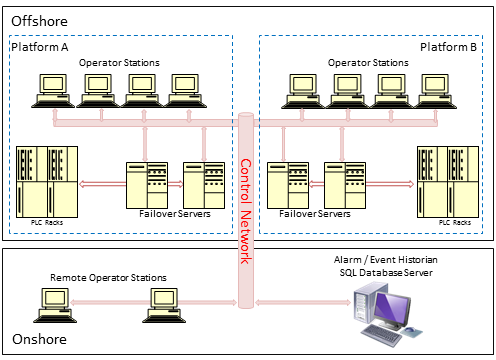

This particular system was taking the data from two offshore platforms and storing in a centralised alarm/event historian in an onshore 'bunker'.

The two platforms were normally pushing about 25,000 records per day each, however if a significant platform event occurred or a fault existed on a field device, then this might typically be around 100,000 events or in a fault scenario push towards 1,000,000.

Every so often the server would fill up, run out of disk space and prevent the offshore servers from flushing their local buffers to the historian.

The manufacturer provided a manual tool to purge history. This would take a long time, would regularly fail for reasons like running out of disk space needed to execute the queries. This meant that it could take days of picking away at the data until it was possible to slowly run larger and larger time frames until sufficient space was free again to put the server back 'online'. During this time, offshore data buffers were overflowing and data was being lost. Not critical, but annoying. If a platform tripped for any reasons during the time the purge was ongoing, chances are we would not be able to analyse the event in any great detail.

The basic schematic of the (relevant parts of the) system is shown below:

A Quick Look At The Data

It was easy enough to open up SQL Management tools and have a look at the data/tables, this would maybe help understand some of the issues more.

Looking at the various tables for things that relate to Events and Alarms the following schema elements were identified.

Now, we are starting to get an understanding of what is going on.

Step 1: Understanding the problem

The main problem was easy, not enough server resources, i.e. too small disks. As stated above, this could easily be fixed temporarily by throwing money at the problem, but this wouldn't solve the issue long term.

The secondary problem was probably the most significant. The purge tool would not run with clients still feeding data. The tool was proprietary 3rd Party and no one knew what it was doing internally. There was no documentation. Not forgetting this was a manual tool. Someone had to remember to check SQL storage space and run the tool as necessary to free up space.

The first utility tool that I decided to use to help understand what was going on was the SQL Query Profiler. Starting this up and watching what was going on, allowed me to see what processes were connecting etc, enabling filters to be set to simplify the process on peeking under the hood.

Using the profiler, the purge tool was initiated and watching for the queries coming through it was easy to pick up what SQL statements were being fired off against the box and in what order, how far they are getting and why things were failing.

Recovered Queries

There were effectively 8 steps recovered from the profiler, these were;

Select * From Comment Where CommentID In

(Select CommentID From AlarmDetail Where AlarmID In

(Select AlarmID From AlarmMaster Where Origination Time < 'yyyy/mm/dd'))

Delete From AlarmDetail Where AlarmID in

(Select AlarmID From AlarmMaster Where Origination Time < 'yyyy/mm/dd')

Delete From Comment Where CommentID In (xxxx,yyyy,zzzz,....)

IF @@TRANCOUNT > 0 Rollback Tran

Delete From AlarmMaster Where OriginationTime < 'yyyy/mm/dd'

Delete From Events Where EventTime < 'yyyy/mm/dd'

Delete From TagStatus When ChangeTime < 'yyyy/mm/dd'

IF @@TRANCOUNT > 0 Commit Tran

The 'yyyy/mm/dd' were the same values across each step and was the date submitted via the purge tool GUI.

The ID used in (xxxx,yyyy,zzzz,....) where the record IDs recovered from Step 1 of the process.

You can also see the nested Selects, these are probably not helping with the size of the query and intermediate processing required.

@xyz are local variable used in any SQL query/procedure and @@xyz are server level variables

We are definitely getting somewhere now. What I did also observe was when one of the offshore clients pushed data to the server, that is when the purge tool failed and that is due to the Rollback statement which is conditional on the @@TRANCOUNT, i.e. if there are other transactions pending, don't go any further.

Step 2 - Working A Solution

Looking at what we recovered it was easy to see where there are no dependencies. I also proved that by trying to delete data in the wrong order resulted in Foreign Key Constraint exceptions.

I also want to do this in small batches to prevent huge transaction log requirements etc.

The process must be fully automated. To do this I set up a maintenance job that ran every night during a typically quiet time, e.g. 3am, This would be when it was very unlikely that others would be polling the database to investigate plant issues that have previously occurred etc. Note: the data is classified as 'non-critical' and after about four months it is pretty much useless. Any events that have occurred after this time have already been fully investigated. For this reason, we are happy to delete the data rather than export it to longer term storage etc.

In the maintenance job I created four SQL queries that would run in sequence. These four queries would;

- Delete the Event records

- Delete the AlarmDetail records

- Delete the AlarmMaster records

- Delete the Alarm Comment records

Stage 1 - Remove Event Records

At the start of the query you will see we tell the server which database to USE WWALMD in this case. I can only assume that this name is short for WonderWare Alarm Database, as it was an WonderWare InTouch HMI (human machine interface). For more about this product, visit: www.wonderware.com

Next up there are few declarations for the variables that will be used in the query. We set these up as appropriate, using a DATEADD function to calculate the earliest date to retain the records.

Next up we establish the record count to delete and simply loop through these records deleting and committing the transactions in the batch size defined.

We use the @@ROWCOUNT to get the number of records deleted by the previously executed statement, this allows us to count down the number of records as we loop through each batch.

The other stages are done in the same way, but just changing the relevant table and field names where required.

USE WWALMDB

Declare @Days as BigInt

Declare @StartDate as DateTime

Declare @Count as bigint

Declare @RowCountTotal as bigint

Declare @BatchSize as bigint

Declare @MSG as nvarchar(256)

Set @Days = -120

Set @StartDate = DateAdd(dd,@Days,GetDate());

Set @MSG = 'Start Date For Purge: ' + cast(@StartDate as varchar );

RAISERROR (@MSG,0,1) With NOWAIT

Set @RowCountTotal = 0

Set @BatchSize = 10000

Select @Count = (Select Count(EventID) From Events Where EventTime < @StartDate);

Set @MSG = 'Records To Delete = ' + cast( @Count as varchar)

RAISERROR (@MSG,0,1) With NOWAIT

LoopStart:

IF (@Count - @RowCountTotal) > @BatchSize

Begin

Begin Transaction

Delete Top (@BatchSize) From Events Where EventTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

Goto LoopStart

End

Else

If (@Count - @RowCountTotal) > 0

Begin

Begin Transaction

Delete Top (@Count - @RowCountTotal) From Events Where EventTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

End

Set @MSG = 'Completed Purge Operation of Events.';

RAISERROR (@MSG,0,1) With NOWAIT

Set @MSG = 'Total Records Purged: ' + cast(@RowCountTotal as varchar);

RAISERROR (@MSG,0,1) With NOWAIT

Stage 2 - Remove Alarm Detail Records

USE WWALMDB

Declare @Days as BigInt

Declare @StartDate as DateTime

Declare @Count as bigint

Declare @RowCountTotal as bigint

Declare @BatchSize as bigint

Declare @MSG as nvarchar(256)

Set @Days = -120

Set @StartDate = DateAdd(dd,@Days,GetDate());

Set @MSG = 'Start Date For Purge: ' + cast(@StartDate as varchar );

RAISERROR (@MSG,0,1) With NOWAIT

Set @RowCountTotal = 0

Set @BatchSize = 10000

Select @Count = (Select Count(AlarmDetailID) From AlarmDetail Where TransitionTime < @StartDate);

Set @MSG = 'Records To Delete = ' + cast( @Count as varchar)

RAISERROR (@MSG,0,1) With NOWAIT

LoopStart:

IF (@Count - @RowCountTotal) > @BatchSize

Begin

Begin Transaction

Delete Top (@BatchSize) From AlarmDetail Where TransitionTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

Goto LoopStart

End

Else

If (@Count - @RowCountTotal) > 0

Begin

Begin Transaction

Delete Top (@Count - @RowCountTotal) From AlarmDetail Where TransitionTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

End

Set @MSG = 'Completed Purge Operation of AlarmDetail.';

RAISERROR (@MSG,0,1) With NOWAIT

Set @MSG = 'Total Records Purged: ' + cast(@RowCountTotal as varchar);

RAISERROR (@MSG,0,1) With NOWAIT

Stage 3 - Remove Alarm Master Records

USE WWALMDB

Declare @Days as BigInt

Declare @StartDate as DateTime

Declare @Count as bigint

Declare @RowCountTotal as bigint

Declare @BatchSize as bigint

Declare @MSG as nvarchar(256)

Set @Days = -120

Set @StartDate = DateAdd(dd,@Days-1,GetDate());

Set @MSG = 'Start Date For Purge: ' + cast(@StartDate as varchar );

RAISERROR (@MSG,0,1) With NOWAIT

Set @RowCountTotal = 0

Set @BatchSize = 10000

Select @Count = (Select Count(OriginationTime) From AlarmMaster Where OriginationTime < @StartDate);

Set @MSG = 'Records To Delete = ' + cast( @Count as varchar)

RAISERROR (@MSG,0,1) With NOWAIT

LoopStart:

IF (@Count - @RowCountTotal) > @BatchSize

Begin

Begin Transaction

Delete Top (@BatchSize) From AlarmMaster Where OriginationTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

Goto LoopStart

End

Else

If (@Count - @RowCountTotal) > 0

Begin

Begin Transaction

Delete Top (@Count - @RowCountTotal) From AlarmMaster Where OriginationTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

End

Set @MSG = 'Completed Purge Operation of Alarm Master.';

RAISERROR (@MSG,0,1) With NOWAIT

Set @MSG = 'Total Records Purged: ' + cast(@RowCountTotal as varchar);

RAISERROR (@MSG,0,1) With NOWAIT

Stage 4 - Remove Alarm Comment Records

USE WWALMDB

Declare @Days as BigInt

Declare @StartDate as DateTime

Declare @Count as bigint

Declare @RowCountTotal as bigint

Declare @BatchSize as bigint

Declare @MSG as nvarchar(256)

Set @Days = -120

Set @StartDate = DateAdd(dd,@Days -1,GetDate());

Set @MSG = 'Start Date For Purge: ' + cast(@StartDate as varchar );

RAISERROR (@MSG,0,1) With NOWAIT

Set @RowCountTotal = 0

Set @BatchSize = 10000

Select @Count = (Select Count(CommentTime) From Comment Where CommentTime < @StartDate);

Set @MSG = 'Records To Delete = ' + cast( @Count as varchar)

RAISERROR (@MSG,0,1) With NOWAIT

LoopStart:

IF (@Count - @RowCountTotal) > @BatchSize

Begin

Begin Transaction

Delete Top (@BatchSize) From Comment Where CommentTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

Goto LoopStart

End

Else

If (@Count - @RowCountTotal) > 0

Begin

Begin Transaction

Delete Top (@Count - @RowCountTotal) From Comment Where CommentTime < @StartDate

Set @RowCountTotal = @RowCountTotal + @@RowCount

Commit Transaction

End

Set @MSG = 'Completed Purge Operation of Comment.';

RAISERROR (@MSG,0,1) With NOWAIT

Set @MSG = 'Total Records Purged: ' + cast(@RowCountTotal as varchar);

RAISERROR (@MSG,0,1) With NOWAIT

Points of Interest

The Alarm Master data would nearly always fail towards the end of the stage as there would be some foreign key constraints still present. This wasn't a problem though as these would be swept up in the next days purge cycle.

I also noted that the TagStatus query wasn't required, as there was no data ever present in this table.

I am no SQL guru and this certainly was an interesting challenge. Pouring over the SQL Books Online for hour on end, lots of trial and error until Bingo, it works!

Because it also works in smaller transactional batches, it means the Control System can continue to push new records to the database. The transaction log also was kept smaller.

Also, and a big also, we did not need to go and spend more money on hardware which was going to be made redundant.

This went on to serve us well for a long time (5+ years) and is reaching its retirement shortly as we have nearly finished the migration to a new control system with much improved alarm historian features.

Elegant it may not be, but it works. And more importantly, it works reliably. No more spending hours recovering the system picking off data to get us through another couple of weeks to then repeat.

Sometimes, a hacky hack is just what is needed. Even if there are a few GOTOs in the mix :)

Back-up Lesson

You will notice that the dates in the code are 2010, but the narrative says been in use for 5+ years, well we had a server meltdown and nobody had taken backups of the queries. As a result of this I had to rewrite them again. Probably not written the same the second time round, although from memory I think they are better than the first attempt, but in principle they do the same thing.

This time, I kept copies of the scripts......

SQL References

History

- 30th November 2013 - Narrative updates

- 29th November 2013 - First article version