Introduction

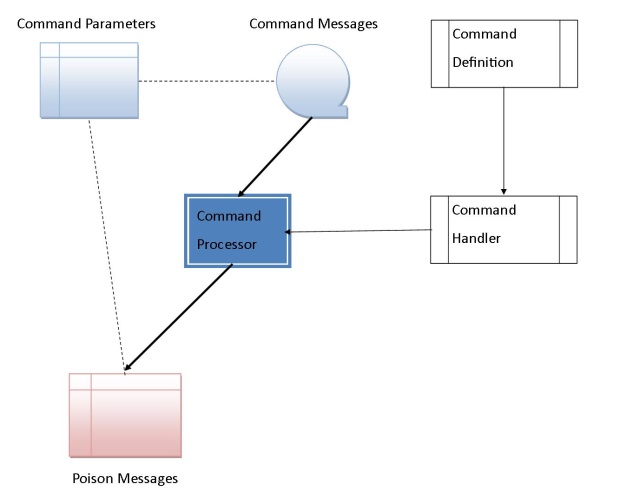

At the end of the article CQRS on Windows Azure - The Command side, we finished up with an architecture and code to define specific commands and to pass the commands on using a queue and table to hold the command and its parameters respectively. In this article, I'm going to look at the other side of the command leg - receiving the command and processing it.

Background

At its simplest, the process for the command processor is:

- Take the next command off the queue

- Find the appropriate handler for that type of command

- Read the parameters for the command

- Pass these to the command handler

- Wait for the command to complete

Then take the next command off the queue.
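The steps above can be sketched as a simple loop. This is a minimal illustration only: an in-memory `Queue(Of QueuedCommand)` and a `Dictionary` stand in for the Azure queue and the parameters table, and `QueuedCommand`, `NaiveCommandProcessor`, `RegisterHandler` and `ProcessAll` are hypothetical names that do not come from the article's code.

```vbnet
Imports System
Imports System.Collections.Generic

' Hypothetical shape of a queued command: just a type name and an instance id
Public Class QueuedCommand
    Public Property CommandType As String
    Public Property InstanceIdentifier As Guid
End Class

Public Module NaiveCommandProcessor

    ' Maps a command type name to the handler that executes it
    Private ReadOnly m_handlers As New Dictionary(Of String, Action(Of Dictionary(Of String, String)))

    Public Sub RegisterHandler(commandType As String, handler As Action(Of Dictionary(Of String, String)))
        m_handlers(commandType) = handler
    End Sub

    ' Drains the queue one command at a time and returns how many commands were handled
    Public Function ProcessAll(pending As Queue(Of QueuedCommand),
                               parameterTable As Dictionary(Of Guid, Dictionary(Of String, String))) As Integer
        Dim processed As Integer = 0
        While pending.Count > 0
            ' Take the next command off the queue
            Dim nextCommand As QueuedCommand = pending.Dequeue()

            ' Find the appropriate handler for that type of command
            Dim handler As Action(Of Dictionary(Of String, String)) = Nothing
            If Not m_handlers.TryGetValue(nextCommand.CommandType, handler) Then
                Continue While ' no handler registered: this sketch simply skips the command
            End If

            ' Read the parameters for the command (an empty set if none were stored)
            Dim parameters As Dictionary(Of String, String) = Nothing
            If Not parameterTable.TryGetValue(nextCommand.InstanceIdentifier, parameters) Then
                parameters = New Dictionary(Of String, String)()
            End If

            ' Pass these to the handler; the call returns when the command completes
            handler(parameters)
            processed += 1
        End While
        Return processed
    End Function

End Module
```

The handler call is synchronous here, so "wait for the command to complete" is simply the call returning before the loop moves on.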

In a world where processing was infinite and free, where commands could always complete and hardware was 100% reliable, this would be all that is needed. However, none of these hold, so additional thought and code are required.

Processing Is Not Infinite Nor Free

On Windows Azure, there are restrictions that impact how you set up your application. The first is that the Queue Storage is throttled at 500 reads per second, and if your application is considered to be too burdensome, it can be throttled back even more aggressively.

Therefore, you cannot have the queue reading code in a tight loop - it makes sense to have your own minimum inter-read timespan.

In addition, processing is not free. On Windows Azure, there is a charge (albeit very small) levied every time you access a queue, even if there are no messages on that queue.

For this reason, you should read commands in batches rather than one at a time, and you should also dynamically increase your inter-read timespan if you have multiple occurrences of a read cycle that has no commands on the queue to process.

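    ' Settings for a processor that pulls (polls) commands from the queue
    Public Interface IPullModeCommandProcessor
        ' Number of commands to read from the queue in one batch
        Property BatchSize As Integer
        ' Time to wait between successive reads of the queue
        Property InterPollingDelay As TimeSpan
    End Interface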
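One way to adjust the inter-read timespan dynamically is to double it after each empty read cycle, up to a maximum, and reset it as soon as commands are found. The following is a sketch under those assumptions; `AdaptivePollingSchedule` and `RecordReadCycle` are hypothetical names, and the doubling rule is my choice for illustration rather than anything the article's code prescribes.

```vbnet
Imports System

' Hypothetical helper that tracks the batch size and the current polling delay
Public Class AdaptivePollingSchedule

    Private ReadOnly m_minimumDelay As TimeSpan
    Private ReadOnly m_maximumDelay As TimeSpan

    Public Property BatchSize As Integer
    Public Property InterPollingDelay As TimeSpan

    Public Sub New(ByVal batchSizeIn As Integer, ByVal minimumDelayIn As TimeSpan, ByVal maximumDelayIn As TimeSpan)
        BatchSize = batchSizeIn
        m_minimumDelay = minimumDelayIn
        m_maximumDelay = maximumDelayIn
        InterPollingDelay = minimumDelayIn
    End Sub

    ' Call after each read cycle with the number of commands the batch read returned
    Public Sub RecordReadCycle(ByVal commandsRead As Integer)
        If commandsRead > 0 Then
            ' Work is arriving, so drop back to the minimum inter-read timespan
            InterPollingDelay = m_minimumDelay
        Else
            ' Empty read: double the delay, but never beyond the maximum
            Dim doubledTicks As Long = Math.Min(InterPollingDelay.Ticks * 2, m_maximumDelay.Ticks)
            InterPollingDelay = TimeSpan.FromTicks(doubledTicks)
        End If
    End Sub

End Class
```

The polling loop would sleep for `InterPollingDelay` between reads and ask the queue for up to `BatchSize` messages each time. Queue Storage caps the number of messages returned by a single read (32 at the time of writing, to my knowledge), so larger batch sizes would need more than one call.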

Commands Cannot Always Complete

There are two classes of reason why a command cannot complete: transient issues, such as busy hardware, and permanent issues, such as impossible commands. In most business contexts, it is not sensible to catalogue and classify all the possible causes of failure, so a compromise is used instead: retry a number of times and, if the command still fails, put it aside as impossible. My preference is to set this number-of-retries rule per command definition, as some types of command are more susceptible to repeated transient errors than others.

ReadOnly Property RecomendedPoisonMessageCeiling As Integer

Every time any command is read from the queue, the DequeueCount property is checked and, if it is greater than or equal to the poison message ceiling, the command is added to a "poison messages" table and deleted from the queue. This prevents it from being attempted again.

If a message dequeue count is less than this ceiling, then it is not deleted from the queue until it completes successfully. This means that if, for any reason, the command fails to complete, it will be presented for a retry again at a later time.
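The rule described above comes down to a single comparison. The sketch below isolates that decision so it can be reasoned about on its own; `DequeueDecision` and `PoisonMessagePolicy` are hypothetical names, and the actual queue and table operations are deliberately left out.

```vbnet
Imports System

' What the processor should do with a message it has just read
Public Enum DequeueDecision
    AttemptCommand
    MoveToPoisonTable
End Enum

Public Module PoisonMessagePolicy

    ' dequeueCount: the DequeueCount reported for the message just read
    ' poisonMessageCeiling: the ceiling configured for this command definition
    Public Function Decide(ByVal dequeueCount As Integer, ByVal poisonMessageCeiling As Integer) As DequeueDecision
        If dequeueCount >= poisonMessageCeiling Then
            ' Too many attempts: record in the "poison messages" table and delete from the queue
            Return DequeueDecision.MoveToPoisonTable
        End If
        ' Leave the message on the queue until the command completes successfully
        Return DequeueDecision.AttemptCommand
    End Function

End Module
```

With a ceiling of 3, a message is attempted when its dequeue count is 1 or 2 and set aside when the count reaches 3. Assuming the dequeue count already includes the current read, a ceiling of N therefore allows N - 1 attempts, which is worth bearing in mind when choosing the value.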

However, some commands may fail in a partially-complete status. For example, if there was a command that was to convert a hundred files from one format to another and it failed on the 30th file, then when it came around again, a choice would need to be made - roll back the changes from the failed command or start from where the failed command got to. In either of these cases, we need to track how far a command has progressed by having a "step completed" event.

...

    ' Event arguments raised each time a command finishes one of its steps
    Public NotInheritable Class CommandStepCompletedEventArgs
        Inherits EventArgs

        Private ReadOnly m_instanceIdentifier As Guid
        Private ReadOnly m_stepNumber As Integer

        ' Unique identifier of the command instance that made progress
        Public ReadOnly Property CommandInstanceIdentifier As Guid
            Get
                Return m_instanceIdentifier
            End Get
        End Property

        ' Number of the step that has just completed
        Public ReadOnly Property StepNumber As Integer
            Get
                Return m_stepNumber
            End Get
        End Property

        Public Sub New(ByVal commandInstanceIdentifierIn As Guid, ByVal stepCompletedIn As Integer)
            m_instanceIdentifier = commandInstanceIdentifierIn
            m_stepNumber = stepCompletedIn
        End Sub

    End Class
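To show how "step completed" notifications can drive a resume-from-where-it-failed policy, here is a small sketch of a progress tracker. `CommandProgressTracker`, `OnStepCompleted` and `NextStepToRun` are hypothetical names, and the in-memory dictionary is an assumption made to keep the example self-contained; a real tracker would need durable storage so that progress survives a worker role failure.

```vbnet
Imports System
Imports System.Collections.Generic

' Hypothetical progress store: remembers the last step each command instance completed
Public Class CommandProgressTracker

    Private ReadOnly m_lastCompletedStep As New Dictionary(Of Guid, Integer)

    ' Call this from the handler's "step completed" notification
    Public Sub OnStepCompleted(ByVal commandInstanceIdentifier As Guid, ByVal stepNumber As Integer)
        m_lastCompletedStep(commandInstanceIdentifier) = stepNumber
    End Sub

    ' Steps are numbered from 1, so a command with no recorded progress starts at step 1
    Public Function NextStepToRun(ByVal commandInstanceIdentifier As Guid) As Integer
        Dim lastStep As Integer = 0
        m_lastCompletedStep.TryGetValue(commandInstanceIdentifier, lastStep)
        Return lastStep + 1
    End Function

End Class
```

In the file conversion example, once steps 1 to 29 have been recorded for a command, `NextStepToRun` returns 30, so the retry picks up at the file that failed. A roll-back policy would use the same record to know which steps need undoing.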

Hardware is Not 100% Reliable

The command handler is implemented as a worker role, and this worker role may fail at any time. Azure spins up a new instance when it detects a worker role failure, but this takes a small amount of time, during which processing is impacted.

The solution to this is to scale the worker role horizontally (elastically) by having at least one more worker running than is required - thus if a node fails, this spare can take up the slack.

Notification of Statuses

A common question is "How do I feed back to the user that the command they have issued is done?" My recommendation here is to use the command's unique identifier and have a query that returns the status of the command. This query can then be polled, either explicitly by the client or by a task on the server end, and any status changes sent back accordingly.

This maintains the purity of the separation between command and query which allows for them to be scaled independently.

Implementing commands as an event stream

One interesting way of doing the command side in a CQRS system is to implement each command's life cycle as an event stream and to use a projection over that event stream to get the current status of the command.

For example, you might have command events defined for "Command Created", "Command Execution Started", "Fatal Error", "Command Completed" and so on. Each of these would have event properties that add detail about what has happened to the command, and by "playing through" the event stream for a command you could then derive its current state.

This also allows the command handler to keep a set of "to do" commands by playing through their event streams so that you do not have to explicitly re-issue any commands if they are requeued after a transient fault.
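As an illustration of such a projection, the sketch below plays through a command's events in order and keeps the status implied by the most recent one. The event names are the examples given above; `CommandEvent`, `CommandStatus` and `CommandStatusProjection` are hypothetical types, and the per-event detail properties are omitted for brevity.

```vbnet
Imports System
Imports System.Collections.Generic

Public Enum CommandStatus
    Unknown
    Created
    Executing
    Failed
    Completed
End Enum

' Hypothetical event record; a real event would also carry its detail properties
Public Class CommandEvent
    Public Property EventName As String
End Class

Public Module CommandStatusProjection

    ' Plays through the events in order; the last recognised event determines the status
    Public Function CurrentStatus(ByVal eventStream As IEnumerable(Of CommandEvent)) As CommandStatus
        Dim status As CommandStatus = CommandStatus.Unknown
        For Each evt As CommandEvent In eventStream
            Select Case evt.EventName
                Case "Command Created"
                    status = CommandStatus.Created
                Case "Command Execution Started"
                    status = CommandStatus.Executing
                Case "Fatal Error"
                    status = CommandStatus.Failed
                Case "Command Completed"
                    status = CommandStatus.Completed
            End Select
        Next
        Return status
    End Function

End Module
```

A status query could run this projection over the stream for a given command identifier. The handler's "to do" set would then be the commands whose projected status is neither `Completed` nor `Failed`.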

References

The Microsoft documentation, in particular How to use queues, is a primary reference for this article.

History

- 2014-02-02 Initial version

- 2016-04-15 Added notification of statuses

- 2017-01-08 Added "Implementing commands as an event stream" idea