So you have this SQL Server database that your application uses and it somehow seems to be slow. How do you troubleshoot this problem? Where do you look? What do you measure? I hope this article will answer enough questions to get you started so that you can identify the bottlenecks yourself, or know what to search for to further extend your arsenal and knowledge.

How Does SQL Server Work

To be able to troubleshoot performance problems, you need to have an understanding of how SQL Server works. The grossly simplified explanation is that SQL Server executes your queries as follows:

- The application sends a

request to the server, containing a stored procedure name or some T-SQL statements. - The request is placed in a queue inside SQL Server memory.

- A free thread from SQL Server’s own thread pool picks up the request, compiles it and executes it.

- The request is executed statement by statement, sequentially. A statement in a request must finish before the next starts, always. Stored procedures execute the same way, statement by statement.

- Statements can read or modify data. All data is read from in-memory cache of the database (the

buffer pool). If data is not in this cache, it must be read from disk into the cache. All updates are written into the database log and into the in-memory cache (into the buffer pool), according to the Write-Ahead Logging protocol. - Data locking ensures correctness under concurrency.

- When all statements in the request have executed, the thread is free to pick up another request and execute it.

- A request is currently executing that is suspended right now you can see what is it waiting on and how long has been waiting for.

For a much more detailed explanation, read Understanding how SQL Server executes a query.

A request is either executing or is waiting

This may sound trivial, but understanding that the request is either executing or is waiting for something (is suspended) is the key to troubleshooting SQL Server performance. If requests sent to SQL Server take a long time to return results, then they either take a long time executing or they take a long time waiting. Knowing whether it is one case or the other is crucial to figure out the performance bottlenecks. Additionally, if the requests take a long time waiting, we can dig further and find out what they are waiting for and for how long.

Understanding Request Waits

Waiting requests have Wait Info data

Whenever a request is suspended, for any reason, SQL Server will collect information about why it was suspended and for how long. In the internal SQL Server code, there is simply no way to call the function that suspends a task without providing the required wait info data. And this data is then collected and made available for you in many ways. This wait info data is crucial in determining performance problems:

- A request is currently executing that is executing right now you can see what is the last thing it waited on.

- You can understand when requests are waiting on other requests.

- You can get aggregates for what are the most waited for (busy) resources in the entire server.

- You can understand which physical (hardware) resources are saturated and are causing performance problems.

Wait Info for Currently Executing Requests

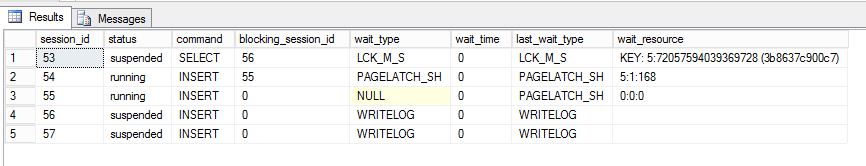

For every request executing on SQL Server, there is a row in sys.dm_exec_requests. Querying this DMV, at any moment, gives you a quick view of everything executing right then. The wait_type, wait_time and last_wait_type columns will give you an immediate feel of what is ‘running’ vs. what is ‘waiting’ and what is being waited for:

select session_id,

status,

command,

blocking_session_id,

wait_type,

wait_time,

last_wait_type,

wait_resource

from sys.dm_exec_requests

where r.session_id >= 50

and r.session_id <> @@spid;

- Request on session 53 is a

SELECT and is currently waiting. It is waiting on a lock. - Session 54 is an

INSERT and is currently waiting. It is waiting on a page latch. - Session 55 is an

INSERT and is currently running. Previous wait was a page latch. - Session 56 is an

INSERT and is currently waiting. It is waiting for the database log to flush (is committing a transaction). - Session 57 is an

INSERT and is currently waiting. It is waiting for the database log to flush.

Note that the request for session 54 has a status of running but it is actually waiting. This is because latch waits are expected to be short. The blocking_session_id also tells us, for some of the waiting tasks, what other request is holding the resource currently being waited. Session 53 is waiting on session 56 for a KEY lock resource, which means the SELECT is trying to read a row locked by the INSERT. The SELECT will not resume until the INSERT commits. Session 54 is waiting on session 55 for a page latch, which means that the session 55 INSERT is modifying that data page right now and the data on the page is unstable, not allowed to be read. Sessions 56 and 57 are waiting but there is no other session blocking them. They are waiting for the log to ‘flush’, meaning they must ensure the commit log record for their transaction had been durably written to disk. They will not resume until the disk controller acknowledges that the log was written.

Here is another example:

- Request on session 53 is a

COMMIT and is currently waiting. It is waiting for the database log to flush. - Session 54 is a

SELECT and is currently waiting. It is waiting on lock. - Session 55 is a

SELECT and is currently waiting. It is waiting on lock. - Session 56 is a

SELECT and is currently waiting. It is waiting on lock. - Session 57 is a

SELECT and is currently waiting. It is waiting on lock.

Note that in this example, every request is actually suspended. At this moment, the server is basically doing nothing. We have 4 sessions (54, 55, 56 and 57) waiting on the same lock (the row with the key lock resource KEY: 5:72057594039369728 (ac11a2bc89a9)). Session 54 is waiting on session 55 and session 55 is waiting on session 53 which means really session 54 is waiting on session 53. So everybody waits on session 53, which is waiting for the disk controller to write a log record on disk. We can also see, from the wait_time column, how long each session has waited for: about 160 milliseconds. Notice how in the previous example, we had only one request actually running out of 5. I’m running these queries on a workstation with 8 cores, plenty of RAM and a decent disk, so there is plenty of hardware to carry these requests, but instead, they are most of the times waiting instead of executing.

To make your requests faster, you need to have them be executing rather than waiting.

Is having most (or even all) requests waiting, rather than executing, something unusual? Not at all, this is the norm! Any time you will look at a SQL Server executing an even moderate load, you’ll see that most requests are waiting and only a few are executing. What you need to watch out for are long waits or short but repeated waits that add up. Long waits indicate some resource that is held for long times, and typically this occurs with locks. Repeated short waits indicate a resource that is being saturated, possibly a hot spot for performance.

Before I go further, I just want to show sys.dm_os_waiting_tasks, which is a SQL Server DMV specifically designed to show currently waiting tasks:

select * from sys.dm_os_waiting_tasks

where r.session_id >= 50

and r.session_id <> @@spid;

- Session 53 is waiting for the log to flush

- Session 57 is waiting for 40 ms for a lock on a row and is blocked by session 53 (which therefore must be owning the lock)

- Session 54 is waiting for 37 ms for a lock on a row and is blocked by session 57 (but session 57 is in turn blocked by session 53)

The situation we have here is much similar to the previous one, where we had 4 SELECT sessions blocked by an INSERT. He can see here two requests blocked by attempting to read a row (so they’re probably SELECT) and the blocking session is waiting for its transaction to durably commit. The information in this DMV is very similar to the one in sys.dm_exec_requests and the later one has more information, but there is one important case where the information in sys.dm_exec_requests is misleading: parallel queries.

To understand CXPACKET wait types you need to look into child parallel tasks

When a statement can benefit from parallel execution, the engine will create multiple tasks for the request, each processing a subset of the data. Each one of these tasks can execute on a separate CPU/core. The request communicates with these tasks using basically a producer-consumer queue. The query operator implementing this queue is called an Exchange operator (I’m really simplifying here, read The Parallelism Operator (aka Exchange) for a more accurate description). If this producer-consumer queue is empty (meaning the producers did not push any data into it) the consumer must suspend and wait and the corresponding wait type is the CXPACKET wait type. Requests that show this wait type are really showing that the tasks which should have produced data to consume are not producing any (or enough) data. These producer tasks in turn may be suspended, waiting on some other wait type and that is what is blocking your request, not the exchange operator. Here is an example:

select r.session_id,

status,

command,

r.blocking_session_id,

r.wait_type as [request_wait_type],

r.wait_time as [request_wait_time],

t.wait_type as [task_wait_type],

t.wait_duration_ms as [task_wait_time],

t.blocking_session_id,

t.resource_description

from sys.dm_exec_requests r

left join sys.dm_os_waiting_tasks t

on r.session_id = t.session_id

where r.session_id >= 50

and r.session_id <> @@spid;

Here we can see that request on session 54 has 3 tasks waiting, and while the request wait_type shows CXPACKET, one of the parallel child tasks is actually waiting on a page lock.

Aggregated Wait Stats: sys.dm_os_wait_stats

SQL Server aggregates statistics about all wait types and exposes them in a sys.dm_os_wait_stats. If looking at the currently executing requests and the waiting tasks showed us what was being waited at any moment, the aggregate stats give a cumulative status since server start up. Querying this DMV is straightforward, but interpreting the results is a bit more tricky:

select *

from sys.dm_os_wait_stats

order by wait_time_ms desc;

First, what’s the deal with all those wait types that take the lion’s share at the top of the result? DIRTY_PAGE_POOL, REQUEST_FOR_DEADLOCK_SEARCH, LAZYWRITER_SLEEP? We did not see any request ever waiting for these! Here is why: by adding the WHERE session_id >= 50 we filtered out background tasks, threads internal for SQL Server that are tasked with various maintenance jobs. Many of these background tasks follow a pattern like “wait for an event, when the event is signaled do some work, then wait again”, while others are patterned in a sleep cycle pattern (“sleep for 5 seconds, wake up and do something, go back to sleep 5 seconds”). Since no task can suspend itself without providing a wait type, there are wait types to capture when these background tasks suspend themselves. As these patterns of execution result in the task being actually suspended almost all time, the aggregated wait time for these wait types often trumps every other wait type. The SQL Server community came to name these wait types ‘benign wait types’ and most experts have a list of wait types they simply filter out, for example, see Filtering out benign waits:

select *

from sys.dm_os_wait_stats

WHERE [wait_type] NOT IN (

N'CLR_SEMAPHORE', N'LAZYWRITER_SLEEP',

N'RESOURCE_QUEUE', N'SQLTRACE_BUFFER_FLUSH',

N'SLEEP_TASK', N'SLEEP_SYSTEMTASK',

N'WAITFOR', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION',

N'CHECKPOINT_QUEUE', N'REQUEST_FOR_DEADLOCK_SEARCH',

N'XE_TIMER_EVENT', N'XE_DISPATCHER_JOIN',

N'LOGMGR_QUEUE', N'FT_IFTS_SCHEDULER_IDLE_WAIT',

N'BROKER_TASK_STOP', N'CLR_MANUAL_EVENT',

N'CLR_AUTO_EVENT', N'DISPATCHER_QUEUE_SEMAPHORE',

N'TRACEWRITE', N'XE_DISPATCHER_WAIT',

N'BROKER_TO_FLUSH', N'BROKER_EVENTHANDLER',

N'FT_IFTSHC_MUTEX', N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP',

N'DIRTY_PAGE_POLL', N'SP_SERVER_DIAGNOSTICS_SLEEP')

order by wait_time_ms desc;

Now we have a more coherent picture of the waits. The picture tells us what wait types are most prevalent, on aggregate, on this SQL Server instance. This can be an important step toward identifying a bottleneck cause. Furthermore, interpreting the data is data is subjective. Is the aggregate WRITELOG value of 4636805 milliseconds good or bad? I don’t know! Is an aggregate, accumulated since this process is running, so will obviously contiguously increase. Perhaps it had accumulated values from periods when the server was running smooth and from periods when it was running poorly. However, I now know that, from all ‘non bening’ wait types, WRITELOG is the one with the highest total wait time. I also know that max_wait_time for these wait types, so I know that there was at least one time when a task had to wait 1.4 seconds for the log to flush. The wait_task_count tells me how many times a task waited on a particular wait type, and dividing wait_time_ms/wait_task_count will tell me the average time a particular wait type has been waited on. And I see several lock related wait types in top: LCK_M_S, LCK_M_IS and LCK_M_IX. I can already tell some things about the overall behavior of the server, from a performance point of view: flushing the log is most waited resource on the server, and lock contention seems to be the next major issue. CXPACKET wait types are right there on top, but we know that most times CXPACKET wait types are just surrogate for other wait types, as discussed above. How about those other with types in the list, like SOS_SCHEDULER_YIELD, PAGELATCH_EX/SH or PAGEIOLATCH_EX/SH/UP?

Common Wait Types

In order to investigate wait types and wait times, either aggregate or for an individual query, one needs to understand what the wait type names mean. Many of the names are self-describing, some are cryptic in their name and some are downright deceitfully named. I think the best resource to read a detailed description of the names is the Waits and Queues white paper. It has an alphabetical list of wait types, description and what physical resource is correlated with. The white paper is a bit old (it covers SQL Server 2005) but still highly relevant. The wait type names for important resources has not changed since SQL server 2005, what you’ll be missing will be newer wait type names related to post SQL Server 2005 features. The wait types are briefly documented also in the sys.dm_os_wait_types topic on MSDN.

Disk and IO Related Wait Types

-

PAGEIOLATCH_*

- This is the quintessential IO, data read from disk and write to disk wait type. A task blocked on these wait types is waiting for data to be transferred between the disk and the in-memory data cache (the buffer pool). A system that has high aggregate

PAGEIOLATCH_* wait types is very likely memory starved and is spending much time reading data from the disk into the buffer pool. Be careful not to confuse this wait type with the PAGELATCH_* wait type (not the missing IO in the name). -

WRITELOG

- This wait type occurs when a task is issuing a

COMMIT and it waits for the log to write the transaction commit log record to disk. High average wait times on this wait type indicate that the disk is writing the log slowly and this slows down every transaction. Very frequent waits on this wait type are indicative of doing many small transaction and having to block frequently to wait for COMMIT (remember that all data writes require a transaction and one is implicitly created for each statement if no BEGIN TRANSACTION is explicitly used). -

IO_COMPLETION

- This wait type occurs for tasks that are waiting for something else that ordinary data IO. E.g.,. loading an assembly DLL, reading and writing TEMPDB sort files, some special DBCC data reading operations.

-

ASYNC_IO_COMPLETION

- This wait type is mostly associated with backup, restore, database and database file operations.

If your wait time analysis finds that IO and disk are important wait types, then your should focus on analyzing disk activity.

Memory Related Wait Types

-

RESOURCE_SEMAPHORE

- This wait type indicate queries that are waiting for a memory grant. See Understanding SQL Server memory grant. OLTP type workload queries should not require memory grants, if you see this wait type on an OLTP system, then you need to revisit your application design. OLAP workloads often are in need of (sometimes large) memory grants and large wait times on this wait type usually point toward RAM increase upgrades.

-

SOS_VIRTUALMEMORY_LOW

- Are you still on 32 bit systems? Move on!

Network Related Wait Types

-

ASYNC_NETWORK_IO

- This wait type indicates that the SQL Server has result sets to send to the application but the application does not process them. This may indicate a slow network connection. But more often, the problem is with the application code, it is either blocking while processing the result set or is requesting a huge result set.

CPU, Contention and Concurrency Related Wait Types

For further details, I recommend reading the Diagnosing and Resolving Spinlock Contention on SQL Server white paper.

-

LCK_*

- Locks. All wait types that start with

LCK indicate a task suspended waiting for a lock. LCK_M_S* wait types indicate a task that is waiting to read data (shared locks) and is blocked by another task that had modified the data (had acquired an exclusive LCK_MX* lock). LCK_M_SCH* wait types indicate object schema modification locks and indicate that access to an object (e.g.,. a table) is blocked by another task that did a DDL modification to that object (ALTER). -

PAGELATCH_*

- Do not confuse this wait type with the

PAGEIOLATCH_* wait types. High wait times on these wait types indicate a hot spot in the database, a region of the data that is very frequently updated (e.g., it could be a single record in a table that is constantly modified). To further analyze, I recommend the Diagnosing and Resolving Latch Contention on SQL Server white paper. -

LATCH_*

- These wait types indicate contention on internal SQL Server resources, not on data (unlike

PAGELATCH_* they do not indicate a hot spot in the data). To investigate these waits, you need to dig further using the sys.dm_os_latch_stats DMV which will detail the wait times by latch type. Again, the best resource is Diagnosing and Resolving Latch Contention on SQL Server white paper. -

CMEMTHREAD

- This wait type occurs when tasks block on waiting to access a shared memory allocator. I placed this here, in concurrency section, and not in ‘memory’ section because the problem is related to internal SQL Server concurrency. If you see high

CMEMTHREAD wait types, you should make sure you are at the latest SQL Server available Service Pack and Cumulative Update for your version, because some of these kind of problems denote internal SQL Server issues and are often addressed in newer releases. -

SOS_SCHEDULER_YIELD

- This wait type can indicate spinlock contention. Spinlocks are extremely light wait locking primitives in SQL Server used for protecting access to resources that can be modified within few CPU instructions. SQL Server tasks acquire spinlocks by doing interlocked CPU operations in a tight loop, so contention on spinlocks burns a lot of CPU time (CPU usage counters show 90-100% usage, but progress is slow). Further analysis need to be done using

sys.dm_os_spinlock_stats:

select * from sys.dm_os_spinlock_stats

order by spins desc;

-

RESOURCE_SEMAPHORE_QUERY_COMPILE

- This wait type indicates that a task is waiting to compile its request. High wait times of this type indicate that query compilation is a bottleneck. For more details, I recommend reading Troubleshooting Plan Cache Issues.

-

SQLCLR_QUANTUM_PUNISHMENT

- This wait type occurs if you run CLR code inside the SQL Server engine and your CLR code does not yield the CPU in time. This results in an throttling of the CLR code (“punishment”). If you have CLR code that can potentially hijack the CPU for a long time, you must call

Thread.BeginThreadAffinity(). For more details, I recommend watching Data, Faster: Microsoft SQL Server Performance Techniques with SQLCLR.

Special Wait Types

-

TRACEWRITE

- This wait type indicates that tasks are being blocked by SQL Profiler. This wait type occurs only if you have SQL Profiler attached to the server. This wait type

occur often during investigation if you had set up a SQL Profiler trace that is too aggressive (gets too many events). -

PREEMPTIVE_OS_WRITEFILEGATHER

- This wait type occurs, among other things, when auto-growth of files is triggered. Auto-growth occurs when a insufficiently sized file is grown by SQL Server and is a hugely expensive event. During growth event, all activity on the database is frozen. Data file growth can be made fast by enabling instant file growth, see Database File Initialization. But log growth cannot benefit from instant file initialization so log growth is always slow, sometimes very slow. Log Auto-Growth events can be diagnosed by simply looking at the Log Growths performance counter in the SQL Server, Databases Object, if is anything but

0, it means log auto-growth had occurred at least once. Real-time monitoring can be done by watching for Data File Auto Grow Event Class and Log File Auto Grow Event Class events in SQL Profiler.

I did not cover many wait types here (e.g., CLR_*, SQLCLR_*, SOSHOST_*, HADR_*, DTC_* and many more). If you encounter a wait type you do not understand, usually just searching online for its name will reveal information about what that wait type is and what potential bottleneck it indicates, if any.

Analyze Disk Activity: IO Stats

SQL Server needs to read and write to the disk. All writes (inserts, updates, deletes) must be written to disk. Queries always return data from the in-memory cache (the buffer pool) but the cache may not contain the desired data and has to be read from disk. Understanding if the IO is a bottleneck for your performance is a necessary step in any performance investigation. SQL Server collects, aggregates and exposes information about every data and log IO request. First thing I like to look at is sys.dm_io_virtual_file_stats. This DMV exposes the number of writes and reads on each file for every database in the system, along with the aggregated read and write IO ‘stall’ times. Stall times are the total time tasks had to block waiting for transfer of data to and from disk.

select db_name(io.database_id) as database_name,

mf.physical_name as file_name,

io.*

from sys.dm_io_virtual_file_stats(NULL, NULL) io

join sys.master_files mf on mf.database_id = io.database_id

and mf.file_id = io.file_id

order by (io.num_of_bytes_read + io.num_of_bytes_written) desc;

The per file IO stats are aggregated since server start up, but be aware that they reset for each file if it ever goes offline. The total number of bytes transferred (reads and writes) is a good indicator of how busy a database is from the IO point of view. The stalls indicate which IO subsystem (which disk) is busy and may even be saturated.

Analyzing Individual Query Execution

When analyzing an individual statement or query for performance allows us to understand what the query is doing, how much is executing vs. what is waiting on. The most simple analysis is to use the execution statistics. These work by first running the appropriate SET STATISTICS ... ON in your session and then executing the statement or query you want to analyze. In addition to the usual result, the query will also report execution statistics, as follows:

-

- The query parse time, compile time and execution time are reported. If you execute a batch or a stored procedure with multiple statements, then you get back the time for each statement, which helps in determining which statement in a procedure is the expensive one. But we’ll see that analyzing the execution plan reveals the same information, and more.

-

- The IO statistics show, for each statement, the number of IO operations. The result shows several metrics for each table touched by the statement:

-

scan count

- Number of times the scans or seeks were started on the table. Ideally each table should be scanned at most once.

-

logical reads

- Number of data pages from which rows were read from the in-memory cache (the buffer pool).

-

physical reads

- Number of data pages from which data was had to be transferred from disk in-memory cache (the buffer pool) and the task had to block and wait for the transfer to finish.

-

read-ahead reads

- Number of data pages that were asynchronously transferred from disk into the buffer pool opportunistically and the task did not had to wait for the transfer.

-

LOB logical/physical/read-ahead reads

- Same as their non-lob counterparts, but referring to reading of large columns (LOBs).

There is also the SET STATISTICS PROFILE ON, which provides a detailed execution information for a statement, but much of the information overlaps with the execution plan info and I think analyzing the execution plan is easier and yields better information.

Logical Reads are the best measure of a statement cost.

A large logical reads value for a statement indicates an expensive statement. For OLTP environments, the number of logical reads should be in single digits. Large logical reads count translate to long execution times and high CPU consumption even under ideally conditions. Under less ideal conditions, some or all of these logical reads turn into physical reads which mean long wait times waiting for disk transfer of data into the buffer pool. Large logical reads are indicative of large data scans, usually entire table end-to-end scan. They are most times caused by missing indexes. In OLAP, decision and DW environments, scans and large logical reads may be unavoidable as indexing for an OLAP workload is tricky, and indexing for an ad-hoc analytic workload is basically impossible. For these later cases, the answer usually relies in using DW specific technologies like columnstores.

Information about query execution statistics can also be obtained using SQL Profiler. The SQL:StmtCompleted, SQL:BatchCompleted,SP:StmtCompleted and RPC:Completed capture the number of page reads, the number of page writes and the execution time of an individual invocation of a statement, a stored procedure or a SQL batch. The number of reads is logical reads. Note that monitoring with SQL Profiler for individual statement completed events will have effect on performance and on a big busy server it can cause significant performance degradation.

You can also see the number of logical reads, reads and writes as it happens by looking at the sys.dm_exec_requests but this information is visible only if while a request is running. Furthermore, the number aggregates all statements in a request so you cannot determine the cost of an individual statement using this DMV.

Analyzing Individual Query Execution Wait Times

If you need to drill down more detail that execution time and IO statistics for a query or for a stored procedure, the best information is the wait types and wait times that occurred during execution. Obtaining this information is no longer straightforward though, it involves using Extended Events monitoring. The method I’m presenting here is based on the Debugging slow response times in SQL Server 2008 article. It involves creating an extended event session that captures the sqlos.wait_info info and filters the extended events session for a specific execution session (SPID):

create event session session_waits on server

add event sqlos.wait_info

(WHERE sqlserver.session_id=<execution_spid_here> and duration>0)

, add event sqlos.wait_info_external

(WHERE sqlserver.session_id=<execution_spid_here> and duration>0)

add target package0.asynchronous_file_target

(SET filename=N'c:\temp\wait_stats.xel', metadatafile=N'c:\temp\wait_stats.xem');

go

alter event session session_waits on server state= start;

go

With the Extended Events session created and started, you can now run the query or procedure you want to analyze. After that, stop the Extended Event session and look at the captured data:

alter event session session_waits on server state= stop;

go

with x as (

select cast(event_data as xml) as xevent

from sys.fn_xe_file_target_read_file

('c:\temp\wait_stats*.xel', 'c:\temp\wait_stats*.xem', null, null))

select * from x;

go

As you can see, the Extended Events session captured each individual wait, each incident when the session was suspended. If we click on an individual captured event, we see the event data XML:

<event name="wait_info" package="sqlos" timestamp="2014-01-14T12:08:14.388Z">

<data name="wait_type">

<value>8</value>

<text>LCK_M_IX</text>

</data>

<data name="opcode">

<value>1</value>

<text>End</text>

</data>

<data name="duration">

<value>4</value>

</data>

<data name="signal_duration">

<value>2</value>

</data>

</event>

This is a 4 millisecond wait for an IX mode lock. Another 2 milliseconds had spent until the task resumed, after the lock was granted. You can shred out the XML into columns for a better view:

with x as (

select cast(event_data as xml) as xevent

from sys.fn_xe_file_target_read_file

('c:\temp\wait_stats*.xel', 'c:\temp\wait_stats*.xem', null, null))

select xevent.value(N'(/event/data[@name="wait_type"]/text)[1]', 'sysname') as wait_type,

xevent.value(N'(/event/data[@name="duration"]/value)[1]', 'int') as duration,

xevent.value(N'(/event/data[@name="signal_duration"]/value)[1]', 'int') as signal_duration

from x;

Finally, we can aggregate all the data in the captured Extended Events session:

with x as (

select cast(event_data as xml) as xevent

from sys.fn_xe_file_target_read_file

('c:\temp\wait_stats*.xel', 'c:\temp\wait_stats*.xem', null, null)),

s as (select xevent.value(N'(/event/data[@name="wait_type"]/text)[1]', 'sysname') as wait_type,

xevent.value(N'(/event/data[@name="duration"]/value)[1]', 'int') as duration,

xevent.value(N'(/event/data[@name="signal_duration"]/value)[1]', 'int') as signal_duration

from x)

select wait_type,

count(*) as count_waits,

sum(duration) as total__duration,

sum(signal_duration) as total_signal_duration,

max(duration) as max_duration,

max(signal_duration) as max_signal_duration

from s

group by wait_type

order by sum(duration) desc;

This is an exceptional wealth of information about what happened during the execution of my request. 742 times, it waited on the log commit to be written to disk for a total of ~14 seconds wait time, 12 times it waited for a lock, 2 times for a page latch, etc. If you are curious, my 'workload' I captured was a single row INSERT in a loop.

Analyzing Execution Plans

SQL Server can expose the actual execution plan for queries. The actual query tree gets serialized as XML and there are several ways to obtain it:

If you don't know anything about execution plans, then focus on thick arrows.

I will not enter into much detail into how to analyze a query plan because the subject is way too vast for this article. Execution plans capture what the query did, namely what operators it executed, how many times each operator was invoked, how much data each operator processed and how much data it produced in turn, and how much actual time it took for each operation. Unlike the wait times analysis, analyzing the execution focuses on runtime and where was the actual CPU time consumed. The graphical display of SSMS simplifies the information in an execution plan XML to make it more easily consumable and the graphical representation makes it easy to stop the bottlenecks. Thick arrows represent large data transfers between operators, meaning that operators had to look at a lot of rows to produce their output. Needless to say, the more data an operator needs to process, the more time it takes. Hovering over operators display a popup info note with details about the operator. A large actual row count shows large amount of data being processed. The popup include also the estimated row count which is how much data SQL Server had predicted the operator will process, based on the table statistics. A wild discrepancy between actual and estimated often is responsible for bad performance and can be tracked to outdated statistics on the table.

Another thing that is of interest in the execution plan are the operators themselves. Certain operators are inherently expensive, for example sort operators. An expensive operator coupled with a large data input is usually the cause of a huge execution time. Even if you do not know which operators are expensive and which are not, the execution plan contains the cost associated with each operator and you can use this information to get an idea for where problems may be.

Identifying Problem Queries

SQL Server exposes the runtime execution statistics for most queries it had run in sys.dm_exec_query_stats. This DMV shows the number of times a query had run, total/max/min/last active CPU time ('worker time'), total/max/min/last elapsed wall-clock time, total/max/min/last logical reads and logical writes, total/max/min/last number of rows returned. This kind of information is a real treasure cove to identify problems in an application:

-

Large Execution Count

- Queries that are run frequently are the most sensitive for performance. Even if they have acceptable performance, if they are run often, then small improvements in each individual execution can yield overall significant speed. But even better is to look, with a critical eye, into why the is query executed often. Application changes can reduce the number of execution count.

-

Large Logical Reads

- A query that has to scan large amounts of data is going to be a slow query, there is no question about this. The focus should be on reducing the amount of data scanned, typically the problem is a missing index. A 'bad' query plan can also be a problem. A high number of logical reads is accompanied by a high worker time but the issue is not high CPU usage, but the focus should be on large logical reads.

-

Large Physical Reads

- This is basically the same problem as large logical reads, but it also indicates that your server does not have sufficient RAM. Luckily this is, by far, the easiest problem to solve: just buy more RAM, max out the server RAM slots. You're still going to have a large logical reads, and you should address that problem too, but addressing an application design issue is seldom as easy as ordering 1TB of RAM...

-

High Worker Time with Low Logical Reads

- This case is not very common and indicates an operation that is very CPU intensive even with little data to process. String processing and XML processing are typical culprits and you should look at moving the CPU burn toward the periphery, in other words, perform the CPU intensive operations on the client, not on the server.

-

High Elapsed Time with Log Worker Time

- A query that takes a long wall-clock time but utilizes few CPU cycles is indicative of blocking. This is a query that was suspended most of the time, waiting on something. Wait analysis should indicate the contention cause, the resource being waited for.

- High Total Rows Count

- Large result sets requested by the application can be justified in an extremely low number of cases. This problem must be addressed in the application design.

Finding a problem query using sys.dm_exec_query_stats becomes a simply exercise of ordering by the desired criteria (by execution count to find frequently run queries, by logical reads, by elapsed time, etc.). Then get the text of the query in question use sys.dm_exec_sql_text, and to get the query execution plan, use sys.dm_exec_query_plan:

select st.text,

pl.query_plan,

qs.*

from sys.dm_exec_query_stats qs

cross apply sys.dm_exec_sql_text(qs.sql_handle) as st

cross apply sys.dm_exec_query_plan(qs.plan_handle) as pl;

If you do not know what kind of problem query to look for to start with, my advice is to focus in this order:

- High execution count. Identifying what queries are run most often is in my opinion more important that identifying which queries are particularly slow. More often than not, the queries found to be run most often are a surprise and simply limiting the execution count yield significant performance improvements.

- High logical reads. Large data scans are the usual culprit for most performance problems. They may be caused by missing indices, by a poorly designed data model, by 'bad' plans caused by outdated statistics or parameter sniffing and other causes.

- High elapsed time with low worker time. Blocking queries do not tax the server much, but your application users do not care if the time they waited in front of the screen for a refresh was spent active or blocked.

Missing Indexes

Repeatedly, I said that performance problems are caused by 'missing indexes', and SQL Server has a feature aptly named Missing Indexes:

The missing indexes feature uses dynamic management objects and Showplan to provide information about missing indexes that could enhance SQL Server query performance.

Personally, I seldom focus my analysis on the built in missing index feature. To start with, the feature has known limitations, but more important is that the feature is starting with the solution, without first searching for a cause. I always start with identifying problem queries first, searching for ones which would yield the highest bang for the buck. Once a problem query is identified as causing large scans, the missing index DMVs can be consulted to identify a candidate. But you should always take the recommendations with a grain of salt. The missing index feature does not know what the query is doing from an application business logic point of view. Reviewing the query can potentially find a better solution than what the automated missing index feature may recommend.

Experienced administrators have in place automation that periodically inspects the missing index DMVs and find potential hot spots and problems. This is a very good proactive approach. But in most cases, the performance problems are hot and immediate, and finding them using wait analysis or query execution stats is a more direct process. For a more in depth discussion of missing indexes DMVs, I recommend sp_BlitzIndex.

TEMPDB Performance

tempdb is the special SQL Server database that is used to store any transient data, including things like temporary tables, static cursors, table variables, sort runs, internal worktables, the version store and piles more. Even though the problems that can occur with tempdb are ultimately CPU, IO or contention problems, tempdb deserves a special topic because some of these problems can be understood at a higher level as tempdb problems, as opposed to, say, low level latch contention problems. tempdb also tends to manifest a set of problems entirely of its own, specially due to the very high rate of creation and destruction of objects in tempdb. MSDN has an Optimizing tempdb Performance topic which contains some practical advice, and Cindy Gross has compiled a collection of SQL Server TempDB IO Best Practices.

Make each tempdb data file the same size

In general, I tried to emphasize the importance of measuring and understanding SQL Server performance characteristics instead of blindly following advice. But here is one piece of advice that I always give, without any secondary consideration: make sure tempdb is stored on files of identical size, with identical growth characteristics. I've spent just too many hours chasing difficult to diagnose issues to discover that they're ultimately caused by unaligned tempdb files.

I would also recommend as mandatory reading Martin Smith's answer to this dba.stackexchange.com question: What's the difference between a temp table and table variable in SQL Server?. The answer goes in great detail not only from a tempdb usage point of view, but also impact on locking, compilation, parallelism and indexability, all critical components of performance.

SQL Server Performance Counters

Performance counters offer a different perspective into SQL Server performance than the wait analysis and execution DMVs do. The biggest advantage of performance counters is that they are extremely cheap to collect and store so they lend themselves as the natural choice for long term monitoring. Looking at current performance counter numbers can reveal potential problems, but interpreting the values is subject to experience and there are a lot of lore and myths on certain magical values. For analyzing performance problems on a server that you have access and is misbehaving right now, a more direct approach using wait analysis and execution stats is my recommended approach. Where performance counters shine is comparing current behavior with past behavior. for which, obviously, you need to have performance counters values collected and saved from said past. These should be part of an established baseline, which has captured the server behavior under workload when the server was behaving correctly and was not experiencing problems. If you do no have a baseline, consider establishing one.

The performance counters infrastructure workhorse is the logman.exe utility. For a quick introduction, read Two Minute Drill: LOGMAN.EXE. I recommend you familiarize yourself with this tool. I favor it over the graphic interface (perfmon.exe) because, as a command line interface (CLI) tool, it allows for easy scripting. Telling someone "run the attached script" is always easier that telling "click the button, select A from the list, then choose menu B, click twice in the middle of the red square, etc.". The graphic perfmon.exe tool is the tool of choice for displaying performance counters.

Performance counters, if collected at sufficient resolution, are also an excellent tool for determining correlation. The graphic display makes it easy to spot counters that tend to spike together, or in an anti-correlated pattern (one spikes the other dips). Other patterns also become evident in the graphic visualization, like lag (e.g., a counter spike is always preceded by another one increasing). Short of having a data scientist doing factorial analysis on your counters, your own eyes are the best analysis tool.

SQL Server Usage Performance Counters

-

- If you want one counter to best represent the overall performance throughput of your server, this is it. It captures the workload pressure (how many requests the application is making), and how is the server coping with the workload. Trends in this counter need to be further analyzed: an increase in the value could mean the server is performing better, because it can accept and handle more requests, but it can also mean the application is sending more requests (bigger, heavier workload), for example more your application is serving more client requests.

-

- The number of active transactions (any type). Be aware that there are at least two counters named

Transactions, one in the SQL Server:General Statistics object and another one in the SQL Server:Transactions object. I'm referring to the later one. Knowing the number of active transactions is important to set a background on your understanding of the workload. -

- This is especially useful when troubleshooting spikes or jagged performance. For example, if you can correlate moments where performance drops with moments when the blocking increase you have found an area to focus your investigation on: blocking.

-

- Understanding whether errors occur in your workload is a key point in performance troubleshooting. Eliminating errors is an easy path toward performance increase.

-

- Related to the number of Errors/sec, monitoring the rate of deadlocks can quickly point toward a performance problem. It is hard to believe that you are not aware that deadlocks are occurring, given the impact they have on the application. But I've seen cases where the deadlocks were quietly swallowed in the application or simply there was no communication between the organization monitoring the application and the DBA organization monitoring the server.

-

- I can hardly think of any event in SQL Server execution that has a more dramatic impact on performance than log growth. During log growth, every write request effectively freezes as it waits for available log to generate the write-ahead log records necessary to record any update. Log growth cannot use instant file initialization so it must wait until the log file is grown and the newly allocated disk space is filled with 0s. Your application users might as well watch paint dry... Often cases, this is aggravated by a log file gone out of control with 10% increments which is now growing in hundreds of megabytes or even gigabytes at a time. The impact on performance is usually devastating, and is also difficult to track down after the fact. It shows as a sudden performance dip (actually often a complete freeze) that lasts few seconds or even minutes, and then everything is again fast until the next log growth event. The article How to shrink the SQL Server log offers an explanation why log growth can occur. Data and log growth can also be tracked by non-zero values for the

PREEMPTIVE_OS_WRITEFILEGATHER wait info statistics. - Data File(s) Size (KB)

- Monitoring the data size is interesting on its own, but there is another reason why this counter should be collected: it can show when database growth events occur. Unlike Log Growths, data growths do not have counter of their own (shame!), but the events can be deduced from sudden jumps in the data file size. Note that data growth can leverage instant file initialization so the impact may not be as dramatic. You should always ensure instant file initialization is enabled for SQL Server, if is not the impact may be serious, specially if you encounter tempdb growth events (which in my experience occurs on most deployments).

IO Related Performance Counters

-

- The rate at which data pages are read from disk. This will be the main driver factor for disk read IO. Normally, your workload should read only a very small amount of data from disk, the data should be cached in memory. Constant reading is a sign of data scans (missing indexes) and/or insufficient memory (RAM).

-

- Together with the

Log Flush Waits/sec, Log Flush Wait Time and Log Flush Write Time (ms), you can quickly get a feel of how much blocking occurs due to transactions waiting to commit (log flush events). For well written OLTP intensive applications the rate at which transactions can be committed is often the main performance bottleneck. -

- I intentionally put data write after log writes, because most times is the log that is the problem. Only few workloads are data-write heavy. However, data-write can interfere with disk IO, specially with log writes, and cause delays in log flush waits that are very visible as application performance drops. The typical pattern is some 'longer' time of good performance with periodic 'short' times of performance dip. 'Long' and 'short' are relative, sometime you see 1-2 minutes 'long' and 10-15 seconds as 'short', but other times is 10-15 minutes 'long' and 1-2 minutes 'short'. The jagged pattern is the key, with a strong correlation between performance dips and data-writes spikes. The pattern is caused by checkpoints and the timing is driven by the

recovery interval option. Looking at Checkpoint pages/sec will quickly corroborate this hypothesis. -

- This counter is the usual culprit to blame

Page writes/sec counter spikes. -

- This performance counter exposes the wait info object for log writes. The advantage of using performance counters vs. the DMVs is to leverage the performance counters infrastructure, namely collecting the values in a continuous fashion using the operating system collectors.

-

- Same as above, this is a wait info exposed through performance counters. Strictly speaking, this does not measure IO, it measures only when blocking caused by IO occurs. You cannot distinguish between read IO and write IO with this counter.

-

- Often the IO requirements of the maintenance backup operations are forgotten when doing performance analysis. Understanding the additional IO pressure a backup adds and tracking it through this counter is important on its own, but is even more important to understand correlation between spikes in this counter and general performance degradation. This is a scenario I've see all too often: the maintenance plan kicks in and the overall throughput dips significantly, but nobody understands why.

-

- This category is the operating system counter for monitoring the IO operations. The typical focus is not on throughput, but on queue length, indicating IO operations that take a long time. For a more thorough explanation, read Windows Performance Monitor Disk Counters Explained, Monitoring Usage and Monitoring Queue Length. Operating system exposes IO counters for each individual drive separately, always corroborate findings with the SQL Server's own IO stats as reported by the virtual IO stats DMVs. Another good resource to read is this SQL Server Technet wiki page: Monitoring Disk Usage.

-

- The Process counter category has a different instance for each OS executing process and is very important to analyze when the overall IO on the system does not match the IO as reported by SQL Server. Often times, you'll discover that another process is causing IO making the disks busy and increasing the response time in SQL Server. Unfortunately, monitoring this counter is difficult long term because instances come and go as OS executable processes start and terminate.

-

- Despite being in the Memory counter category, understanding if and when paging occurs is critical in understand IO performance as paging can introduce a large spike in IO requests. See Monitoring Paging.

Memory Related Performance Counters

-

- PLE is measuring the average time a page stays cached in memory before being evicted because of the need to read more data from disk. The DBA lore gives this counter a magical value of 300 (5 minutes). This originates with the The 5 Minute Rule for Trading Memory for Disc Accesses paper published back in 1985, then revised as The Five-Minute Rule Ten Years Later and again revised as The Five-Minute Rule 20 Years Later. This 2011 SQL Magazine Q&A with Paul Randal goes on to say:

The value of 300 seconds is a Microsoft recommendation in a white paper that was written five years ago. It’s not a relevant guideline nowadays, and even back in 2006 I would have argued that aiming for a set value isn’t a valid recommendation.

Analyzing PLE is useful as it gives a single value to get a feel for the server memory health, but do not panic if the value is 297 and relax is 302... More important is to understand trends and the dynamic of this value. A jagged pattern (periodic dips) indicates something that comes and creates a great disturbance in the server memory, perhaps a periodic query that causes a scan, evicting much of the cached data. An overall trend in reducing PLE indicates your workload increases but the server hardware is the same and is a great indicator of potential future problems, better to alleviate now.

On machines with NUMA nodes, which in this day and age means pretty much every server, you should pay attention to the PLE per node using the SQL Server:Buffer Node\Page life expectancy counter. Read Page Life Expectancy isn’t what you think….

-

- This category tracks the how is memory used inside the SQL Server process. the absolute values themselves are somehow esoteric, but having a baseline to compare against and looking at long term trends is a great way to determine what changed and what components start putting SQL Server under memory pressure.

-

- I'm calling out this counter in the Memory Manager category because is particularly important in understanding blocking caused by memory grant waits. Memory grants are only needed for certain query operators and queries requesting large memory grants tend to wreak havoc with your SQL Server performance. Read Understanding SQL server memory grant for more details.

-

- Related to the above, this is the memory grant wait info exposed as a performance counter.

-

- The Process category OS performance counter has an instance for each process in the system and allows you to easily track other processes that may consume memory, causing external memory pressure for SQL Server and ultimately performance degradation.

CPU Performance Counters

-

- I really hope this one doesn't need an explanation. The OS reported CPU usage counter.

-

- I'm mentioning this counter here, in the CPU category, because it is a critical counter to analyze when considering CPU usage in SQL Server. When this counter correlates strongly with CPU usage is a clear indicator of why the CPU usage increased: more pages are scanned, the queries simply look at more data and therefore consume more CPU. The usual culprit is, again, table scans (i.e. missing index, poor data modeling or a bad query plan).

-

- The Process category OS performance counter has an instance for each process in the system and allows you to easily track other processes that may consume CPU, causing SQL Server performance degradation.

SQL Server Blocking Related Performance Counters

-

- Monitoring and analyzing the locking performance counters can reveal if lock related blocking is a significant cause of performance problems. Look specially at the

Lock Wait Time (ms) value, it will give you an insight on how much percent of the time is spent by queries being blocked waiting for locks. You won't be able to determine exactly what query is blocking what, but knowing that lock waits are significant allows you to focus on analyzing the block chains. sp_whoIsActive is a great tool for that. -

- Most important wait info types are reported also as performance counters. Analyzing these is similar to analyzing the wait statistics using the DMVs, but the performance counters have the advantage of being available in the whole performance counters toolset ecosystem, making easy to collect, store and analyze. Not all wait info types are exposed as performance counters.

-

- If you see high

Average Latch Wait Time (ms) values, you should perform an investigation to find the problem latches. I recommend you read Diagnosing and Resolving Latch Contention on SQL Server.

Network Performance Counters

ProTip: Network is not your bottleneck. Network performance comes into the picture usually only for deployments using Database Mirroring or Availability Groups. These features are indeed network hungry and require a fast, high bandwidth-low latency connection between the nodes. Furthermore, both DBM and AG tend to push their problems up the stack, resulting in performance degradation when the network traffic cannot keep up with the workload.

-

- This counter shows the wait info stats related to the server being blocked because the client is not consuming the result sets. Most often, this does not indicate a network problem, but an application design problem: the client is requesting large result sets.

-

- These counters show the network traffic generated by Service Broker and Database Mirroring. Availability Groups have a more specialized counter category in SQL Server:Availability Replica.

-

- This is the performance counter where all the network activity is exposed, from all processes.

-

- These categories are important for monitoring the health of the traffic, not the amount. Specifically, they can indicate network errors, dropped packets and retries.

Defining Your Own Counters

You can actually report custom performance counters from T-SQL. The SQL Server:User Settable is specifically designed for this and allows you to report up the 10 user defined values to be monitored and collected by the system performance counters tools. You publish counter values by using sp_user_counter1 through sp_user_counter10:

EXECUTE sp_user_counter1 @value;

For application developers, it is also possible to add performance counters to the application itself. Publishing performance counters from managed (.NET) application code is trivially easy, and you can automate the somehow boring process of declaring them, see Using XSLT to generate Performance Counters code.

Advanced Performance Analysis

Developers are familiar with performance analysis using sample profiling, with tools like VTune, F1 or WPA. The sample profiling technique consists of periodically taking a stack sample of each thread in the profiled process, up to several hundred times per second. The process is non-invasive, it does not require a specially instrumented process, any process can be profiled. It is also fairly lightweight, taking several hundred samples per second has no measurable impact on the performance of the process being sampled. The trick is interpreting the observations. Using the Microsoft public symbols server, you can resolve the collected samples to actual function names in the source code (note that in my experience F1 and WPA do a much better job at handling the LTCG code of SQL Server than VTune does). With some common sense, one can make an educated guess what exactly was the SQL Server doing during the period being sampled. This technique makes sense only if you are seeing significant CPU usage. If the performance is bad and you see slow queries but low CPU usage, it means the queries are waiting and you should focus instead on wait info analysis. Sample profiling of a blocked process will reveal nothing useful.

WPA is one of my favorite performance tools and it can do more than just sample profiling. It can visualize XPerf ETW traces and this makes it extremely powerful. Existing documentation and whitepapers for WPA tends to focus on multimedia and game development, but if you are and adventurous spirit and want to learn about how your favorite database works, using WPA to analyze SQL Server works can reveal a great deal of information and help you spot performance improvements that can be leveraged in your application. One of my favorite use cases for WPA is to collect IO activity (this will collect every single IO request) and then visualize the Disk Offset as a graph. This is an incredibly simple, yet powerful, way to visualize random IO vs. sequential IO patterns. If you have a feeling WPA and XPerf/ETW are somehow related to SQL Server Extended Events, that is because they are, very much so. Finally, some readings to get you started on using WPA:

The USE Method

USE stands for Utilization, Saturation and Errors, and the USE Method is a formalized approach to investigating performance troubleshooting. Its focus is on identifying resources in a system and then for each one, measure the utilization (percent in use compared to maximum bandwidth), the saturation of the resource (extra work queued because the resource cannot service due to 100% utilization) and errors.

When used in SQL Server context, the tricky part is to identify the resources and how to measure the utilization/saturation/errors. Some resources are obvious: disk, CPU, memory, network. Sometimes even simply starting with the USE method and measuring these hardware parameters is a good way to start a performance investigation, at the very least you'll know what hardware component is saturated, if any. But knowing how SQL Server works can reveal more detailed, internal resources that can be analyzed. In Understanding how SQL Server executes a query I have shown this image:

Many of the components in the image above can be investigated as Resources in the USE method. Applying this methodology requires some thinking outside the box since you will not find much references online about how to apply the USE method to SQL Server, what resources to look for and how to measure the utilization, saturation and errors for each. I myself do not have a clear picture yet for each resource involved and I'm still collecting facts and know-how on the subject. But I like the USE approach because it gives a method, a strategy to follow in troubleshooting performance.