Check this Video for Apache Hadoop Installation in Windows

- Introduction

- Hadoop 2.3 for Windows 7/8/8.1 - Specifically Built for Windows x64

- Hadoop 2.3 for Windows (112.5 MB)

GitHub Link:

https://github.com/prabaprakash/Hadoop-2.3

Box Link:

https://app.box.com/s/11fwozokqmc1ohttt117

Google Drive Link:

https://drive.google.com/file/d/0Bz7A6rJcTjx_Q0RDT0FrU3dUTDQ/edit?usp=sharing

Dropbox Link:

https://www.dropbox.com/s/p8xsfmx9g76pn0t/hadoop-2.3.0.tar.gz

- Pre Configured file - https://github.com/prabaprakash/Hadoop-2.3-Config/archive/master.zip

- Java SDK / Runtime 1.6 Mandatory

Download Links: http://download.oracle.com/otn/java/jdk/6u31-b05/jdk-6u31-windows-x64.exe

Reference Link: http://www.oracle.com/technetwork/java/javasebusiness/downloads/java-archive-downloads-javase6-419409.html

- Map Reduce Jobs in Java

- Redgate HDFS Explorer - http://bigdatainstallers.azurewebsites.net/files/HDFS%20Explorer/beta/1/HDFS%20Explorer%20-%20beta.application

- Eclipse Plugin for Hadoop MapReduce Jobs with Simple HDFS Explorer and Code Completion Configuration like Visual Studio

- Eclipse IDE - http://www.eclipse.org/downloads/download.php?file=/technology/epp/downloads/release/kepler/SR2/eclipse-jee-kepler-SR2-win32-x86_64.zip

- Hadoop MapReduce Plugin for Eclipse - https://github.com/winghc/hadoop2x-eclipse-plugin/archive/master.zip

- Datasets

- Recipe Samples

Source Code: https://github.com/prabaprakash/Hadoop-Map-Reduce-Code

Documentation:

https://github.com/prabaprakash/Hadoop-Map-Reduce-Code/blob/master/Recipe%20Sample/Recipe%20Documentation.docx

- If You Need Hadoop 2.5.1 Native Built for Ubuntu 14.10

Setup: https://github.com/prabaprakash/Hadoop-2.5.1-Binary

Config: https://github.com/prabaprakash/Hadoop-2.5.1-Config-Files

1. Introduction

- Hadoop is a free, Java-based programming framework that supports the processing of large data sets in a distributed computing environment.

- It is part of the Apache project sponsored by the Apache Software Foundation.

- Hadoop makes it possible to run applications on systems with thousands of nodes involving thousands of terabytes.

- Its distributed file system facilitates rapid data transfer rates among nodes and allows the system to continue operating uninterrupted in case of a node failure.

- This approach lowers the risk of catastrophic system failure, even if a significant number of nodes become inoperative.

- Hadoop was inspired by Google's MapReduce, a software framework in which an application is broken down into numerous small parts.

- Any of these parts (also called fragments or blocks) can be run on any node in the cluster. Doug Cutting, Hadoop's creator, named the framework after his child's stuffed toy elephant.

- The current Apache Hadoop ecosystem consists of the Hadoop kernel, MapReduce, the Hadoop distributed file system (HDFS) and a number of related projects such as Apache Hive, HBase and Zookeeper.

- The Hadoop framework is used by major players including Google, Yahoo and IBM, largely for applications involving search engines and advertising. The preferred operating systems are Windows and Linux but Hadoop can also work with BSD and OS X.

It is hard to understand the Apache Hadoop framework without interactive sessions, so here are some YouTube playlists that explain Apache Hadoop interactively:

Playlist 1 - By Lynn Langit

http://www.youtube.com/playlist?list=PL8C3359ECF726D473

Playlist 2 - By handsonerp

http://www.youtube.com/user/handsonerp/search?query=hadoop

Playlist 3 - By Edureka!

http://www.youtube.com/playlist?list=PL9ooVrP1hQOHpJj0DW8GoQqnkbptAsqjZ

Some Ways to Install Hadoop in Windows

- Cygwin

- http://sundersinghc.wordpress.com/2013/04/08/running-hadoop-on-cygwin-in-windows-single-node-cluster/

- http://bigdata.globant.com/?p=7

- http://alans.se/blog/2010/hadoop-hbase-cygwin-windows-7-x64/#.U0bamFerMiw

- Azure HD Insight Emulator

- http://azure.microsoft.com/en-us/documentation/articles/hdinsight-get-started-emulator/

- Build Hadoop for Windows

- By Apache Doc - https://svn.apache.org/viewvc/hadoop/common/branches/branch-2/BUILDING.txt?view=markup

- Perfect Guide By Abhijit Ghosh from https://app.box.com/s/11fwozokqmc1ohttt117

- HortonWorks for Windows (Hadoop 2.0) and also SandBox Images of Hadoop 2.0 for Hyper-V / Vmware / Virtual Box

- HortonWorks for Windows - http://hortonworks.com/partner/microsoft/

- Sandbox 2.0 - http://hortonworks.com/products/hortonworks-sandbox/

- Cloudera VM

- http://www.cloudera.com/content/support/en/downloads.html

Other Cloud Services

- Azure HD Insight

- Amazon Elastic Map Reduce

- IBM Blue Mix - Hadoop Service

2. Hadoop 2.3 for Windows 7/8/8.1 - Specifically Built for Windows x64

I built Hadoop 2.3 for Windows x64 by following the steps provided by Abhijit Ghosh at http://www.srccodes.com/p/article/38/build-install-configure-run-apache-hadoop-2.2.0-microsoft-windows-os. The end of the Maven build output looked like this:

[INFO] Executed tasks

[INFO]

[INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---

[INFO] Building jar: C:\hdp\hadoop-dist\target\hadoop-dist-2.3.0-javadoc.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Apache Hadoop Main ................................ SUCCESS [1.847s]

[INFO] Apache Hadoop Project POM ......................... SUCCESS [3.218s]

[INFO] Apache Hadoop Annotations ......................... SUCCESS [3.812s]

[INFO] Apache Hadoop Assemblies .......................... SUCCESS [0.522s]

[INFO] Apache Hadoop Project Dist POM .................... SUCCESS [3.717s]

[INFO] Apache Hadoop Maven Plugins ....................... SUCCESS [6.613s]

[INFO] Apache Hadoop MiniKDC ............................. SUCCESS [7.117s]

[INFO] Apache Hadoop Auth ................................ SUCCESS [5.104s]

[INFO] Apache Hadoop Auth Examples ....................... SUCCESS [4.230s]

[INFO] Apache Hadoop Common .............................. SUCCESS [3:18.829s]

[INFO] Apache Hadoop NFS ................................. SUCCESS [13.442s]

[INFO] Apache Hadoop Common Project ...................... SUCCESS [0.066s]

[INFO] Apache Hadoop HDFS ................................ SUCCESS [2:45.070s]

[INFO] Apache Hadoop HttpFS .............................. SUCCESS [40.280s]

[INFO] Apache Hadoop HDFS BookKeeper Journal ............. SUCCESS [10.956s]

[INFO] Apache Hadoop HDFS-NFS ............................ SUCCESS [5.037s]

[INFO] Apache Hadoop HDFS Project ........................ SUCCESS [0.075s]

[INFO] hadoop-yarn ....................................... SUCCESS [0.070s]

[INFO] hadoop-yarn-api ................................... SUCCESS [1:12.357s]

[INFO] hadoop-yarn-common ................................ SUCCESS [46.634s]

[INFO] hadoop-yarn-server ................................ SUCCESS [0.071s]

[INFO] hadoop-yarn-server-common ......................... SUCCESS [10.907s]

[INFO] hadoop-yarn-server-nodemanager .................... SUCCESS [25.635s]

[INFO] hadoop-yarn-server-web-proxy ...................... SUCCESS [4.293s]

[INFO] hadoop-yarn-server-resourcemanager ................ SUCCESS [30.427s]

[INFO] hadoop-yarn-server-tests .......................... SUCCESS [3.817s]

[INFO] hadoop-yarn-client ................................ SUCCESS [7.340s]

[INFO] hadoop-yarn-applications .......................... SUCCESS [0.068s]

[INFO] hadoop-yarn-applications-distributedshell ......... SUCCESS [3.047s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher .... SUCCESS [2.346s]

[INFO] hadoop-yarn-site .................................. SUCCESS [0.101s]

[INFO] hadoop-yarn-project ............................... SUCCESS [4.986s]

[INFO] hadoop-mapreduce-client ........................... SUCCESS [0.137s]

[INFO] hadoop-mapreduce-client-core ...................... SUCCESS [51.554s]

[INFO] hadoop-mapreduce-client-common .................... SUCCESS [28.285s]

[INFO] hadoop-mapreduce-client-shuffle ................... SUCCESS [3.548s]

[INFO] hadoop-mapreduce-client-app ....................... SUCCESS [22.627s]

[INFO] hadoop-mapreduce-client-hs ........................ SUCCESS [12.972s]

[INFO] hadoop-mapreduce-client-jobclient ................. SUCCESS [51.921s]

[INFO] hadoop-mapreduce-client-hs-plugins ................ SUCCESS [2.340s]

[INFO] Apache Hadoop MapReduce Examples .................. SUCCESS [9.765s]

[INFO] hadoop-mapreduce .................................. SUCCESS [3.397s]

[INFO] Apache Hadoop MapReduce Streaming ................. SUCCESS [16.817s]

[INFO] Apache Hadoop Distributed Copy .................... SUCCESS [37.303s]

[INFO] Apache Hadoop Archives ............................ SUCCESS [2.773s]

[INFO] Apache Hadoop Rumen ............................... SUCCESS [11.225s]

[INFO] Apache Hadoop Gridmix ............................. SUCCESS [7.554s]

[INFO] Apache Hadoop Data Join ........................... SUCCESS [3.982s]

[INFO] Apache Hadoop Extras .............................. SUCCESS [4.627s]

[INFO] Apache Hadoop Pipes ............................... SUCCESS [0.080s]

[INFO] Apache Hadoop OpenStack support ................... SUCCESS [8.620s]

[INFO] Apache Hadoop Client .............................. SUCCESS [8.964s]

[INFO] Apache Hadoop Mini-Cluster ........................ SUCCESS [0.186s]

[INFO] Apache Hadoop Scheduler Load Simulator ............ SUCCESS [16.472s]

[INFO] Apache Hadoop Tools Dist .......................... SUCCESS [7.326s]

[INFO] Apache Hadoop Tools ............................... SUCCESS [0.066s]

[INFO] Apache Hadoop Distribution ........................ SUCCESS [1:09.690s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 17:47.469s

[INFO] Finished at: Sun Mar 23 18:01:41 IST 2014

[INFO] Final Memory: 131M/349M

[INFO] ------------------------------------------------------------------------

Steps for Installation

- Download Hadoop 2.3 for Windows (112.5 MB) from my box account - https://app.box.com/s/11fwozokqmc1ohttt117

- Also download the configuration files from my GitHub account - https://github.com/prabaprakash/Hadoop-2.3-Config/archive/master.zip

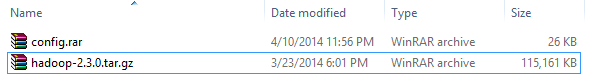

You should now have both of these files.

- Open hadoop-2.3.0.tar.gz with WinRAR and extract it to the local disk, so that the files end up in c:\hadoop-2.3.0

- Open config.rar with WinRAR

Open the bin directory in WinRAR and extract the yarn.cmd file into the c:\hadoop-2.3.0\bin folder

Open config\etc\hadoop and extract

- yarn-site.xml

- mapred-site.xml

- httpfs-site.xml

- hdfs-site.xml

- hadoop-policy.xml

- core-site.xml

- capacity-scheduler.xml

to c:\hadoop-2.3.0\etc\hadoop, replacing the existing files.

- Java 1.6 is mandatory: the Apache developers built the Hadoop framework using Java 1.6, so we need both the Java 1.6 SDK and the Java 1.6 runtime

- Download Java SDK 1.6.0_31

http://download.oracle.com/otn/java/jdk/6u31-b05/jdk-6u31-windows-x64.exe

Then install it

- Set the environment variables

Control Panel\System and Security\System

Open Advanced System Settings

Add a new variable "HADOOP_HOME" with the value "c:\hadoop-2.3.0"

Also add a new variable "JAVA_HOME" whose value is your Java installation path

System Variables -> Path -> Edit

Add the Hadoop bin path and the Java 6 bin path -> click OK

- Then open hadoop-env.cmd in WordPad; it is located at C:\hadoop-2.3.0\etc\hadoop\hadoop-env.cmd

Set the JAVA_HOME path on line 25. Remember to use the JDK installation path, not the JDK bin path.
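A quick way to confirm the variables before moving on is a small Java program. What follows is only a minimal sketch, written to compile on Java 6: the class name `HadoopEnvCheck` and its helper method are made up for illustration, and the `bin\hadoop.cmd` check assumes the archive layout used in this article.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Sanity check for the HADOOP_HOME and JAVA_HOME variables.
// Class and method names are illustrative, not part of Hadoop.
class HadoopEnvCheck {

    // Returns a list of human-readable problems; an empty list means the
    // two variables look plausible. Only the given map is inspected.
    static List<String> findProblems(Map<String, String> env) {
        List<String> problems = new ArrayList<String>();
        String hadoopHome = env.get("HADOOP_HOME");
        String javaHome = env.get("JAVA_HOME");

        if (hadoopHome == null || hadoopHome.trim().length() == 0) {
            problems.add("HADOOP_HOME is not set");
        }
        if (javaHome == null || javaHome.trim().length() == 0) {
            problems.add("JAVA_HOME is not set");
        } else {
            // JAVA_HOME must point at the JDK root, not at its bin folder.
            String normalized = javaHome.trim().replace('/', '\\').toLowerCase();
            while (normalized.endsWith("\\")) {
                normalized = normalized.substring(0, normalized.length() - 1);
            }
            if (normalized.endsWith("\\bin")) {
                problems.add("JAVA_HOME points at a bin folder: " + javaHome);
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        List<String> problems = findProblems(System.getenv());

        // Extra check against the real disk (assumed layout): bin\hadoop.cmd.
        String hadoopHome = System.getenv("HADOOP_HOME");
        if (hadoopHome != null
                && !new File(new File(hadoopHome.trim(), "bin"), "hadoop.cmd").isFile()) {
            problems.add("bin\\hadoop.cmd not found under " + hadoopHome);
        }

        if (problems.isEmpty()) {
            System.out.println("Environment looks OK");
        } else {
            for (String problem : problems) {
                System.out.println("Problem: " + problem);
            }
        }
    }
}
```

Run it from a newly opened command prompt, because a prompt that was already open does not see changed environment variables.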

- Let's play with Apache Hadoop 2.3

- Open cmd as administrator

C:\Windows\system32>cd c:\hadoop-2.3.0\bin

c:\hadoop-2.3.0\bin>hadoop

Usage: hadoop [--config confdir] COMMAND

where COMMAND is one of:

fs run a generic filesystem user client

version print the version

jar <jar> run a jar file

checknative [-a|-h] check native hadoop and compression libraries availability

distcp <srcurl> <desturl> copy file or directories recursively

archive -archiveName NAME -p <parent> <src>* <dest> create a hadoop archive

classpath prints the class path needed to get the Hadoop jar and the required libraries

daemonlog get/set the log level for each daemon

or

CLASSNAME run the class named CLASSNAME

Most commands print help when invoked w/o parameters.


c:\hadoop-2.3.0\bin>hadoop namenode -format

This creates an HDFS file system on your machine and formats it.

c:\hadoop-2.3.0\bin>cd..

c:\hadoop-2.3.0>cd sbin

c:\hadoop-2.3.0\sbin>start-dfs.cmd

c:\hadoop-2.3.0\sbin>start-yarn.cmd

starting yarn daemons

Now check that the HDFS NameNode and DataNode and the YARN NodeManager and ResourceManager are all running at the same time.
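Besides looking at the console windows that the start scripts open, one way to check the daemons is to probe their web ports. The sketch below assumes the Hadoop 2.x default ports (50070 for the NameNode, 50075 for the DataNode, 8088 for the ResourceManager, 8042 for the NodeManager); the preconfigured files may override these, so treat the numbers as assumptions. The class name `DaemonPortCheck` is made up for illustration.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Probes TCP ports to see whether the Hadoop daemons are listening.
// Java 6 compatible. The ports in main() are assumed defaults.
class DaemonPortCheck {

    // Returns true if something accepts a TCP connection on host:port
    // within timeoutMillis, false otherwise.
    static boolean isListening(String host, int port, int timeoutMillis) {
        Socket socket = new Socket();
        try {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        } finally {
            try {
                socket.close();
            } catch (IOException ignored) {
                // Nothing useful to do if close fails.
            }
        }
    }

    public static void main(String[] args) {
        String[] names = { "NameNode", "DataNode", "ResourceManager", "NodeManager" };
        int[] ports = { 50070, 50075, 8088, 8042 };
        for (int i = 0; i < ports.length; i++) {
            boolean up = isListening("localhost", ports[i], 2000);
            System.out.println(names[i] + " web UI (port " + ports[i] + "): "
                    + (up ? "listening" : "not reachable"));
        }
    }
}
```

A listening port only shows that a process is bound to it; opening http://localhost:50070 and http://localhost:8088 in a browser gives a fuller picture.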

OK, let's move on to MapReduce

3. Some Map Reduce Jobs

- Everywhere I looked, programmers began their first MapReduce program with the simple WordCount example.

- I was bored of that, so let's begin with recipes.

- Download the recipeitems-latest.json.gz file (26 MB)

http://openrecipes.s3.amazonaws.com/recipeitems-latest.json.gz

- Create a folder named hwork in c:\

Extract recipeitems-latest.json.gz into the c:\hwork folder. The extracted file is about 150 MB.

It contains about 1.5 lakh (roughly 150,000) recipe items, one JSON object per line, for example:

{ "_id" : { "oid" : "5160756b96cc62079cc2db15" }, "name" : "Drop Biscuits and Sausage Gravy", "ingredients" : "Biscuits\n3 cups All-purpose Flour\n2 Tablespoons Baking Powder\n1/2 teaspoon Salt\n1-1/2 stick (3/4 Cup) Cold Butter, Cut Into Pieces\n1-1/4 cup Butermilk\n SAUSAGE GRAVY\n1 pound Breakfast Sausage, Hot Or Mild\n1/3 cup All-purpose Flour\n4 cups Whole Milk\n1/2 teaspoon Seasoned Salt\n2 teaspoons Black Pepper, More To Taste", "url" : "http://thepioneerwoman.com/cooking/2013/03/drop-biscuits-and-sausage-gravy/", "image" : "http://static.thepioneerwoman.com/cooking/files/2013/03/bisgrav.jpg", "ts" : { "date" : 1365276011104 }, "cookTime" : "PT30M", "source" : "thepioneerwoman", "recipeYield" : "12", "datePublished" : "2013-03-11", "prepTime" : "PT10M", "description" : "Late Saturday afternoon, after Marlboro Man had returned home with the soccer-playing girls, and I had returned home with the..." }

- Download the Gson library for Java to deserialize the JSON

https://code.google.com/p/google-gson/downloads/detail?name=google-gson-2.2.4-release.zip&can=2&q=

Extract the zip file, copy all the jar files, and paste them into the C:\hadoop-2.3.0\share\hadoop\common\lib folder.

The JSON file holds approximately 1.5 lakh recipe items. My goal is to count the number of items per "cookTime" value, which gives output like this:

PT0H20M 25

PT0H25M 24

PT0H2M 3

PT0H30M 74

PT0H34M 1

PT0H35M 31

PT0H3M 1

PT0H40M 67

PT0H45M 74

PT0H50M 52

PT0H55M 10

PT0H5M 118

PT0H6M 1

PT0H7M 1

PT0H8M 6

PT0M 80

- Map Reduce Code

Recipe.java

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

import com.google.gson.Gson;

public class Recipe {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

Gson gson = new Gson();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

Roo roo=gson.fromJson(value.toString(),Roo.class);

// Gson returns null for an empty line, so check roo before reading cookTime.

if(roo!=null && roo.cookTime!=null)

{

word.set(roo.cookTime);

}

else

{

word.set("none");

}

context.write(word, one);

}

}

public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: recipe <in> <out>");

System.exit(2);

}

// Job.getInstance replaces the deprecated Job constructor.

Job job = Job.getInstance(conf, "Recipe");

job.setJarByClass(Recipe.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

class Id

{

public String oid;

}

class Ts

{

public long date ;

}

class Roo

{

public Id _id ;

public String name ;

public String ingredients ;

public String url ;

public String image ;

public Ts ts ;

public String cookTime;

public String source ;

public String recipeYield ;

public String datePublished;

public String prepTime ;

public String description;

}

By default, Hadoop reads the input file line by line and sends each line to the TokenizerMapper class.

In the TokenizerMapper class, we deserialize the JSON string into an instance of the Roo class.

From the roo object we read the cookTime (or use "none" when it is missing) and write it to the Mapper context as the key, with a count of 1.

In the IntSumReducer class, we sum the counts for each key and write the total to the Reducer context.
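To see the same flow without a cluster, here is a plain-Java imitation of the job. It is only a sketch under stated assumptions: the class `CookTimeCountSketch` is made up, a simple regular expression stands in for Gson (so it handles flat sample lines only, not arbitrary JSON), and a TreeMap plays the role of Hadoop's grouping and sorting by key.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Plain-Java imitation of the Recipe job: no Hadoop or Gson required.
class CookTimeCountSketch {

    // Simplified stand-in for Gson: pulls the "cookTime" string out of one
    // JSON line. Good enough for flat sample lines, not a real JSON parser.
    private static final Pattern COOK_TIME =
            Pattern.compile("\"cookTime\"\\s*:\\s*\"([^\"]*)\"");

    // "Map" step: one input line in, one key out ("none" if no cookTime).
    static String mapLine(String jsonLine) {
        Matcher m = COOK_TIME.matcher(jsonLine);
        return m.find() ? m.group(1) : "none";
    }

    // "Reduce" step folded into a loop: add 1 to the running sum of each key.
    // TreeMap keeps the keys sorted, similar to the sorted job output.
    static Map<String, Integer> countCookTimes(String[] jsonLines) {
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        for (String line : jsonLines) {
            String key = mapLine(line);
            Integer current = counts.get(key);
            counts.put(key, current == null ? 1 : current + 1);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical sample lines, shaped like the recipe records.
        String[] lines = {
            "{ \"name\" : \"A\", \"cookTime\" : \"PT30M\" }",
            "{ \"name\" : \"B\", \"cookTime\" : \"PT10M\" }",
            "{ \"name\" : \"C\", \"cookTime\" : \"PT30M\" }",
            "{ \"name\" : \"D\" }"
        };
        for (Map.Entry<String, Integer> e : countCookTimes(lines).entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

In the real job, Hadoop performs the grouping between the map and reduce steps, which is why the reducer receives each key together with all of its counts.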

- We need to compile it.

Create Recipe.java in the c:\hwork folder, then run the following command

c:\Hwork>javac -classpath C:\hadoop-2.3.0\share\hadoop\common\hadoop-common-2.3.0.jar;C:\hadoop-2.3.0\share\hadoop\mapreduce\hadoop-mapreduce-client-core-2.3.0.jar;C:\hadoop-2.3.0\share\hadoop\common\lib\gson-2.2.4.jar;C:\hadoop-2.3.0\share\hadoop\common\lib\commons-cli-1.2.jar Recipe.java

Our MapReduce program is now compiled. Next we need to create a jar file, because Hadoop needs a jar file to run the job.

To make the jar, run the command below

C:\Hwork>jar -cvf Recipe.jar *.class

added manifest

adding: Id.class(in = 217) (out= 179)(deflated 17%)

adding: Recipe$IntSumReducer.class(in = 1726) (out= 736)(deflated 57%)

adding: Recipe$TokenizerMapper.class(in = 1887) (out= 820)(deflated 56%)

adding: Recipe.class(in = 1861) (out= 1006)(deflated 45%)

adding: Roo.class(in = 435) (out= 293)(deflated 32%)

adding: Ts.class(in = 201) (out= 168)(deflated 16%)

We are ready to run the MapReduce program, but first we need to copy the c:\hwork\recipeitems-latest.json file to the Hadoop distributed filesystem. Follow the steps given below

c:\hadoop-2.3.0\sbin>hadoop fs -mkdir /in

c:\hadoop-2.3.0\sbin>hadoop fs -copyFromLocal c:\Hwork\recipeitems-latest.json /in

So we copied the file from the local disk to the Hadoop Distributed File System.

Now run the MapReduce job:

c:\hadoop-2.3.0\sbin>hadoop jar c:\Hwork\Recipe.jar Recipe /in /out

14/04/12 00:52:02 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/04/12 00:52:03 INFO input.FileInputFormat: Total input paths to process : 1

14/04/12 00:52:03 INFO mapreduce.JobSubmitter: number of splits:1

14/04/12 00:52:04 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1397243723769_0001

14/04/12 00:52:04 INFO impl.YarnClientImpl: Submitted application application_1397243723769_0001

14/04/12 00:52:04 INFO mapreduce.Job: The url to track the job: http://OmSkathi:8088/proxy/application_1397243723769_0001/

14/04/12 00:52:04 INFO mapreduce.Job: Running job: job_1397243723769_0001

14/04/12 00:52:16 INFO mapreduce.Job: Job job_1397243723769_0001 running in uber mode : false

14/04/12 00:52:16 INFO mapreduce.Job: map 0% reduce 0%

14/04/12 00:52:26 INFO mapreduce.Job: map 100% reduce 0%

14/04/12 00:52:33 INFO mapreduce.Job: map 100% reduce 100%

14/04/12 00:52:34 INFO mapreduce.Job: Job job_1397243723769_0001 completed successfully

14/04/12 00:52:34 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=3872

FILE: Number of bytes written=180889

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=119406749

HDFS: Number of bytes written=2871

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=7383

Total time spent by all reduces in occupied slots (ms)=5121

Total time spent by all map tasks (ms)=7383

Total time spent by all reduce tasks (ms)=5121

Total vcore-seconds taken by all map tasks=7383

Total vcore-seconds taken by all reduce tasks=5121

Total megabyte-seconds taken by all map tasks=7560192

Total megabyte-seconds taken by all reduce tasks=5243904

Map-Reduce Framework

Map input records=146949

Map output records=146949

Map output bytes=1387492

Map output materialized bytes=3872

Input split bytes=113

Combine input records=146949

Combine output records=293

Reduce input groups=293

Reduce shuffle bytes=3872

Reduce input records=293

Reduce output records=293

Spilled Records=586

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=70

CPU time spent (ms)=5108

Physical memory (bytes) snapshot=370135040

Virtual memory (bytes) snapshot=428552192

Total committed heap usage (bytes)=270860288

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=119406636

File Output Format Counters

Bytes Written=2871
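The counters above show the effect of `job.setCombinerClass(IntSumReducer.class)`: 146949 map output records went into the combiner and only 293 came out, so the reducer received 293 records. Reusing the reducer as a combiner works here because addition gives the same total whether the 1s are summed directly or as partial sums. A minimal plain-Java sketch of that idea follows; the class `CombinerSketch` and the two "map tasks" are hypothetical (the job above ran a single map task).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Shows why a sum reducer can double as a combiner. No Hadoop required.
class CombinerSketch {

    // Adds amount to the running sum stored under key.
    private static void add(Map<String, Integer> sums, String key, int amount) {
        Integer current = sums.get(key);
        sums.put(key, current == null ? amount : current + amount);
    }

    // Combiner: runs on one map task's output, where every key carries the
    // value 1, and emits one partial sum per distinct key.
    static Map<String, Integer> combine(String[] mapOutputKeys) {
        Map<String, Integer> partial = new TreeMap<String, Integer>();
        for (String key : mapOutputKeys) {
            add(partial, key, 1);
        }
        return partial;
    }

    // Reducer: sums the partial sums coming from all map tasks.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> totals = new TreeMap<String, Integer>();
        for (Map<String, Integer> partial : partials) {
            for (Map.Entry<String, Integer> e : partial.entrySet()) {
                add(totals, e.getKey(), e.getValue());
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        // Two hypothetical map tasks, five (cookTime, 1) records in total.
        List<Map<String, Integer>> partials = new ArrayList<Map<String, Integer>>();
        partials.add(combine(new String[] { "PT30M", "PT10M", "PT30M" }));
        partials.add(combine(new String[] { "PT30M", "PT10M" }));

        int shuffled = 0;
        for (Map<String, Integer> partial : partials) {
            shuffled += partial.size();
        }
        System.out.println("records sent to the reducer: " + shuffled);
        System.out.println("totals: " + reduce(partials));
    }
}
```

A combiner is only safe when the operation can be applied to partial results in any grouping, as a sum can; an average, for example, could not reuse its reducer this way.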

The MapReduce job completed successfully. Now check the output folder "/out"

c:\hadoop-2.3.0\sbin>hadoop fs -ls /out

Windows_NT-amd64-64

Found 2 items

-rw-r--r-- 1 PrabaKarthi supergroup 0 2014-04-12 00:52 /out/_SUCCESS

-rw-r--r-- 1 PrabaKarthi supergroup 2871 2014-04-12 00:52 /out/part-r-00000

Open the output file and have a good look. You will enjoy doing analytics work with Apache Hadoop.

c:\hadoop-2.3.0\sbin>hadoop fs -cat /out/part-r-00000

P0D 121

P1D 2

P1DT6H 1

P4DT8H 1

PT 8491

PT0H10M 56

PT0H12M 1

PT0H14M 1

PT0H15M 55

PT0H1M 1

PT0H20M 25

PT0H25M 24

PT0H2M 3

PT0H30M 74

PT0H34M 1

PT0H35M 31

PT0H3M 1

PT0H40M 67

PT0H45M 74

PT0H50M 52

PT0H55M 10

PT0H5M 118

PT0H6M 1

PT0H7M 1

PT0H8M 6

PT0M 80

PT1008H 1

PT100M 1

PT10H 102

PT10H0M 2

PT10H10M 5

PT10H15M 4

PT10H20M 1

PT10H25M 1

PT10H30M 5

PT10H35M 1

PT10H40M 1

PT10H45M 1

PT10M 9982
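The keys are ISO 8601 durations, and the job counts them as raw strings, which is why "PT0H10M" and "PT10M" show up as separate rows even though both mean ten minutes. If you want to merge such rows, one option is to convert each key to minutes first. The sketch below is an assumption-laden helper, not part of the job above: the class `CookTimeMinutes` is made up, it handles only days, hours, minutes and seconds, and it returns -1 for anything else, including the bare "PT" key.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Converts ISO 8601 duration strings such as "PT0H30M" to whole minutes.
// Java 6 compatible (java.time is not available there).
class CookTimeMinutes {

    // Days, hours, minutes and seconds; every part is optional.
    // Years, months and weeks are deliberately not handled.
    private static final Pattern DURATION = Pattern.compile(
            "P(?:(\\d+)D)?(?:T(?:(\\d+)H)?(?:(\\d+)M)?(?:(\\d+)S)?)?");

    // Returns whole minutes, or -1 if the text is not a supported duration
    // or carries no component at all (such as the bare "PT" key).
    static long toMinutes(String text) {
        if (text == null) {
            return -1;
        }
        Matcher m = DURATION.matcher(text.trim());
        if (!m.matches()) {
            return -1;
        }
        long[] secondsPerUnit = { 86400, 3600, 60, 1 };
        long totalSeconds = 0;
        boolean sawComponent = false;
        for (int i = 0; i < secondsPerUnit.length; i++) {
            String digits = m.group(i + 1);
            if (digits != null) {
                sawComponent = true;
                totalSeconds += Long.parseLong(digits) * secondsPerUnit[i];
            }
        }
        return sawComponent ? totalSeconds / 60 : -1;
    }

    public static void main(String[] args) {
        String[] keys = { "PT0H10M", "PT10M", "PT10H", "P1DT6H", "PT", "none" };
        for (String key : keys) {
            System.out.println(key + " -> " + toMinutes(key));
        }
    }
}
```

Calling a helper like this in the mapper, before `word.set(...)`, would group equivalent durations under one key.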

So, we have run a MapReduce job. Everyone knows about that part, so next I am going to list some tools that make this work easier than before.

4. Redgate HDFS Explorer

I got bored of copying local files to the Hadoop filesystem with commands, and of retrieving Hadoop filesystem data with commands too. I found this open source tool, which is fun to use. First download it (2.5 MB)

http://bigdatainstallers.azurewebsites.net/files/HDFS%20Explorer/beta/1/HDFS%20Explorer%20-%20beta.application

- Install it. We already copied the configuration files for Hadoop 2.3, so our Hadoop filesystem is accessible remotely, including from web clients written in Java, C#, Python, etc.

- Open HDFS Explorer

File->Add Connection

Browse the Hadoop file system in a graphical file explorer. Copy the input file from the local disk and paste it into HDFS, or copy the output from HDFS and paste it onto your local disk. You can do every operation that a traditional file explorer offers. Enjoy HDFS Explorer.
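For access from your own code, one route is the WebHDFS REST API that ships with Hadoop 2.x. The following is a minimal sketch that lists a directory over HTTP. It assumes WebHDFS is enabled in hdfs-site.xml and that the NameNode web port is the default 50070; the class `WebHdfsList`, the host and the user name "hadoop" are placeholders for illustration.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;

// Lists an HDFS directory through the WebHDFS REST API. Java 6 compatible.
class WebHdfsList {

    // Builds the LISTSTATUS URL. hdfsPath is assumed to be a simple
    // absolute path such as "/out" that needs no URL escaping.
    static String listStatusUrl(String host, int port, String hdfsPath, String user) {
        try {
            return "http://" + host + ":" + port + "/webhdfs/v1" + hdfsPath
                    + "?op=LISTSTATUS&user.name=" + URLEncoder.encode(user, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always available", e);
        }
    }

    // Performs the GET request and returns the raw JSON response body.
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        try {
            conn.setRequestMethod("GET");
            BufferedReader reader = new BufferedReader(
                    new InputStreamReader(conn.getInputStream(), "UTF-8"));
            try {
                StringBuilder body = new StringBuilder();
                String line;
                while ((line = reader.readLine()) != null) {
                    body.append(line).append('\n');
                }
                return body.toString();
            } finally {
                reader.close();
            }
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Host, port and user name are assumptions; adjust them to your setup.
        String url = listStatusUrl("localhost", 50070, "/out", "hadoop");
        System.out.println(fetch(url));
    }
}
```

The response is a JSON document describing each file in the directory, which you can then parse with Gson in the same way as the recipe records.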

HDFS Explorer is good, but I was bored of writing MapReduce code in Notepad++ without proper IntelliSense or indentation. I found an Eclipse plugin for Hadoop MapReduce. Let's go to the next topic.


5. Eclipse Plugin for Hadoop MapReduce Jobs with Simple HDFS Explorer and Auto Code Completion Configuration like Visual Studio

Eclipse IDE - http://www.eclipse.org/downloads/download.php?file=/technology/epp/downloads/release/kepler/SR2/eclipse-jee-kepler-SR2-win32-x86_64.zip

Hadoop MapReduce Plugin for Eclipse - https://github.com/winghc/hadoop2x-eclipse-plugin/archive/master.zip

Let's begin. Download the "Eclipse Kepler IDE" above (250 MB), and also download the Hadoop MapReduce plugin for Eclipse (23 MB).

- Extract the Eclipse IDE

For this explanation, the Eclipse IDE is extracted to D:\eclipse

- Open hadoop2x-eclipse-plugin-master.zip

Go to the "release" directory and extract the "hadoop-eclipse-kepler-plugin-2.2.0.jar" file into the eclipse\plugins folder

Let's rock and roll.

- Open Eclipse IDE (Run As Administrator)

Choose your own workspace location -> click OK

Menu->Window->Open Perspective->Other->Map/Reduce

- I prefer Visual Studio, so I want Visual Studio-like IntelliSense and code formatting in Eclipse. A few configuration changes make the work easier

Menu->Window->Preferences->Java->Editor->Content Assist->"Auto Activation"

- check enable auto activation

- auto activation delay(ms):0

- auto activation trigger for java : .(abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ

- auto activation triggers for javadoc:@#

Apply->Ok

- Configure Hdfs and Map/reduce connection

Map/reduce location->new hadoop location

Location name: "some name". Leave the other settings as shown in the image below; do not modify them, because the MapReduce and DFS addresses were already configured in the c:\hadoop-2.3.0\etc\hadoop folder.

Simple HDFS Explorer

- File->new project->map /reduce Project->next

It shows an error because the Hadoop installation folder is not configured correctly

Now, browse to the installation directory and click Apply

Click Next

Important: this is where mistakes usually happen, because Hadoop 2.3 needs JDK 6 for both compilation and runtime

Click Add Library ->JRE System Library

Click installed JRE's


Add -> Standard VM

Browse the jdk 1.6 location and click finish

ok->ok->finish->finish

So, the Hadoop 2.3 libraries are added -> good. But again we get a JDK 1.7 error, because we need JDK 1.6

Change to JDK 1.6 -> click OK

Finally, the Hadoop 2.3 libraries are in place along with JDK 1.6.

Your Eclipse is now configured for Hadoop MapReduce coding and execution, along with IntelliSense-style code completion. Let's code

- Add new -> Recipe.java in the src folder, then copy and paste the code above

- Right click -> Recipe.java -> Run As -> Run on Hadoop

- Map Reduce Job is Running

- Job Completed

Examples

- Hadoop : WordCount with Custom Record Reader of TextInputFormat

6. Datasets

- Large Public Datasets

- Free Large datasets to experiment with Hadoop

- Explain patent data set in Hadoop example

- 60,000+ Documented UFO Sightings With Text Descriptions And Metadata

- Recipe-Items List

Reference Books

- Hadoop Map Reduce CookBook - Srinath Perera

- Big Data Analytics: From Strategic Planning to Enterprise Integration with Tools, Techniques, NoSQL, and Graph

- Hadoop: The Definitive Guide MapReduce for the Cloud

Reference Links

- Searchcloudcomputing.techtarget.com

- Hadoop: What it is, how it works, and what it can do

- IBM: What is Hadoop?

- Hadoop at Yahoo

Conclusion

I am sure this article will help beginner and intermediate programmers bootstrap Apache Hadoop (a Big Data analytics framework) in a Windows environment.

Yours Friendly

Prabakaran.A