Introduction

This is the third article in my series on code generation. It discusses moving a business application into the cloud (IaaS). The first article introduced the use of UML and model transformation to speed up development. The second article discussed the extensibility that XSLT provides.

Background

The reasons for moving into the cloud today are clear: you can deploy your system quickly without waiting for hardware to be shipped and set up. The main drivers are cost savings and the agility to respond to demand.

Architecture

Before we begin coding, some planning and research are required. Several questions come into play when thinking about a distributed environment:

- Security - paramount, and should be addressed very early.

- Brokered authentication - a single source for the credential store.

- Scope of the distributed architecture - migration of an internal client/server application into the cloud.

First, security must be taken into account. Moving an application from an internal scope (intranet) into a public scope (internet) requires some analysis of the existing architecture.

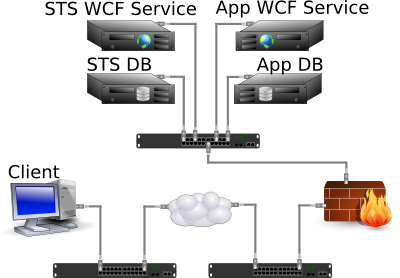

The following image depicts the existing client / server architecture:

The prototype application created in the last two articles used a database backend that effectively ran on the same machine (SQLite), but a typical remote database server could be assumed here. In that scenario, security was the responsibility of the database, and thus of a database login. A login mechanism implemented in the application provided no real security, given that the database credentials were stored in the client. The client has direct access to the database and may therefore become a target for gaining access. On the other hand, using database-level logins for each user is a licensing issue and may not be cheap, so an application-level login was chosen.

In an internet scenario, trust in the client is lost, because you cannot really control which client is logging into the database. If the client is responsible for authenticating each user, a modified client may skip this step, connect directly to the database and let the user do anything. The client can no longer be trusted.

Once it is clear that the server must at least take over the role of authentication, it is worth thinking twice: what if the application wants to communicate with another backend? This is where a pattern comes into play: brokered authentication.

Finishing the basic design reveals some major changes:

The client is assumed to be anywhere on the internet and is therefore no longer trusted to connect directly to the database backend. A server must be placed between the database and the client. This is the time to say a little about XPO, a commercial ORM developed by DevExpress (http://www.devexpress.com), which provides classes that make life easier. The client connection string, which previously connected directly to the database (SQLite in my case), now connects to a data store service. XPO provides a client/server library for this that supports WCF as its communication mechanism, which made it easy to split the code into client-related and server-related components. As a first step, changing a connection string seemed an easy way to integrate this into the existing client.
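To make the "only the connection string changes" idea concrete, here is a minimal C# sketch. IRecordStore, LocalFileStore, RemoteServiceStore and RecordStoreFactory are hypothetical names invented for illustration; they are not XPO types, and XPO's real IDataStore interface has different members. The sketch only shows the design: client code depends on an abstraction, and the connection setting decides whether a local database or a remote service sits behind it.

```csharp
using System;

// Hypothetical abstraction; NOT XPO's IDataStore, which has different members.
public interface IRecordStore
{
    string Describe();
}

// Hypothetical local implementation (for example an SQLite file next to the client).
public sealed class LocalFileStore : IRecordStore
{
    private readonly string _path;
    public LocalFileStore(string path) { _path = path; }
    public string Describe() { return "local database file " + _path; }
}

// Hypothetical remote implementation (a proxy for a data store service).
public sealed class RemoteServiceStore : IRecordStore
{
    private readonly Uri _endpoint;
    public RemoteServiceStore(Uri endpoint) { _endpoint = endpoint; }
    public string Describe() { return "data store service at " + _endpoint.AbsoluteUri; }
}

public static class RecordStoreFactory
{
    // A net.tcp or https address selects the remote proxy; anything else is
    // treated as the path of a local database file. Code that only uses
    // IRecordStore does not change either way.
    public static IRecordStore Create(string connection)
    {
        Uri uri;
        if (Uri.TryCreate(connection, UriKind.Absolute, out uri) &&
            (uri.Scheme == Uri.UriSchemeNetTcp || uri.Scheme == Uri.UriSchemeHttps))
        {
            return new RemoteServiceStore(uri);
        }
        return new LocalFileStore(connection);
    }
}
```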

At this point I had my first distributed scenario: the client connects to a WCF service that exposes the database backend through XPO's IDataStore interface. Using wsHttpBinding, I was able to secure the communication. This is another requirement of the internet (public) scenario: data must be encrypted when it travels over an untrusted network.

The application could now communicate securely over the internet, but the authentication mechanism was still the same, and it still lived in the client. The client contained some internal tables, added to the generated code, that enabled authentication via a simple login screen. Moving these tables behind the same WCF service did not really make the service secure: anyone with a compatible client using the IDataStore interface could access it, and the client still authenticated the user by querying the internal tables I had added to the generated code.

The internet scenario depicted in the second illustration shows the next step: separating authentication from application concerns. A separate WCF service is added, built with the same technology as the WCF service the application now uses. This new service implements the brokered authentication pattern using WIF (Windows Identity Foundation). Before accessing the application service, a client must contact this service to obtain a token. From now on, the WCF application service accepts only tokens issued by this brokered authentication service. To obtain the token, the client contacts this security token service (STS) and presents the credentials the user entered in the login screen.
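The trust relationship behind brokered authentication can be sketched in a few lines of C#. This is a toy illustration under stated assumptions, not WIF and not SAML: ToyTokenIssuer is a hypothetical class, a shared HMAC key stands in for the certificate-based signatures a real STS uses, and CryptographicOperations requires .NET Core 2.1 or later. The STS issues a signed token for a specific audience after checking the credentials; the application service accepts a request only if the signature verifies, the audience matches and the token has not expired.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Toy model of brokered authentication. NOT WIF and NOT SAML: a shared HMAC key
// stands in for the STS's certificate-based signature.
public sealed class ToyTokenIssuer
{
    private readonly byte[] _signingKey;

    public ToyTokenIssuer(byte[] signingKey)
    {
        _signingKey = signingKey ?? throw new ArgumentNullException(nameof(signingKey));
    }

    // STS side: call only after the user's credentials have been verified.
    public string Issue(string userName, string audience, DateTime expiresUtc)
    {
        if (userName.Contains("|") || audience.Contains("|"))
            throw new ArgumentException("'|' is reserved as the field separator.");
        string payload = userName + "|" + audience + "|" + expiresUtc.Ticks;
        return payload + "|" + Sign(payload);
    }

    // Service side: accept the token only if the signature verifies, it was issued
    // for this service (audience) and it has not yet expired.
    public bool TryValidate(string token, string expectedAudience, DateTime nowUtc, out string userName)
    {
        userName = null;
        string[] parts = token.Split('|');
        if (parts.Length != 4) return false;

        string payload = parts[0] + "|" + parts[1] + "|" + parts[2];
        byte[] expected = Encoding.ASCII.GetBytes(Sign(payload));
        byte[] actual = Encoding.ASCII.GetBytes(parts[3]);
        if (!CryptographicOperations.FixedTimeEquals(expected, actual)) return false; // tampered or foreign token

        if (parts[1] != expectedAudience) return false; // issued for another service

        long expiresTicks;
        if (!long.TryParse(parts[2], out expiresTicks) || nowUtc.Ticks > expiresTicks) return false; // expired

        userName = parts[0];
        return true;
    }

    private string Sign(string payload)
    {
        using (var hmac = new HMACSHA256(_signingKey))
        {
            return Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(payload)));
        }
    }
}
```

In the real setup the application service does not hold the STS's signing secret; it validates the token with the STS certificate's public key, which is why X.509 certificates rather than a shared key are used there.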

This leads to the next change in the template: the client no longer queries the internal user table for a user with that password. Instead, the password is stored in a .NET SecureString and later passed to the IDataStore client as login credentials. Internally, the WCF client then contacts the STS to obtain the token and, if the credentials are correct, contacts the desired WCF service, presenting the token for authentication.
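A minimal sketch of how typed login input could end up in a SecureString, assuming the login form hands over the password as a char array; the LoginSecrets class is hypothetical. The conversion back to a plain string uses the same Marshal calls as the convertToUNSecureString helper in the template.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Security;

public static class LoginSecrets
{
    // Copies the characters typed into the login screen into a SecureString and
    // wipes the caller's buffer, so the password is not kept as a System.String.
    public static SecureString ToSecureString(char[] typed)
    {
        var secure = new SecureString();
        foreach (char c in typed)
            secure.AppendChar(c);
        secure.MakeReadOnly();
        Array.Clear(typed, 0, typed.Length);
        return secure;
    }

    // Copies the secret to unmanaged memory, builds a managed string from it and
    // zeroes the unmanaged copy afterwards.
    public static string ToPlainString(SecureString secure)
    {
        IntPtr ptr = IntPtr.Zero;
        try
        {
            ptr = Marshal.SecureStringToGlobalAllocUnicode(secure);
            return Marshal.PtrToStringUni(ptr);
        }
        finally
        {
            if (ptr != IntPtr.Zero)
                Marshal.ZeroFreeGlobalAllocUnicode(ptr);
        }
    }
}
```

The plain string is unavoidable at the end, because WCF's ClientCredentials.UserName.Password property is a string; the SecureString only shortens the time the password lingers in memory.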

After the basic scenario was set up (code changes and WCF configuration to authenticate against the STS), I was able to make a first test deployment. At this point the services were implemented as Windows services, and I installed them manually using InstallUtil.

Writing the plain code prototype parts

As described in the previous section, the code was now nearly ready for templatization. All components were ready for deployment, and it was time to update the code generator templates wherever I had modified the prototype code by hand. Some cleanup was needed, as well as additions such as an MSI installer for the services, which increased the number of Visual Studio projects in my solution. I knew I had to make several changes to the existing code generator template and add new parts for the completely new projects (STS WCF service, application WCF service and the respective installer projects using WiX).

Templatize the parts

Here is the first snippet, which helped me a lot when changing the existing templates:

```xml
<Macro name="Templatize" Ctrl="yes" Alt="no" Shift="yes" Key="84">
    <!-- 1. Replace every & with &amp; (must run first, or the later entities would be escaped again) -->
    <Action type="3" message="1700" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1601" wParam="0" lParam="0" sParam="&amp;" />
    <Action type="3" message="1625" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1602" wParam="0" lParam="0" sParam="&amp;amp;" />
    <Action type="3" message="1702" wParam="0" lParam="768" sParam="" />
    <Action type="3" message="1701" wParam="0" lParam="1609" sParam="" />
    <!-- 2. Replace every < with &lt; -->
    <Action type="3" message="1700" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1601" wParam="0" lParam="0" sParam="&lt;" />
    <Action type="3" message="1625" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1602" wParam="0" lParam="0" sParam="&amp;lt;" />
    <Action type="3" message="1702" wParam="0" lParam="768" sParam="" />
    <Action type="3" message="1701" wParam="0" lParam="1609" sParam="" />
    <!-- 3. Replace every > with &gt; -->
    <Action type="3" message="1700" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1601" wParam="0" lParam="0" sParam="&gt;" />
    <Action type="3" message="1625" wParam="0" lParam="0" sParam="" />
    <Action type="3" message="1602" wParam="0" lParam="0" sParam="&amp;gt;" />
    <Action type="3" message="1702" wParam="0" lParam="768" sParam="" />
    <Action type="3" message="1701" wParam="0" lParam="1609" sParam="" />
</Macro>
```

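This snippet is a Notepad++ macro (it belongs in the Macros section of Notepad++'s shortcuts.xml) that converts pasted code into text that can be embedded in an XSLT template. It replaces the characters &, < and > with the corresponding XML entities so that they do not interfere with the XML markup. The ampersand is replaced first; otherwise the entities produced by the later replacements would be escaped a second time. The shortcut is Ctrl+Shift+T, for 'templatize' :-)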

Use this only on plain code, never inside an XSLT template; otherwise you also escape the template's own XML tags. My practice is to create a new document, paste the code there and press Ctrl+Shift+T, Ctrl+A, Ctrl+C; the code is then ready to be inserted into the template. This is very fast, and you cannot miss a character that would corrupt the template.
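For readers who do not use Notepad++, the same transformation can be written as a small C# helper. Templatizer.Templatize is a hypothetical name, not part of the generator; it escapes exactly the three characters the macro handles, in the same order.

```csharp
using System;

public static class Templatizer
{
    // Escapes the characters that would otherwise be parsed as XML markup when
    // plain code is pasted into an XSLT template. The ampersand is replaced
    // first so that the '&' of "&lt;" and "&gt;" is not escaped a second time.
    public static string Templatize(string code)
    {
        if (code == null) throw new ArgumentNullException(nameof(code));
        return code.Replace("&", "&amp;")
                   .Replace("<", "&lt;")
                   .Replace(">", "&gt;");
    }
}
```

Like the macro, this must only be applied to plain code, never to text that already contains XSLT tags.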

Include the templates in the existing one

Using the macro above in Notepad++, I was able to change the template code very quickly in all the places where the prototype code, originally generated from the previous version of the template, had been modified.

As an example, here is a snippet from one of the previous templates that required a change:

```
protected static bool _DALInitialized = false;

public static void InitDAL()
{
    if (_DALInitialized)
        return;

    _DALInitialized = true;

    try
    {
        <xsl:if test="$USE_XPO='yes'">
        DevExpress.Xpo.XpoDefault.DataLayer = DevExpress.Xpo.XpoDefault.GetDataLayer(
            <xsl:if test="$DB_ENGINE='postgresql'">
            DevExpress.Xpo.DB.PostgreSqlConnectionProvider.GetConnectionString("vmhost.behrens.de", "dba", "trainres", "<xsl:value-of select="$ApplicationName"/>DevExpress"),
            </xsl:if>
            <xsl:if test="$DB_ENGINE='access'">
            DevExpress.Xpo.DB.AccessConnectionProvider.GetConnectionString(Path.Combine(Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments), @".\<xsl:value-of select="$ApplicationName"/>.mdb")),
            </xsl:if>
            <xsl:if test="$DB_ENGINE='sqlite'">
            <xsl:if test="$ApplicationName='lbDMFManager'">
            DevExpress.Xpo.DB.SQLiteConnectionProvider.GetConnectionString(Path.Combine(@"C:\lbDMF", @"lbDMF.db3")),
            </xsl:if>
            <xsl:if test="$ApplicationName!='lbDMFManager'">
            DevExpress.Xpo.DB.SQLiteConnectionProvider.GetConnectionString(Path.Combine(Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments), @".\<xsl:value-of select="$ApplicationName"/>.db3")),
            </xsl:if>
            </xsl:if>
            DevExpress.Xpo.DB.AutoCreateOption.DatabaseAndSchema);
        </xsl:if>
    }
    catch (Exception e)
    {
        XtraMessageBox.Show("Something went wrong while connecting to the database '<xsl:value-of select="$ApplicationName"/>DevExpress': " + e.Message, "Error");
    }
}
```

The function is responsible for connecting to the database backend. Because I am using the XPO ORM, I was able to change the code in an isolated manner: the DevExpress developers did a great job of making it possible to move the DAL behind WCF by changing only connection-related code. The change introduces a flag that enables remote communication. In the following code, the template parameters $USE_DATASTORECLIENT and $SUPPORT_BROKERED_AUTH control the most important configurable parts. These parameters are passed into the template for easy feature selection, because I also wanted to be able to switch back to a purely local scenario. (Switching back has not been tested yet, but is planned.)

```
protected static bool _DALInitialized = false;

// Maps the DataStoreSecurityMode app setting to a WCF binding.
protected static Binding CreateBinding(string security_mode)
{
    Binding _binding;

    switch (security_mode)
    {
        <xsl:if test="$SUPPORT_BROKERED_AUTH='yes'">
        case "Brokered_Auth":
            // The binding configuration named "UsernameBinding" is read from app.config.
            CustomBinding bindingBA = new CustomBinding("UsernameBinding");
            _binding = bindingBA;
            break;
        </xsl:if>

        case "Transport":
            // TLS over TCP without client credentials; quotas raised for large result sets.
            NetTcpBinding bindingT = new NetTcpBinding(SecurityMode.Transport);
            bindingT.Security.Transport.ProtectionLevel = System.Net.Security.ProtectionLevel.EncryptAndSign;
            bindingT.Security.Transport.ClientCredentialType = TcpClientCredentialType.None;
            bindingT.MaxReceivedMessageSize = Int32.MaxValue;
            bindingT.ReaderQuotas.MaxArrayLength = Int32.MaxValue;
            bindingT.ReaderQuotas.MaxDepth = Int32.MaxValue;
            bindingT.ReaderQuotas.MaxBytesPerRead = Int32.MaxValue;
            bindingT.ReaderQuotas.MaxStringContentLength = Int32.MaxValue;
            _binding = bindingT;
            break;

        case "Message":
            // Message-level security; the transport setting is ignored in this mode.
            NetTcpBinding bindingM = new NetTcpBinding(SecurityMode.Message);
            bindingM.Security.Transport.ProtectionLevel = System.Net.Security.ProtectionLevel.EncryptAndSign;
            bindingM.Security.Message.ClientCredentialType = MessageCredentialType.None;
            _binding = bindingM;
            break;

        case "None":
            // Unsecured channel; only suitable for local testing.
            NetTcpBinding bindingN = new NetTcpBinding(SecurityMode.None);
            _binding = bindingN;
            break;

        default:
            // NetTcpBinding defaults to transport security.
            NetTcpBinding bindingD = new NetTcpBinding();
            _binding = bindingD;
            break;
    }

    return _binding;
}

// Maps the X509CertificateValidationMode app setting; missing or unknown values fall back to ChainTrust.
protected static X509CertificateValidationMode SetCertificateValidationMode(string X509CertificateValidationMode)
{
    X509CertificateValidationMode mode = System.ServiceModel.Security.X509CertificateValidationMode.ChainTrust;

    switch (X509CertificateValidationMode)
    {
        case "None":
            mode = System.ServiceModel.Security.X509CertificateValidationMode.None;
            break;
        case "PeerTrust":
            mode = System.ServiceModel.Security.X509CertificateValidationMode.PeerTrust;
            break;
        case "PeerOrChainTrust":
            mode = System.ServiceModel.Security.X509CertificateValidationMode.PeerOrChainTrust;
            break;
        case "ChainTrust":
            mode = System.ServiceModel.Security.X509CertificateValidationMode.ChainTrust;
            break;
    }

    return mode;
}

// WCF expects the password as a plain string; the unmanaged copy is zeroed afterwards.
protected string convertToUNSecureString(SecureString secstrPassword)
{
    IntPtr unmanagedString = IntPtr.Zero;
    try
    {
        unmanagedString = Marshal.SecureStringToGlobalAllocUnicode(secstrPassword);
        return Marshal.PtrToStringUni(unmanagedString);
    }
    finally
    {
        if (unmanagedString != IntPtr.Zero)
            Marshal.ZeroFreeGlobalAllocUnicode(unmanagedString);
    }
}

public bool InitDAL(string user, SecureString pass)
{
    if (_DALInitialized)
        return true;

    try
    {
        <xsl:if test="$USE_DATASTORECLIENT='yes'">
        // Endpoint and certificate settings are read from appSettings in app.config.
        string host = ConfigurationManager.AppSettings.Get("DataStoreHost");
        string port = ConfigurationManager.AppSettings.Get("DataStorePort");
        string security_mode = ConfigurationManager.AppSettings.Get("DataStoreSecurityMode");
        string X509CertificateValidationMode = ConfigurationManager.AppSettings.Get("X509CertificateValidationMode");
        string LocalMachine_My_BySubject_ClientCertificate = ConfigurationManager.AppSettings.Get("LocalMachine_My_BySubject_ClientCertificate");
        string LocalMachine_My_BySubject_ServerCertificate = ConfigurationManager.AppSettings.Get("LocalMachine_My_BySubject_ServerCertificate");
        string DnsIdentity = ConfigurationManager.AppSettings.Get("DnsIdentity");

        string auxPath = "net.tcp://" + host + ":" + port + "/<xsl:value-of select="$ApplicationName"/>Service";
        Binding binding = CreateBinding(security_mode);

        EndpointAddress addr;
        if (!string.IsNullOrEmpty(DnsIdentity))
        {
            addr = new EndpointAddress(new Uri(auxPath), EndpointIdentity.CreateDnsIdentity(DnsIdentity));
        }
        else
        {
            addr = new EndpointAddress(new Uri(auxPath));
        }

        DevExpress.Xpo.DB.DataStoreClient dataLayer;
        if (security_mode == "Brokered_Auth")
        {
            // The federated endpoint (binding and STS issuer) is defined in app.config.
            dataLayer = new DevExpress.Xpo.DB.DataStoreClient("<xsl:value-of select="$ApplicationName"/>Endpoint");
        }
        else
        {
            dataLayer = new DevExpress.Xpo.DB.DataStoreClient(binding, addr);
        }

        string password = convertToUNSecureString(pass);
        InMemoryIssuedTokenCache cache = new InMemoryIssuedTokenCache();
        dataLayer.ClientCredentials.ServiceCertificate.Authentication.CertificateValidationMode = SetCertificateValidationMode(X509CertificateValidationMode);

        <xsl:if test="$SUPPORT_BROKERED_AUTH='yes'">
        if (security_mode == "Brokered_Auth")
        {
            // Replace the default ClientCredentials behavior with one that caches tokens issued by the STS.
            // The generic argument is entity-escaped because this line lives inside an XSLT template.
            DurableIssuedTokenClientCredentials durableCreds = new DurableIssuedTokenClientCredentials();
            durableCreds.IssuedTokenCache = cache;
            dataLayer.ChannelFactory.Endpoint.Behaviors.Remove&lt;ClientCredentials&gt;();
            dataLayer.ChannelFactory.Endpoint.Behaviors.Add(durableCreds);
            dataLayer.ClientCredentials.ServiceCertificate.Authentication.CertificateValidationMode = SetCertificateValidationMode(X509CertificateValidationMode);

            if (!string.IsNullOrEmpty(LocalMachine_My_BySubject_ServerCertificate))
                dataLayer.ClientCredentials.ServiceCertificate.SetDefaultCertificate(StoreLocation.LocalMachine, StoreName.My, X509FindType.FindBySubjectName, LocalMachine_My_BySubject_ServerCertificate);

            if (!string.IsNullOrEmpty(LocalMachine_My_BySubject_ClientCertificate))
                dataLayer.ClientCredentials.ClientCertificate.SetCertificate(StoreLocation.LocalMachine, StoreName.My, X509FindType.FindBySubjectName, LocalMachine_My_BySubject_ClientCertificate);

            // These credentials are sent to the STS, not to the application service.
            dataLayer.ChannelFactory.Credentials.UserName.UserName = user;
            dataLayer.ChannelFactory.Credentials.UserName.Password = password;
        }
        </xsl:if>

        DevExpress.Xpo.SimpleDataLayer dtLayer = new DevExpress.Xpo.SimpleDataLayer(dataLayer);
        DevExpress.Xpo.XpoDefault.DataLayer = dtLayer;

        if (security_mode == "Brokered_Auth")
        {
            try
            {
                // Cheap round trip that forces the STS login now, so wrong credentials fail here.
                DevExpress.Xpo.UnitOfWork uow = new DevExpress.Xpo.UnitOfWork();
                uow.ExplicitBeginTransaction();
                uow.ExplicitRollbackTransaction();
                password = "";
            }
            catch (MessageSecurityException secex)
            {
                // Report the most specific reason and do not mark the DAL as initialized.
                string reason = secex.InnerException != null ? secex.InnerException.Message : secex.Message;
                XtraMessageBox.Show("Something went wrong while connecting to the database '<xsl:value-of select="$ApplicationName"/>': " + reason, "Error");
                return false;
            }
        }
        </xsl:if>

        <xsl:if test="$USE_DATASTORECLIENT!='yes'">
        <xsl:if test="$USE_XPO='yes'">
        // Local scenario: connect directly to the configured database engine.
        DevExpress.Xpo.XpoDefault.DataLayer = DevExpress.Xpo.XpoDefault.GetDataLayer(
            <xsl:if test="$DB_ENGINE='postgresql'">
            DevExpress.Xpo.DB.PostgreSqlConnectionProvider.GetConnectionString("vmhost.behrens.de", "dba", "trainres", "<xsl:value-of select="$ApplicationName"/>"),
            </xsl:if>
            <xsl:if test="$DB_ENGINE='access'">
            DevExpress.Xpo.DB.AccessConnectionProvider.GetConnectionString(Path.Combine(Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments), @".\<xsl:value-of select="$ApplicationName"/>.mdb")),
            </xsl:if>
            <xsl:if test="$DB_ENGINE='sqlite'">
            <xsl:if test="$ApplicationName='lbDMFManager'">
            DevExpress.Xpo.DB.SQLiteConnectionProvider.GetConnectionString(Path.Combine(@"C:\lbDMF", @"lbDMF.db3")),
            </xsl:if>
            <xsl:if test="$ApplicationName!='lbDMFManager'">
            DevExpress.Xpo.DB.SQLiteConnectionProvider.GetConnectionString(Path.Combine(Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments), @".\<xsl:value-of select="$ApplicationName"/>.db3")),
            </xsl:if>
            </xsl:if>
            DevExpress.Xpo.DB.AutoCreateOption.DatabaseAndSchema);
        </xsl:if>
        </xsl:if>
    }
    catch (Exception e)
    {
        XtraMessageBox.Show("Something went wrong while connecting to the database '<xsl:value-of select="$ApplicationName"/>': " + e.Message, "Error");
        return false;
    }

    _DALInitialized = true;
    return true;
}
```
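The switch in SetCertificateValidationMode can also be written with Enum.TryParse. The sketch below uses a hypothetical enum, CertValidationMode, that mirrors the four values the template handles, so it compiles without a System.ServiceModel reference; with WCF referenced, you would parse into System.ServiceModel.Security.X509CertificateValidationMode directly (whose additional Custom member the template does not handle).

```csharp
using System;

// Hypothetical mirror of the four X509CertificateValidationMode values the
// template's switch handles (the real WCF enum also has Custom).
public enum CertValidationMode { None, PeerTrust, ChainTrust, PeerOrChainTrust }

public static class ConfigParsing
{
    // Parses the appSettings value by name (case-insensitive) and falls back to
    // ChainTrust, like the template's switch, for missing or unknown values.
    public static CertValidationMode ParseValidationMode(string configured)
    {
        CertValidationMode mode;
        if (!string.IsNullOrWhiteSpace(configured) &&
            Enum.TryParse(configured.Trim(), true, out mode) &&
            Enum.IsDefined(typeof(CertValidationMode), mode)) // rejects undefined numbers such as "42"
        {
            return mode;
        }
        return CertValidationMode.ChainTrust;
    }
}
```

Unlike the original switch, this version ignores case and surrounding whitespace. The Enum.IsDefined check matters because Enum.TryParse also accepts numeric strings that do not correspond to any named member.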

Other parts of the template were changed in the same way, by inspecting the changes in the prototype code that were needed to get everything running in the internet scenario. I suggest using a version control system to store the code versions; it makes the comparison much easier.

There were also many new files in the project, which required an overhaul of the complete template to integrate the new XSLT files into the generation process. I won't put all of them into this article, because it would simply be too much :-)

Parameterize WCF endpoints and certificates

What matters most to the reader is the configuration of where the services run. You will not have access to my cloud environment, so you need to make some changes both in the template and in the application models. The changes in the template are mostly static and relate to the certificates used to secure the communication channel; you need to provide your own.

Changing the certificate thumbprints in the template is an exercise for you :-)

The other part is the application configuration, which is stored as UML parameters in the UML package that contains the entity classes (effectively the innermost package). The following parameters are required:

- An MSI upgrade code for the application product; effectively a UUID, which you can generate within Visual Studio.

- An MSI product code for the application product. Both codes are required for the WCF application service, not for the client, which still does not have an installer.

- A version, also required for the MSI.

- The WCF address of the data store backend, the service that provides the data for the application.

- The WCF address of the STS backend, which the client contacts to obtain a token.
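If you prefer not to generate the MSI codes within Visual Studio, a small C# helper like the following sketch can produce them and sanity-check the version string. The MsiParameters class is hypothetical; the limits checked are Windows Installer's rules for ProductVersion (major and minor at most 255, build at most 65535, a fourth field allowed but ignored).

```csharp
using System;

public static class MsiParameters
{
    // Produces a GUID in the upper-case, brace-wrapped form commonly used for
    // Windows Installer UpgradeCode and ProductCode values.
    public static string NewMsiGuid()
    {
        return Guid.NewGuid().ToString("B").ToUpperInvariant();
    }

    // Windows Installer compares only major.minor.build: major and minor must be
    // at most 255 and build at most 65535. A fourth field is allowed but ignored.
    public static bool IsValidProductVersion(string value)
    {
        Version v;
        if (!Version.TryParse(value, out v)) return false;
        return v.Major <= 255 && v.Minor <= 255 && v.Build <= 65535;
    }
}
```

Because the fourth field is ignored, a release such as 1.0.4.1 is not detected as newer than 1.0.4.0 when upgrading; increment one of the first three fields instead.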

For reference, here are the configuration names and example values:

```
UpgradeCode = 48dc066c-<snip>
ProductCode = 0BB62EC4-<snip>
ProductVersion = 1.0.4.0
DataStoreBaseAddress = net.tcp://cloud.yourserver.de:<port>
StsBaseAddress = net.tcp://cloud.yourserver.de:<port>
```
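The base addresses are plain net.tcp URIs, so combining one with a service name is safer with System.Uri than with string concatenation. A minimal sketch, assuming the service path is the application name followed by "Service", as in the InitDAL template; EndpointAddresses.ServiceUri is a hypothetical helper, and port 8000 in the usage is only an example value.

```csharp
using System;

public static class EndpointAddresses
{
    // Combines a configured base address (e.g. DataStoreBaseAddress) with a
    // service name; the "Service" suffix mirrors the InitDAL template (assumption).
    public static Uri ServiceUri(string baseAddress, string applicationName)
    {
        Uri baseUri;
        if (!Uri.TryCreate(baseAddress, UriKind.Absolute, out baseUri) ||
            baseUri.Scheme != Uri.UriSchemeNetTcp)
        {
            throw new ArgumentException("Expected an absolute net.tcp:// address.", nameof(baseAddress));
        }

        // Without a trailing slash, a relative name would replace the last path
        // segment of the base address instead of being appended to it.
        if (!baseUri.AbsoluteUri.EndsWith("/"))
            baseUri = new Uri(baseUri.AbsoluteUri + "/");

        return new Uri(baseUri, applicationName + "Service");
    }
}
```

Validating the scheme up front turns a typo in the UML parameters into a clear error at startup rather than a connection failure later.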

Modify STS template service

I would like to explain briefly how I created the STS backend service. It is based on an article by Pablo M. Cibraro: http://weblogs.asp.net/cibrax/federation-over-tcp-with-wcf. The sample code he provided is a simple console application demonstrating NetTcpBinding in a federated scenario. After inspecting the code and several trials, I got my own version working, with a Windows service hosting the WCF STS service. This became the basis for templatizing the component.

Note: The net.tcp binding scenario involved some extra work and complexity that I had to understand, but basing my implementation on Pablo's work was worth it. Its binary encoding has a much smaller payload overhead than the HTTP bindings, which reduces traffic and increases speed. Be aware, however, that this binding works only between .NET (WCF) endpoints; since the client is written in .NET, this was not a problem for me.

Once my STS service was up and running with the proof-of-concept (POC) code, I needed to turn it into a really usable component. The template was created from the basic POC scenario and only needed to be modified. The required code was taken from its original location in the client template and placed into the STS template, where the STS logic can access it. Effectively, the entity class 'SecurityUsers' was copied and the template modified accordingly. The next step was to implement a real authentication mechanism: I replaced the code containing a mock user account with logic that looks up the user of the login attempt and checks the password provided. This logic lives in a class that the WCF configuration hooks in at the correct place. More code had to change, and the schema also needed to be extended to replace the hardcoded services listed in the audience URI configuration (app.config) and in the SAML creation logic. It was time to create a new UML model.
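A minimal sketch of such a credential check, under assumptions the article does not state: UserRecord is a hypothetical stand-in for a SecurityUsers row, and passwords are stored as salted PBKDF2 hashes rather than in plain text (CryptographicOperations requires .NET Core 2.1 or later). In WCF, a check like Validate would typically be called from a class derived from System.IdentityModel.Selectors.UserNamePasswordValidator that the service configuration registers.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;

// Hypothetical stand-in for a row of the SecurityUsers table.
public sealed class UserRecord
{
    public string UserName;
    public byte[] Salt;
    public byte[] PasswordHash;
}

public static class CredentialCheck
{
    private const int Iterations = 100_000;

    // Creates a record with a random salt and the derived password hash.
    public static UserRecord CreateUser(string userName, string password)
    {
        byte[] salt = new byte[16];
        using (var rng = RandomNumberGenerator.Create())
            rng.GetBytes(salt);
        return new UserRecord { UserName = userName, Salt = salt, PasswordHash = Hash(password, salt) };
    }

    // Looks up the user and compares the hashes in constant time; unknown users
    // and wrong passwords both simply return false.
    public static bool Validate(IEnumerable<UserRecord> users, string userName, string password)
    {
        foreach (UserRecord u in users)
        {
            if (string.Equals(u.UserName, userName, StringComparison.OrdinalIgnoreCase))
                return CryptographicOperations.FixedTimeEquals(u.PasswordHash, Hash(password, u.Salt));
        }
        return false;
    }

    private static byte[] Hash(string password, byte[] salt)
    {
        using (var kdf = new Rfc2898DeriveBytes(password, salt, Iterations, HashAlgorithmName.SHA256))
            return kdf.GetBytes(32);
    }
}
```

Storing only salt and hash means a leaked STS database does not directly reveal the users' passwords.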

Create a UML model for the STS backend schema

To get this done quickly, I copied over the entity classes of the client-based authentication mechanism and changed the relevant code to read the configured values from the database instead of app.config. This gave me a login feature that uses the SecurityUsers table in the STS service.

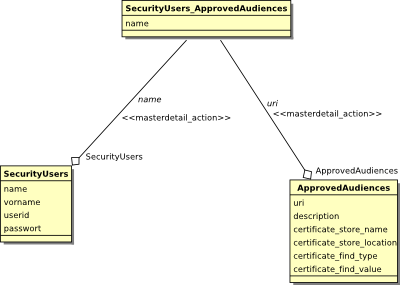

Then I created an UML model that will be used to configure the remaining parts that yet has been made hardcoded. The database schema of the required tables looks like this here:

The UML model serves two purposes: the STS service and its management application. By generating the management application first, I was able to reuse more of the generated code.

After the code for the new management client had been generated, I took over the remaining entity classes into the STS service. I then replaced the relevant code so that the SAML tokens are generated based on the configuration in the database. The list of audience URIs is also created from the configuration tables.
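Generating the audience URIs from the configuration tables suggests a check along these lines before a token is issued. The AudienceCheck class is hypothetical, and the list of strings stands in for the rows read from the database; the point is only that the address the client requests a token for (its AppliesTo) must match one of the configured audiences.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class AudienceCheck
{
    // True only if the requested AppliesTo address matches one of the audience
    // URIs configured in the database; malformed rows are ignored.
    public static bool IsAllowed(Uri appliesTo, IEnumerable<string> configuredAudiences)
    {
        return configuredAudiences.Any(a =>
        {
            Uri audience;
            return Uri.TryCreate(a, UriKind.Absolute, out audience) && audience == appliesTo;
        });
    }
}
```

Comparing parsed Uri instances rather than raw strings avoids false mismatches caused by differences the URI syntax treats as equivalent, such as host name casing.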

Even though the STS service would only need to be written once, I decided to put it into a template, because most of its code can be regenerated if I need more tables to build STS functionality on. I think managing the template is not much more work than managing a cross-cutting library that includes a product (the STS service plus its management application). This is a point one could argue against my decision.

Conclusion

Using code generation techniques speeds up development. It helped me develop parts of the code one would usually write manually. Although the STS service prototype was written manually (based on samples), it was later enhanced with generated code. So it is indeed possible to use model-driven development methodologies in service-oriented environments. A 100% generation approach draws some criticism, because it also generates code that would not need to be generated, but in prototyping it helps you create a standalone solution quickly.

Using the code

The code for this article is part of my software release and can be downloaded from:

http://lbdmf.sourceforge.net/

The download packages are as follows:

The prototyping application: http://sourceforge.net/projects/lbdmf/files/lbdmf/lbDMF-1.0.4.2-final/lbDMF-BinSamples-1.0.4.2-final.exe/download

The code generator template: http://sourceforge.net/projects/lbdmf/files/lbdmf/lbDMF-1.0.4.4-final/lbDMF-CAB-DevExpress-Generator-Compilation-1.0.4.4-final.exe/download

Documentation on how to use BoUML or ArgoUML to create the UML models can be downloaded here:

http://sourceforge.net/projects/lbdmf/files/lbdmf/lbDMF-1.0.4.2-final/ApplicationprototypingDokumentation.pdf/download (To be updated later)

The prototyping application contains a UML model for the STSManagement application and an XMI model that can be imported into ArgoUML, so you can generate the required code and set up your own environment.

Note: You need an evaluation version of XPO and the WinForms library from DevExpress, as well as Visual Studio 2010 or newer. I have not tested SharpDevelop, so I cannot tell whether it works.

Points of Interest

Are there any open issues? Yes: the code does not yet implement authorization, an important feature that real applications need. More research is required to implement a practically usable mechanism, because I am using XPO and still need to investigate how to implement authorization with that library.

There is also no code yet for federation between services and more than one STS, so I have something left for the future.

History

Corrected images. They were not displayed correctly.

Initial release.