Introduction

This article presents a tool, named SiteMapper, that produces Google site maps. The first part of this article is a User Manual for the tool; the second describes its implementation.


User Manual

Although usually directed to start at a web site's root (usually index.htm or default.html), SiteMapper can start at any page in a web site. This starting page is referred to as the topmost file.

If the topmost file is a descendant of the web site's root, SiteMapper traverses the site from that point downward. However, SiteMapper may also traverse web pages above the topmost file. This can occur if some page refers to another page higher in the web site's "tree".

For example, most well-designed web sites allow a reader to click on some image (usually in the upper left of the page) to return to the web site's home page. SiteMapper follows such a link and continues its traversal downward from the home page, which could cause an endless recursion. A built-in mechanism avoids this: when a page is revisited, it is marked as a Duplicate Link and further downward traversal from that page is inhibited.

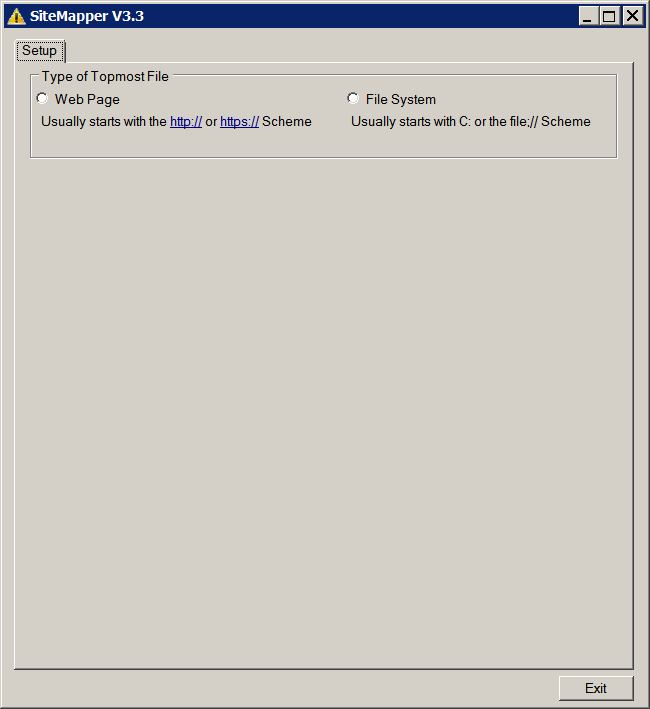

Type of the Topmost File

When SiteMapper first executes, the Type of Topmost File GroupBox opens and the user must choose the type of topmost file that the tool will access: web page or local file system. The distinction is required to ensure that the correct Scheme is supplied in the Uri. The topmost file may be on the local file system or on a site on the web.
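To make the role of the scheme concrete, here is a minimal sketch of how the two kinds of topmost file can be told apart from a Uri. The class and method names are hypothetical and are not taken from SiteMapper; only the System.Uri members are real.

```csharp
using System;

public static class TopmostFileSketch
{
    // Hypothetical helper: classifies a topmost file by the scheme of its Uri.
    public static string Describe ( string topmost )
    {
        Uri uri = new Uri ( topmost );

        if ( uri.Scheme == Uri.UriSchemeFile )
        {
            return "local file system";
        }
        if ( ( uri.Scheme == Uri.UriSchemeHttp ) ||
             ( uri.Scheme == Uri.UriSchemeHttps ) )
        {
            return "web page";
        }
        return "unsupported scheme: " + uri.Scheme;
    }
}
```

Calling Describe with "file:///C:/site/index.html" yields "local file system", while "http://example.com/index.html" yields "web page".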

Name of the Topmost File

When a RadioButton in the Type of Topmost File GroupBox is checked, either the File Name or Web Page GroupBox opens. In addition, the Site Map Options, Tree Reporting Options, and Traversal Limits GroupBoxes and the Prepare Site Map Button appear.

If the file system option was chosen, the user must supply the name of the topmost file, located on the local computer. The user may either type the filename directly in the TextBox or click the Browse button. If the Browse button is clicked, an open file DialogBox is presented.

If the web page option was chosen, the user must enter the URL of the topmost page, located on the web.

Warning

Although the tool will work against any web site, choosing a web site such as "www.google.com" or "codeproject.com" will probably cause an exception because memory was exceeded. This tool was not developed to support very large web sites, although it could be modified to do so. The traversal limit control limits the number of pages retrieved and thus should avoid such an exception.

Whichever topmost file option is chosen, once the name has been entered and while the cursor is still in the TextBox, the Enter key may be pressed to start traversal. Note that the options used during traversal will be those selected at the time that the Enter key was pressed. This shortcut is an alternative to clicking the Prepare Site Map Button.

Site Map Options

Site map options control what is contained in the site map. Using the default site map options will produce the simplest form of a site map accepted by Google. Using the test web site contained within the SiteMapper project, the default site map options will produce the following:

    <?xml version="1.0" encoding="utf-16"?>
    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/a.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/c.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/g.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/d.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/h.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/j.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/i.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/e.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/f.html</loc>
      </url>
      <url>
        <loc>file:///C:/Gustafson/Utilities_2014/SiteMapper/TestWebSite/b.html</loc>
      </url>
    </urlset>

The description of the site map tags, provided by Google, is:

| Tag | Required? | Description |
| --- | --- | --- |
| `<urlset>` | Required | Encloses all information about the set of URLs included in the Sitemap. |
| `<url>` | Required | Encloses all information about a specific URL. |
| `<loc>` | Required | Specifies the URL. For images and video, specifies the landing page (aka play page, referrer page). Must be a unique URL. |
| `<lastmod>` | Optional | The date the URL was last modified, in YYYY-MM-DDThh:mmTZD format (time value is optional). |
| `<changefreq>` | Optional | Provides a hint about how frequently the page is likely to change. Valid values are: always (use for pages that change every time they are accessed), hourly, daily, weekly, monthly, yearly, never (use this value for archived URLs). |
| `<priority>` | Optional | Describes the priority of a URL relative to all the other URLs on the site. This priority can range from 1.0 (extremely important) to 0.1 (not important at all). Does not affect your site's ranking in Google search results. Because this value is relative to other pages on your site, assigning a high priority (or specifying the same priority for all URLs) will not help your site's search ranking. In addition, setting all pages to the same priority will have no effect. |

If a SiteMapper user wants one or more of the optional site map tags (i.e., `<lastmod>`, `<changefreq>`, or `<priority>`), the user checks the appropriate box. When a box is checked, additional information will be solicited.

Using the same test web site as above, a site map that contains all of the site map tags is depicted in the figure to the left.

The resulting site map may be copied (Ctrl-A, Ctrl-C, Ctrl-V) or it may be saved to a file by clicking on the Save Site Map Button. If that button is clicked, a Save As DialogBox will open.

The order in which the pages (Uris) are presented is the order in which they were encountered during the traversal.
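As an illustration of the site map layout described above, the following sketch builds a `<urlset>` with System.Xml.Linq, writing the optional tags only when a value is supplied. It is not SiteMapper's own generator: the class name, method signature, UTF-8 declaration, and the choice to apply the same optional values to every URL are assumptions made for brevity.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Xml.Linq;

public static class SiteMapSketch
{
    static readonly XNamespace Ns =
        "http://www.sitemaps.org/schemas/sitemap/0.9";

    // Builds a <urlset>; pages are written in the order in which they are
    // supplied. Optional tags are emitted only when a value is given.
    public static XDocument Build ( IEnumerable<string> page_uris,
                                    DateTime?           last_modified,
                                    string              change_frequency,
                                    string              priority )
    {
        XElement urlset = new XElement ( Ns + "urlset" );

        foreach ( string page_uri in page_uris )
        {
            XElement url = new XElement ( Ns + "url",
                               new XElement ( Ns + "loc", page_uri ) );

            if ( last_modified.HasValue )
            {
                url.Add ( new XElement ( Ns + "lastmod",
                              last_modified.Value.ToString (
                                  "yyyy-MM-dd",
                                  CultureInfo.InvariantCulture ) ) );
            }
            if ( !String.IsNullOrEmpty ( change_frequency ) )
            {
                url.Add ( new XElement ( Ns + "changefreq",
                                         change_frequency ) );
            }
            if ( !String.IsNullOrEmpty ( priority ) )
            {
                url.Add ( new XElement ( Ns + "priority", priority ) );
            }
            urlset.Add ( url );
        }
        return new XDocument ( new XDeclaration ( "1.0", "utf-8", null ),
                               urlset );
    }
}
```

Passing null for all three optional arguments produces the simplest form of the site map, containing only `<loc>` entries.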

Tree Reporting Options

Tree Reporting Options control what is contained in the site tree, an interactive tree that shows the structure of the site being mapped.

The tree looks like a TreeView but with an additional expand/collapse symbol to the right of the page name. The expand/collapse symbol appears if one or more of the content CheckBoxes (CSS, Icons, Images, or JavaScript) was checked and that type of content is found in the page.

The user may specify whether or not Broken Links and/or External Pages are to be reported. A broken link is a reference that cannot be retrieved. An external page is one that does not have the topmost page as its base. IsBaseOf is used to determine if a Uri is the base of another Uri.

Regardless of these settings, neither broken links nor external pages will be included in the site map. However, if checked, the site tree will display these pages.
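The external-page test can be sketched in a few lines. The helper below is hypothetical (only Uri.IsBaseOf is a real framework method) and the example.com addresses are placeholders.

```csharp
using System;

public static class ExternalPageSketch
{
    // A page is treated as external when the topmost Uri is not its base.
    public static bool IsExternal ( Uri topmost, Uri page )
    {
        return !topmost.IsBaseOf ( page );
    }
}
```

IsBaseOf ignores whatever follows the last slash of the base, so with a topmost file of "http://example.com/site/index.html", the page "http://example.com/site/docs/a.html" is internal, whereas "http://example.com/other/b.html" and any page on a different host are external.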

An example of a Site Tree, generated using the default site tree options and expanding all children, is depicted in the figure to the left.

The user may specify that the site tree display Duplicate Links. These links occur when a single page is linked to by more than one page on the site.

An example of an expanded Site Tree, generated using all of the site tree options and expanding all children, is depicted in the figure to the right.

When the expand/collapse symbol is clicked, the files included by the web page are displayed. These inclusions are:

- CSS files
- Icon files
- Image files
- JavaScript files

During web page examination, the included files are recorded for each content type whose CheckBox is checked. If there are no file inclusions within the web page or if no CheckBoxes were checked, no expand/collapse symbol will appear.

An example of an expanded site tree, generated using all of the site tree options and expanding all children content, is depicted in the figure to the left.

Traversal Limits

To ensure that the traversal of a very large web site is bounded, the user may specify a value in the Maximum Number of Web Pages NumericUpDown control. When the number of web pages recorded reaches the value in that control, traversal terminates. The information collected up to that point can be displayed.

Prepare Site Map

When all of the setup options meet the user's needs, the Prepare Site Map Button is clicked. At that time, the actual web site traversal starts and the Status GroupBox is displayed.

Progress is reported by displaying the web page currently being retrieved as well as a numeric count of the web pages processed so far. This is displayed in the figure to the left, above. Note that there is a delay between clicking the Prepare Site Map Button and the first reported activity. After the first web page has been retrieved, the following pages are retrieved more quickly.

When the web site has been traversed, the status is reported as Site Map Prepared. In addition, two TabPages appear at the top of the SiteMapper Form. This is displayed in the figure to the right, above.

Additional Considerations

When the site tree is displayed, web page links may be presented in any one of five colors.

| Color | Meaning |
| --- | --- |
| Black | Normal web page link. |
| Dodger Blue | Duplicate web page link. |
| Red | A broken link encountered during the traversal. |
| Green | An external web page link (i.e., one that is not a descendant of the topmost file). |
| Dark Violet | Follow web page link. When the label of a link is clicked, the color changes from Black to Dark Violet. When the label is colored Dark Violet, a second click will cause the web page to be displayed in a separate window. |

Implementation

SiteMapper is basically a web crawler that records web pages. Depending upon the user's choice of options, SiteMapper records all of the links contained within them. This section discusses the SiteMapper implementation. It first describes the data structures that are key to recording the structure of the web site, and then the algorithms that perform the traversal and reporting.

Data Structures


BrotherTree

The single most important data structure used in SiteMapper is a BrotherTree. The BrotherTree is made up of BrotherNodes, each one pointing to its parent, children, and siblings (right-brothers).

The BrotherTree for the test web site, used earlier, is depicted in the figure to the left.

With the exception of the root (the top-left node), all nodes have a parent. Although all nodes have a left-brother link, it is not used in SiteMapper.

BrotherNode

As mentioned above, the BrotherTree is made up of BrotherNodes. These nodes contain links to other nodes as well as information about the web page that the node represents.

The following table defines each of the BrotherNode members.

| Name | Purpose |
| --- | --- |
| Node Uri | The Uri of the web page |
| Link Text | The text in the link for the web page |
| Parent | A link to the BrotherNode that references this page |
| Right Brother | A link to a BrotherNode, discovered later in the traversal, that is a sibling of this node (i.e., referenced by this node's parent) |
| Left Brother | A link to a BrotherNode, discovered earlier in the traversal, that is a sibling of this node (i.e., referenced by this node's parent) |
| Child | A link to the BrotherNode that is a child of this node |
| CSS | List of the CSS files referenced by the web page |
| Icon | List of the Icon files referenced by the web page |
| Image | List of the image files referenced by the web page |
| JavaScript | List of the JavaScript files referenced by the web page |
| Broken | true, if the web page cannot be retrieved; false, otherwise |
| Duplicate | true, if the web page is a duplicate reference; false, otherwise |
| External | true, if the web page is an external reference; false, otherwise |
| Depth | Indicates the depth of the web page in the BrotherTree |
| Follow Link | true, if the web page is to be followed if the user clicks a second time on the displayed node label; false, otherwise |
| Display Children | true, if the children of this node are to be displayed; false, otherwise |
| Display Content | true, if the contents (lists of CSS, Icon, Image, and JavaScript files) of this node are to be displayed; false, otherwise |

BrotherNodes are added to the BrotherTree with the following method.

    public void add_node ( BrotherNode parent,
                           BrotherNode new_node )
    {
        if ( new_node == null )
        {
            throw new ArgumentException ( @"may not be null",
                                          @"new_node" );
        }
        else if ( Root == null )
        {
            // the first node added becomes the root
            Root = new_node;
        }
        else if ( parent == null )
        {
            throw new ArgumentException ( @"may not be null",
                                          @"parent" );
        }
        else
        {
            new_node.Parent = parent;
            if ( parent.Child == null )
            {
                // first child of this parent
                parent.Child = new_node;
            }
            else
            {
                // walk to the rightmost sibling and append
                BrotherNode node = parent.Child;
                BrotherNode next_node = node.RightBrother;

                while ( next_node != null )
                {
                    node = next_node;
                    next_node = next_node.RightBrother;
                }
                node.RightBrother = new_node;
                new_node.LeftBrother = node;
                new_node.Child = null;
            }
        }
        node_count++;
    }

Note that although a LeftBrother link is included in the BrotherNode, SiteMapper does not currently use it.
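Because every node carries only a Child link and a RightBrother link, the whole tree can be walked without recursion. The sketch below uses a pared-down, hypothetical node class (SketchNode) rather than the real BrotherNode, and shows a pre-order walk of the kind a site map or tree display might need; it is not taken from the SiteMapper source.

```csharp
using System.Collections.Generic;

// Hypothetical, pared-down node: only the links needed for a walk.
public class SketchNode
{
    public string     Name;
    public SketchNode Child;         // first child
    public SketchNode RightBrother;  // next sibling

    public SketchNode ( string name )
    {
        Name = name;
    }
}

public static class BrotherTreeWalkSketch
{
    // Pre-order walk: a node, then its children, then its right brothers.
    public static List<string> PreOrder ( SketchNode root )
    {
        List<string>      names = new List<string> ( );
        Stack<SketchNode> pending = new Stack<SketchNode> ( );

        if ( root != null )
        {
            pending.Push ( root );
        }
        while ( pending.Count > 0 )
        {
            SketchNode node = pending.Pop ( );

            names.Add ( node.Name );
            // Push the brother first so that the child is popped before it.
            if ( node.RightBrother != null )
            {
                pending.Push ( node.RightBrother );
            }
            if ( node.Child != null )
            {
                pending.Push ( node.Child );
            }
        }
        return names;
    }
}
```

For a root a with children b and c, where b has a child d, the walk visits a, b, d, c.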

List Head and Node

Each BrotherNode has four lists associated with it. Each list contains included files (i.e., CSS, Icon, Image, and JavaScript) that are associated with the web page.

| Content | How it is detected |
| --- | --- |
| CSS | Cascading Style Sheet inclusions are detected when the following link tag is encountered and are recorded in the list with the ListType of CSS: `<link rel="stylesheet" ... href="?.css" />` |
| Icon | Icons are detected when the following link tag is encountered and are recorded in the list with the ListType of ICON: `<link ... rel="[shortcut ]icon" href="?.ico" />` |
| Image | Images are detected when the following img tag is encountered and are recorded in the list with the ListType of IMAGE: `<img src="?" alt="?" ... />` |
| JavaScript | JavaScript sources are detected when the following script tag is encountered and are recorded in the list with the ListType of JAVASCRIPT: `<script ... src="?.js"></script>` |

Algorithms

Probably the most important algorithm is the one that traverses the web site pages. However, to understand how everything fits together, it may be useful to understand the overall working of SiteMapper.

SiteMapper Overview

SiteMapper execution can be divided into six distinct steps.

| Step | Name | Description |
| --- | --- | --- |
| 1 | Initialize SiteMapper | Housekeeping functions are performed before continuing execution. These tasks include establishing event handlers and initializing the GUI (initialize all three TabPages and display the Setup TabPage, limiting the display to the Type of the Topmost File GroupBox). |
| 2 | Collect user input | User interaction with SiteMapper is accomplished through the raising and processing of events. As a user checks a RadioButton or a CheckBox, presses a Button, chooses an item from a ComboBox, or sets a value in a NumericUpDown, SiteMapper records the user's input. User input is saved in the ApplicationState Class so that it may be accessed from any of the SiteMapper Classes. |
| 3 | Verify user input | The design of the GUI is such that, with the exception of the topmost file name TextBox, all user interaction is automatically validated. To simplify the code, event handlers that collect the state of each type of control are defined: BUT_Click for all Buttons, CHKBX_CheckedChanged for all CheckBoxes, and RB_CheckedChanged for all RadioButtons. There are some exceptions but, as the name implies, they are exceptions. The characters in web page names that are entered into the web page topmost file TextBox are not verified (i.e., RFC 1738 - Uniform Resource Locators (URL) is not applied). The text entered into the filename topmost file TextBox is only limited to 260 characters in length. |
| 4 | Traverse the Web Site | To maintain a responsive user interface, the actual traversal of the web pages is performed asynchronously by a BackgroundWorker Class thread. |
| 5 | Create Site Map | At the conclusion of the web site traversal, the Site Map is created and placed within a RichTextBox in the Site Map TabPage. Then both the Site Map and Site Tree TabPages are made visible. |
| 6 | Create Site Tree | The Site Tree resembles a TreeView but with sufficient differences that a new ScrollableControl, named BrotherTreeView, was developed. When the Site Map TabPage is entered, the BrotherTreeView OnPaint event is raised. The BrotherTreeView also reacts to user input on any part of the Site Tree. |

Traversing the Web Site

Traversing a web site is straightforward, but only because of the Html Agility Pack (HAP). Unfortunately, as with most CodePlex projects, the documentation is non-existent. For documentation, I would strongly recommend Parsing HTML Documents with the Html Agility Pack by Scott Mitchell of 4GuysFromRolla.com.

As mentioned earlier, the traversal is performed in an asynchronous background thread. The input required to traverse a web site is the BrotherNode for the topmost file. That BrotherNode is retrieved from the ThreadInput property Root. To report traversal progress, the ThreadOutput properties Activity and WebPage are updated and the ReportProgress method is called, which raises the ProgressChanged event. Both classes are depicted following the TraverseWebSite_BW_DoWork code and its discussion.

    void TraverseWebSite_BW_DoWork ( object          sender,
                                     DoWorkEventArgs e )
    {
        int              count = 0;     // progress reports made so far
        BrotherNode      root = null;
        ThreadInput      thread_input = null;
        ThreadOutput     thread_output = null;
        BackgroundWorker worker = null;

        worker = ( BackgroundWorker ) sender;
        thread_input = ( ThreadInput ) e.Argument;
        thread_output = new ThreadOutput ( );
        root = thread_input.Root;

        // seed the tree, the work stack, and the visited dictionary
        State.site_tree = new BrotherTree ( );
        State.site_tree.add_node ( null, root );
        stack = new Stack<BrotherNode> ( );
        stack.Push ( root );
        visited = new Dictionary < string, BrotherNode > ( );
        visited.Add ( root.NodeUri.AbsoluteUri, root );

        while ( stack.Count > 0 )
        {
            string      extension = String.Empty;
            BrotherNode node = stack.Pop ( );
            Uri         uri = node.NodeUri;

            // external pages are marked but not traversed
            if ( root.NodeUri.IsBaseOf ( node.NodeUri ) )
            {
                node.External = false;
            }
            else
            {
                node.External = true;
                continue;
            }
            // fragments refer to a position within a page
            if ( !String.IsNullOrEmpty ( uri.Fragment ) ||
                 ( uri.AbsoluteUri.IndexOf ( @"#" ) > 0 ) ||
                 ( uri.AbsoluteUri.IndexOf ( @"%23" ) > 0 ) )
            {
                continue;
            }
            // skip files that are not web pages
            extension = Path.GetExtension ( uri.AbsoluteUri ).
                             ToLower ( ).
                             Trim ( );
            if ( State.excluded_extension.Contains ( extension ) )
            {
                continue;
            }
            if ( worker.CancellationPending )
            {
                e.Cancel = true;
                break;
            }
            // traversal limit reached; emptying the stack ends the loop
            if ( BrotherTree.NodeCount >= State.maximum_pages )
            {
                stack.Clear ( );
                continue;
            }

            thread_output.Activity = @"Retrieving";
            thread_output.WebPage = node.NodeUri.AbsoluteUri;
            worker.ReportProgress ( count++, thread_output );

            HAP.HtmlDocument web_page = new HAP.HtmlDocument ( );
            try
            {
                WebRequest web_request;

                web_request = WebRequest.Create ( uri.AbsoluteUri );
                // dispose of the response as well as its stream
                using ( WebResponse web_response =
                                    web_request.GetResponse ( ) )
                using ( Stream stream =
                               web_response.GetResponseStream ( ) )
                {
                    web_page.Load ( stream );
                }
            }
            catch ( WebException )
            {
                node.Broken = true;
                continue;
            }

            extract_hyperlinks ( web_page, root, node );
            if ( State.report_css )
            {
                extract_css ( web_page, node );
            }
            if ( State.report_icons )
            {
                extract_icons ( web_page, node );
            }
            if ( State.report_images )
            {
                extract_images ( web_page, node );
            }
            if ( State.report_javascript )
            {
                extract_javascript ( web_page, node );
            }
        }
    }

TraverseWebSite_BW_DoWork performs the following tasks:

- The root is extracted from ThreadInput, added to the BrotherTree, pushed on a Stack, and added to the visited Dictionary.
- As long as the stack is not empty, the following actions are taken:
    - A node is popped off the stack. The first time, the node that is popped off will be the root; thereafter it will be a node that is a descendant of the root.
    - If the node is external, the node is so marked and control returns to the top of the loop.
    - If the node is a fragment, control returns to the top of the loop.
    - If the node's extension is one of the excluded extensions (currently: .pdf, .gif, .jpg, .png, .tif, .zip), control returns to the top of the loop. The excluded extensions are defined in the Constants class.
    - If cancellation of the BackgroundWorker is pending, the work is marked as cancelled and the loop is exited.
    - If the BrotherTree NodeCount is greater than or equal to the maximum number of pages to be retrieved, the stack is emptied and control returns to the top of the loop. This effectively terminates the loop.
    - A progress report is made.
    - A new Html Agility Pack HtmlDocument is created.
    - The HtmlDocument is filled from a WebResponse; if not successful, the node is marked broken and control returns to the top of the loop.
    - The hyperlinks in the current page are extracted and recorded. The code for the extract_hyperlinks method follows.
    - Each content type specified by the user is extracted.
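The control flow of the steps above can be tried without any network access. In the following sketch, an in-memory dictionary stands in for retrieving a page and extracting its hyperlinks; the class, method, and parameter names are hypothetical, and the external, fragment, extension, and cancellation checks are omitted for brevity.

```csharp
using System.Collections.Generic;

public static class TraversalSketch
{
    // links maps each page to the pages it references (a stand-in for
    // retrieving the page and extracting its hyperlinks).
    public static List<string> Traverse (
                                   string                        root,
                                   IDictionary<string, string[]> links,
                                   int                           maximum_pages )
    {
        List<string>    recorded = new List<string> ( );
        HashSet<string> visited = new HashSet<string> ( );
        Stack<string>   stack = new Stack<string> ( );

        stack.Push ( root );
        visited.Add ( root );
        recorded.Add ( root );

        while ( stack.Count > 0 )
        {
            string   page = stack.Pop ( );
            string[] targets;

            if ( !links.TryGetValue ( page, out targets ) )
            {
                continue;           // like a broken link: nothing to extract
            }
            foreach ( string target in targets )
            {
                if ( !visited.Add ( target ) )
                {
                    continue;       // revisit: do not traverse again
                }
                recorded.Add ( target );
                stack.Push ( target );
                if ( recorded.Count >= maximum_pages )
                {
                    stack.Clear ( );    // limit reached: end the traversal
                    break;
                }
            }
        }
        return recorded;
    }
}
```

Because pages are recorded when their link is first encountered, a link from a deeper page back to the root is simply skipped, which is what prevents the endless recursion described in the User Manual.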

The Html Agility Pack has a Load method:

    HAP.HtmlDocument web_page = new HAP.HtmlWeb ( ).Load ( uri.AbsoluteUri );

However, that method will not load a file system web site. For that reason, the traversal method uses WebRequest and WebResponse.

The ThreadInput class is:

    namespace DataStructures
    {
        public class ThreadInput
        {
            public BrotherNode Root { get; set; }
        }
    }

The ThreadOutput class is:

    namespace DataStructures
    {
        public class ThreadOutput
        {
            public string Activity { get; set; }
            public string WebPage { get; set; }
        }
    }

The most important of the extract methods is extract_hyperlinks:

    void extract_hyperlinks ( HAP.HtmlDocument web_page,
                              BrotherNode      root,
                              BrotherNode      parent )
    {
        HAP.HtmlNodeCollection hyperlinks;

        // all anchor elements that have an href attribute
        hyperlinks = web_page.DocumentNode.SelectNodes (
                                               @"//a[@href]" );
        if ( ( hyperlinks == null ) || ( hyperlinks.Count == 0 ) )
        {
            return;
        }

        foreach ( HAP.HtmlNode hyperlink in hyperlinks )
        {
            HAP.HtmlAttribute attribute;
            string            destination = String.Empty;
            string            extension = String.Empty;
            string            linked_text = String.Empty;
            BrotherNode       new_node = null;
            Uri               next_uri;

            attribute = hyperlink.Attributes [ @"href" ];
            if ( attribute == null )
            {
                continue;
            }
            if ( String.IsNullOrEmpty ( attribute.Value ) )
            {
                continue;
            }
            destination = attribute.Value;

            next_uri = new_uri ( root.NodeUri,
                                 destination,
                                 parent );
            if ( next_uri == null )
            {
                continue;
            }
            // replace line breaks in the link text with spaces
            if ( !String.IsNullOrEmpty ( hyperlink.InnerText ) )
            {
                linked_text =
                    String.Join (
                        @" ",
                        Regex.Split ( hyperlink.InnerText,
                                      @"(?:\r\n|\n|\r)" ) ).
                    Trim ( );
            }

            if ( visited.ContainsKey ( next_uri.AbsoluteUri ) )
            {
                // already visited; record only if duplicates are wanted
                if ( State.display_duplicate_pages )
                {
                    BrotherNode old_node = null;
                    string      uri = next_uri.AbsoluteUri;

                    visited.TryGetValue ( uri,
                                          out old_node );
                    if ( old_node != null )
                    {
                        string new_parent = String.Empty;
                        string old_parent = String.Empty;

                        new_node = new BrotherNode ( uri,
                                                     linked_text,
                                                     parent );
                        new_parent = parent.NodeUri.AbsoluteUri;
                        // a null parent implies the tree root
                        if ( old_node.Parent == null )
                        {
                            continue;
                        }
                        old_parent = old_node.Parent.NodeUri.
                                                     AbsoluteUri;
                        if ( old_parent.Equals ( new_parent ) )
                        {
                            continue;
                        }
                        // the deeper of the two nodes is the duplicate
                        if ( new_node.Depth >= old_node.Depth )
                        {
                            old_node.Duplicate = false;
                            new_node.Duplicate = true;
                            State.site_tree.add_node ( parent,
                                                       new_node );
                        }
                        else
                        {
                            old_node.Duplicate = true;
                            new_node.Duplicate = false;
                            State.site_tree.add_node ( parent,
                                                       new_node );
                            visited.Remove ( uri );
                            visited.Add ( uri, new_node );
                        }
                    }
                }
            }
            else
            {
                // first encounter; only internal pages are traversed
                new_node = new BrotherNode ( next_uri.AbsoluteUri,
                                             linked_text,
                                             parent );
                new_node.External = true;
                if ( root.NodeUri.IsBaseOf ( next_uri ) )
                {
                    new_node.External = false;
                    stack.Push ( new_node );
                }
                visited.Add ( next_uri.AbsoluteUri, new_node );
                State.site_tree.add_node ( parent, new_node );
            }

            // traversal limit reached
            if ( BrotherTree.NodeCount >= State.maximum_pages )
            {
                stack.Clear ( );
                break;
            }
        }
    }

extract_hyperlinks is passed the following parameters.

| Parameter | Class | Description |
| --- | --- | --- |
| web_page | HtmlDocument | The Html Agility Pack HtmlDocument of the web page currently being examined by TraverseWebSite_BW_DoWork. Note that the Html Agility Pack HtmlDocument differs from the System.Windows.Forms HtmlDocument. Thus the need for the HAP prefix (using HAP = HtmlAgilityPack;). |
| root | BrotherNode | The BrotherNode that contains the topmost file name data. |
| parent | BrotherNode | The BrotherNode of the web page currently being examined by TraverseWebSite_BW_DoWork. |

extract_hyperlinks performs the following tasks:

- Retrieve all hyperlinks in the web page and store them in the HAP.HtmlNodeCollection hyperlinks collection. The selector "//a[@href]" selects all anchor elements that have an attribute named "href".
- If no hyperlinks exist, return.
- For each hyperlink in the collection, take the following actions:
    - Retrieve the hyperlink attribute "href".
    - Retrieve the attribute value, naming it "destination". This destination is the URL of a web page referenced by this web page.
    - Create a Uri from the destination using the topmost file's Uri as the base. For example, if only "page.html" was the destination, and "http://domain.com/directory/index.html" was the root's Uri, the resulting Uri would be "http://domain.com/directory/page.html".
    - Extract the link text from the anchor element.
    - At this point, a hyperlink (Uri) and its link text have been retrieved. A test to determine if the Uri has already been visited determines the next actions to take.
        - If the Uri has already been visited (i.e., found in the visited Dictionary) and the user does not desire to view duplicate links, the Uri is ignored. Otherwise, the already visited node is retrieved.
            - If the already visited node's parent is null (implying that the already visited node is the BrotherTree root), the Uri is ignored.
            - If the already visited node's parent equals the new node's parent, the Uri is ignored.
            - A new BrotherNode is created.
            - Which node is to be marked as a duplicate is based upon the depth of each node.
                - If the new node's depth is greater than or equal to the already visited node's depth, the new node is marked as a duplicate, the already visited node is marked as a non-duplicate, and the new node is added to the BrotherTree.
                - If the new node's depth is less than the already visited node's depth, the already visited node is marked as a duplicate, the new node is marked as a non-duplicate, the new node is added to the BrotherTree, and the already visited node is replaced in the visited Dictionary by the new node.
        - If the Uri has not already been visited (i.e., not found in the visited Dictionary):
            - A new BrotherNode is created.
            - The new node's External property is set based upon the relationship between the new node and the BrotherTree root. If the node is not external, it is pushed on the stack for later traversal.
            - The new node is added to the visited Dictionary.
            - The new node is added to the BrotherTree.
    - If the BrotherTree NodeCount is greater than or equal to the maximum number of pages to be retrieved, the stack is emptied and the extraction of hyperlinks returns.
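Two of the steps above, resolving a destination against a base Uri and flattening the link text, can be sketched in isolation. The article does not show its own new_uri helper, so Resolve below is only an assumption about what such a helper might do, built on the framework's Uri.TryCreate; NormalizeLinkText mirrors the Regex.Split expression used in extract_hyperlinks.

```csharp
using System;
using System.Text.RegularExpressions;

public static class HyperlinkSketch
{
    // Resolves an href against a base Uri; returns null when the
    // combination cannot be parsed.
    public static Uri Resolve ( Uri base_uri, string destination )
    {
        Uri result;

        if ( Uri.TryCreate ( base_uri, destination, out result ) )
        {
            return result;
        }
        return null;
    }

    // Replaces line breaks in link text with single spaces.
    public static string NormalizeLinkText ( string inner_text )
    {
        return String.Join ( " ",
                             Regex.Split ( inner_text,
                                           @"(?:\r\n|\n|\r)" ) ).
                      Trim ( );
    }
}
```

With a base of "http://domain.com/directory/index.html", the destination "page.html" resolves to "http://domain.com/directory/page.html", matching the example in the list above.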

The other extract methods, extract_css, extract_icons, extract_images, and extract_javascript, are much simpler. As shown in the following table, the major difference between the extract methods is the XPath string used to retrieve the appropriate HtmlNodeCollection.

| Method | XPath |
| --- | --- |
| extract_css | "//link[@href]", keeping links whose "rel" attribute is "stylesheet" |
| extract_icons | "//link[@href]", keeping links whose "rel" attribute contains "icon" |
| extract_images | "//img[@src]", reading the "src" attribute |
| extract_javascript | "//script[@src]" |

Whether each method executes depends upon the options set by the user in the Tree Reporting Options.
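To experiment with these selectors without the Html Agility Pack, the sketch below applies XPath expressions with the framework's System.Xml classes instead. This is a substitution, not the article's method: XmlDocument requires well-formed markup, which real web pages often are not, and the helper name is hypothetical. The "rel" tests are folded into the XPath predicates here as one possible way of expressing them.

```csharp
using System.Collections.Generic;
using System.Xml;

public static class ContentXPathSketch
{
    // Returns the value of attribute_name for every node matched by xpath.
    public static List<string> Select ( string xhtml,
                                        string xpath,
                                        string attribute_name )
    {
        List<string> values = new List<string> ( );
        XmlDocument  document = new XmlDocument ( );

        document.LoadXml ( xhtml );
        foreach ( XmlNode node in document.SelectNodes ( xpath ) )
        {
            XmlAttribute attribute = node.Attributes [ attribute_name ];

            if ( attribute != null )
            {
                values.Add ( attribute.Value );
            }
        }
        return values;
    }
}
```

For example, "//link[@rel='stylesheet'][@href]" with the attribute name "href" returns the style sheets of a page, and "//script[@src]" with "src" returns its JavaScript files.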

Displaying the Web Site Tree

The class BrotherTreeView is implemented as a ScrollableControl. The BrotherTreeView is similar to the TreeView class except that it performs special expand and collapse operations when the user clicks on an expand/collapse arrow or on a web page label.

Conclusion

This article has presented a tool that generates a Google site map for a user-specified web site.

References


Development Environment

SiteMapper was developed in the following environment:

- Microsoft Windows 7 Professional SP1
- Microsoft Visual Studio 2008 Professional SP1
- Microsoft .Net Framework Version 3.5 SP1
- Microsoft Visual C# 2008

| Version | Date | Description |
| --- | --- | --- |
| SiteMapper V3.3 | 06/16/2014 | Original Article |