Introduction

This article describes SimpleScene, a simple 3d scene manager written in C# using OpenTK, the managed wrapper around OpenGL. The code (and binaries!) run unmodified on Mac, Windows, and Linux. The code also includes a Wavefront OBJ file reader written in C#.

Background

When writing a game, it is often advantageous to start with a 3d engine, such as Unity, Torque, Unreal Engine, or an open-source option such as Ogre or Axiom. However, if your goal is to get your hands dirty and learn about OpenGL, GLSL, and 3d engine concepts, the sheer size and complexity of those projects can be overwhelming.

SimpleScene is a small 3d scene manager and example program intended to fill this need. I've attempted to favor directness in the code over flexible abstractions, as this makes it easier to follow, learn from, and modify. The code is released into the public domain, without any license restrictions. You can check it out or download it from the SimpleScene GitHub repository.

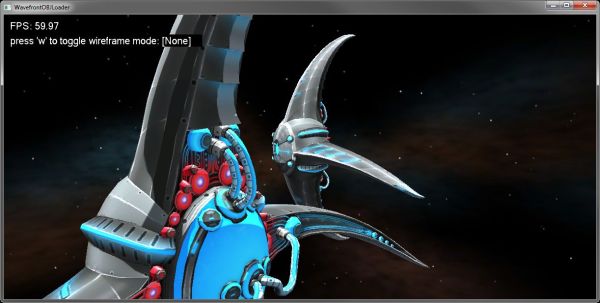

The WavefrontOBJLoader example program uses the transitional GLSL 120 because it has very wide device compatibility. It is also the first GLSL version that supports geometry shaders, which are used here to implement single-pass wireframes: a shader-based way of drawing object wireframes that avoids the performance cost and visual artifacts of re-drawing GL lines over the object.

What is a Scene Manager?

In short, a scene manager takes care of managing and drawing your 3d scene of objects, so you can think at the level of moving 3d objects around, instead of how to transform and paint them into a camera and viewport.

3d APIs such as OpenGL, Direct3D, and WebGL are called immediate-mode rendering APIs. They allow you to take a clear buffer of pixels and start painting (rasterizing) 3d shapes into that buffer. The set of shapes drawn into the buffer is commonly referred to as a scene. A scene manager therefore takes over the work of turning a set of objects, with their positions, orientations, animation states, rendering states, and any other relevant information, into a sequence of immediate-mode calls.

Is a Scene Manager a Game Engine?

Not exactly, and the line between them is definitely fuzzy.

A game engine contains a scene manager, but it typically also contains lots of other code that assumes the program will behave in a particular way. The most common game-engine assumptions are things like (a) assuming only a single operating system window, (b) assuming a continuous-repaint update-redraw loop, where the full scene is updated every frame, and (c) assuming object meshes are not arbitrarily edited. These assumptions make it simpler to integrate physics, collision detection, and even certain kinds of object networking that work right out of the box. However, they may also be deeply ingrained in the code base, making it impractical to use the game engine for non-game applications.

A scene manager, on the other hand, is more general purpose. SimpleScene does not set policies about building a particular type of application. Following the examples above, SimpleScene (a) does not create windows, so you can create and control them as you like, (b) does not have a render loop, allowing you to control when and how to repaint portions of your window, and (c) allows you to plug in whatever type of mesh handlers you like, including mesh representations which are editable. On the other hand, it does not come with physics or collision detection either.

SimpleScene currently includes functionality for:

- rendering 3d meshes through OpenGL, including support for loading Wavefront OBJ files

- multi-texture rendering (diffuse, specular, and ambient/glow maps)

- object wireframes, via GLSL single-pass or brute-force GL line over-draw

- CPU frustum culling

- Dynamic Bounding-Volume Hierarchy, for space partitioning

- 3d object "picking" through CPU mouse-ray intersection tests (bounding sphere and precise-mesh)

- cross-platform 2d vector and font drawing via a GDI-like wrapper around agg-sharp
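As a rough illustration of the bounding-sphere half of picking, here is a minimal ray-vs-sphere test. It is a sketch, not SimpleScene's code: it uses System.Numerics types in place of OpenTK's so it stands alone, the class name `PickingSketch` is hypothetical, and it assumes the mouse ray has already been unprojected into world space with a normalized direction. A precise-mesh test would then only need to run ray-triangle tests for objects whose sphere was hit.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, not part of SimpleScene.
public static class PickingSketch
{
    // Ray vs. bounding sphere. rayDir must be normalized.
    // Returns the distance along the ray to the nearest intersection in front
    // of the ray origin, or null if the ray misses the sphere.
    public static float? RaySphere(Vector3 rayOrigin, Vector3 rayDir, Vector3 center, float radius)
    {
        // Solve |o + t*d - c|^2 = r^2, i.e. t^2 + 2*b*t + c = 0 for unit d.
        Vector3 oc = rayOrigin - center;
        float b = Vector3.Dot(oc, rayDir);                 // half the linear coefficient
        float c = Vector3.Dot(oc, oc) - radius * radius;
        float disc = b * b - c;                            // quarter of the discriminant
        if (disc < 0f) return null;                        // the ray's line misses the sphere
        float root = (float)Math.Sqrt(disc);
        float t = -b - root;                               // nearer intersection
        if (t < 0f) t = -b + root;                         // origin inside sphere: use the far one
        if (t < 0f) return null;                           // sphere lies entirely behind the ray
        return t;
    }
}
```

Returning the hit distance rather than a bool lets a caller sort candidate objects front to back before running the more expensive per-triangle test.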

However, it does *not* include features typically found in modern game engines:

- collision detection or physics

- dynamic lighting

- threaded simulation, networking, threaded rendering (yet!)

How do I use a scene manager?

At the highest level, you must create a viewport window, decide how many scenes you would like to render into that viewport, and set up the matrix transformations required to project each scene into your viewport.

SimpleScene includes an example program, WavefrontOBJLoader, which loads a sample skybox and an OBJ 3d "spaceship" model, and draws a GL-point-based starfield environment. We're not going to review every element of using OpenGL or OpenTK here; instead we'll take a quick look at some of the main elements. You can load, compile, and run this project using either Visual Studio or Xamarin / MonoDevelop.

The rendering for WavefrontOBJLoader is split into three scenes. A scene is a set of objects which are rendered together, with the same camera and screen viewport projection. They are declared in Main.cs and set up in Main_setupScene.cs:

```csharp
SSScene scene;
SSScene hudScene;
SSScene environmentScene;
```

Our environmentScene includes our skybox and starfield, and is rendered with an "infinite projection". It's projected into the viewport using the camera's rotation, but not its position. It is also drawn first, with depth testing disabled. Together, these give it the appearance of being infinitely far away.
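The rotation-only camera view described above can be sketched with System.Numerics, standing in for OpenTK's matrix types. The helper name is hypothetical. SimpleScene itself extracts the camera rotation as a quaternion (see the render code below), whereas this sketch simply zeroes the translation of the camera's world matrix, which gives the same result for a camera matrix that contains no scale.

```csharp
using System.Numerics;

// Hypothetical helper, not part of SimpleScene.
public static class EnvironmentViewSketch
{
    // View matrix for an "infinitely distant" environment scene:
    // the inverse of the camera's world matrix with the translation discarded,
    // so moving the camera never brings the skybox or starfield any closer.
    public static Matrix4x4 RotationOnlyView(Matrix4x4 cameraWorld)
    {
        Matrix4x4 rotationOnly = cameraWorld;
        rotationOnly.Translation = Vector3.Zero;        // drop the camera position
        Matrix4x4.Invert(rotationOnly, out Matrix4x4 view);
        return view;
    }
}
```

Two cameras with the same orientation but different positions therefore see the environment identically, which is exactly the "infinitely far away" illusion.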

Our hudScene includes the FPS display and wireframe toggle instructions. It's a special scene which is rendered without a 3d perspective projection, making it easy to put 2d UI elements on top of the 3d rendering. This type of layer is nicknamed a HUD, after the heads-up display on a pilot's visor.

Our main scene contains the 3d elements being drawn, which in this case are just two copies of the main 3d model we loaded.

Add Objects to a Scene

In order for there to be something interesting to render, we need to add objects to the scene. You can see this happening in Main_setupScene.cs. Here is how we add the main "spaceship" model, loaded from its OBJ/MTL files and textures:

```csharp
SSObject droneObj = new SSObjectMesh (
    new SSMesh_wfOBJ (
        SSAssetManager.mgr.getContext ("./drone2/"),
        "drone2.obj",
        true, shaderPgm));
scene.addObject (this.activeModel = droneObj);

droneObj.renderState.lighted = true;
droneObj.ambientMatColor = new Color4(0.2f,0.2f,0.2f,0.2f);
droneObj.diffuseMatColor = new Color4(0.3f,0.3f,0.3f,0.3f);
droneObj.specularMatColor = new Color4(0.3f,0.3f,0.3f,0.3f);
droneObj.shininessMatColor = 10.0f;

droneObj.MouseDeltaOrient(-40.0f,0.0f);
droneObj.Pos = new OpenTK.Vector3(-5,0,0);
```

The 2d HUD objects are added in a similar way, except that we leave the z-coordinate set to zero:

```csharp
fpsDisplay = new SSObjectGDISurface_Text ();
fpsDisplay.Label = "FPS: ...";
hudScene.addObject (fpsDisplay);
fpsDisplay.Pos = new Vector3 (10f, 10f, 0f);   // HUD position in window pixels, z = 0
fpsDisplay.Scale = new Vector3 (1.0f);
```

Render the Scene

You can see these three scenes being set up and rendered in Main_renderScene.cs, inside the OpenTK GameWindow callback OnRenderFrame. First we clear the render buffer:

```csharp
GL.Enable(EnableCap.DepthTest);
GL.DepthMask (true);   // depth writes must be enabled for Clear to reset the depth buffer
GL.ClearColor(0.0f, 0.0f, 0.0f, 0.0f);
GL.Clear(ClearBufferMask.ColorBufferBit | ClearBufferMask.DepthBufferBit);
```

Next, we set up an "infinite projection" matrix to render the environment scene, which includes the skybox. Because we render the skybox first, we disable depth testing entirely. A more modern technique is to render the skybox after all opaque objects, forced to "infinite depth" with depth testing on. This avoids writing skybox pixels that are fully occluded by solid geometry. However, I stuck with the simplicity of rendering it first.

```csharp
{
    GL.Disable(EnableCap.DepthTest);
    GL.Enable(EnableCap.CullFace);
    GL.CullFace (CullFaceMode.Front);
    GL.Disable(EnableCap.DepthClamp);

    Matrix4 projMatrix = Matrix4.CreatePerspectiveFieldOfView (fovy, aspect, 0.1f, 2.0f);
    environmentScene.setProjectionMatrix (projMatrix);

    // Camera rotation only (no position), so the environment never appears to get closer
    environmentScene.setInvCameraViewMatrix (
        Matrix4.CreateFromQuaternion (
            scene.activeCamera.worldMat.ExtractRotation ()
        ).Inverted ());

    environmentScene.Render ();
}
```
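For comparison, the "infinite depth" alternative mentioned above is commonly implemented in the vertex shader by copying the clip-space w into z (often written `gl_Position = pos.xyww;` in GLSL) and drawing the skybox last with a less-or-equal depth test. Here is the arithmetic as a CPU sketch, with System.Numerics standing in for the GPU; the class name is hypothetical.

```csharp
using System.Numerics;

// Hypothetical helper, not part of SimpleScene.
public static class SkyboxDepthSketch
{
    // Projects a view-space point, then forces clip-space z to equal w, as a
    // vertex shader would for the skybox. After the perspective divide the
    // depth is exactly 1.0 (the far plane), whatever the point's distance.
    public static float NdcDepthAtInfinity(Vector3 viewPos, Matrix4x4 projection)
    {
        Vector4 clip = Vector4.Transform(new Vector4(viewPos, 1f), projection);
        clip.Z = clip.W;            // the "infinite depth" trick
        return clip.Z / clip.W;     // perspective divide
    }
}
```

System.Numerics projections map depth to the 0..1 range rather than OpenGL's -1..1, but z = w lands on 1.0, the far plane, in both conventions, so any opaque geometry already drawn wins the depth test.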

Then we set up the perspective projection and render the main scene's 3d objects.

```csharp
{
    GL.Enable (EnableCap.CullFace);
    GL.CullFace (CullFaceMode.Back);
    GL.Enable(EnableCap.DepthTest);
    GL.Enable(EnableCap.DepthClamp);
    GL.DepthMask (true);

    scene.setInvCameraViewMatrix (scene.activeCamera.worldMat.Inverted ());
    Matrix4 projection = Matrix4.CreatePerspectiveFieldOfView (fovy, aspect, 1.0f, 500.0f);
    scene.setProjectionMatrix (projection);

    scene.SetupLights ();
    scene.Render ();
}
```

Finally, we set up an orthographic matrix and render the HUD scene elements.

```csharp
GL.Disable (EnableCap.DepthTest);
GL.Disable (EnableCap.CullFace);
GL.DepthMask (false);

// Map window pixel coordinates (origin at the top-left) straight to clip space
hudScene.setProjectionMatrix(Matrix4.Identity);
hudScene.setInvCameraViewMatrix(
    Matrix4.CreateOrthographicOffCenter(0,ClientRectangle.Width,ClientRectangle.Height,0,-1,1));

hudScene.Render ();
```
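To see why those orthographic arguments make HUD positions behave like pixel coordinates, here is the same mapping sketched with System.Numerics, whose `CreateOrthographicOffCenter` takes the same (left, right, bottom, top, near, far) argument order as OpenTK's. The helper name is hypothetical.

```csharp
using System.Numerics;

// Hypothetical helper, not part of SimpleScene.
public static class HudProjectionSketch
{
    // Same arguments as the HUD setup: left = 0, right = width, bottom = height, top = 0.
    // Passing the height as "bottom" and 0 as "top" flips the y axis.
    public static Vector3 PixelToNdc(Vector3 pixel, float width, float height)
    {
        Matrix4x4 ortho = Matrix4x4.CreateOrthographicOffCenter(0f, width, height, 0f, -1f, 1f);
        return Vector3.Transform(pixel, ortho);
    }
}
```

With this mapping, pixel (0, 0) lands at the top-left corner of normalized device coordinates (-1, 1), and (width, height) at the bottom-right (1, -1), which matches how 2d UI code usually thinks about the screen.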

Once the scene is rendering, you'll probably want some code that lets the user interact with scene elements. In our simple example, the only interaction is with the camera: the mouse wheel zooms it in and out, and dragging orbits it around our model object. We also set up the 'w' key to toggle between three different wireframe display modes. You can see the handlers for this in Main_setupInput.cs.
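As a hedged illustration of how an orbit camera of this kind can work (not SimpleScene's implementation; every name here is hypothetical), mouse-drag deltas can drive yaw and pitch angles around a target point, and the mouse wheel can scale the orbit distance:

```csharp
using System;
using System.Numerics;

// Hypothetical orbit-camera state, not SimpleScene's camera class.
public sealed class OrbitCameraSketch
{
    public Vector3 Target = Vector3.Zero;
    public float Yaw;              // radians, around the world Y axis
    public float Pitch;            // radians, kept short of +/-90 degrees
    public float Distance = 10f;

    public void OnMouseDrag(float dx, float dy, float radiansPerPixel = 0.01f)
    {
        Yaw += dx * radiansPerPixel;
        // Clamp pitch so the look-at "up" vector never lines up with the view direction.
        Pitch = Math.Max(-1.5f, Math.Min(1.5f, Pitch + dy * radiansPerPixel));
    }

    public void OnMouseWheel(float delta, float zoomFactor = 0.9f)
    {
        // Multiplicative zoom feels uniform at any distance; never reach zero.
        Distance = Math.Max(0.5f, Distance * MathF.Pow(zoomFactor, delta));
    }

    // Spherical coordinates around the target.
    public Vector3 Position()
    {
        float cp = MathF.Cos(Pitch);
        return Target + Distance * new Vector3(cp * MathF.Sin(Yaw), MathF.Sin(Pitch), cp * MathF.Cos(Yaw));
    }

    public Matrix4x4 ViewMatrix() => Matrix4x4.CreateLookAt(Position(), Target, Vector3.UnitY);
}
```

Keeping yaw, pitch, and distance as the camera's state (rather than accumulating rotations into a matrix) avoids drift and makes the orbit easy to reset.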

How does a Scene Manager draw the scene?

Under the covers, the scene manager keeps track of all the objects in the scene, along with their positions and transformation matrices. Whenever it's asked to render the scene, it walks the list of objects, setting up each object's transformation before rendering it out to the immediate-mode API, in this case OpenTK / OpenGL.
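A stripped-down version of that walk might look like the following. All of these types are hypothetical stand-ins, not SimpleScene's SSScene/SSObject API; they only show the idea of combining each object's world matrix with the inverse camera matrix, so that each object can draw itself as if it sat at the origin.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical minimal scene object, not SimpleScene's SSObject.
public abstract class SceneObjectSketch
{
    public Vector3 Pos = Vector3.Zero;
    public Quaternion Orient = Quaternion.Identity;
    public Vector3 Scale = Vector3.One;

    // Object-to-world transform; System.Numerics (like OpenTK) uses row vectors, so S * R * T.
    public Matrix4x4 WorldMat =>
        Matrix4x4.CreateScale(Scale) * Matrix4x4.CreateFromQuaternion(Orient) * Matrix4x4.CreateTranslation(Pos);

    // A real object would issue GL calls here with modelView loaded as the current matrix.
    public abstract void Render(Matrix4x4 modelView, Matrix4x4 projection);
}

// Test double that just records the matrix it was handed.
public sealed class RecordingObjectSketch : SceneObjectSketch
{
    public Matrix4x4 LastModelView;
    public override void Render(Matrix4x4 modelView, Matrix4x4 projection) => LastModelView = modelView;
}

// Hypothetical minimal scene, not SimpleScene's SSScene.
public sealed class SceneSketch
{
    public readonly List<SceneObjectSketch> Objects = new List<SceneObjectSketch>();
    public Matrix4x4 InvCameraView = Matrix4x4.Identity;
    public Matrix4x4 Projection = Matrix4x4.Identity;

    public void Render()
    {
        foreach (var obj in Objects)
        {
            // Object space -> world space -> camera space.
            Matrix4x4 modelView = obj.WorldMat * InvCameraView;
            obj.Render(modelView, Projection);
        }
    }
}
```

A real scene manager would also cull objects (for example against the view frustum) inside this loop before calling Render.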

A very simple example of drawing a mesh can be found in SSObjectCube.cs. This class renders a cube spanning -1 to 1 on each axis using the legacy OpenGL API. It doesn't have to manage matrices, because the scene manager has already handled that for us in SSScene and SSObject. This makes the Render code easy to follow: it just renders itself using OpenGL as if it were centered at the origin.

```csharp
public override void Render(ref SSRenderConfig renderConfig) {
    base.Render (ref renderConfig);

    var p0 = new Vector3 (-1, -1,  1);
    var p1 = new Vector3 ( 1, -1,  1);
    var p2 = new Vector3 ( 1,  1,  1);
    var p3 = new Vector3 (-1,  1,  1);
    var p4 = new Vector3 (-1, -1, -1);
    var p5 = new Vector3 ( 1, -1, -1);
    var p6 = new Vector3 ( 1,  1, -1);
    var p7 = new Vector3 (-1,  1, -1);

    GL.Begin(BeginMode.Triangles);
    GL.Color3(0.5f, 0.5f, 0.5f);
    drawQuadFace(p0, p1, p2, p3);
    drawQuadFace(p7, p6, p5, p4);
    drawQuadFace(p1, p0, p4, p5);
    drawQuadFace(p2, p1, p5, p6);
    drawQuadFace(p3, p2, p6, p7);
    drawQuadFace(p0, p3, p7, p4);
    GL.End();
}
```

For clarity, our implementation of drawQuadFace uses the legacy OpenGL drawing calls, which involve one function call per parameter. While this is easy to read when trying to understand 3d, calling a GL function for every vertex has gone the way of the dodo. Core-profile OpenGL (3.1 and later), OpenGL ES, and Direct3D have eliminated this calling interface in favor of much faster vertex buffers, which we'll talk about later. Calling a function per vertex is incredibly slow, and from a managed language like C# it's positively lethargic: every one of those GL.Vertex3() calls hops through a managed-to-native thunk.

Here you can see the code it uses to draw each quad-face of the cube:

```csharp
private void drawQuadFace(Vector3 p0, Vector3 p1, Vector3 p2, Vector3 p3) {
    // First triangle (p0, p1, p2), preceded by its face normal
    GL.Normal3(Vector3.Cross(p1-p0,p2-p0).Normalized());
    GL.Vertex3(p0);
    GL.Vertex3(p1);
    GL.Vertex3(p2);

    // Second triangle (p0, p2, p3)
    GL.Normal3(Vector3.Cross(p2-p0,p3-p0).Normalized());
    GL.Vertex3(p0);
    GL.Vertex3(p2);
    GL.Vertex3(p3);
}
```
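The same cube can be described the vertex-buffer way: eight shared corner positions plus an index buffer naming the corners of each triangle. This sketch (class name hypothetical) produces the 36 indices for the six faces listed in Render above, splitting each quad exactly as drawQuadFace does.

```csharp
using System.Collections.Generic;

// Hypothetical helper, not part of SimpleScene.
public static class CubeIndexSketch
{
    // The six faces from SSObjectCube.Render, as indices into corners p0..p7.
    static readonly int[][] Quads =
    {
        new[] { 0, 1, 2, 3 }, new[] { 7, 6, 5, 4 }, new[] { 1, 0, 4, 5 },
        new[] { 2, 1, 5, 6 }, new[] { 3, 2, 6, 7 }, new[] { 0, 3, 7, 4 },
    };

    // Each quad (a, b, c, d) becomes triangles (a, b, c) and (a, c, d), as in drawQuadFace.
    public static int[] TriangleIndices()
    {
        var indices = new List<int>(Quads.Length * 6);
        foreach (var q in Quads)
        {
            indices.AddRange(new[] { q[0], q[1], q[2] });
            indices.AddRange(new[] { q[0], q[2], q[3] });
        }
        return indices.ToArray();
    }
}
```

One caveat: sharing corners also shares normals, so a flat-shaded cube stored in a real vertex buffer usually duplicates corners per face (24 vertices) to give each face its own normal.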

To understand the more modern vertex buffers and index buffers, as well as texturing, we'll need to look at SSMesh_wfOBJ.cs. This class knows how to take the data model produced by the WavefrontOBJLoader class and format it for rendering through OpenGL. Because Wavefront OBJ doesn't support animation, the format is rather simple: it's a list of geometry subsets, each mapped to a material definition. That material definition may include static shading colors and up to four textures (diffuse, specular, ambient, and bump).
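To give a feel for how simple the OBJ format is, here is a hedged sketch of a reader that handles only positions (`v`), faces (`f`), and material switches (`usemtl`), grouping triangles into per-material subsets. It is not SimpleScene's WavefrontOBJLoader: texture coordinates, normals, and the MTL file are deliberately ignored, and the class name is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Numerics;

// Hypothetical minimal OBJ reader, not SimpleScene's WavefrontOBJLoader.
public sealed class ObjSketch
{
    public readonly List<Vector3> Positions = new List<Vector3>();
    // Material name -> triangle list of 0-based position indices.
    public readonly Dictionary<string, List<int>> Subsets = new Dictionary<string, List<int>>();

    public static ObjSketch Parse(TextReader reader)
    {
        var obj = new ObjSketch();
        string material = "default";
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            string[] t = line.Split((char[])null, StringSplitOptions.RemoveEmptyEntries);
            if (t.Length == 0 || t[0].StartsWith("#")) continue;   // blank line or comment
            switch (t[0])
            {
                case "v":
                    obj.Positions.Add(new Vector3(F(t[1]), F(t[2]), F(t[3])));
                    break;
                case "usemtl":
                    material = t[1];
                    break;
                case "f":
                    if (!obj.Subsets.TryGetValue(material, out var tris))
                        obj.Subsets[material] = tris = new List<int>();
                    // Fan-triangulate polygons: (1,2,3), (1,3,4), ...
                    for (int i = 2; i + 1 < t.Length; i++)
                    {
                        tris.Add(Idx(t[1], obj.Positions.Count));
                        tris.Add(Idx(t[i], obj.Positions.Count));
                        tris.Add(Idx(t[i + 1], obj.Positions.Count));
                    }
                    break;
            }
        }
        return obj;
    }

    static float F(string s) => float.Parse(s, CultureInfo.InvariantCulture);

    // "7/3/5" -> position 7. OBJ indices are 1-based; negative ones count back from the end.
    static int Idx(string token, int count)
    {
        int v = int.Parse(token.Split('/')[0], CultureInfo.InvariantCulture);
        return v > 0 ? v - 1 : count + v;
    }
}
```

Parsing numbers with the invariant culture matters in practice: OBJ files always use '.' as the decimal separator, regardless of the user's locale.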

When dealing with static (non-animated) 3d meshes, quite a bit of the work is just shuffling buffers of bits around, converting from one format to another. The Wavefront file loader moves 3d data from the ASCII file format into an intermediate in-memory representation. SSMesh_wfOBJ then passes that data to OpenGL by building data structures for it, uploading them as vertex and index buffers, and sending the textures to the video card. When rendering, SSMesh_wfOBJ just points the GPU at these datasets, pushes the "do your thing" button, and voila, 3d shapes come out.
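One concrete piece of that buffer shuffling: OBJ faces index positions, texture coordinates, and normals separately (`v/vt/vn`), while an OpenGL index buffer has just one index per vertex. A common approach, sketched here as an assumption and not necessarily what SSMesh_wfOBJ does, is to give each distinct (v, vt, vn) triple its own slot in the vertex buffer. The class name is hypothetical.

```csharp
using System.Collections.Generic;

// Hypothetical helper, not part of SimpleScene.
public static class VertexWeldSketch
{
    // Each distinct (v, vt, vn) triple becomes one vertex-buffer entry; repeated
    // triples reuse the existing entry, and every face corner gets one index.
    public static (List<(int v, int vt, int vn)> vertices, List<int> indices)
        Weld(IEnumerable<(int v, int vt, int vn)> faceCorners)
    {
        var lookup = new Dictionary<(int v, int vt, int vn), int>();
        var vertices = new List<(int v, int vt, int vn)>();
        var indices = new List<int>();
        foreach (var corner in faceCorners)
        {
            if (!lookup.TryGetValue(corner, out int index))
            {
                index = vertices.Count;
                lookup[corner] = index;
                vertices.Add(corner);
            }
            indices.Add(index);
        }
        return (vertices, indices);
    }
}
```

The resulting vertex list tells you which position, texture coordinate, and normal to copy into each interleaved vertex, and the index list can be uploaded directly as the index buffer.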

Okay, it's not quite as simple as that, but it's close. The one additional piece of magic we have not talked about is shaders, written in a language called GLSL (the OpenGL Shading Language). Direct3D has its own equivalent, HLSL. For a while, Nvidia had its own shading language, Cg, which attempted to provide one language that would convert into both GLSL and HLSL, but it has since been deprecated. Now we all write GLSL for cross-platform OpenGL, and HLSL for Windows Direct3D.

What is a shader?

Long long ago, 3d rasterization hardware included a fixed-function set of capabilities. At first hardware could render shaded triangles only, with no image texture. Then eventually it was possible to add a single-texture to the triangles. Then someone had the idea to layer and combine multiple textures onto the same triangle. Each generation of the hardware was fixed-function, in that it could do only what it was designed to do, no more, and no less.

As 3d hardware became more and more popular, the software folks started tricking the hardware into doing things the fixed functions were not intended to do, by creating odd texture inputs and using the fixed blend modes in strange ways. Eventually, everyone realized these graphics chips would be much more useful and flexible if they ran software of their own, and thus began the path to the modern general-purpose GPU.

Your GPU is much like your CPU, with one special difference. Whereas your CPU is designed to run one copy of a really large program, your GPU is designed to run lots of simultaneous copies of a really small program. Those small programs are called shaders. When we use them for 3d rendering, they handle the many small tasks that must be repeated millions and millions of times per frame.

Vertex operations happen once per vertex. Geometry operations happen once per geometric primitive (e.g. a triangle), and pixel operations happen once per pixel (strictly, once per fragment: a candidate pixel produced by rasterizing a primitive). This yields the three types of shaders: vertex shaders, geometry shaders, and fragment shaders.

Just as our SSObjectCube class is written as if it's the only cube in existence, a shader is written to handle only a single operation. It's invoked once for each occurrence of that type of operation, often simultaneously across the GPU's highly parallel shader units. This makes shader programming a bit different from regular programming. A shader can't talk to any of the other shader invocations that are running, because it has no idea if or when they will run. A shader just deals with the inputs of its task at hand, and outputs what is required.
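That "written as if it's the only one" model can be imitated on the CPU: a shader is essentially a pure function of one input element plus read-only uniforms, and the hardware maps it over every element in no guaranteed order. This C# analogy uses Parallel.For in place of the GPU; the class and method names are hypothetical, and real shaders are of course written in GLSL.

```csharp
using System.Numerics;
using System.Threading.Tasks;

// Hypothetical CPU analogy of the vertex stage, not how SimpleScene runs shaders.
public static class ShaderModelSketch
{
    // The "vertex shader": sees one vertex plus a read-only uniform, returns one output.
    // It has no access to any other vertex.
    static Vector4 VertexShader(Vector3 position, Matrix4x4 modelViewProjection) =>
        Vector4.Transform(new Vector4(position, 1f), modelViewProjection);

    // The "GPU": runs the same function over every vertex, in no guaranteed order.
    public static Vector4[] RunVertexStage(Vector3[] positions, Matrix4x4 mvp)
    {
        var clip = new Vector4[positions.Length];
        Parallel.For(0, positions.Length, i => clip[i] = VertexShader(positions[i], mvp));
        return clip;
    }
}
```

Because each invocation only writes its own output slot, the order in which the parallel loop runs them cannot change the result; that independence is what lets the GPU run thousands of them at once.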

There are books and websites dedicated to explaining the details of shaders and shader programming. At this point we're going to jump right into SimpleScene shaders, and how our example project's shader works.

Our Shaders and Single-Pass-Wireframes

Our shaders are set up in Main_setupShaders.cs. The asset manager finds them in Assets/shaders. Each type of shader is in its own file.

I admit that my intent to keep SimpleScene as simple and direct as possible has failed a bit in the shaders. Currently I have three different shading techniques mixed into one pile of shaders: (a) a basic per-pixel glow+diffuse+specular shader, (b) the single-pass wireframe calculations, and (c) a not-yet-working bump-mapping shader. In the future I plan to clean this up, fix the bump mapping, and split these into separate shaders so they're easier to understand. However, I didn't want perfect to be the enemy of good, so you get to see my work-in-progress shader code.

The first thing to note about our shaders is that they use GLSL 120. This is a "transitional" form of GLSL, in that it has one foot in the world of legacy fixed-function pipelines and one foot in the world of modern GPU programming. I used it for two specific reasons. First, it has the widest hardware compatibility; specifically, it is the most widely compatible GLSL version that offers the geometry shaders needed for single-pass wireframes. Second, because it works easily with both legacy OpenGL function-per-parameter calls and vertex buffers, it lets the rest of SimpleScene intermix these two modes while still using the same shader code. Once we move to modern GLSL, the fixed-function pipeline is gone entirely, the functional interface no longer works, and all data is supplied to the card via generic buffers. That approach is more general purpose and more efficient, but not necessarily better from an educational standpoint, which is one of the major goals of SimpleScene.

In traditional wireframe rendering, the object is drawn once normally, then again as a set of wireframe lines. This has several issues. First, it's slow to draw the object twice. Second, the lines "z-fight" with the original model and are sometimes not visible. Workarounds can make them visible in more cases, but no workaround is perfect. A better solution is single-pass wireframes in GLSL. Rather than drawing separate wireframe lines, we calculate which pixels of a triangle lie on its edges, and paint those a different color while rendering the triangle. This not only avoids the two problems above, but also lets us adjust the color of the wireframe based on the pixels it's painting over (dark on light, and light on dark). Here are screenshots of the results:
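The edge test at the heart of single-pass wireframes can be sketched on the CPU. One widely used formulation, assumed here and not taken from SimpleScene's shaders, has the geometry shader give each triangle corner its screen-space distance to the opposite edge; after the rasterizer interpolates those values, the smallest component is the fragment's distance to the nearest edge, which the fragment shader turns into a blend factor. The names, the falloff curve, and the 0.5 luminance threshold are all illustrative choices.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU sketch of the single-pass wireframe math.
public static class WireframeSketch
{
    // Geometry-shader step: for each corner (a, b, c), the screen-space
    // distance to the opposite edge, i.e. the triangle's altitude from that corner.
    public static Vector3 Altitudes(Vector2 a, Vector2 b, Vector2 c)
    {
        float twiceArea = MathF.Abs((b.X - a.X) * (c.Y - a.Y) - (c.X - a.X) * (b.Y - a.Y));
        return new Vector3(
            twiceArea / Vector2.Distance(b, c),   // from a to edge bc
            twiceArea / Vector2.Distance(c, a),   // from b to edge ca
            twiceArea / Vector2.Distance(a, b));  // from c to edge ab
    }

    // Corner a carries (ha,0,0), b (0,hb,0), c (0,0,hc). Interpolating with the
    // fragment's barycentric weights gives its distance to each edge; the minimum
    // is the distance to the nearest edge.
    public static float EdgeDistance(Vector3 barycentric, Vector3 altitudes)
    {
        Vector3 d = barycentric * altitudes;
        return MathF.Min(d.X, MathF.Min(d.Y, d.Z));
    }

    // Fragment-shader step: 1 on the edge, fading smoothly to 0 over a few pixels.
    public static float EdgeIntensity(float distancePixels, float lineWidth = 1f)
    {
        float x = distancePixels / lineWidth;
        return MathF.Exp(-2f * x * x);
    }

    // "Dark on light, light on dark": pick the wire luminance from the surface under it.
    public static float WireLuminance(float surfaceLuminance) => surfaceLuminance > 0.5f ? 0f : 1f;
}
```

For the triangle (0,0), (10,0), (0,10), the fragment at (1,1) has barycentric weights (0.8, 0.1, 0.1) and comes out one pixel from the nearest edge, matching its true distance to the x and y axes.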

You can learn more about how OpenGL shading works, and about these shaders, by reading the code and the references below, and by poking around and experimenting on your own. If you have questions about specific parts of the code, or how to achieve an effect yourself, post in the comments and I'll do my best to answer.

Followup Questions?

Having trouble understanding part of the code? Want advice about how to add a specific feature? Post a topic below and I'll do my best to answer.

References

History

- 2014-07: added GDIviaAGG for cross-platform 2d vector graphics

- 2014-07: added ray-intersection based 3d picking (bounding sphere and precise-mesh)

- 2014-07: first release of code and article