Introduction

In this article we will look at dense motion estimation based on polymonial repsentation of image. The polynomial basis representation of the image is obtained by approximating the local neighborhood of image using quadratic polynomial basis. The displacement between adjacent frames can be obtained by equating the coefficients of the basis.

Introduction

- This article describes a fast dense optical flow computation algorithm by [4]. In the earlier articles it was seen that a local neighborhood of image can be represented using polynomial basis. Using this representation estimation of dense optical flow is obtained at each point in the image.

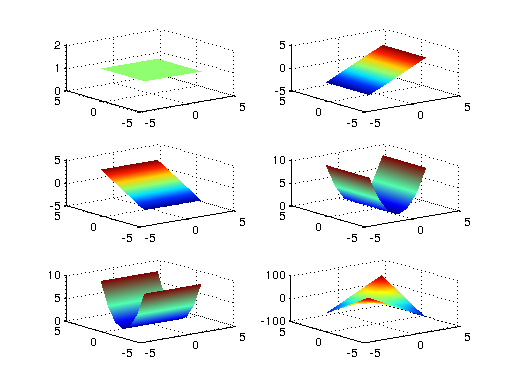

2D basis function

Polynomial basis

- Assuming how a polynomial transforms under translation using the polynomial expansion coefficients derived from current and previous frames and estimate of displacement vector is obtained.

- The idea of polynomial expansion is to approximate a neighborhood of a point in a 2D function with a polynomial.Considering quadratic polynomial basis \(1,x^2,y^2,x,y,xy\), pixel values in a neighborhood of image is represented by

$f(x) ~ x^T A x + b^T x + c$

where A is a symmetric matrix,b is a vector and c is a scalar - The coefficients can be estimated by weighted least square estimate of pixel values about neighborhood as seen in the earlier article. As with all optical flow algorithm,the brightness constancy assumption is made. The brighness of a path of image in adjacent frames is constant.

- Consider a translational motion \(d\) ecountered at point \(\mathbf{(x,y)}\) in the image.

$\begin{eqnarray*}\\ f_1(x) = x^T A_1 x + b_{1}^Tx +c_1 \\ f_2(x) = f_1(x-d) = (x-d)^T A_1 (x-d) + b_{1}^T(x-d) +c_1 \\ f_2(x) = f_1(x-d) = (x)^T A_1 (x) + (b_{1}-2A_1d)^T(x) \\ + d^TAd-b_1^Td+c_1 \\ \end{eqnarray*}$

Equating coefficients in the two polynomials due to brightness constancy assumption.

$\begin{eqnarray*}\\ A_2 = A_1 \\ b_2=(b_{1}-2A_1d)\\ c_2=d^TAd-b_1^Td+c_1 \end{eqnarray*}$

Assuming \(A\) is non-singular

$\begin{eqnarray*}\\ d=-\frac{1}{2}A^-1(b_2-b_1)\\ \end{eqnarray*}$

Thus by equating the coefficients of the polynomial the displacement vector can be obtained at each point in the image assuming there is overlap between the region of interest ie image neighborhood in adjacent frames.

- Let us say we have the estimate of displacement \(\bar{d}\) We extract the ROI about the neighborhood at point \(P(x,y)\) and point \(P(x+d.x,y+d.y)\) The polynomial basis are extracted and the computation that is shown above is performed.

- The total displacement can be estimated as

$\begin{eqnarray*} &\bar{b}_2=b_{1}-2A_1 (\bar{d}) \\ & \text { we know $\bar{d}$,$A_1$,$b_1$} \\ & d = -0.5*A^{-1} (b_2+\bar{b}_2 - b_1) \end{eqnarray*}$

- Thus an iterative scheme can be used where in every successive iteration a better estimate of displacement vector is obtained. The iterations can be terminated when change is displacement vector is below is threshold in successive iterations or specific number of iterations have been completed. The intial estimate of displacement vector is assumed to be \(\mathbf{(0,0)}\) Thus ROI or the image patch in the current and previous frames are at the same.

01

12 void updatePoly(const float *ptr1,const float *ptr2,Point2f d,bool flag,

13 float *M,Point p,Size s)

14 {

15 int x=p.x;

16 int y=p.y;

17 const int BORDER = 5;

18 static const float border[BORDER] = {0.14f, 0.14f, 0.4472f, 0.4472f, 0.4472f};

19

20 float r[5];

21 float r2,r3,r4,r5,r6;

22 if(flag==true)

23 {

24

25 r4=(ptr1[2]+ptr2[2])*0.5;

26 r5=(ptr1[3]+ptr2[3])*0.5;

27 r6=(ptr1[4]+ptr2[4])*0.25;

28

29 }

30 else

31 {

32 r[0]=0.f;

33 r[1]=0.f;

34 r[2]=ptr1[2];

35 r[3]=ptr1[3];

36 r[4]=ptr1[4]*0.5;

37 r2=r[0];

38 r3=r[1];

39 r4=r[2];

40 r5=r[3];

41 r6=ptr1[4]*0.5;

42

43

44 }

45

46

47 r2=(ptr1[0]-ptr2[0])*0.5;

48 r3=(ptr1[1]-ptr2[1])*0.5;

49

50

51

52 53

54 r2 += r4*d.x + r6*d.y;

55 r3 += r6*d.x + r5*d.y;

56

57

58 if( (unsigned)(x - BORDER) >= (unsigned)(s.width - BORDER*2) ||

59 (unsigned)(y - BORDER) >= (unsigned)(s.height - BORDER*2))

60 {

61 float scale = (x < BORDER ? border[x] : 1.f)*

62 (x >= s.width - BORDER ? border[s.width - x - 1] : 1.f)*

63 (y < BORDER ? border[y] : 1.f)*

64 (y >= s.height - BORDER ? border[s.height - y - 1] : 1.f);

65

66 r2 *= scale; r3 *= scale; r4 *= scale;

67 r5 *= scale; r6 *= scale;

68 }

69

70

71

72 M[0] = r4*r4 + r6*r6;

73 M[1] = (r4 + r5)*r6;

74 M[2] = r5*r5 + r6*r6;

75 M[3] = r4*r2 + r6*r3;

76 M[4] = r6*r2 + r5*r3;

77 }

- The method \(\textbf{EstimateFlow}\) computes the coefficients \(A,b_2,b_1\) required for displacentt field computation.The \(\textbf{EstimateFlow}\) functions call the method \(\textbf{UpdatePoly}\) for each pixel in the image.

- The displacement field obtained may be discontinuous and contain noise and other atrifacts.Since it is reasonable to assume that if motion is encounted at a point, the neighborhood pixels also encounter the same motion. The displacement vector can be averaged over a neighborhood to get a better estimate of the displacement field.

- The method \(\textbf{AverageFlow}\) computes the average of coefficients \(A_1,b_2,b_1\) and then computes the displacement flow field.

01

08 void AverageFlow( const Mat& _R0, const Mat& _R1,Mat& _flow, Mat& matM)

09 {

10 int x, y, i, width = _flow.cols, height = _flow.rows;

11

12

13 cv::GaussianBlur(matM,matM,Size(15,15),sigma);

14

15

16 for( y = 0; y < height; y++ )

17 {

18 double g11, g12, g22, h1, h2;

19 float* flow = (float*)(_flow.data + _flow.step*y);

20 float *coeff=(float *)(matM.data+matM.step*y);

21

22 for( x = 0; x < width; x++ )

23 {

24 g11 = coeff[x*5];

25 g12 = coeff[x*5+1];

26 g22 = coeff[x*5+2];

27 h1 = coeff[x*5+3];

28 h2 = coeff[x*5+4];

29

30 double idet = 1./(g11*g22 - g12*g12 + 1e-3);

31

32 flow[x*2] = (float)((g11*h2-g12*h1)*idet);

33 flow[x*2+1] = (float)((g22*h1-g12*h2)*idet);

34 }

35

36

37 }

38

39

40 EstimateFlow(_R0, _R1, _flow, matM,0,flow.rows);

41 }

- This approach may in case of large displaement.Hence a multi scale estimation is performed. The estimation of flow field is performed as the smallest resolution.The displacement computed at the lower resolution is used as estimate for peform displacement field computation at higher resolution.

- Dense optial flow computed for two frames is shown in figure 2b

Frame 1

Frame 2

Optical Flow

Conclusion

This article describes the theory and implementation details of the dense optical flow algorithm based on paper by [4].This code for the algorithm can be found at github repository https://github.com/pi19404/OpenVisionin files DenseOf.cpp and DenseOf.hpp files. In the future article we will look at optimizing the code using SSE,NEOM and OpenCL optimizations to enable real time computation of the dense optical flow fields

References

- Kenneth Andersson and Hans Knutsson. Continuous normalized convolution. In: ICME (1). IEEE, 2002, pp. 725 728. isbn: 0-7803-7304-9. url: http://dblp.uni-trier.de/db/conf/icmcs/icme2002-1.html

- Kenneth Andersson, Carl-Fredrik Westin, and Hans Knutsson. Prediction from o -grid samples using continuous normalized convolution. In: Signal Processing 87.3 (Mar. 22, 2007), pp. 353 365. url: http://dblp.uni- trier.de/db/journals/sigpro/sigpro87.html

- Gunnar Farnebäck. Motion-based Segmentation of Image Sequences . LiTH-ISY-EX-1596. MA thesis. SE-581 83 Linköping, Sweden: Linköping University, 1996.

- Gunnar Farnebäck. Polynomial Expansion for Orientation and Motion Estimation . Dissertation No 790, ISBN 91-7373-475-6. PhD thesis. SE-581 83 Linköping,Sweden: Linköping University, Sweden, 2002.

- Gunnar Farneback. Two-Frame Motion Estimation Based on Polynomial Expan-sion . In: SCIA. LNCS 2749. Gothenburg, Sweden, 2003, pp. 363 370.